共计 14007 个字符,预计需要花费 36 分钟才能阅读完成。

主题:关于 Oracle 开启自动收集统计信息的 SPA 测试

环境:Oracle RAC 11.2.0.4(Primary + Standby)

需求:生产 Primary 库由于历史原因关闭了自动统计信息的收集,目前客户需求是想要重新开启统计信息的自动收集,虽然一般来说,有了更准确的统计信息,SQL 会有更好的执行计划,但由于生产环境数据复杂,实际上还是需要评估哪些 SQL 会因为重新开启自动统计信息收集性能反而会下降。

方案:本着尽可能减少对生产 Primary 环境影响的原则,在 Standby DG 环境临时开启 snapshot standby 来进行 SPA(SQL Performance Analyze)测试,比对开启统计信息自动收集前后的性能差异,给客户提供有价值的参考。

- 1. 构造测试环境

- 2.DG 备库开启 snapshot 模式

- 3.SPA 测试准备

- 4. 从 AWR 中采集 SQL

- 5.SPA 分析比较

- 6. 获取性能比对分析报告

1. 构造测试环境

检查自动统计信息的开启状态:

select client_name,status from dba_autotask_client;

确认自动统计信息的收集是关闭的,对于“auto optimizer stats collection”的状态应该是“DISABLED”。

| SQL> select client_name,status from dba_autotask_client; | |

| CLIENT_NAME STATUS | |

| ---------------------------------------------------------------- -------- | |

| auto optimizer stats collection DISABLED | |

| auto space advisor ENABLED | |

| sql tuning advisor ENABLED |

附:关闭数据库的自动统计信息收集:

| -- 光闭自动统计信息收集,(慎用,除非有其他手工收集统计信息的完整方案,否则不建议关闭) | |

| BEGIN | |

| DBMS_AUTO_TASK_ADMIN.disable(client_name => 'auto optimizer stats collection', | |

| operation => NULL, | |

| window_name => NULL); | |

| END; | |

| / |

DG 备库保持和主库同步,所以这些设置项也都是完全一样的。

2.DG 备库开启 snapshot 模式

主要就是在 mount 模式下切换数据到 snapshot Standby 模式再 read write 打开库,为之后测试做准备。下面是核心步骤:

| SQL> shutdown immediate | |

| SQL> startup mount | |

| SQL> alter database convert to snapshot standby; | |

| SQL> shutdown immediate | |

| SQL> startup |

关于其他细节可参考下面文章,主要是为“开启 11gR2 DG 的快照模式”,“后续还原成备库”等操作提供参考:

- ORACLE 11gR2 DG(Physical Standby)日常维护 02

3.SPA 测试准备

进行 SPA 测试时,强烈建议在数据库中创建 SPA 测试专用用户,这样可以与其他用户区分开以及避免误操作。

| SQL> | |

| CREATE USER SPA IDENTIFIED BY SPA DEFAULT TABLESPACE SYSAUX; | |

| GRANT DBA TO SPA; | |

| GRANT ADVISOR TO SPA; | |

| GRANT SELECT ANY DICTIONARY TO SPA; | |

| GRANT ADMINISTER SQL TUNING SET TO SPA; |

4. 从 AWR 中采集 SQL

备库从 AWR 中采集到 SQL。

4.1 获取 AWR 快照的边界 ID

| SET LINES 188 PAGES 1000 | |

| COL SNAP_TIME FOR A22 | |

| COL MIN_ID NEW_VALUE MINID | |

| COL MAX_ID NEW_VALUE MAXID | |

| SELECT MIN(SNAP_ID) MIN_ID, MAX(SNAP_ID) MAX_ID | |

| FROM DBA_HIST_SNAPSHOT | |

| WHERE END_INTERVAL_TIME > trunc(sysdate)-10 | |

| ORDER BY 1; |

我这里的结果是:

| MIN_ID MAX_ID | |

| ---------- ---------- | |

| 2755 2848 |

4.2 新建 SQL Set

注意:以下的规范部分都是引用之前同事编写的 SPA 操作规范。

参考规范:

| EXEC DBMS_SQLTUNE.DROP_SQLSET ( - | |

| SQLSET_NAME => '${DBNAME}_SQLSET_${YYYYMMDD}', | |

| SQLSET_OWNER => 'SPA'); | |

| EXEC DBMS_SQLTUNE.CREATE_SQLSET ( - | |

| SQLSET_NAME => '${DBNAME}_SQLSET_${YYYYMMDD}', - | |

| DESCRIPTION => 'SQL Set Create at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS'), - | |

| SQLSET_OWNER => 'SPA'); |

依据我的实验环境,真实的示例为:

| -- 连接用户 | |

| conn SPA/SPA | |

| -- 如果之前有这个 SQLSET 的名字,可以这样删除 | |

| EXEC DBMS_SQLTUNE.DROP_SQLSET (SQLSET_NAME => 'JYZHAO_SQLSET_20180106', SQLSET_OWNER => 'SPA'); | |

| -- 新建 SQLSET:JYZHAO_SQLSET_20180106 | |

| EXEC DBMS_SQLTUNE.CREATE_SQLSET ( - | |

| SQLSET_NAME => 'JYZHAO_SQLSET_20180106', - | |

| DESCRIPTION => 'SQL Set Create at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS'), - | |

| SQLSET_OWNER => 'SPA'); |

4.3 转化 AWR 数据中的 SQL,将其载入到 SQL Set

从备库的 AWR 中提取 SQL(这等同于主库历史的 SQL)。

参考规范:

| DECLARE | |

| SQLSET_CUR DBMS_SQLTUNE.SQLSET_CURSOR; | |

| BEGIN | |

| OPEN SQLSET_CUR FOR | |

| SELECT VALUE(P) FROM TABLE( | |

| DBMS_SQLTUNE.SELECT_WORKLOAD_REPOSITORY( &MINID, &MAXID, | |

| 'PARSING_SCHEMA_NAME NOT IN (''SYS'',''SYSTEM'')', | |

| NULL, NULL, NULL, NULL, 1, NULL, 'ALL')) P; | |

| DBMS_SQLTUNE.LOAD_SQLSET(SQLSET_NAME => '${DBNAME}_SQLSET_${YYYYMMDD}', | |

| SQLSET_OWNER => 'SPA', | |

| POPULATE_CURSOR => SQLSET_CUR, | |

| LOAD_OPTION => 'MERGE', | |

| UPDATE_OPTION => 'ACCUMULATE'); | |

| CLOSE SQLSET_CUR; | |

| END; | |

| / |

依据我的实验环境,真实的示例为:

| DECLARE | |

| SQLSET_CUR DBMS_SQLTUNE.SQLSET_CURSOR; | |

| BEGIN | |

| OPEN SQLSET_CUR FOR | |

| SELECT VALUE(P) FROM TABLE(DBMS_SQLTUNE.SELECT_WORKLOAD_REPOSITORY( 2755, 2848, | |

| 'PARSING_SCHEMA_NAME NOT IN (''SYS'',''SYSTEM'')', | |

| NULL, NULL, NULL, NULL, 1, NULL, 'ALL')) P; | |

| DBMS_SQLTUNE.LOAD_SQLSET(SQLSET_NAME => 'JYZHAO_SQLSET_20180106', | |

| SQLSET_OWNER => 'SPA', | |

| POPULATE_CURSOR => SQLSET_CUR, | |

| LOAD_OPTION => 'MERGE', | |

| UPDATE_OPTION => 'ACCUMULATE'); | |

| CLOSE SQLSET_CUR; | |

| END; | |

| / |

4.4 打包 SQL Set(可不做)

参考规范:

| DROP TABLE SPA.${DBNAME}_SQLSETTAB_${YYYYMMDD}; | |

| EXEC DBMS_SQLTUNE.CREATE_STGTAB_SQLSET ('${DBNAME}_SQLSETTAB_${YYYYMMDD}',‘SPA’, 'SYSAUX'); | |

| EXEC DBMS_SQLTUNE.PACK_STGTAB_SQLSET ( - | |

| SQLSET_NAME => '${DBNAME}_SQLSET_${YYYYMMDD}', - | |

| SQLSET_OWNER =>‘SPA’, - | |

| STAGING_TABLE_NAME => '${DBNAME}_SQLSETTAB_${YYYYMMDD}', - | |

| STAGING_SCHEMA_OWNER =>‘SPA’); |

依据我的实验环境,真实的示例为:

| DROP TABLE SPA.JYZHAO_SQLSETTAB_20180106; | |

| EXEC DBMS_SQLTUNE.CREATE_STGTAB_SQLSET ('JYZHAO_SQLSETTAB_20180106', 'SPA', 'SYSAUX'); | |

| EXEC DBMS_SQLTUNE.PACK_STGTAB_SQLSET ( - | |

| SQLSET_NAME => 'JYZHAO_SQLSET_20180106', - | |

| SQLSET_OWNER => 'SPA', - | |

| STAGING_TABLE_NAME => 'JYZHAO_SQLSETTAB_20180106', - | |

| STAGING_SCHEMA_OWNER => 'SPA'); |

说明:其实在我这里的测试场景下,这一步是不需要做的。因为备库的 SQL Set 可以直接在后面引用,不需要像 SPA 经典场景中,是从生产源环境打包导出来后,在测试环境再导入进去,再解包为 SQL Set。

5.SPA 分析比较

5.1 创建 SPA 分析任务

参考规范:

| VARIABLE SPA_TASK VARCHAR2(64); | |

| EXEC :SPA_TASK := DBMS_SQLPA.CREATE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_${YYYYMMDD}', - | |

| DESCRIPTION => 'SPA Analysis task at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS'), - | |

| SQLSET_NAME => '${DBNAME}_SQLSET_${YYYYMMDD}', - | |

| SQLSET_OWNER =>‘SPA’); |

依据我的实验环境,真实的示例为:

| -- 创建 SPA 分析任务:VARIABLE SPA_TASK VARCHAR2(64); | |

| EXEC :SPA_TASK := DBMS_SQLPA.CREATE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_20180106', - | |

| DESCRIPTION => 'SPA Analysis task at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS'), - | |

| SQLSET_NAME => 'JYZHAO_SQLSET_20180106', - | |

| SQLSET_OWNER => 'SPA'); |

5.2 获取变更前的 SQL 执行效率

参考规范:

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_${YYYYMMDD}', - | |

| EXECUTION_NAME => 'EXEC_10G_${YYYYMMDD}', - | |

| EXECUTION_TYPE => 'CONVERT SQLSET', - | |

| EXECUTION_DESC => 'Convert 10g SQLSET for SPA Task at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); |

依据我的实验环境,真实的示例为:

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_20180106', - | |

| EXECUTION_NAME => 'EXEC_BEFORE_20180106', - | |

| EXECUTION_TYPE => 'CONVERT SQLSET', - | |

| EXECUTION_DESC => 'Convert Before gathering stats SQLSET for SPA Task at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); |

5.3 开启变更操作

变更内容:开启统计信息自动收集并确认已经成功收集了最新的统计信息。

这里首先需要开启统计信息自动收集,并可以把自动收集的窗口时间提前到现在,减少等待的时间。

| -- 检查自动统计信息的开启状态: | |

| select client_name,status from dba_autotask_client; | |

| -- 启动自动统计信息收集 | |

| BEGIN | |

| DBMS_AUTO_TASK_ADMIN.enable(client_name => 'auto optimizer stats collection', | |

| operation => NULL, | |

| window_name => NULL); | |

| END; | |

| / |

查看窗口任务和有关统计信息自动收集的任务执行状态:

| select window_name,repeat_interval,duration,enabled from dba_scheduler_windows; | |

| select owner, job_name, status, ACTUAL_START_DATE, RUN_DURATION from dba_scheduler_job_run_details where job_name like 'ORA$AT_OS_OPT_S%' order by 4; |

调整窗口任务的下一次执行时间:

| -- 需要确认 JOB 可以启动 | |

| alter system set job_queue_processes=1000; | |

| -- 调整窗口任务的下一次执行时间 | |

| EXEC DBMS_SCHEDULER.SET_ATTRIBUTE('SATURDAY_WINDOW','repeat_interval','freq=daily;byday=SAT;byhour=17;byminute=10;bysecond=0'); |

更多有关调整窗口和自动任务的内容可参考文章:

- Oracle 的窗口和自动任务

5.4 变更后再次分析性能

测试运行 SQL Tuning Set 中的 SQL 语句,分析所有语句在收集统计信息之后的执行效率:

参考规范:

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_${YYYYMMDD}', - | |

| EXECUTION_NAME => 'EXEC_11G_${YYYYMMDD}', - | |

| EXECUTION_TYPE => 'TEST EXECUTE', - | |

| EXECUTION_DESC => 'Execute SQL in 11g for SPA Task at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); |

依据我的实验环境,真实的示例为:

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_20180106', - | |

| EXECUTION_NAME => 'EXEC_AFTER_20180106', - | |

| EXECUTION_TYPE => 'TEST EXECUTE', - | |

| EXECUTION_DESC => 'Execute SQL After gathering stats for SPA Task at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); |

5.5 变更前后性能对比

得到两次 SQL Trail 之后,可以对比两次 Trial 之间的 SQL 执行性能,可以从不同的维度对两次 Trail 中的所有 SQL 进行对比分析,主要关注的维度有:SQL 执行时间,SQL 执行的 CPU 时间,SQL 执行的逻辑读。

参考规范:

| 1). 对比两次 Trail 中的 SQL 执行时间 | |

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_${YYYYMMDD}', - | |

| EXECUTION_NAME => 'COMPARE_ET_${YYYYMMDD}', - | |

| EXECUTION_TYPE => 'COMPARE PERFORMANCE', - | |

| EXECUTION_PARAMS => DBMS_ADVISOR.ARGLIST( - | |

| 'COMPARISON_METRIC', 'ELAPSED_TIME', - | |

| 'EXECUTE_FULLDML', 'TRUE', - | |

| 'EXECUTION_NAME1','EXEC_10G_${YYYYMMDD}', - | |

| 'EXECUTION_NAME2','EXEC_11G_${YYYYMMDD}'), - | |

| EXECUTION_DESC => 'Compare SQLs between 10g and 11g at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); | |

| 2). 对比两次 Trail 中的 SQL 执行的 CPU 时间 | |

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_${YYYYMMDD}', - | |

| EXECUTION_NAME => 'COMPARE_CT_${YYYYMMDD}', - | |

| EXECUTION_TYPE => 'COMPARE PERFORMANCE', - | |

| EXECUTION_PARAMS => DBMS_ADVISOR.ARGLIST( - | |

| 'COMPARISON_METRIC', 'CPU_TIME', - | |

| 'EXECUTION_NAME1','EXEC_10G_${YYYYMMDD}', - | |

| 'EXECUTION_NAME2','EXEC_11G_${YYYYMMDD}'), - | |

| EXECUTION_DESC => 'Compare SQLs between 10g and 11g at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); | |

| 3). 对比两次 Trail 中的 SQL 执行的逻辑读 | |

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_D', - | |

| EXECUTION_NAME => 'COMPARE_BG_D', - | |

| EXECUTION_TYPE => 'COMPARE PERFORMANCE', - | |

| EXECUTION_PARAMS => DBMS_ADVISOR.ARGLIST( - | |

| 'COMPARISON_METRIC', 'BUFFER_GETS', - | |

| 'EXECUTION_NAME1','EXEC_10G_${YYYYMMDD}', - | |

| 'EXECUTION_NAME2','EXEC_11G_${YYYYMMDD}'), - | |

| EXECUTION_DESC => 'Compare SQLs between 10g and 11g at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); |

依据我的实验环境,真实的示例为:

| 1). 对比两次 Trail 中的 SQL 执行时间 | |

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_20180106', - | |

| EXECUTION_NAME => 'COMPARE_ET_20180106', - | |

| EXECUTION_TYPE => 'COMPARE PERFORMANCE', - | |

| EXECUTION_PARAMS => DBMS_ADVISOR.ARGLIST( - | |

| 'COMPARISON_METRIC', 'ELAPSED_TIME', - | |

| 'EXECUTE_FULLDML', 'TRUE', - | |

| 'EXECUTION_NAME1','EXEC_BEFORE_20180106', - | |

| 'EXECUTION_NAME2','EXEC_AFTER_20180106'), - | |

| EXECUTION_DESC => 'Compare SQLs between 10g and 11g at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); | |

| 2). 对比两次 Trail 中的 SQL 执行的 CPU 时间 | |

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_20180106', - | |

| EXECUTION_NAME => 'COMPARE_CT_20180106}', - | |

| EXECUTION_TYPE => 'COMPARE PERFORMANCE', - | |

| EXECUTION_PARAMS => DBMS_ADVISOR.ARGLIST( - | |

| 'COMPARISON_METRIC', 'CPU_TIME', - | |

| 'EXECUTION_NAME1','EXEC_BEFORE_20180106', - | |

| 'EXECUTION_NAME2','EXEC_AFTER_20180106'), - | |

| EXECUTION_DESC => 'Compare SQLs between 10g and 11g at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); | |

| 3). 对比两次 Trail 中的 SQL 执行的逻辑读 | |

| EXEC DBMS_SQLPA.EXECUTE_ANALYSIS_TASK( - | |

| TASK_NAME => 'SPA_TASK_20180106', - | |

| EXECUTION_NAME => 'COMPARE_BG_20180106', - | |

| EXECUTION_TYPE => 'COMPARE PERFORMANCE', - | |

| EXECUTION_PARAMS => DBMS_ADVISOR.ARGLIST( - | |

| 'COMPARISON_METRIC', 'BUFFER_GETS', - | |

| 'EXECUTION_NAME1','EXEC_BEFORE_20180106', - | |

| 'EXECUTION_NAME2','EXEC_AFTER_20180106'), - | |

| EXECUTION_DESC => 'Compare SQLs between Before_STATS and After_STATS at :'||TO_CHAR(SYSDATE, 'YYYY-MM-DD HH24:MI:SS')); |

6. 获取性能比对分析报告

参考规范:

| --a) 获取执行时间全部报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL elapsed_all.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_${YYYYMMDD}','HTML','ALL','ALL',NULL,1000,'SPA_TASK_${YYYYMMDD}_COMP_ET')).GETCLOBVAL(0,0) FROM DUAL; | |

| --b) 获取执行时间下降报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL elapsed_regressed.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_${YYYYMMDD}','HTML','REGRESSED','ALL',NULL,1000,'SPA_TASK_${YYYYMMDD}_COMP_ET')).GETCLOBVAL(0,0) FROM DUAL; | |

| --c) 获取逻辑读全部报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL buffer_all.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_${YYYYMMDD}','HTML','ALL','ALL',NULL,1000,'SPA_TASK_${YYYYMMDD}_COMP_BG')).GETCLOBVAL(0,0) FROM DUAL; | |

| --d) 获取逻辑读下降报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL buffer_regressed.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_${YYYYMMDD}','HTML','REGRESSED','ALL',NULL,1000,'SPA_TASK_${YYYYMMDD}_COMP_BG')).GETCLOBVAL(0,0) FROM DUAL; | |

| --e) 获取错误报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL error.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_${YYYYMMDD}','HTML','ERRORS','ALL',NULL,1000,'SPA_TASK_${YYYYMMDD}_COMP_ET')).GETCLOBVAL(0,0) FROM DUAL; | |

| --f) 获取不支持报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL unsupported.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_${YYYYMMDD}','HTML','UNSUPPORTED','ALL',NULL,1000,'SPA_TASK_${YYYYMMDD}_COMP_ET')).GETCLOBVAL(0,0) FROM DUAL; | |

| --g) 获取执行计划变化报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL changed_plans.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_${YYYYMMDD}','HTML','CHANGED_PLANS','ALL',NULL,1000,'SPA_TASK_${YYYYMMDD}_COMP_ET')).GETCLOBVAL(0,0) FROM DUAL; |

依据我的实验环境,真实的示例为:

| --a) 获取执行时间全部报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL elapsed_all.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_20180106','HTML','ALL','ALL',NULL,1000,'COMPARE_ET_20180106')).GETCLOBVAL(0,0) FROM DUAL; | |

| --b) 获取执行时间下降报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL elapsed_regressed.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_20180106','HTML','REGRESSED','ALL',NULL,1000,'COMPARE_ET_20180106')).GETCLOBVAL(0,0) FROM DUAL; | |

| --c) 获取逻辑读全部报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL buffer_all.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_20180106','HTML','ALL','ALL',NULL,1000,'COMPARE_BG_20180106')).GETCLOBVAL(0,0) FROM DUAL; | |

| --d) 获取逻辑读下降报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL buffer_regressed.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_20180106','HTML','REGRESSED','ALL',NULL,1000,'COMPARE_BG_20180106')).GETCLOBVAL(0,0) FROM DUAL; | |

| --e) 获取错误报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL error.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_20180106','HTML','ERRORS','ALL',NULL,1000,'COMPARE_ET_20180106')).GETCLOBVAL(0,0) FROM DUAL; | |

| --f) 获取不支持报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL unsupported.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_20180106','HTML','UNSUPPORTED','ALL',NULL,1000,'COMPARE_ET_20180106')).GETCLOBVAL(0,0) FROM DUAL; | |

| --g) 获取执行计划变化报告 | |

| ALTER SESSION SET EVENTS='31156 TRACE NAME CONTEXT FOREVER, LEVEL 0X400'; | |

| SET LINES 1111 PAGES 50000 LONG 1999999999 TRIM ON TRIMS ON SERVEROUTPUT ON SIZE UNLIMITED | |

| SPOOL changed_plans.html | |

| SELECT XMLTYPE(DBMS_SQLPA.REPORT_ANALYSIS_TASK('SPA_TASK_20180106','HTML','CHANGED_PLANS','ALL',NULL,1000,'COMPARE_ET_20180106')).GETCLOBVAL(0,0) FROM DUAL; |

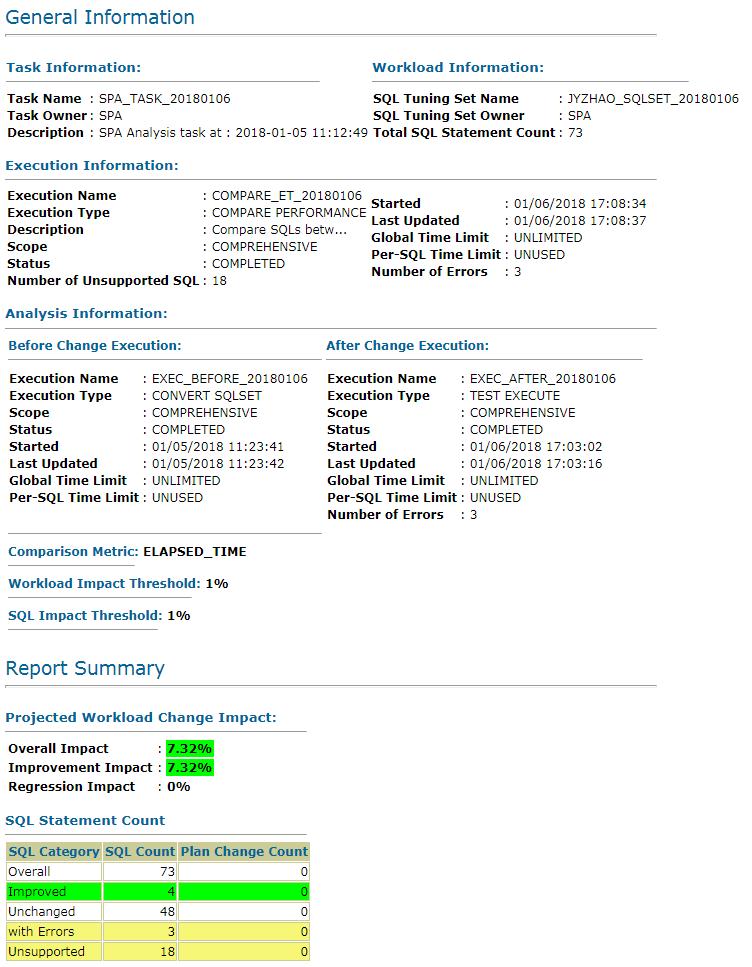

这样就得到了各类的性能对比报告,以执行时间的全部报告为例,生成的报告概要头部类似这样:

当然,具体获取到的这些性能对比报告,针对那些有性能下降的 SQL,还需要人工干预,评估如何优化处理那些性能下降的 SQL。

更多 Oracle 相关信息见Oracle 专题页面 http://www.linuxidc.com/topicnews.aspx?tid=12

本文永久更新链接地址:http://www.linuxidc.com/Linux/2018-01/150160.htm