
1. Today I ran into an error: after connecting to Hive, I could not execute any command.

$ beeline -u "jdbc:hive2://hostname.domain.cn:10000/default;principal=hive/_HOST@domain.com;"

Connecting to jdbc:hive2://hostname.domain.cn:10000/default;principal=hive/_HOST@domain.com;

Connected to: Apache Hive (version release-1.2.1-EDH-1.1.2)

Driver: Hive JDBC (version release-1.2.1-EDH-1.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version release-1.2.1-EDH-1.1.2 by Apache Hive

0: jdbc:hive2://hostname.domain.cn:10000> show databases;

The following error was returned:

Error: Error while processing statement: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:For direct MetaStore DB connections, we don't support retries at the client level.) (state=08S01,code=1)

2. Searching Baidu for this error, I found that many articles describe it as a character-set problem: the MySQL character set must be latin1.

mysql> show variables like '%collation_%';

+----------------------+-------------------+
| Variable_name        | Value             |
+----------------------+-------------------+
| collation_connection | latin1_swedish_ci |
| collation_database   | latin1_swedish_ci |
| collation_server     | latin1_swedish_ci |
+----------------------+-------------------+

3. If the character set is not latin1, change it manually as follows (that was not the cause this time; my character set was already latin1):

mysql> use hive

mysql> alter database hive character set latin1;

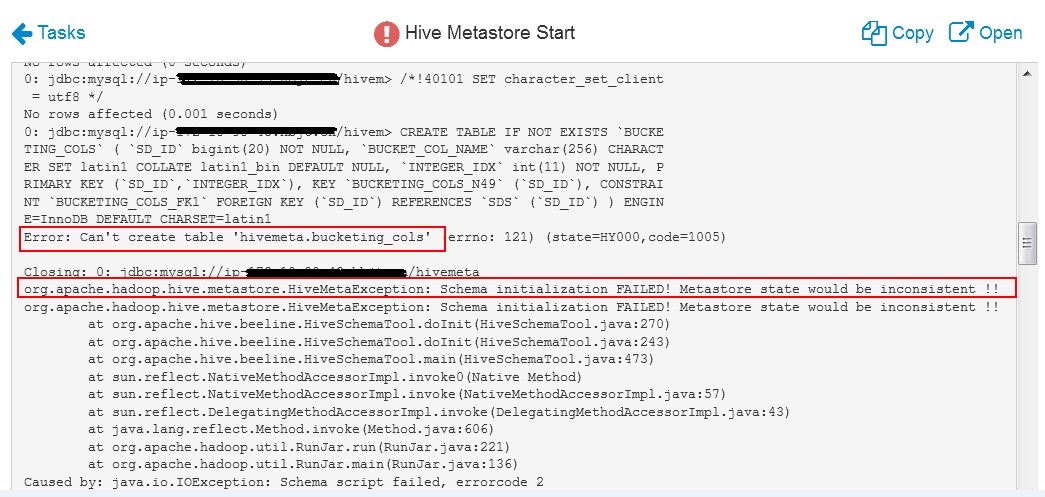
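If you need to run the check from step 2 against several MySQL instances, a small helper can flag any collation variable that is not latin1. This is a minimal Python sketch: it assumes you have already fetched the `(Variable_name, Value)` rows of `SHOW VARIABLES LIKE '%collation_%';` with whatever MySQL client you use, and the function name `non_latin1_settings` is hypothetical.

```python
# Hypothetical helper: given (Variable_name, Value) rows from
# SHOW VARIABLES LIKE '%collation_%', report any that are not latin1.
def non_latin1_settings(rows):
    """Return {variable: value} for every value that does not start with 'latin1'."""
    return {name: value for name, value in rows if not value.startswith("latin1")}


# The rows shown in step 2 above.
rows = [
    ("collation_connection", "latin1_swedish_ci"),
    ("collation_database", "latin1_swedish_ci"),
    ("collation_server", "latin1_swedish_ci"),
]
print(non_latin1_settings(rows))  # {} -> everything is already latin1
```

An empty result matches what I saw here: the character set was fine, so the cause had to be elsewhere.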

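4. Next I tried restarting Hive, and the metastore actually failed to start. The log said that creating a table had failed. This Hive instance had been running for quite a while without any problems, and the corresponding tables all existed, so there was no reason for them to be recreated.

5. The log output was as follows: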

Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive_metastore.py", line 259, in <module>
    HiveMetastore().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 280, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive_metastore.py", line 59, in start
    self.configure(env)
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive_metastore.py", line 73, in configure
    hive(name = 'metastore')
  File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk
    return fn(*args, **kwargs)
  File "/var/lib/ambari-agent/cache/common-services/HIVE/0.12.0.2.0/package/scripts/hive.py", line 320, in hive
    user = params.hive_user
  File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 155, in __init__
    self.env.run()
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 160, in run
    self.run_action(resource, action)
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 124, in run_action
    provider_action()
  File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 273, in action_run
    tries=self.resource.tries, try_sleep=self.resource.try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 71, in inner
    result = function(command, **kwargs)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 93, in checked_call
    tries=tries, try_sleep=try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 141, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 294, in _call
    raise Fail(err_msg)
resource_management.core.exceptions.Fail: Execution of 'export HIVE_CONF_DIR=/usr/hdp/current/hive-metastore/conf/conf.server ; /usr/hdp/current/hive-metastore/bin/schematool -initSchema -dbType mysql -userName hive -passWord [PROTECTED] -verbose' returned 1. Metastore connection URL: jdbc:mysql://hostname.mysql.cn/hivemeta
Metastore Connection Driver : com.mysql.jdbc.Driver
Metastore connection User: hive
Starting metastore schema initialization to 1.2.0
Initialization script hive-schema-1.2.0.mysql.sql
Connecting to jdbc:mysql://hostname.mysql.cn/hivemeta
Connected to: MySQL (version 5.1.73)
Driver: MySQL Connector Java (version mysql-connector-java-5.1.40 ( Revision: 402933ef52cad9aa82624e80acbea46e3a701ce6))
Transaction isolation: TRANSACTION_READ_COMMITTED
0: jdbc:mysql://hostname.mysql.cn/hivem> !autocommit on
Autocommit status: true
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40101 SET @OLD_CHARACTER_SET_CLIENT=@@CHARACTER_SET_CLIENT */
No rows affected (0.003 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40101 SET @OLD_CHARACTER_SET_RESULTS=@@CHARACTER_SET_RESULTS */
No rows affected (0 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40101 SET @OLD_COLLATION_CONNECTION=@@COLLATION_CONNECTION */
No rows affected (0 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40101 SET NAMES utf8 */
No rows affected (0 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40103 SET @OLD_TIME_ZONE=@@TIME_ZONE */
No rows affected (0.002 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40103 SET TIME_ZONE='+00:00' */
No rows affected (0 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40014 SET @OLD_UNIQUE_CHECKS=@@UNIQUE_CHECKS, UNIQUE_CHECKS=0 */
No rows affected (0 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40014 SET @OLD_FOREIGN_KEY_CHECKS=@@FOREIGN_KEY_CHECKS, FOREIGN_KEY_CHECKS=0 */
No rows affected (0.001 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40101 SET @OLD_SQL_MODE=@@SQL_MODE, SQL_MODE='NO_AUTO_VALUE_ON_ZERO' */
No rows affected (0 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40111 SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0 */
No rows affected (0.001 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40101 SET @saved_cs_client = @@character_set_client */
No rows affected (0 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> /*!40101 SET character_set_client = utf8 */
No rows affected (0.001 seconds)
0: jdbc:mysql://hostname.mysql.cn/hivem> CREATE TABLE IF NOT EXISTS `BUCKETING_COLS` (`SD_ID` bigint(20) NOT NULL, `BUCKET_COL_NAME` varchar(256) CHARACTER SET latin1 COLLATE latin1_bin DEFAULT NULL, `INTEGER_IDX` int(11) NOT NULL, PRIMARY KEY (`SD_ID`,`INTEGER_IDX`), KEY `BUCKETING_COLS_N49` (`SD_ID`), CONSTRAINT `BUCKETING_COLS_FK1` FOREIGN KEY (`SD_ID`) REFERENCES `SDS` (`SD_ID`) ) ENGINE=InnoDB DEFAULT CHARSET=latin1
Error: Can't create table 'hivemeta.bucketing_cols' (errno: 121) (state=HY000,code=1005)
Closing: 0: jdbc:mysql://hostname.mysql.cn/hivemeta
org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!
org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!
    at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:270)
    at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:243)
    at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:473)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:606)
    at org.apache.hadoop.util.RunJar.run(RunJar.java:221)
    at org.apache.hadoop.util.RunJar.main(RunJar.java:136)
Caused by: java.io.IOException: Schema script failed, errorcode 2
    at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:358)
    at org.apache.hive.beeline.HiveSchemaTool.runBeeLine(HiveSchemaTool.java:326)
    at org.apache.hive.beeline.HiveSchemaTool.doInit(HiveSchemaTool.java:266)
    ... 8 more
*** schemaTool failed ***
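The real failure is buried in a lot of noise: the Ambari traceback, a dozen `SET` statements, then the Java stack trace. A minimal Python sketch for pulling out the statement that actually failed is shown below. It assumes the schematool `-verbose` output has been saved as text and that each statement is echoed on one line after the `0: jdbc:mysql://...> ` prompt, as in the log above; `first_failure` is a hypothetical name.

```python
PROMPT = "0: jdbc:mysql://"  # beeline prompt prefix seen in the log above


def first_failure(log_text):
    """Return (statement, error_line) for the first 'Error:' line, or None.

    Assumes every echoed statement sits on one line after the beeline prompt.
    """
    last_statement = None
    for raw in log_text.splitlines():
        line = raw.strip()
        if line.startswith(PROMPT) and "> " in line:
            # Everything after the first '> ' is the statement beeline ran.
            last_statement = line.split("> ", 1)[1]
        elif line.startswith("Error:"):
            return last_statement, line
    return None
```

Run on the log above, it would return the `CREATE TABLE IF NOT EXISTS `BUCKETING_COLS` ...` statement together with the `Error: Can't create table 'hivemeta.bucketing_cols' (errno: 121)` line.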

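6. Searching for the phrase "Schema initialization FAILED! Metastore state would be inconsistent !!" led me to a thread on the HDP (Hortonworks) community forum.

The answer there says this is a known issue, and that the fix is to modify Ambari's SQL script and drop some MySQL indexes, among other things.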

https://community.hortonworks.com/questions/113748/hive-metastore-start.html

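7. The officially recommended procedure involves many steps, and I'm rather lazy. Besides, this Hive metastore had been working normally the day before and the problem appeared suddenly, so I did not think a bug was likely.

To continue the analysis, it was still worth checking the Hive metastore log.

That revealed the problem: a table does not exist.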

2018-03-07 15:34:43,871 INFO [pool-8-thread-200]: metastore.HiveMetaStore (HiveMetaStore.java:logInfo(746)) - 200: get_all_databases
2018-03-07 15:34:43,871 INFO [pool-8-thread-200]: HiveMetaStore.audit (HiveMetaStore.java:logAuditEvent(371)) - ugi=ambari-qa-hbjt_hadoop@domain.com ip=/172.18.98.57 cmd=get_all_databases
2018-03-07 15:34:43,873 ERROR [pool-8-thread-200]: metastore.RetryingHMSHandler (RetryingHMSHandler.java:invoke(165)) - HMSHandler Fatal error: javax.jdo.JDOException: Exception thrown when executing query
    at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:596)
    ......
NestedThrowablesStackTrace:
com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Table 'hivemeta.dbs' doesn't exist
    at sun.reflect.GeneratedConstructorAccessor60.newInstance(Unknown Source)
    at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
    at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
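When the metastore log is large, it can help to list every table MySQL reported as missing rather than reading stack traces one by one. This is a minimal Python sketch using the standard `re` module; it assumes the message appears in the log in the same form as above (`Table 'db.table' doesn't exist`), and `missing_tables` is a hypothetical name.

```python
import re

# Matches MySQL's "Table 'db.name' doesn't exist" message (ERROR 1146).
MISSING_TABLE = re.compile(r"Table '([^']+)' doesn't exist")


def missing_tables(log_text):
    """Return distinct 'db.table' names reported as missing, in first-seen order."""
    seen = []
    for name in MISSING_TABLE.findall(log_text):
        if name not in seen:
            seen.append(name)
    return seen
```

Note that the names come back in lowercase (`hivemeta.dbs`), even though `show tables` lists them in uppercase; that mismatch is the clue followed up below.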

8. Now I needed to start from MySQL and check things step by step.

mysql> use hive

mysql> show tables;

+--------------------------+
| Tables_in_hivemeta       |
+--------------------------+
| BUCKETING_COLS           |
| CDS                      |
| COLUMNS_V2               |
| COMPACTION_QUEUE         |
| COMPLETED_TXN_COMPONENTS |
| DATABASE_PARAMS          |
| DBS                      |
| DB_PRIVS                 |
| DELEGATION_TOKENS        |

9. So here is the problem: the table "does not exist". Why? By this point I already knew the cause: MySQL table-name case sensitivity.

mysql> select count(*) from `DBS`;

ERROR 1146 (42S02): Table 'hivemeta.DBS' doesn't exist

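10. In Oracle, table names are generally case-insensitive, whereas MySQL table names are case-sensitive (by default on Linux). That is why many developers like to configure MySQL so that table names are case-insensitive.

As soon as I saw the show tables output, I suspected this.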

11. Checking /etc/my.cnf confirmed that lower_case_table_names=1 had indeed been set.
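If you want to script the check in step 11, here is a minimal Python sketch that reads the setting from the text of /etc/my.cnf. The function name is hypothetical and the parser is deliberately simplified: it ignores `!include`/`!includedir` directives and only looks at the `[mysqld]` group, so treat its answer as a hint. The value actually in effect on the running server is what `SHOW VARIABLES LIKE 'lower_case_table_names';` returns.

```python
def read_lower_case_table_names(cnf_text):
    """Return lower_case_table_names from the [mysqld] group as a string, or None.

    Simplified sketch: skips '!' directives, strips '#' comments, skips ';' comment
    lines, and accepts dashes in option names (lower-case-table-names).
    """
    section = None
    value = None
    for raw in cnf_text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith(("!", ";")):
            continue
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()
            continue
        if section == "mysqld" and "=" in line:
            key, val = (part.strip() for part in line.split("=", 1))
            if key.replace("-", "_") == "lower_case_table_names":
                value = val  # a later line overrides an earlier one
    return value
```

Usage, assuming the file is readable: `read_lower_case_table_names(open("/etc/my.cnf").read())`.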

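12. When the Hive metastore database was created, MySQL was still case-sensitive, so all the table names were created in uppercase. Later, someone changed the MySQL configuration to make table names case-insensitive, and that is where the problem came from. MySQL does not simply become fully case-insensitive: with lower_case_table_names=1, table names in CREATE statements and queries are converted to lowercase, but tables that already exist keep their uppercase names. As a result, whether you query with the uppercase or the lowercase table name, the existing tables can no longer be found. This also explains the failure in step 5: the error refers to hivemeta.bucketing_cols in lowercase, meaning CREATE TABLE IF NOT EXISTS did not see the existing BUCKETING_COLS table and tried to create it again.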
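The lookup rule in step 12 can be illustrated with a toy model. This is not MySQL code, and the class `ToyTableStore` is hypothetical; it assumes a case-sensitive filesystem such as Linux and only mimics how table names are normalized before being created or looked up.

```python
class ToyTableStore:
    """Toy model of MySQL table-name lookup on a case-sensitive filesystem.

    Not MySQL code: with lower_case_table_names=1 it lowercases names in both
    CREATE and lookups, while names stored earlier keep their original case.
    """

    def __init__(self, lower_case_table_names=0):
        self.setting = lower_case_table_names
        self.tables = set()  # names as stored on disk

    def _normalize(self, name):
        return name.lower() if self.setting == 1 else name

    def create(self, name):
        self.tables.add(self._normalize(name))

    def exists(self, name):
        return self._normalize(name) in self.tables


# The metastore schema was created while MySQL was case-sensitive...
store = ToyTableStore(lower_case_table_names=0)
store.create("DBS")
print(store.exists("DBS"))  # True

# ...then someone set lower_case_table_names=1 and restarted MySQL.
store.setting = 1
print(store.exists("DBS"), store.exists("dbs"))  # False False
```

Setting `store.setting` back to 0 makes `DBS` visible again (fix a below), while a fresh store created with the setting at 1 stores `dbs` in lowercase from the start (fix b below).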

13. When does this problem occur?

Only when lower_case_table_names=1 is added to /etc/my.cnf after the Hive metastore database has already been created.
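Following that rule, a quick check before (or after) turning on lower_case_table_names=1 is to look for stored table names that contain uppercase letters. A minimal Python sketch, assuming a case-sensitive filesystem (Linux) and that `table_names` is the `show tables` output, i.e. the names as stored; `unreachable_tables` is a hypothetical name.

```python
def unreachable_tables(table_names, lower_case_table_names):
    """List tables that cannot be reached when lower_case_table_names=1.

    With the setting at 1 every name in a query is lowercased, so any stored
    name containing uppercase letters can no longer be matched.
    """
    if lower_case_table_names != 1:
        return []
    return [name for name in table_names if name != name.lower()]
```

Against the `hivemeta` listing from step 8, every table would be flagged; against a schema created after the setting was enabled, the list would be empty.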

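14. Once the cause was found, there were two possible fixes:

a. Remove the lower_case_table_names=1 setting.

b. If the Hive metastore database in MySQL holds no important data, drop the Hive metastore tables and recreate them; they will then be created with lowercase names directly.

The output then shows lowercase table names: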

mysql> show tables;

+--------------------------+
| Tables_in_hive_beta      |
+--------------------------+
| bucketing_cols           |
| cds                      |
| columns_v2               |
| compaction_queue         |
| completed_txn_components |
| database_params          |
| db_privs                 |
| dbs                      |
| delegation_tokens        |
| func_ru                  |

15. In the end I chose to remove lower_case_table_names=1 and restart MySQL. Queries worked normally again:

mysql> select count(*) from `DBS`;

+----------+
| count(*) |
+----------+
|        2 |

16. Starting the Hive metastore from Ambari also succeeded.

Problem solved; everything is back to normal:

$ beeline -u "jdbc:hive2://hostname.domain.cn:10000/default;principal=hive/_HOST@domain.com;"

Connecting to jdbc:hive2://hostname.domain.cn:10000/default;principal=hive/_HOST@domain.com;

Connected to: Apache Hive (version release-1.2.1-EDH-1.1.2)

Driver: Hive JDBC (version release-1.2.1-EDH-1.1.2)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version release-1.2.1-EDH-1.1.2 by Apache Hive

0: jdbc:hive2://hostname.domain.cn:10000> show databases;

+----------------+--+
| database_name  |
+----------------+--+
| default        |
| hbjt           |
+----------------+--+

2 rows selected (1.206 seconds)

The root cause of this problem was that someone had modified /etc/my.cnf.