共计 8512 个字符,预计需要花费 22 分钟才能阅读完成。

本文介绍 gpdb 的 master 故障及恢复测试以及 segment 故障恢复测试。

环境介绍:

Gpdb 版本:5.5.0 二进制版本

操作系统版本:CentOS linux 7.0

Master segment: 192.168.1.225/24 hostname: mfsmaster

Stadnby segemnt: 192.168.1.227/24 hostname: server227

Segment 节点 1:192.168.1.227/24 hostname: server227

Segment 节点 2:192.168.1.17/24 hostname: server17

Segment 节点 3:192.168.1.11/24 hostname: server11

每个 segment 节点上分别运行一个 primary segment 和一个 mirror segment

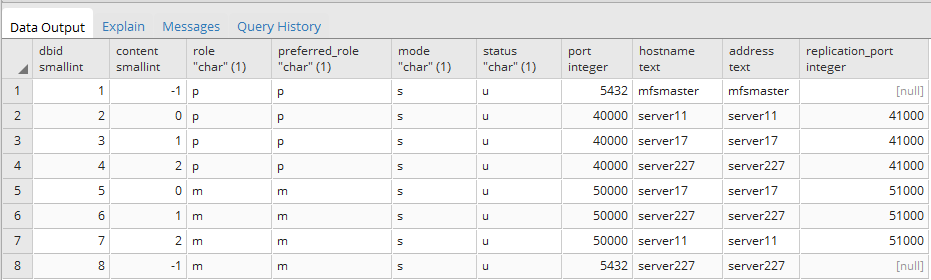

一、查看原始状态

select * from gp_segment_configuration;

$ gpstate -f

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:-Starting gpstate with args: -f

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:-local Greenplum Version: ‘postgres (Greenplum Database) 5.5.0 build commit:67afa18296aa238d53a2dfcc724da60ed2f944f0’

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:-master Greenplum Version: ‘PostgreSQL 8.3.23 (Greenplum Database 5.5.0 build commit:67afa18296aa238d53a2dfcc724da60ed2f944f0) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Feb 17 2018 15:23:55’

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:-Obtaining Segment details from master…

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:-Standby master details

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:———————–

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:- Standby address = server227

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:- Standby data directory = /home/gpadmin/master/gpseg-1

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:- Standby port = 5432

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:- Standby PID = 22279

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:- Standby status = Standby host passive

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:————————————————————–

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:–pg_stat_replication

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:————————————————————–

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:–WAL Sender State: streaming

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:–Sync state: sync

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:–Sent Location: 0/CF2C470

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:–Flush Location: 0/CF2C470

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:–Replay Location: 0/CF2C470

20180320:13:50:38:021814 gpstate:mfsmaster:gpadmin-[INFO]:————————————————————–

二、master 主从切换

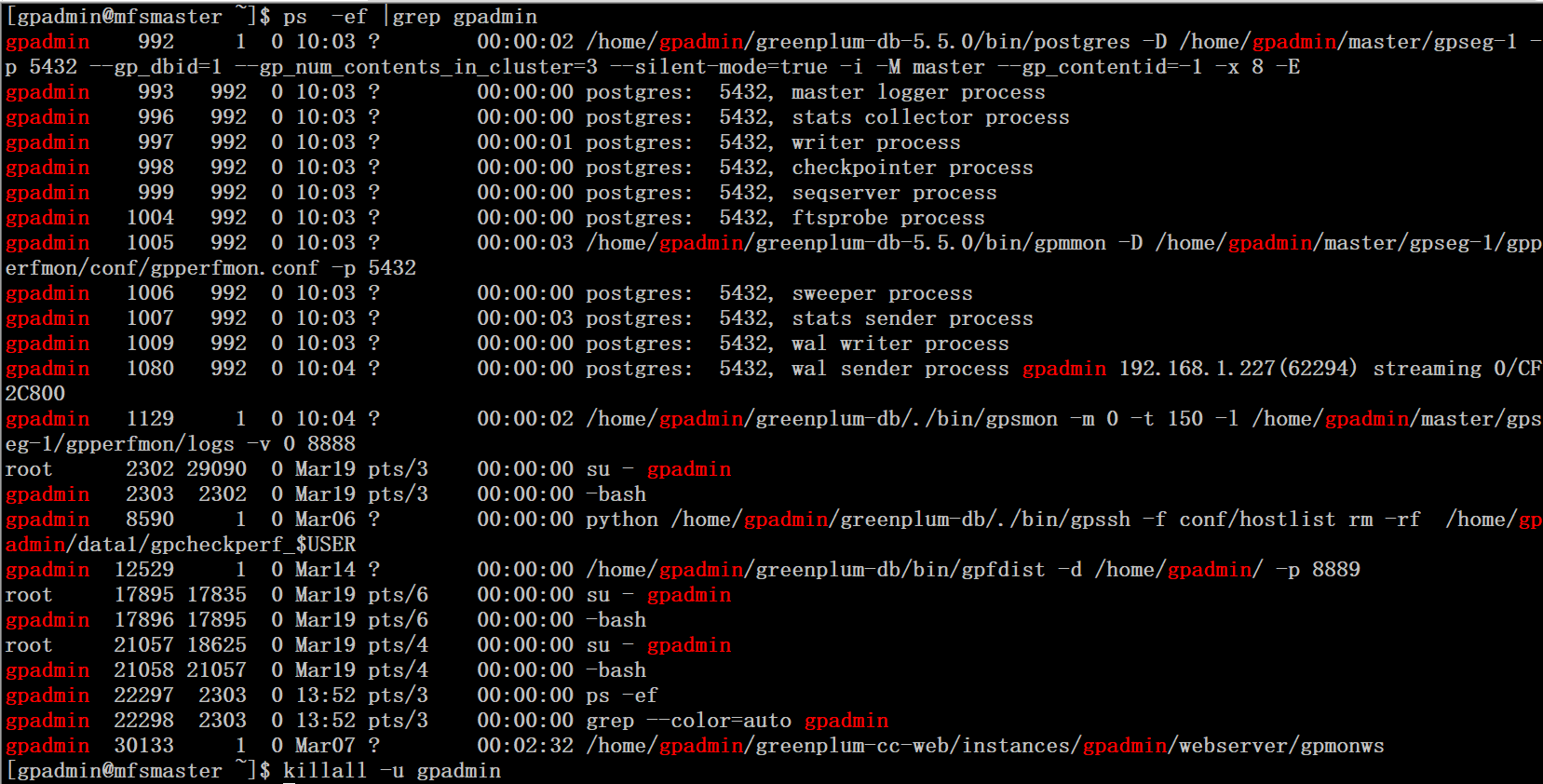

1、模拟当前主库宕机,这里直接采用 killall gpadmin 用户下的所有进程来模拟

2、在 master standby 节点(227 服务器上)进行执行切换命令,提升 227 为 master

$ gpactivatestandby -d master/gpseg-1/

20180320:13:53:20:030558 gpactivatestandby:server227:gpadmin-[INFO]:——————————————————

20180320:13:53:20:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Standby data directory = /home/gpadmin/master/gpseg-1

20180320:13:53:20:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Standby port = 5432

20180320:13:53:20:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Standby running = yes

20180320:13:53:20:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Force standby activation = no

20180320:13:53:20:030558 gpactivatestandby:server227:gpadmin-[INFO]:——————————————————

Do you want to continue with standby master activation? Yy|Nn (default=N):

> y

20180320:13:53:26:030558 gpactivatestandby:server227:gpadmin-[INFO]:-found standby postmaster process

20180320:13:53:26:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Updating transaction files filespace flat files…

20180320:13:53:26:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Updating temporary files filespace flat files…

20180320:13:53:26:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Promoting standby…

20180320:13:53:26:030558 gpactivatestandby:server227:gpadmin-[DEBUG]:-Waiting for connection…

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Standby master is promoted

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Reading current configuration…

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[DEBUG]:-Connecting to dbname=’postgres’

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Writing the gp_dbid file – /home/gpadmin/master/gpseg-1/gp_dbid…

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-But found an already existing file.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Hence removed that existing file.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Creating a new file…

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Wrote dbid: 1 to the file.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Now marking it as read only…

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Verifying the file…

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:——————————————————

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-The activation of the standby master has completed successfully.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-server227 is now the new primary master.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-You will need to update your user access mechanism to reflect

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-the change of master hostname.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Do not re-start the failed master while the fail-over master is

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-operational, this could result in database corruption!

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-MASTER_DATA_DIRECTORY is now /home/gpadmin/master/gpseg-1 if

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-this has changed as a result of the standby master activation, remember

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-to change this in any startup scripts etc, that may be configured

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-to set this value.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-MASTER_PORT is now 5432, if this has changed, you

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-may need to make additional configuration changes to allow access

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-to the Greenplum instance.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Refer to the Administrator Guide for instructions on how to re-activate

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-the master to its previous state once it becomes available.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-Query planner statistics must be updated on all databases

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-following standby master activation.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:-When convenient, run ANALYZE against all user databases.

20180320:13:53:27:030558 gpactivatestandby:server227:gpadmin-[INFO]:——————————————————

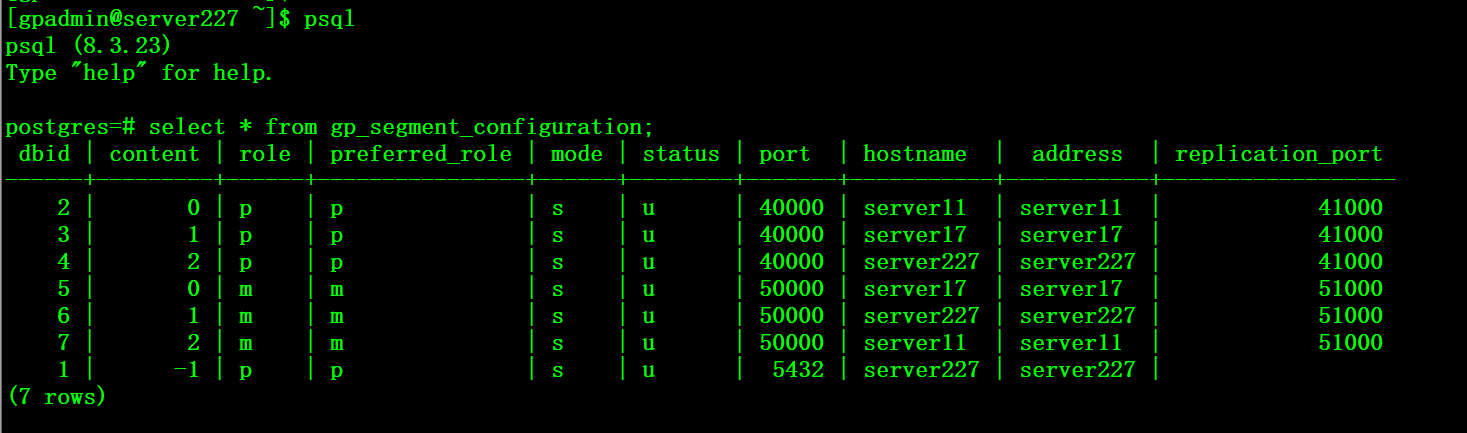

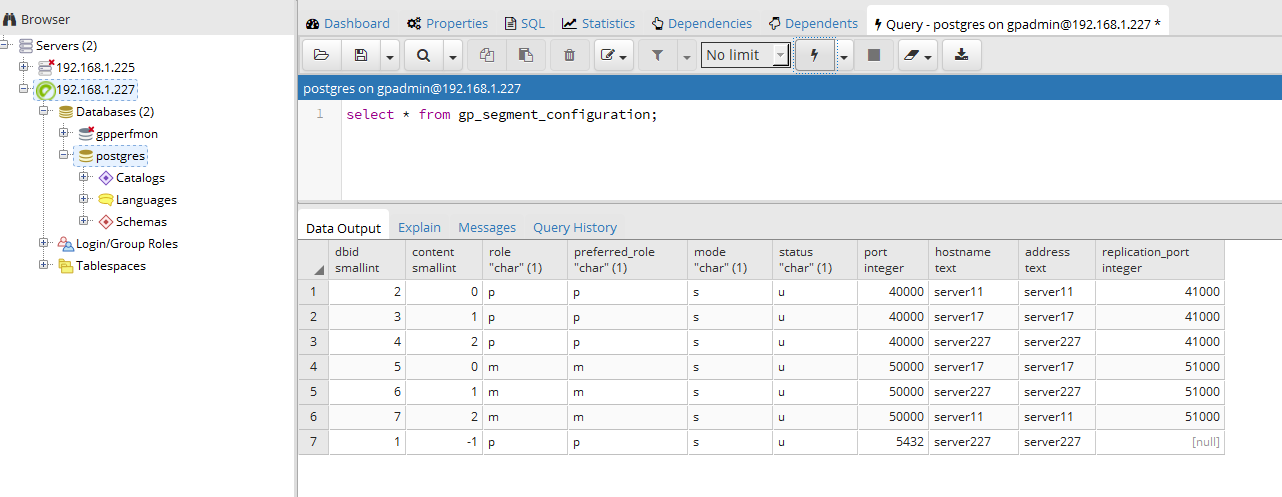

3、测试提升后的主库是否正常

$ psql -d postgres -c ‘ANALYZE’

postgres=# select * from gp_segment_configuration;

4、这里可能需要同步配置一下 pg_hba.conf 文件,才能通过客户端进行远程连接

到这里我们已经完成了 master 节点的故障切换工作。

三、添加新的 master standby

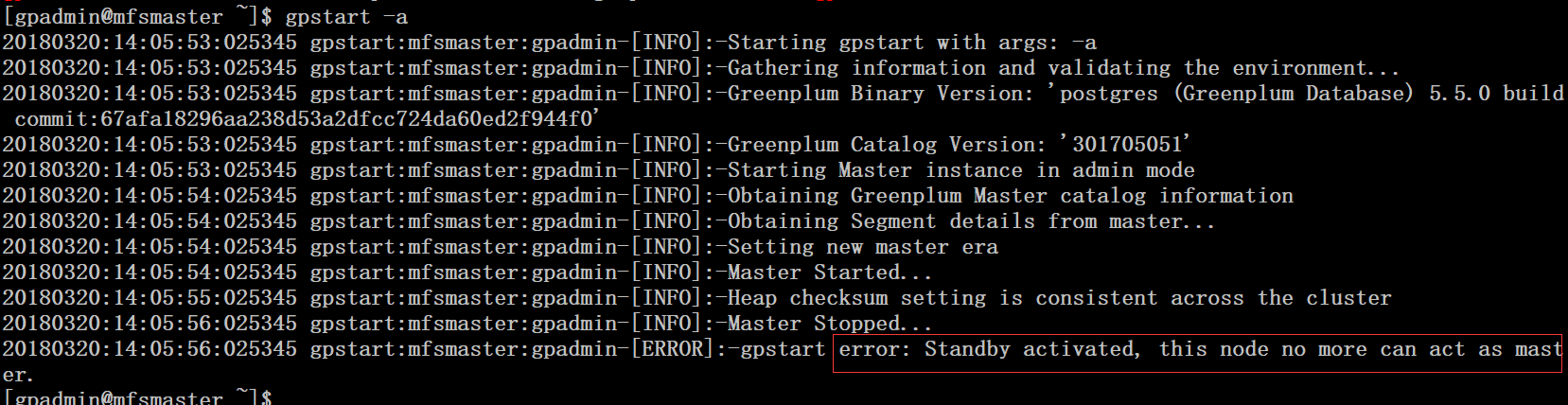

1、在 225 服务器上执行 gpstart - a 命令启动 gpdb 数据库的时候报错”error: Standby active, this node no more can act as master”。当 standby 提升为 master 的时候,原 master 服务器从故障中恢复过来,需要以 standby 的角色加入

2、在原 master 服务器 225 上的数据进行备份

$ cd master/

$ ls

gpseg-1

$ mv gpseg-1/ backup-gpseg-1

3、在当前 master 服务器 227 上进行 gpinitstandby 添加 225 为 standby

$ gpinitstandby -s mfsmaster

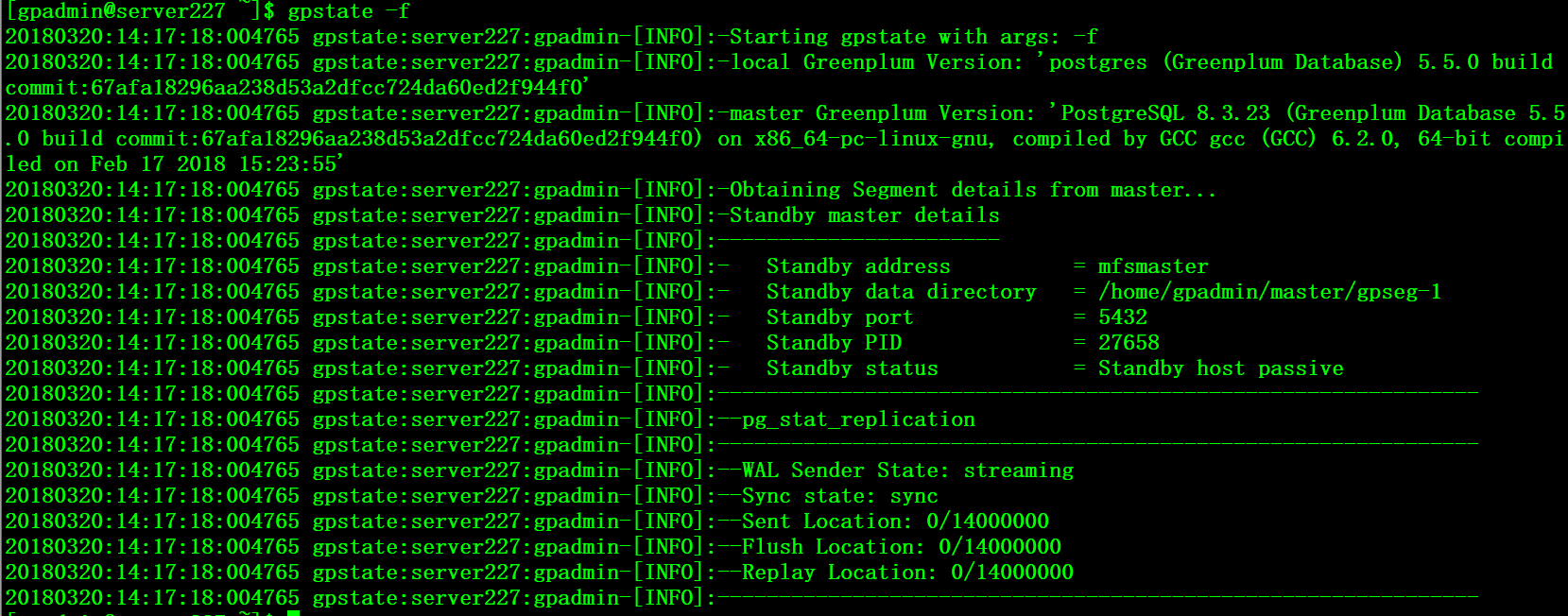

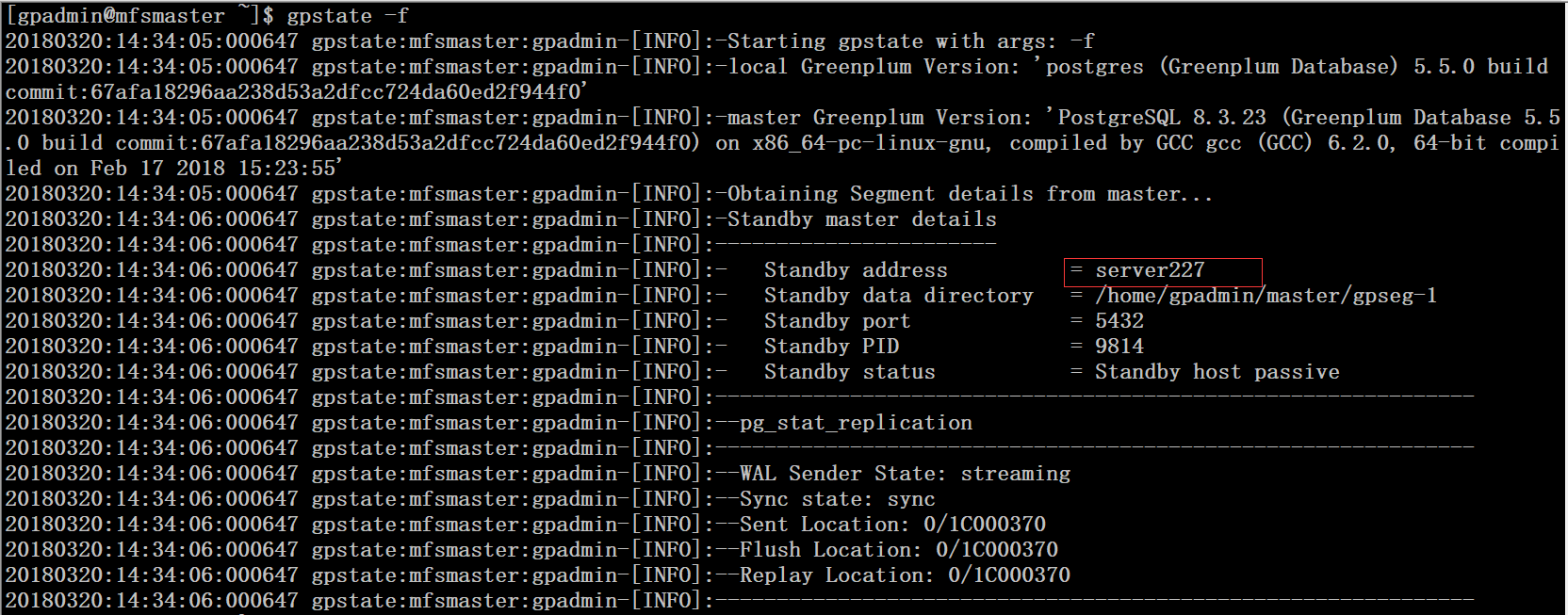

$ gpstate -f

四、primary segment 和 mirror segment 切换

1、首先我们来捋一下当前的数据库环境

Master segment: 192.168.1.227/24 hostname: server227

Stadnby segemnt: 192.168.1.225/24 hostname: mfsmaster

Segment 节点 1:192.168.1.227/24 hostname: server227

Segment 节点 2:192.168.1.17/24 hostname: server17

Segment 节点 3:192.168.1.11/24 hostname: server11

每个 segment 节点上分别运行一个 primary segment 和一个 mirror segment

2、接着我们采用同样的方式把 227 服务器上 gpadmin 用户的所有进行杀掉

$ killall -u gpadmin

3、在 225 服务器上执行切换 master 命令

$ gpactivatestandby -d master/gpseg-1/

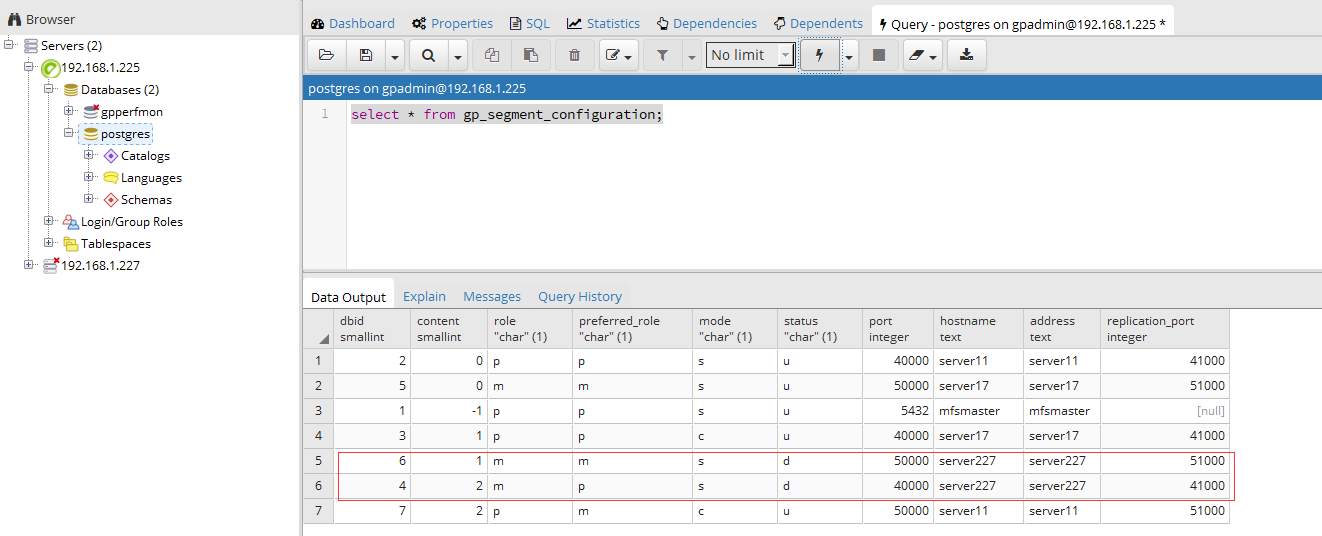

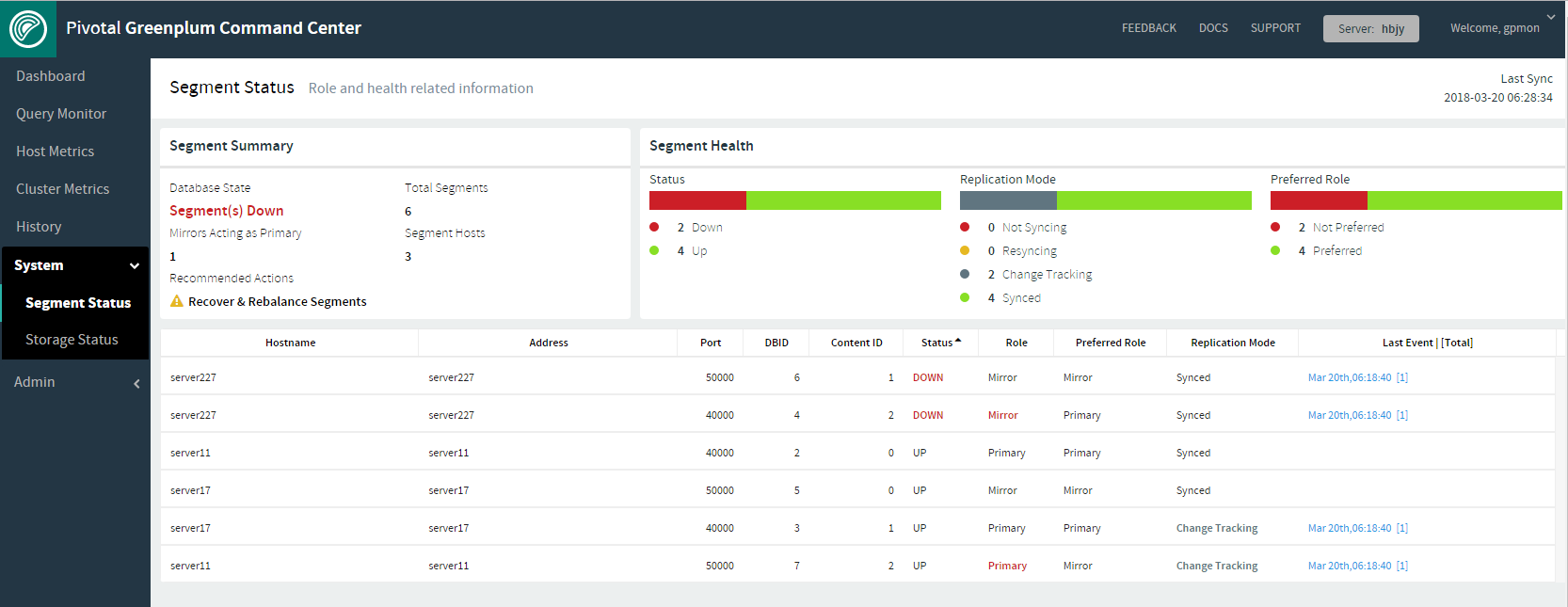

4、完成切换后使用客户端工具连接查看 segment 状态,可以看到 227 服务器上的 server227

的 primary 和 mirror 节点都已经宕机了。

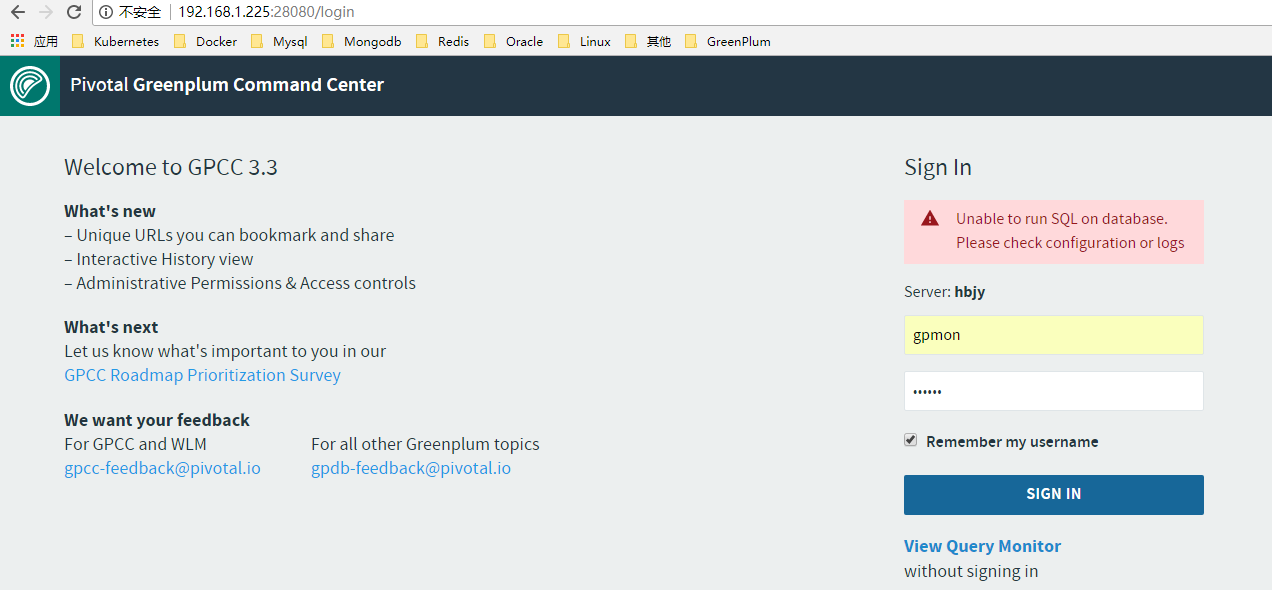

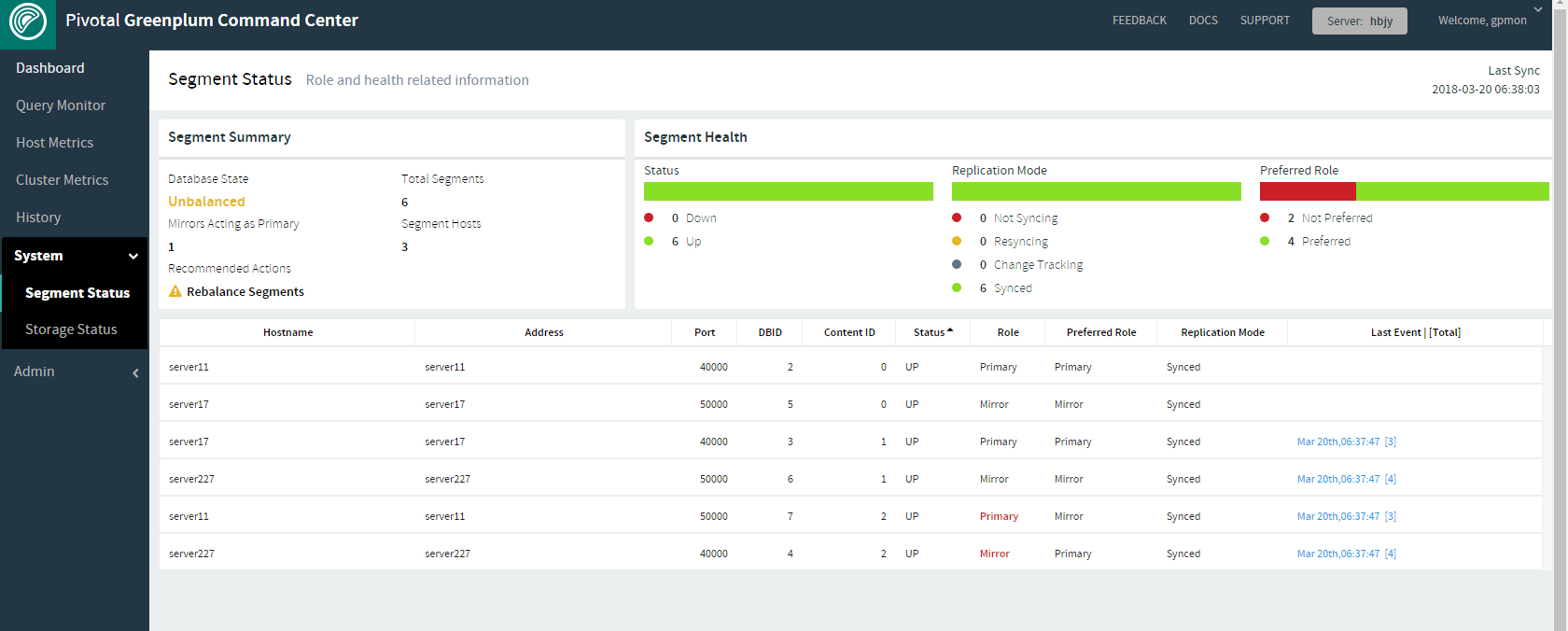

5、这里为了方面查看,我们使用 greenplum-cc-web 工具来查看集群状态

$ gpcmdr –start hbjy

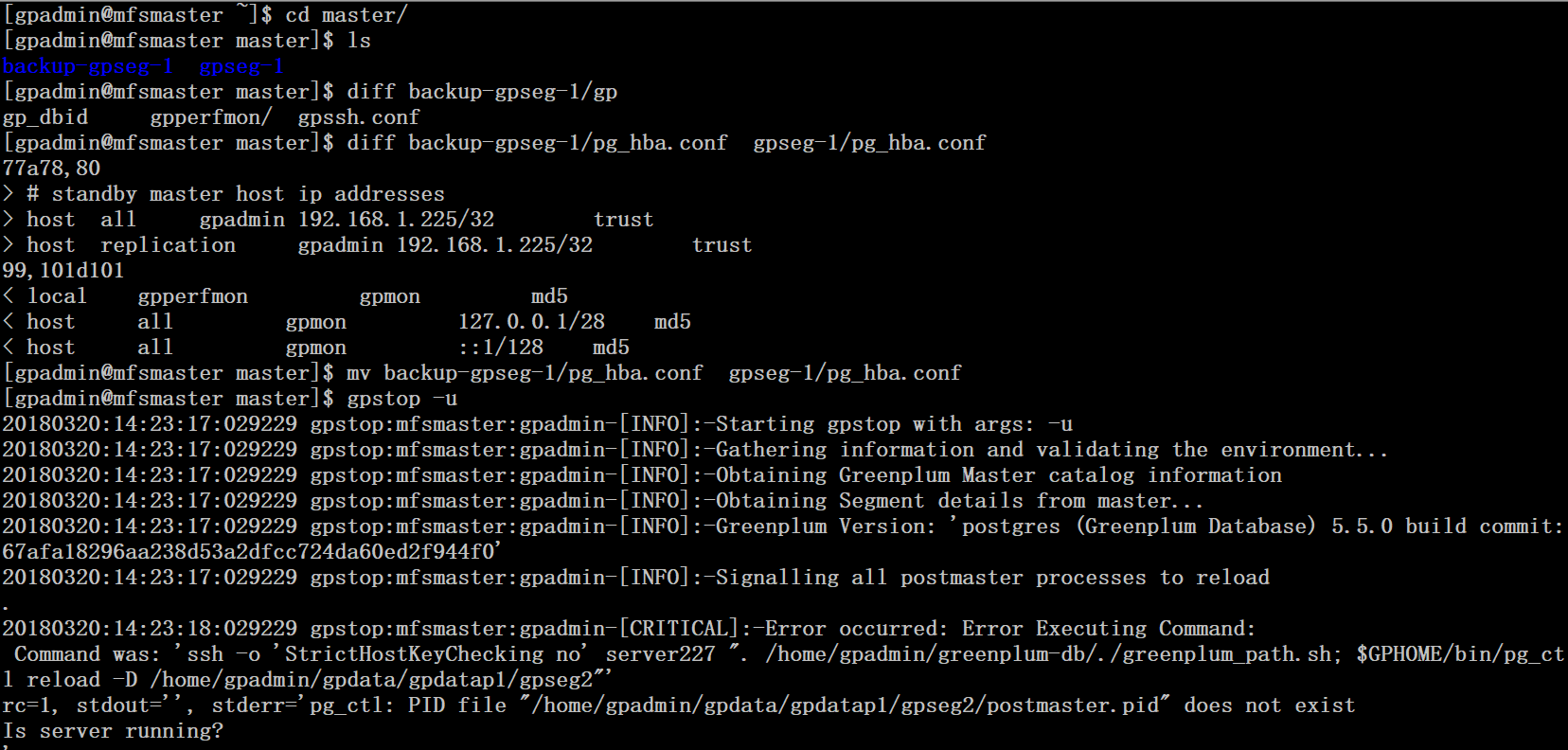

需要将 pg_hba.conf 文件还原回去,因为 227 上所有的 segment 已经宕掉,执行 gpstop - u 命令会有报错

在 segment status 页面中可以看到当前 segment 的状态是异常的。server11 上有两组的 primary segment,这很危险,如果不幸 server11 也宕机了,整个集群的状态就变成不可用了。

6��将 server227 做为 master standby 重新加入集群

$ cd master/

$ mv gpseg-1/ backupgpseg-1

$ gpinitstandby -s server227

7、在 master 上重启集群

$ gpstop -M immediate

$ gpstart -a

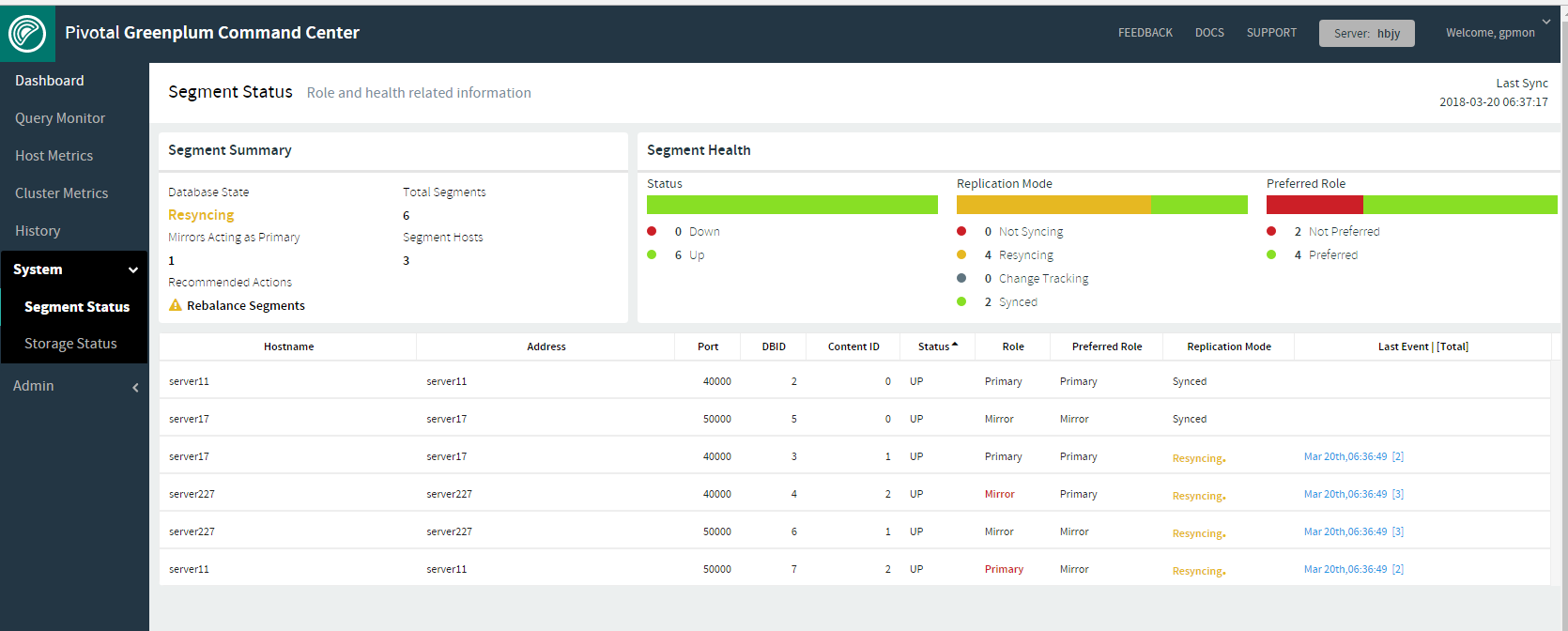

8、在 master 上恢复集群

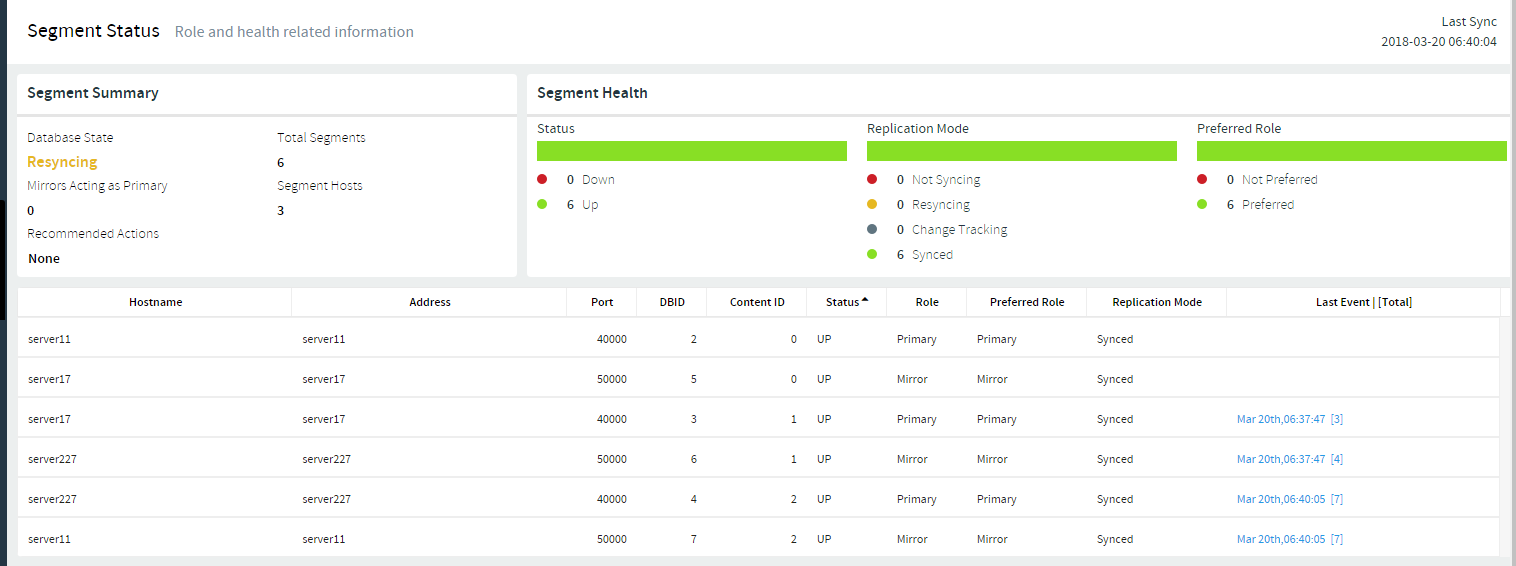

$ gprecoverseg

虽然所有的 segment 均已启动,但 server11 上有还是有两组的 primary segment

9、在 master 上恢复 segment 节点分布到原始状态

$ gprecoverseg -r

参考文档:greenplum