共计 5179 个字符,预计需要花费 13 分钟才能阅读完成。

1. 介绍

PostgreSQL 提供了一个 copy 命令的便利数据加载工具,copy 命令源于 PostgreSQL 数据库,copy 命令支持文件与表之间的数据加载和表对文件的数据卸载。pg_bulkload 是一种用于 PostgreSQL 的高速数据加载工具,相比 copy 命令。最大的优势就是速度。优势在让我们跳过 shared buffer,wal buffer。直接写文件。pg_bulkload 的 direct 模式就是这种思路来实现的,它还包含了数据恢复功能,即导入失败的话,需要恢复。

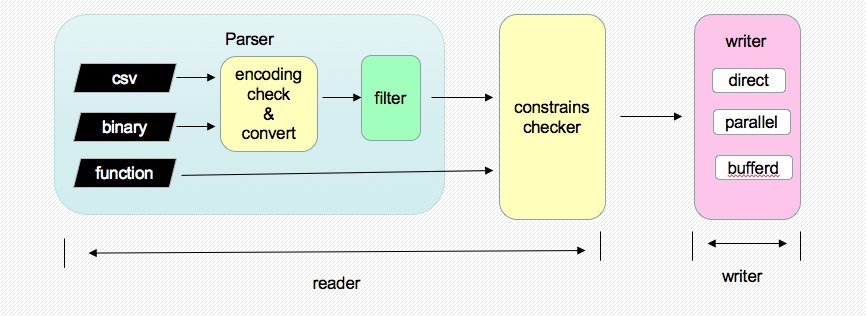

2. pg_bulkload 架构图

pg_bulkload 主要包括两个模块:reader 和 writer。reader 负责读取文件、解析 tuple,writer 负责把解析出的 tuple 写入输出源中。pg_bulkload 最初的版本功能很简单,只是加载数据。3.1 版本增加了数据过滤的功能。

3. pg_bulkload 安装

[root@Postgres201 ~]# unzip pg_bulkload-VERSION3_1_10.zip

[root@Postgres201 ~]# cd pg_bulkload-VERSION3_1_10

[root@Postgres201 pg_bulkload-VERSION3_1_10]# make

[root@Postgres201 pg_bulkload-VERSION3_1_10]# make install

安装完成;要使用它需要建 extension

[postgres@Postgres201 ~]$ psql lottu lottu

psql (9.6.0)

Type “help” for help.

lottu=# create extension pg_bulkload;

CREATE EXTENSION

4. pg_bulkload 参数

[postgres@Postgres201 ~]$ pg_bulkload –help

pg_bulkload is a bulk data loading tool for PostgreSQL

Usage:

Dataload: pg_bulkload [dataload options] control_file_path

Recovery: pg_bulkload -r [-D DATADIR]

Dataload options:

-i, –input=INPUT INPUT path or function

-O, –output=OUTPUT OUTPUT path or table

-l, –logfile=LOGFILE LOGFILE path

-P, –parse-badfile=* PARSE_BADFILE path

-u, –duplicate-badfile=* DUPLICATE_BADFILE path

-o, –option=”key=val” additional option

Recovery options:

-r, –recovery execute recovery

-D, –pgdata=DATADIR database directory

Connection options:

-d, –dbname=DBNAME database to connect

-h, –host=HOSTNAME database server host or socket directory

-p, –port=PORT database server port

-U, –username=USERNAME user name to connect as

-w, –no-password never prompt for password

-W, –password force password prompt

Generic options:

-e, –echo echo queries

-E, –elevel=LEVEL set output message level

–help show this help, then exit

–version output version information, then exit

5. pg_bulkload 的使用

创建测试表 tbl_lottu 和测试文件 tbl_lottu_output.txt

[postgres@Postgres201 ~]$ psql lottu lottu

psql (9.6.0)

Type “help” for help.

lottu=# create table tbl_lottu(id int,name text);

CREATE TABLE

[postgres@Postgres201 ~]$ seq 100000| awk ‘{print $0″|lottu”}’ > tbl_lottu_output.txt

1. 不使用控制文件使用参数

[postgres@Postgres201 ~]$ pg_bulkload -i /home/postgres/tbl_lottu_output.txt -O tbl_lottu -l /home/postgres/tbl_lottu_output.log -P /home/postgres/tbl_lottu_bad.txt -o “TYPE=CSV” -o “DELIMITER=|” -d lottu -U lottu

NOTICE: BULK LOAD START

NOTICE: BULK LOAD END

0 Rows skipped.

100000 Rows successfully loaded.

0 Rows not loaded due to parse errors.

0 Rows not loaded due to duplicate errors.

0 Rows replaced with new rows.

[postgres@Postgres201 ~]$ cat tbl_lottu_output.log

pg_bulkload 3.1.9 on 2018-07-12 13:37:18.326685+08

INPUT = /home/postgres/tbl_lottu_output.txt

PARSE_BADFILE = /home/postgres/tbl_lottu_bad.txt

LOGFILE = /home/postgres/tbl_lottu_output.log

LIMIT = INFINITE

PARSE_ERRORS = 0

CHECK_CONSTRAINTS = NO

TYPE = CSV

SKIP = 0

DELIMITER = |

QUOTE = “\””

ESCAPE = “\””

NULL =

OUTPUT = lottu.tbl_lottu

MULTI_PROCESS = NO

VERBOSE = NO

WRITER = DIRECT

DUPLICATE_BADFILE = /data/postgres/data/pg_bulkload/20180712133718_lottu_lottu_tbl_lottu.dup.csv

DUPLICATE_ERRORS = 0

ON_DUPLICATE_KEEP = NEW

TRUNCATE = NO

0 Rows skipped.

100000 Rows successfully loaded.

0 Rows not loaded due to parse errors.

0 Rows not loaded due to duplicate errors.

0 Rows replaced with new rows.

Run began on 2018-07-12 13:37:18.326685+08

Run ended on 2018-07-12 13:37:18.594494+08

CPU 0.14s/0.07u sec elapsed 0.27 sec

2. 导入之前先清理表数据

[postgres@Postgres201 ~]$ pg_bulkload -i /home/postgres/tbl_lottu_output.txt -O tbl_lottu -l /home/postgres/tbl_lottu_output.log -P /home/postgres/tbl_lottu_bad.txt -o “TYPE=CSV” -o “DELIMITER=|” -o “TRUNCATE=YES” -d lottu -U lottu

NOTICE: BULK LOAD START

NOTICE: BULK LOAD END

0 Rows skipped.

100000 Rows successfully loaded.

0 Rows not loaded due to parse errors.

0 Rows not loaded due to duplicate errors.

0 Rows replaced with new rows.

[postgres@Postgres201 ~]$ psql lottu lottu -c “select count(1) from tbl_lottu;”

count

——–

100000

(1 row)

3. 使用控制文件

新建控制文件 lottu.ctl

INPUT = /home/postgres/lotu01

PARSE_BADFILE = /home/postgres/tbl_lottu_bad.txt

LOGFILE = /home/postgres/tbl_lottu_output.log

LIMIT = INFINITE

PARSE_ERRORS = 0

CHECK_CONSTRAINTS = NO

TYPE = CSV

SKIP = 5

DELIMITER = |

QUOTE = “\””

ESCAPE = “\””

OUTPUT = lottu.tbl_lottu

MULTI_PROCESS = NO

WRITER = DIRECT

DUPLICATE_BADFILE = /home/postgres/tbl_lottu.dup.csv

DUPLICATE_ERRORS = 0

ON_DUPLICATE_KEEP = NEW

TRUNCATE = YES

使用控制文件进行加载操作

pg_bulkload /home/postgres/lottu.ctl -d lottu -U lottu

[postgres@Postgres201 ~]$ pg_bulkload /home/postgres/lottu.ctl -d lottu -U lottu

NOTICE: BULK LOAD START

NOTICE: BULK LOAD END

5 Rows skipped.

95 Rows successfully loaded.

0 Rows not loaded due to parse errors.

0 Rows not loaded due to duplicate errors.

0 Rows replaced with new rows.

6. 总结

pg_bulkload 是一种用于 PostgreSQL 的高速数据加载工具,相比 copy 命令。最大的优势就是速度。优势在让我们跳过 shared buffer,wal buffer。直接写文件。pg_bulkload 的 direct 模式就是这种思路来实现的。不足的是; 表字段的顺序要跟导入的文件报错一致。希望后续版本能开发。

Linux 下 RPM 包方式安装 PostgreSQL https://www.linuxidc.com/Linux/2016-03/128906.htm

Linux 下安装 PostgreSQL https://www.linuxidc.com/Linux/2016-12/138765.htm

Linux 下 PostgreSQL 安装部署指南 https://www.linuxidc.com/Linux/2016-11/137603.htm

Linux 下安装 PostgreSQL 并设置基本参数 https://www.linuxidc.com/Linux/2016-11/137324.htm

Ubuntu 16.04 下 PostgreSQL 主从复制配置 https://www.linuxidc.com/Linux/2017-08/146190.htm

Fedota 24 将数据库升级到 PostgreSQL 9.5 https://www.linuxidc.com/Linux/2016-11/137374.htm

CentOS7 安装配置 PostgreSQL9.6 https://www.linuxidc.com/Linux/2017-10/147536.htm

CentOS5.8_x64 下离线安装 PostgreSQL 9.1 https://www.linuxidc.com/Linux/2017-10/147822.htm

CentOS 6.5 下 PostgreSQL 服务部署 https://www.linuxidc.com/Linux/2017-01/139144.htm

: