
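Sharding is a method for distributing data across multiple machines. MongoDB uses sharding to support deployments with very large data sets and high-throughput operations.

Database systems with large data sets or high-throughput applications can challenge the capacity of a single server. For example, high query rates can exhaust the server's CPU capacity, and a working set larger than the system's RAM stresses the I/O capacity of the disk drives.

There are two ways to address system growth: vertical scaling and horizontal scaling.

Vertical scaling means increasing the capacity of a single server, for example by using a more powerful CPU, adding more RAM, or increasing the amount of storage space. Limits in available technology may prevent a single machine from being powerful enough for a given workload. Cloud-based providers also impose hard ceilings based on the hardware configurations they offer. As a result, vertical scaling has a practical maximum.

Horizontal scaling means dividing the system's dataset and load across multiple servers, and adding servers to increase capacity as required. Each individual machine may not be especially fast or large, but each one handles only a subset of the overall workload. This can be more efficient than a single high-speed, high-capacity server. To expand the deployment's capacity, you only add servers as needed, which can cost less overall than high-end hardware for a single machine. The trade-off is more complex infrastructure and deployment maintenance.

MongoDB supports horizontal scaling through sharding.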

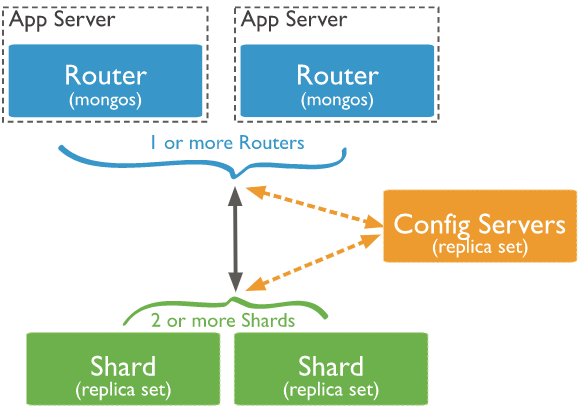

I. Components

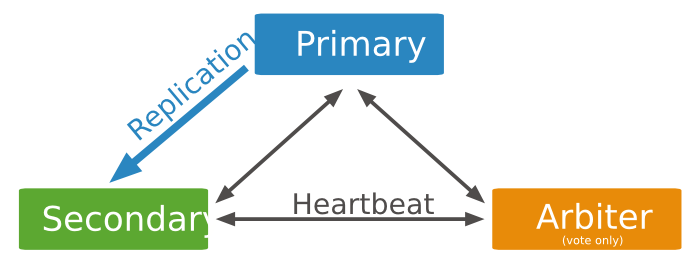

- shard:每个分片包含分片数据的子集。每个分片都可以部署为 副本集(replica set)。可以分片,不分片的数据存于主分片服务器上。部署为 3 成员副本集

- mongos:mongos 充当查询路由器,提供客户端应用程序和分片集群之间的接口。可以部署多个 mongos 路由器。部署 1 个或者多个 mongos

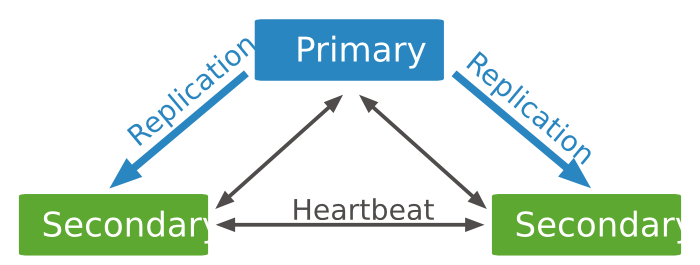

- config servers:配置服务器存储群集的元数据和配置设置。从 MongoDB 3.4 开始,必须将配置服务器 部署为 3 成员副本集

注意:应用程序或者客户端必须要连接 mongos 才能与集群的数据进行交互,永远 不应 连接到单个分片以执行读取或写入操作。

Architecture of a shard replica set:

Architecture of the config server replica set:

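Sharding strategies

1. Hashed sharding

- Hashed sharding uses a hashed index to partition data across the sharded cluster. The hashed index computes the hash of a single field's value and uses it as the index value, which then serves as the shard key.

- When resolving queries that use hashed indexes, MongoDB computes the hash values automatically. Applications do not need to compute hashes.

- Distributing data by hashed value helps achieve a more even distribution, especially for data sets whose shard key changes monotonically.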

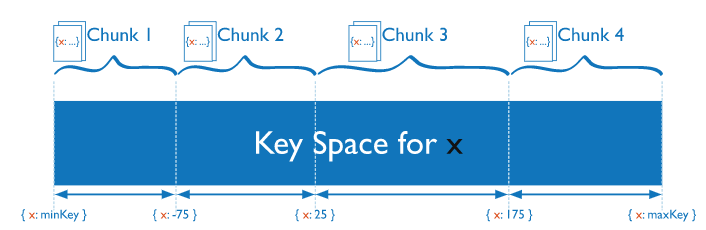
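The following Node.js sketch shows why hashing helps when the shard key increases monotonically. It assigns the newest keys to a fixed number of buckets in two ways: by hash and by contiguous range. It uses MD5 from Node's `crypto` module purely for illustration. It does not reproduce MongoDB's hashed-index function or its chunk boundaries, and the bucket count of 4 is an arbitrary assumption.

```javascript
const crypto = require("crypto");

// Map a key to one of `buckets` buckets by hashing it (illustrative only;
// MongoDB's hashed index uses its own 64-bit hash, not this exact scheme).
function hashedBucket(key, buckets) {
  const digest = crypto.createHash("md5").update(String(key)).digest();
  // Use the first 4 bytes of the digest as an unsigned 32-bit integer.
  return digest.readUInt32BE(0) % buckets;
}

// Map a key to a bucket by contiguous range over [0, maxKey).
function rangedBucket(key, buckets, maxKey) {
  return Math.min(buckets - 1, Math.floor((key * buckets) / maxKey));
}

// Count how many keys in [first, last) land in each bucket.
function countInserts(bucketFn, first, last, buckets) {
  const counts = new Array(buckets).fill(0);
  for (let k = first; k < last; k++) counts[bucketFn(k)]++;
  return counts;
}

const BUCKETS = 4;
const MAX_KEY = 10000;
// Simulate the newest 1000 keys of a monotonically increasing sequence.
const ranged = countInserts((k) => rangedBucket(k, BUCKETS, MAX_KEY), 9000, 10000, BUCKETS);
const hashed = countInserts((k) => hashedBucket(k, BUCKETS), 9000, 10000, BUCKETS);
console.log("ranged:", ranged); // every recent insert falls into the last range
console.log("hashed:", hashed); // recent inserts are spread over all buckets
```

With ranged placement, all recent writes hit the bucket holding the highest range, which creates a write hotspot. With hashed placement, the same writes land roughly evenly across the buckets.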

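2. Ranged sharding

- Ranged sharding divides data into ranges based on the shard key values. Each chunk is then assigned a range of shard key values.

- For queries on the shard key, mongos can route operations only to the shards that contain the required data.

- The shard key needs careful planning: a poorly chosen key can lead to uneven data distribution.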
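The targeted-versus-broadcast routing described above can be modeled in a few lines. This Node.js sketch uses a hypothetical in-memory chunk table (`chunks`) with numeric shard-key bounds. The table stands in for the chunk metadata that the config servers hold. It is a simplified model, not mongos's actual routing code.

```javascript
// Hypothetical chunk table: each chunk covers [min, max) of the shard key.
// -Infinity / Infinity stand in for MongoDB's $minKey / $maxKey.
const chunks = [
  { min: -Infinity, max: 100, shard: "shard1" },
  { min: 100, max: 500, shard: "shard2" },
  { min: 500, max: Infinity, shard: "shard1" },
];

// Equality match on the shard key: exactly one chunk, hence one shard.
function routeEquality(key) {
  const chunk = chunks.find((c) => key >= c.min && key < c.max);
  return [chunk.shard];
}

// Range query [lo, hi): every shard owning a chunk that overlaps the interval.
function routeRange(lo, hi) {
  const shards = chunks.filter((c) => c.min < hi && lo < c.max).map((c) => c.shard);
  return [...new Set(shards)];
}

// A query without the shard key must be sent to every shard (scatter-gather).
function routeWithoutShardKey() {
  return [...new Set(chunks.map((c) => c.shard))];
}

console.log(routeEquality(250));     // [ 'shard2' ]
console.log(routeRange(0, 150));     // [ 'shard1', 'shard2' ]
console.log(routeWithoutShardKey()); // [ 'shard1', 'shard2' ]
```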

II. Deployment

1. Environment

| Server name | IP address | OS version | MongoDB version | Config Server port | Shard Server 1 port | Shard Server 2 port | Shard Server 3 port | Role |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| mongo1.example.net | 10.10.18.10 | CentOS 7.5 | 4.0 | 27027 (Primary) | 27017 (Primary) | 27018 (Arbiter) | 27019 (Secondary) | Config server and shard server |
| mongo2.example.net | 10.10.18.11 | CentOS 7.5 | 4.0 | 27027 (Secondary) | 27017 (Secondary) | 27018 (Primary) | 27019 (Arbiter) | Config server and shard server |
| mongo3.example.net | 10.10.18.12 | CentOS 7.5 | 4.0 | 27027 (Secondary) | 27017 (Arbiter) | 27018 (Secondary) | 27019 (Primary) | Config server and shard server |
| mongos.example.net | 192.168.11.10 | CentOS 7.5 | 4.0 | - | - | - | - | mongos (port 27017) |

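The officially recommended configuration uses logical DNS names. This document therefore writes the mappings between server names and IP addresses to the /etc/hosts file on every server.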
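As an illustration, you can generate the /etc/hosts entries implied by the environment table instead of typing them by hand. This Node.js sketch only formats the table's hostnames and IP addresses. It prints the lines rather than writing /etc/hosts directly, which is a deliberate assumption so you can review them before appending them on each server.

```javascript
// Hostname-to-IP mappings taken from the environment table above.
const hosts = [
  { ip: "10.10.18.10", name: "mongo1.example.net" },
  { ip: "10.10.18.11", name: "mongo2.example.net" },
  { ip: "10.10.18.12", name: "mongo3.example.net" },
  { ip: "192.168.11.10", name: "mongos.example.net" },
];

// Format one "IP<TAB>hostname" line per entry, the layout /etc/hosts uses.
function hostsFileLines(entries) {
  return entries.map((h) => `${h.ip}\t${h.name}`).join("\n");
}

console.log(hostsFileLines(hosts));
```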

2. Install MongoDB

For installing MongoDB on the four servers in this environment, see: MongoDB Installation

Create the directories the environment needs:

```shell
mkdir -p /data/mongodb/data/{configServer,shard1,shard2,shard3}
mkdir -p /data/mongodb/{log,pid}
```

3. Create the Config Server replica set

Configuration file on each of the three servers: /data/mongodb/configServer.conf

On mongo1.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/configServer.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/configServer"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/configServer.pid"
net:
  bindIp: mongo1.example.net
  port: 27027
replication:
  replSetName: cs0
sharding:
  clusterRole: configsvr
```

On mongo2.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/configServer.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/configServer"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/configServer.pid"
net:
  bindIp: mongo2.example.net
  port: 27027
replication:
  replSetName: cs0
sharding:
  clusterRole: configsvr
```

On mongo3.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/configServer.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/configServer"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/configServer.pid"
net:
  bindIp: mongo3.example.net
  port: 27027
replication:
  replSetName: cs0
sharding:
  clusterRole: configsvr
```
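The three configServer.conf files differ only in `net.bindIp`, so one option is to render them from a single template. The Node.js sketch below is a hypothetical helper (`configServerConf`) that reproduces the settings shown above with two-space YAML indentation. It is a convenience for this guide, not something MongoDB provides.

```javascript
// Render configServer.conf for one host; only bindIp varies between hosts.
function configServerConf(host) {
  return [
    "systemLog:",
    "  destination: file",
    '  path: "/data/mongodb/log/configServer.log"',
    "  logAppend: true",
    "storage:",
    '  dbPath: "/data/mongodb/data/configServer"',
    "  journal:",
    "    enabled: true",
    "  wiredTiger:",
    "    engineConfig:",
    "      cacheSizeGB: 2",
    "processManagement:",
    "  fork: true",
    '  pidFilePath: "/data/mongodb/pid/configServer.pid"',
    "net:",
    `  bindIp: ${host}`,
    "  port: 27027",
    "replication:",
    "  replSetName: cs0",
    "sharding:",
    "  clusterRole: configsvr",
    "",
  ].join("\n");
}

for (const host of ["mongo1.example.net", "mongo2.example.net", "mongo3.example.net"]) {
  console.log(`# ${host}: /data/mongodb/configServer.conf`);
  console.log(configServerConf(host));
}
```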

Start the Config Server on all three servers:

```shell
mongod -f /data/mongodb/configServer.conf
```

Connect to one of the Config Servers:

```shell
mongo --host mongo1.example.net --port 27027
```

Result:

```
MongoDB shell version v4.0.10
connecting to: mongodb://mongo1.example.net:27027/?gssapiServiceName=mongodb
Implicit session: session {"id" : UUID("1a4d4252-11d0-40bb-90da-f144692be88d") }
MongoDB server version: 4.0.10
Server has startup warnings:
2019-06-14T14:28:56.013+0800 I CONTROL [initandlisten]
2019-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2019-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2019-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
2019-06-14T14:28:56.013+0800 I CONTROL [initandlisten]
2019-06-14T14:28:56.013+0800 I CONTROL [initandlisten]
2019-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2019-06-14T14:28:56.013+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2019-06-14T14:28:56.014+0800 I CONTROL [initandlisten]
2019-06-14T14:28:56.014+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2019-06-14T14:28:56.014+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2019-06-14T14:28:56.014+0800 I CONTROL [initandlisten]
>
```

Configure the replica set:

```javascript
rs.initiate(
  {
    _id: "cs0",
    configsvr: true,
    members: [
      { _id : 0, host : "mongo1.example.net:27027" },
      { _id : 1, host : "mongo2.example.net:27027" },
      { _id : 2, host : "mongo3.example.net:27027" }
    ]
  }
)
```

Result:

```
{
  "ok" : 1,
  "operationTime" : Timestamp(1560493908, 1),
  "$gleStats" : {
    "lastOpTime" : Timestamp(1560493908, 1),
    "electionId" : ObjectId("000000000000000000000000")
  },
  "lastCommittedOpTime" : Timestamp(0, 0),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1560493908, 1),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
```
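The rs.initiate() document above follows a regular pattern: sequential member `_id` values, `host:port` strings, and `configsvr: true` for a config server replica set. A small plain-JavaScript helper can build it. The helper name `replSetConfig` is hypothetical, and the sketch is written and tested with Node.js. Using it inside the mongo shell is an assumption that may require adapting the syntax.

```javascript
// Build a replica set configuration document for rs.initiate().
// `members` is a list of { host, priority?, arbiterOnly? } objects.
function replSetConfig(setName, members, { configsvr = false } = {}) {
  const config = {
    _id: setName,
    members: members.map((m, i) => {
      const member = { _id: i, host: m.host };
      if (m.arbiterOnly) member.arbiterOnly = true;
      else if (m.priority !== undefined) member.priority = m.priority;
      return member;
    }),
  };
  if (configsvr) config.configsvr = true;
  return config;
}

const cs0 = replSetConfig(
  "cs0",
  ["mongo1", "mongo2", "mongo3"].map((h) => ({ host: `${h}.example.net:27027` })),
  { configsvr: true }
);
console.log(JSON.stringify(cs0, null, 2));
```

The same helper also covers the shard replica sets later in this guide, where members carry `priority` or `arbiterOnly`.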

Check the replica set status:

```
cs0:PRIMARY> rs.status()
```

Result: the three servers are 1 Primary and 2 Secondaries.

```
{
  "set" : "cs0",
  "date" : ISODate("2019-06-14T06:33:31.348Z"),
  "myState" : 1,
  "term" : NumberLong(1),
  "syncingTo" : "",
  "syncSourceHost" : "",
  "syncSourceId" : -1,
  "configsvr" : true,
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1560494006, 1),
      "t" : NumberLong(1)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1560494006, 1),
      "t" : NumberLong(1)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1560494006, 1),
      "t" : NumberLong(1)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1560494006, 1),
      "t" : NumberLong(1)
    }
  },
  "lastStableCheckpointTimestamp" : Timestamp(1560493976, 1),
  "members" : [
    {
      "_id" : 0,
      "name" : "mongo1.example.net:27027",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 277,
      "optime" : {
        "ts" : Timestamp(1560494006, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2019-06-14T06:33:26Z"),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "could not find member to sync from",
      "electionTime" : Timestamp(1560493919, 1),
      "electionDate" : ISODate("2019-06-14T06:31:59Z"),
      "configVersion" : 1,
      "self" : true,
      "lastHeartbeatMessage" : ""
    },
    {
      "_id" : 1,
      "name" : "mongo2.example.net:27027",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 102,
      "optime" : {
        "ts" : Timestamp(1560494006, 1),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1560494006, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2019-06-14T06:33:26Z"),
      "optimeDurableDate" : ISODate("2019-06-14T06:33:26Z"),
      "lastHeartbeat" : ISODate("2019-06-14T06:33:29.385Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-14T06:33:29.988Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "mongo1.example.net:27027",
      "syncSourceHost" : "mongo1.example.net:27027",
      "syncSourceId" : 0,
      "infoMessage" : "",
      "configVersion" : 1
    },
    {
      "_id" : 2,
      "name" : "mongo3.example.net:27027",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 102,
      "optime" : {
        "ts" : Timestamp(1560494006, 1),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1560494006, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2019-06-14T06:33:26Z"),
      "optimeDurableDate" : ISODate("2019-06-14T06:33:26Z"),
      "lastHeartbeat" : ISODate("2019-06-14T06:33:29.384Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-14T06:33:29.868Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "mongo1.example.net:27027",
      "syncSourceHost" : "mongo1.example.net:27027",
      "syncSourceId" : 0,
      "infoMessage" : "",
      "configVersion" : 1
    }
  ],
  "ok" : 1,
  "operationTime" : Timestamp(1560494006, 1),
  "$gleStats" : {
    "lastOpTime" : Timestamp(1560493908, 1),
    "electionId" : ObjectId("7fffffff0000000000000001")
  },
  "lastCommittedOpTime" : Timestamp(1560494006, 1),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1560494006, 1),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
```
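You can tally the member states instead of reading the full rs.status() output to confirm one Primary and two Secondaries. This Node.js sketch uses a hypothetical helper, `countMemberStates`. It expects an object shaped like the rs.status() output above and reads only the `members[].stateStr` field. Calling it in the mongo shell as `countMemberStates(rs.status())` is an untested assumption.

```javascript
// Tally replica set members by their stateStr (PRIMARY, SECONDARY, ARBITER, ...).
function countMemberStates(status) {
  const counts = {};
  for (const member of status.members) {
    counts[member.stateStr] = (counts[member.stateStr] || 0) + 1;
  }
  return counts;
}

// Minimal stand-in for the cs0 rs.status() output shown above.
const sample = {
  set: "cs0",
  members: [
    { name: "mongo1.example.net:27027", stateStr: "PRIMARY" },
    { name: "mongo2.example.net:27027", stateStr: "SECONDARY" },
    { name: "mongo3.example.net:27027", stateStr: "SECONDARY" },
  ],
};
console.log(countMemberStates(sample)); // { PRIMARY: 1, SECONDARY: 2 }
```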

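Create the administrative user:

```javascript
use admin
db.createUser(
  {
    user: "myUserAdmin",
    pwd: "abc123",
    roles: [ { role: "userAdminAnyDatabase", db: "admin" }, "readWriteAnyDatabase" ]
  }
)
```

Enable client authentication and internal authentication on the Config Servers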

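Use a keyfile for internal authentication. Create the keyfile on one of the servers:

```shell
openssl rand -base64 756 > /data/mongodb/keyfile
chmod 400 /data/mongodb/keyfile
```

Copy this keyfile to the other three servers and make sure its permissions are 400.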
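For reference, the openssl command above produces 756 random bytes encoded as base64, which is exactly 1008 characters. MongoDB requires keyfile contents to be 6 to 1024 characters long and drawn from the base64 character set, and it strips whitespace. The Node.js sketch below performs the same generation with `crypto.randomBytes` and checks those constraints. The helper names are hypothetical. Writing the file with mode `0o400` mirrors the `chmod 400` step, but only when the file is newly created.

```javascript
const crypto = require("crypto");
const fs = require("fs");

// Equivalent of `openssl rand -base64 756`, without line wrapping.
function generateKeyfileContent(numBytes = 756) {
  return crypto.randomBytes(numBytes).toString("base64");
}

// Check MongoDB's keyfile rules: after stripping whitespace, 6-1024 characters,
// all from the base64 alphabet (A-Z, a-z, 0-9, +, /, =).
function isValidKeyfileContent(content) {
  const stripped = content.replace(/\s/g, "");
  return (
    stripped.length >= 6 &&
    stripped.length <= 1024 &&
    /^[A-Za-z0-9+\/=]+$/.test(stripped)
  );
}

// Create the keyfile readable only by its owner (mode 0400, like chmod 400).
// The mode is applied only if the file does not already exist.
function writeKeyfile(path, content) {
  fs.writeFileSync(path, content + "\n", { mode: 0o400 });
}

const key = generateKeyfileContent();
console.log(key.length, isValidKeyfileContent(key)); // 1008 true
```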

Enable authentication in the /data/mongodb/configServer.conf configuration file:

```yaml
security:
  keyFile: "/data/mongodb/keyfile"
  clusterAuthMode: "keyFile"
  authorization: "enabled"
```

Then shut down the two Secondaries one at a time, and then the Primary:

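```shell
mongod -f /data/mongodb/configServer.conf --shutdown
```

Start the Primary first, then the two Secondaries:

```shell
mongod -f /data/mongodb/configServer.conf
```

Log in with the mongo shell using the username and password:

```shell
mongo --host mongo1.example.net --port 27027 -u myUserAdmin --authenticationDatabase "admin" -p 'abc123'
```

Note: The user was not granted cluster administration privileges when it was created. As a result, after logging in it can list all databases but cannot view the replica set status.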

```
cs0:PRIMARY> rs.status()
{
  "operationTime" : Timestamp(1560495861, 1),
  "ok" : 0,
  "errmsg" : "not authorized on admin to execute command {replSetGetStatus: 1.0, lsid: { id: UUID(\"59dd4dc0-b34f-43b9-a341-a2f43ec1dcfa\") }, $clusterTime: {clusterTime: Timestamp(1560495849, 1), signature: {hash: BinData(0, A51371EC5AA54BB1B05ED9342BFBF03CBD87F2D9), keyId: 6702270356301807629 } }, $db: \"admin\" }",
  "code" : 13,
  "codeName" : "Unauthorized",
  "$gleStats" : {
    "lastOpTime" : Timestamp(0, 0),
    "electionId" : ObjectId("7fffffff0000000000000002")
  },
  "lastCommittedOpTime" : Timestamp(1560495861, 1),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1560495861, 1),
    "signature" : {
      "hash" : BinData(0,"3UkTpXxyU8WI1TyS+u5vgewueGA="),
      "keyId" : NumberLong("6702270356301807629")
    }
  }
}
cs0:PRIMARY> show dbs
admin   0.000GB
config  0.000GB
local   0.000GB
```

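Grant the user cluster administration privileges:

```javascript
use admin
db.system.users.find()  // view the current user information
db.grantRolesToUser("myUserAdmin", ["clusterAdmin"])
```

View the replica set status again: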

```
cs0:PRIMARY> rs.status()
{
  "set" : "cs0",
  "date" : ISODate("2019-06-14T07:18:20.223Z"),
  "myState" : 1,
  "term" : NumberLong(2),
  "syncingTo" : "",
  "syncSourceHost" : "",
  "syncSourceId" : -1,
  "configsvr" : true,
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1560496690, 1),
      "t" : NumberLong(2)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1560496690, 1),
      "t" : NumberLong(2)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1560496690, 1),
      "t" : NumberLong(2)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1560496690, 1),
      "t" : NumberLong(2)
    }
  },
  "lastStableCheckpointTimestamp" : Timestamp(1560496652, 1),
  "members" : [
    {
      "_id" : 0,
      "name" : "mongo1.example.net:27027",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 1123,
      "optime" : {
        "ts" : Timestamp(1560496690, 1),
        "t" : NumberLong(2)
      },
      "optimeDate" : ISODate("2019-06-14T07:18:10Z"),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "",
      "electionTime" : Timestamp(1560495590, 1),
      "electionDate" : ISODate("2019-06-14T06:59:50Z"),
      "configVersion" : 1,
      "self" : true,
      "lastHeartbeatMessage" : ""
    },
    {
      "_id" : 1,
      "name" : "mongo2.example.net:27027",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 1113,
      "optime" : {
        "ts" : Timestamp(1560496690, 1),
        "t" : NumberLong(2)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1560496690, 1),
        "t" : NumberLong(2)
      },
      "optimeDate" : ISODate("2019-06-14T07:18:10Z"),
      "optimeDurableDate" : ISODate("2019-06-14T07:18:10Z"),
      "lastHeartbeat" : ISODate("2019-06-14T07:18:18.974Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-14T07:18:19.142Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "mongo1.example.net:27027",
      "syncSourceHost" : "mongo1.example.net:27027",
      "syncSourceId" : 0,
      "infoMessage" : "",
      "configVersion" : 1
    },
    {
      "_id" : 2,
      "name" : "mongo3.example.net:27027",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 1107,
      "optime" : {
        "ts" : Timestamp(1560496690, 1),
        "t" : NumberLong(2)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1560496690, 1),
        "t" : NumberLong(2)
      },
      "optimeDate" : ISODate("2019-06-14T07:18:10Z"),
      "optimeDurableDate" : ISODate("2019-06-14T07:18:10Z"),
      "lastHeartbeat" : ISODate("2019-06-14T07:18:18.999Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-14T07:18:18.998Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "mongo2.example.net:27027",
      "syncSourceHost" : "mongo2.example.net:27027",
      "syncSourceId" : 1,
      "infoMessage" : "",
      "configVersion" : 1
    }
  ],
  "ok" : 1,
  "operationTime" : Timestamp(1560496690, 1),
  "$gleStats" : {
    "lastOpTime" : {
      "ts" : Timestamp(1560496631, 1),
      "t" : NumberLong(2)
    },
    "electionId" : ObjectId("7fffffff0000000000000002")
  },
  "lastCommittedOpTime" : Timestamp(1560496690, 1),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1560496690, 1),
    "signature" : {
      "hash" : BinData(0,"lHiVw7WeO81npTi2IMW16reAN84="),
      "keyId" : NumberLong("6702270356301807629")
    }
  }
}
```

4. Deploy Shard Server 1 (Shard1) and its replica set

Configuration file on each of the three servers: /data/mongodb/shard1.conf

On mongo1.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard1.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard1"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard1.pid"
net:
  bindIp: mongo1.example.net
  port: 27017
replication:
  replSetName: "shard1"
sharding:
  clusterRole: shardsvr
```

On mongo2.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard1.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard1"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard1.pid"
net:
  bindIp: mongo2.example.net
  port: 27017
replication:
  replSetName: "shard1"
sharding:
  clusterRole: shardsvr
```

On mongo3.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard1.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard1"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard1.pid"
net:
  bindIp: mongo3.example.net
  port: 27017
replication:
  replSetName: "shard1"
sharding:
  clusterRole: shardsvr
```

Start the shard on all three servers:

```shell
mongod -f /data/mongodb/shard1.conf
```

Connect to the shard member on the server that will become the Primary:

```shell
mongo --host mongo1.example.net --port 27017
```

Result:

```
MongoDB shell version v4.0.10
connecting to: mongodb://mongo1.example.net:27017/?gssapiServiceName=mongodb
Implicit session: session { "id" : UUID("91e76384-cdae-411f-ab88-b7a8bd4555d1") }
MongoDB server version: 4.0.10
Server has startup warnings:
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten]
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten]
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten]
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten]
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
2019-06-14T15:32:39.243+0800 I CONTROL [initandlisten]
>
```

Configure the replica set:

```javascript
rs.initiate(
  {
    _id : "shard1",
    members: [
      { _id : 0, host : "mongo1.example.net:27017", priority: 2 },
      { _id : 1, host : "mongo2.example.net:27017", priority: 1 },
      { _id : 2, host : "mongo3.example.net:27017", arbiterOnly: true }
    ]
  }
)
```

Note: the higher a member's priority value, the more likely it is to be elected Primary.
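The sketch below gives a rough mental model of the priority note: among electable members, it picks the one with the highest priority. This is a deliberate simplification. Real MongoDB elections also depend on replication optime, member health, votes, and timing. Arbiters always have priority 0: they can vote but never become Primary. The helper name `likelyPrimary` and the `healthy` flag are hypothetical.

```javascript
// Simplified model: pick the healthy, data-bearing member with the highest
// priority (non-arbiter members default to priority 1). Real elections also
// consider optime freshness, votes and timing.
function likelyPrimary(members) {
  const electable = members.filter(
    (m) => !m.arbiterOnly && m.healthy !== false && (m.priority ?? 1) > 0
  );
  if (electable.length === 0) return null;
  return electable.reduce((best, m) =>
    (m.priority ?? 1) > (best.priority ?? 1) ? m : best
  ).host;
}

const shard1 = [
  { host: "mongo1.example.net:27017", priority: 2 },
  { host: "mongo2.example.net:27017", priority: 1 },
  { host: "mongo3.example.net:27017", arbiterOnly: true },
];
console.log(likelyPrimary(shard1)); // mongo1.example.net:27017
// If mongo1 becomes unavailable, mongo2 is the only electable member left.
const mongo1Down = shard1.map((m, i) => (i === 0 ? { ...m, healthy: false } : m));
console.log(likelyPrimary(mongo1Down)); // mongo2.example.net:27017
```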

Check the replica set status:

```
shard1:PRIMARY> rs.status()
{
  "set" : "shard1",
  "date" : ISODate("2019-06-20T01:33:21.809Z"),
  "myState" : 1,
  "term" : NumberLong(2),
  "syncingTo" : "",
  "syncSourceHost" : "",
  "syncSourceId" : -1,
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1560994393, 1),
      "t" : NumberLong(2)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1560994393, 1),
      "t" : NumberLong(2)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1560994393, 1),
      "t" : NumberLong(2)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1560994393, 1),
      "t" : NumberLong(2)
    }
  },
  "lastStableCheckpointTimestamp" : Timestamp(1560994373, 1),
  "members" : [
    {
      "_id" : 0,
      "name" : "mongo1.example.net:27017",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 43,
      "optime" : {
        "ts" : Timestamp(1560994393, 1),
        "t" : NumberLong(2)
      },
      "optimeDate" : ISODate("2019-06-20T01:33:13Z"),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "could not find member to sync from",
      "electionTime" : Timestamp(1560994371, 1),
      "electionDate" : ISODate("2019-06-20T01:32:51Z"),
      "configVersion" : 1,
      "self" : true,
      "lastHeartbeatMessage" : ""
    },
    {
      "_id" : 1,
      "name" : "mongo2.example.net:27017",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 36,
      "optime" : {
        "ts" : Timestamp(1560994393, 1),
        "t" : NumberLong(2)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1560994393, 1),
        "t" : NumberLong(2)
      },
      "optimeDate" : ISODate("2019-06-20T01:33:13Z"),
      "optimeDurableDate" : ISODate("2019-06-20T01:33:13Z"),
      "lastHeartbeat" : ISODate("2019-06-20T01:33:19.841Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-20T01:33:21.164Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "mongo1.example.net:27017",
      "syncSourceHost" : "mongo1.example.net:27017",
      "syncSourceId" : 0,
      "infoMessage" : "",
      "configVersion" : 1
    },
    {
      "_id" : 2,
      "name" : "mongo3.example.net:27017",
      "health" : 1,
      "state" : 7,
      "stateStr" : "ARBITER",
      "uptime" : 32,
      "lastHeartbeat" : ISODate("2019-06-20T01:33:19.838Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-20T01:33:20.694Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "",
      "configVersion" : 1
    }
  ],
  "ok" : 1
}
```

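Result: the three members are 1 Primary, 1 Secondary, and 1 Arbiter.

Create the administrative user:

```javascript
use admin
db.createUser(
  {
    user: "myUserAdmin",
    pwd: "abc123",
    roles: [ { role: "userAdminAnyDatabase", db: "admin" }, "readWriteAnyDatabase", "clusterAdmin" ]
  }
)
```

Enable client authentication and internal authentication on Shard1 by adding the following to /data/mongodb/shard1.conf on all three servers: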

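```yaml
security:
  keyFile: "/data/mongodb/keyfile"
  clusterAuthMode: "keyFile"
  authorization: "enabled"
```

Then shut down the Arbiter, the Secondary, and the Primary, in that order:

```shell
mongod -f /data/mongodb/shard1.conf --shutdown
```

Then start the Primary, the Secondary, and the Arbiter, in that order:

```shell
mongod -f /data/mongodb/shard1.conf
```

Log in with the mongo shell using the username and password:

```shell
mongo --host mongo1.example.net --port 27017 -u myUserAdmin --authenticationDatabase "admin" -p 'abc123'
```

5. Deploy Shard Server 2 (Shard2) and its replica set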

Configuration file on each of the three servers: /data/mongodb/shard2.conf

On mongo1.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard2.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard2"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard2.pid"
net:
  bindIp: mongo1.example.net
  port: 27018
replication:
  replSetName: "shard2"
sharding:
  clusterRole: shardsvr
```

On mongo2.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard2.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard2"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard2.pid"
net:
  bindIp: mongo2.example.net
  port: 27018
replication:
  replSetName: "shard2"
sharding:
  clusterRole: shardsvr
```

On mongo3.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard2.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard2"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard2.pid"
net:
  bindIp: mongo3.example.net
  port: 27018
replication:
  replSetName: "shard2"
sharding:
  clusterRole: shardsvr
```

Start the shard on all three servers:

```shell
mongod -f /data/mongodb/shard2.conf
```

Connect to the shard member on the server that will become the Primary:

```shell
mongo --host mongo2.example.net --port 27018
```

Configure the replica set (note: the roles of the three servers are different from Shard1):

```javascript
rs.initiate(
  {
    _id : "shard2",
    members: [
      { _id : 0, host : "mongo1.example.net:27018", arbiterOnly: true },
      { _id : 1, host : "mongo2.example.net:27018", priority: 2 },
      { _id : 2, host : "mongo3.example.net:27018", priority: 1 }
    ]
  }
)
```

Check the replica set status:

```
shard2:PRIMARY> rs.status()
{
  "set" : "shard2",
  "date" : ISODate("2019-06-20T01:59:08.996Z"),
  "myState" : 1,
  "term" : NumberLong(1),
  "syncingTo" : "",
  "syncSourceHost" : "",
  "syncSourceId" : -1,
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1560995943, 1),
      "t" : NumberLong(1)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1560995943, 1),
      "t" : NumberLong(1)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1560995943, 1),
      "t" : NumberLong(1)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1560995943, 1),
      "t" : NumberLong(1)
    }
  },
  "lastStableCheckpointTimestamp" : Timestamp(1560995913, 1),
  "members" : [
    {
      "_id" : 0,
      "name" : "mongo1.example.net:27018",
      "health" : 1,
      "state" : 7,
      "stateStr" : "ARBITER",
      "uptime" : 107,
      "lastHeartbeat" : ISODate("2019-06-20T01:59:08.221Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-20T01:59:07.496Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "",
      "configVersion" : 1
    },
    {
      "_id" : 1,
      "name" : "mongo2.example.net:27018",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 412,
      "optime" : {
        "ts" : Timestamp(1560995943, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2019-06-20T01:59:03Z"),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "could not find member to sync from",
      "electionTime" : Timestamp(1560995852, 1),
      "electionDate" : ISODate("2019-06-20T01:57:32Z"),
      "configVersion" : 1,
      "self" : true,
      "lastHeartbeatMessage" : ""
    },
    {
      "_id" : 2,
      "name" : "mongo3.example.net:27018",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 107,
      "optime" : {
        "ts" : Timestamp(1560995943, 1),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1560995943, 1),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2019-06-20T01:59:03Z"),
      "optimeDurableDate" : ISODate("2019-06-20T01:59:03Z"),
      "lastHeartbeat" : ISODate("2019-06-20T01:59:08.220Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-20T01:59:08.716Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "mongo2.example.net:27018",
      "syncSourceHost" : "mongo2.example.net:27018",
      "syncSourceId" : 1,
      "infoMessage" : "",
      "configVersion" : 1
    }
  ],
  "ok" : 1,
  "operationTime" : Timestamp(1560995943, 1),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1560995943, 1),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
```

Result: the three members are 1 Primary, 1 Secondary, and 1 Arbiter.

To set up login authentication, follow the same steps as for Shard1.
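The member roles rotate across the shards so that each physical server hosts exactly one Primary, one Secondary, and one Arbiter. Presumably this spreads the Primaries, which take all writes, across the machines. The Node.js sketch below encodes the layout from the environment table and checks that property. The data structure and helper names are illustrative assumptions.

```javascript
// Role of each host in each shard replica set, from the environment table.
const layout = {
  shard1: { mongo1: "PRIMARY", mongo2: "SECONDARY", mongo3: "ARBITER" },
  shard2: { mongo1: "ARBITER", mongo2: "PRIMARY", mongo3: "SECONDARY" },
  shard3: { mongo1: "SECONDARY", mongo2: "ARBITER", mongo3: "PRIMARY" },
};

// For every host, count how many shards it serves in each role.
function rolesPerHost(layoutByShard) {
  const result = {};
  for (const roles of Object.values(layoutByShard)) {
    for (const [host, role] of Object.entries(roles)) {
      result[host] = result[host] || {};
      result[host][role] = (result[host][role] || 0) + 1;
    }
  }
  return result;
}

// True if every host has exactly one Primary, one Secondary and one Arbiter.
function isBalanced(layoutByShard) {
  return Object.values(rolesPerHost(layoutByShard)).every(
    (r) => r.PRIMARY === 1 && r.SECONDARY === 1 && r.ARBITER === 1
  );
}

console.log(rolesPerHost(layout).mongo1); // { PRIMARY: 1, ARBITER: 1, SECONDARY: 1 }
console.log(isBalanced(layout)); // true
```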

6. Deploy Shard Server 3 (Shard3) and its replica set

Configuration file on each of the three servers: /data/mongodb/shard3.conf

On mongo1.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard3.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard3"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard3.pid"
net:
  bindIp: mongo1.example.net
  port: 27019
replication:
  replSetName: "shard3"
sharding:
  clusterRole: shardsvr
```

On mongo2.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard3.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard3"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard3.pid"
net:
  bindIp: mongo2.example.net
  port: 27019
replication:
  replSetName: "shard3"
sharding:
  clusterRole: shardsvr
```

On mongo3.example.net:

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/shard3.log"
  logAppend: true
storage:
  dbPath: "/data/mongodb/data/shard3"
  journal:
    enabled: true
  wiredTiger:
    engineConfig:
      cacheSizeGB: 2
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/pid/shard3.pid"
net:
  bindIp: mongo3.example.net
  port: 27019
replication:
  replSetName: "shard3"
sharding:
  clusterRole: shardsvr
```

Start the shard on all three servers:

```shell
mongod -f /data/mongodb/shard3.conf
```

Connect to the shard member on the server that will become the Primary:

```shell
mongo --host mongo3.example.net --port 27019
```

Configure the replica set (note: the roles of the three servers change again):

```javascript
rs.initiate(
  {
    _id : "shard3",
    members: [
      { _id : 0, host : "mongo1.example.net:27019", priority: 1 },
      { _id : 1, host : "mongo2.example.net:27019", arbiterOnly: true },
      { _id : 2, host : "mongo3.example.net:27019", priority: 2 }
    ]
  }
)
```

Check the replica set status:

```
shard3:PRIMARY> rs.status()
{
  "set" : "shard3",
  "date" : ISODate("2019-06-20T02:21:56.990Z"),
  "myState" : 1,
  "term" : NumberLong(1),
  "syncingTo" : "",
  "syncSourceHost" : "",
  "syncSourceId" : -1,
  "heartbeatIntervalMillis" : NumberLong(2000),
  "optimes" : {
    "lastCommittedOpTime" : {
      "ts" : Timestamp(1560997312, 2),
      "t" : NumberLong(1)
    },
    "readConcernMajorityOpTime" : {
      "ts" : Timestamp(1560997312, 2),
      "t" : NumberLong(1)
    },
    "appliedOpTime" : {
      "ts" : Timestamp(1560997312, 2),
      "t" : NumberLong(1)
    },
    "durableOpTime" : {
      "ts" : Timestamp(1560997312, 2),
      "t" : NumberLong(1)
    }
  },
  "lastStableCheckpointTimestamp" : Timestamp(1560997312, 1),
  "members" : [
    {
      "_id" : 0,
      "name" : "mongo1.example.net:27019",
      "health" : 1,
      "state" : 2,
      "stateStr" : "SECONDARY",
      "uptime" : 17,
      "optime" : {
        "ts" : Timestamp(1560997312, 2),
        "t" : NumberLong(1)
      },
      "optimeDurable" : {
        "ts" : Timestamp(1560997312, 2),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2019-06-20T02:21:52Z"),
      "optimeDurableDate" : ISODate("2019-06-20T02:21:52Z"),
      "lastHeartbeat" : ISODate("2019-06-20T02:21:56.160Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-20T02:21:55.155Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "mongo3.example.net:27019",
      "syncSourceHost" : "mongo3.example.net:27019",
      "syncSourceId" : 2,
      "infoMessage" : "",
      "configVersion" : 1
    },
    {
      "_id" : 1,
      "name" : "mongo2.example.net:27019",
      "health" : 1,
      "state" : 7,
      "stateStr" : "ARBITER",
      "uptime" : 17,
      "lastHeartbeat" : ISODate("2019-06-20T02:21:56.159Z"),
      "lastHeartbeatRecv" : ISODate("2019-06-20T02:21:55.021Z"),
      "pingMs" : NumberLong(0),
      "lastHeartbeatMessage" : "",
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "",
      "configVersion" : 1
    },
    {
      "_id" : 2,
      "name" : "mongo3.example.net:27019",
      "health" : 1,
      "state" : 1,
      "stateStr" : "PRIMARY",
      "uptime" : 45,
      "optime" : {
        "ts" : Timestamp(1560997312, 2),
        "t" : NumberLong(1)
      },
      "optimeDate" : ISODate("2019-06-20T02:21:52Z"),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "infoMessage" : "could not find member to sync from",
      "electionTime" : Timestamp(1560997310, 1),
      "electionDate" : ISODate("2019-06-20T02:21:50Z"),
      "configVersion" : 1,
      "self" : true,
      "lastHeartbeatMessage" : ""
    }
  ],
  "ok" : 1,
  "operationTime" : Timestamp(1560997312, 2),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1560997312, 2),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
```

Result: the three members are 1 Primary, 1 Secondary, and 1 Arbiter.

To set up login authentication, follow the same steps as for Shard1.

7. Configure mongos to connect to the sharded cluster

mongos configuration file on mongos.example.net: /data/mongodb/mongos.conf

```yaml
systemLog:
  destination: file
  path: "/data/mongodb/log/mongos.log"
  logAppend: true
processManagement:
  fork: true
net:
  port: 27017
  bindIp: mongos.example.net
sharding:
  configDB: "cs0/mongo1.example.net:27027,mongo2.example.net:27027,mongo3.example.net:27027"
security:
  keyFile: "/data/mongodb/keyfile"
  clusterAuthMode: "keyFile"
```

Start the mongos service:

```shell
mongos -f /data/mongodb/mongos.conf
```

Connect to mongos:

```shell
mongo --host mongos.example.net --port 27017 -u myUserAdmin --authenticationDatabase "admin" -p 'abc123'
```

View the current cluster status:

```
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5d0af6ed4fa51757cd032108")
  }
  shards:
  active mongoses:
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      No recent migrations
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
```

Add Shard1 and Shard2 to the cluster first. Shard3 will be added later, after data has been inserted, to simulate scaling out.

```javascript
sh.addShard("shard1/mongo1.example.net:27017,mongo2.example.net:27017,mongo3.example.net:27017")
sh.addShard("shard2/mongo1.example.net:27018,mongo2.example.net:27018,mongo3.example.net:27018")
```

Result:

```
mongos> sh.addShard("shard1/mongo1.example.net:27017,mongo2.example.net:27017,mongo3.example.net:27017")
{
  "shardAdded" : "shard1",
  "ok" : 1,
  "operationTime" : Timestamp(1561009140, 7),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1561009140, 7),
    "signature" : {
      "hash" : BinData(0,"2je9FsNfMfBMHp+X/6d98B5tLH8="),
      "keyId" : NumberLong("6704442493062086684")
    }
  }
}
mongos> sh.addShard("shard2/mongo1.example.net:27018,mongo2.example.net:27018,mongo3.example.net:27018")
{
  "shardAdded" : "shard2",
  "ok" : 1,
  "operationTime" : Timestamp(1561009148, 5),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1561009148, 6),
    "signature" : {
      "hash" : BinData(0,"8FvJuCy8kCrMu5nB9PYILj0bzLk="),
      "keyId" : NumberLong("6704442493062086684")
    }
  }
}
```
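`sh.addShard()` and the mongos `sharding.configDB` setting both use the same `<replSetName>/<host:port>,<host:port>,...` seed-list format. The Node.js sketch below shows how a helper could build these strings from the hostnames and ports used above. The name `seedList` is hypothetical, not part of MongoDB.

```javascript
// Build a "<replSetName>/host1:port,host2:port,..." seed list.
function seedList(replSetName, hosts, port) {
  return `${replSetName}/${hosts.map((h) => `${h}:${port}`).join(",")}`;
}

const dataHosts = ["mongo1.example.net", "mongo2.example.net", "mongo3.example.net"];
const shardPorts = { shard1: 27017, shard2: 27018, shard3: 27019 };

// Value for sharding.configDB in mongos.conf.
console.log(seedList("cs0", dataHosts, 27027));
// One sh.addShard() call per shard replica set.
for (const [name, port] of Object.entries(shardPorts)) {
  console.log(`sh.addShard("${seedList(name, dataHosts, port)}")`);
}
```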

Check the cluster status:

```
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5d0af6ed4fa51757cd032108")
  }
  shards:
    { "_id" : "shard1", "host" : "shard1/mongo1.example.net:27017,mongo2.example.net:27017", "state" : 1 }
    { "_id" : "shard2", "host" : "shard2/mongo2.example.net:27018,mongo3.example.net:27018", "state" : 1 }
  active mongoses:
    "4.0.10" : 1
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      No recent migrations
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
```

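8. Testing

To make testing easier, set the chunk size to 1 MB:

```javascript
use config
db.settings.save( { "_id": "chunksize", "value": 1 } )
```

While connected to mongos, create the database and enable sharding for it:

```javascript
sh.enableSharding("user_center")
```

This creates the "user_center" database with sharding enabled. Check the result: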

```
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5d0af6ed4fa51757cd032108")
  }
  shards:
    { "_id" : "shard1", "host" : "shard1/mongo1.example.net:27017,mongo2.example.net:27017", "state" : 1 }
    { "_id" : "shard2", "host" : "shard2/mongo2.example.net:27018,mongo3.example.net:27018", "state" : 1 }
  active mongoses:
    "4.0.10" : 1
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      No recent migrations
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
      config.system.sessions
        shard key: { "_id" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1  1
        { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
    { "_id" : "user_center", "primary" : "shard1", "partitioned" : true, "version" : { "uuid" : UUID("3b05ccb5-796a-4e9e-a36e-99b860b6bee0"), "lastMod" : 1 } }
```

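Create the `users` collection and shard it:

```
sh.shardCollection("user_center.users",{"name":1})
```

The `users` collection in the `user_center` database uses the shard key `{"name":1}`, so documents are distributed according to the value of their `name` field. Now check the cluster status: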

```
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5d0af6ed4fa51757cd032108")
  }
  shards:
    { "_id" : "shard1", "host" : "shard1/mongo1.example.net:27017,mongo2.example.net:27017", "state" : 1 }
    { "_id" : "shard2", "host" : "shard2/mongo2.example.net:27018,mongo3.example.net:27018", "state" : 1 }
  active mongoses:
    "4.0.10" : 1
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      No recent migrations
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
      config.system.sessions
        shard key: { "_id" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1  1
        { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
    { "_id" : "user_center", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("33c79b3f-aa18-4755-a5e8-b8f7f3d05893"), "lastMod" : 1 } }
      user_center.users
        shard key: { "name" : 1 }
        unique: false
        balancing: true
        chunks:
          shard2  1
        { "name" : { "$minKey" : 1 } } -->> { "name" : { "$maxKey" : 1 } } on : shard2 Timestamp(1, 0)
```
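The three setup steps of this section (chunk size, `enableSharding`, `shardCollection`) can also be issued from a driver connected to mongos. A hedged sketch, assuming PyMongo: `Database.command()` turns extra keyword arguments into fields of the command document, and `update_one(..., upsert=True)` stands in for the shell's `db.settings.save()`. The function name `configure_sharding` is hypothetical, and the client is passed in so the function itself does not need to import pymongo:

```python
def configure_sharding(client, db_name="user_center", coll_name="users",
                       shard_key=None, chunk_size_mb=1):
    """Repeat the shell setup steps through a client connected to mongos.

    `client` is assumed to be a pymongo.MongoClient (or any object with the
    same item access, update_one() and command() interface).
    """
    shard_key = shard_key or {"name": 1}
    # Shell: use config; db.settings.save({"_id": "chunksize", "value": 1})
    client["config"]["settings"].update_one(
        {"_id": "chunksize"}, {"$set": {"value": chunk_size_mb}}, upsert=True)
    # Shell: sh.enableSharding("user_center")
    client.admin.command("enableSharding", db_name)
    # Shell: sh.shardCollection("user_center.users", {"name": 1})
    client.admin.command("shardCollection", f"{db_name}.{coll_name}",
                         key=shard_key)
```

With a real cluster this would be called as `configure_sharding(pymongo.MongoClient("mongodb://...@192.168.11.10:27017/"))`, pointing at mongos rather than at a shard.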

Write a Python script to insert test data:

```python
# -*- coding: utf-8 -*-
import random
import string

import pymongo


def random_name():
    # 5 distinct ASCII letters; random.sample already returns them in random order
    return ''.join(random.sample(string.ascii_letters, 5))


def random_age():
    # Two distinct digits; a leading "0" gives a one-digit age
    return int(''.join(random.sample(string.digits, 2)))


def insert_data_to_mongo(url, dbname, collection_name):
    print(url)
    client = pymongo.MongoClient(url)
    collection = client[dbname][collection_name]
    for i in range(1, 100000):  # inserts 99,999 documents
        name = random_name()
        # Collection.insert() is deprecated in PyMongo 3 and removed in PyMongo 4
        collection.insert_one({"name": name, "age": random_age(), "status": "pending"})
        print("insert ", name)


if __name__ == "__main__":
    # Connect to mongos, never directly to a shard
    mongo_url = "mongodb://myUserAdmin:abc123@192.168.11.10:27017/?maxPoolSize=100&minPoolSize=10&maxIdleTimeMS=600000"
    mongo_db = "user_center"
    mongo_collections = "users"
    insert_data_to_mongo(mongo_url, mongo_db, mongo_collections)
```

After the inserts finish, check the cluster status again:

```
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5d0af6ed4fa51757cd032108")
  }
  shards:
    { "_id" : "shard1", "host" : "shard1/mongo1.example.net:27017,mongo2.example.net:27017", "state" : 1 }
    { "_id" : "shard2", "host" : "shard2/mongo2.example.net:27018,mongo3.example.net:27018", "state" : 1 }
  active mongoses:
    "4.0.10" : 1
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      3 : Success
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
      config.system.sessions
        shard key: { "_id" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1  1
        { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
    { "_id" : "user_center", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("33c79b3f-aa18-4755-a5e8-b8f7f3d05893"), "lastMod" : 1 } }
      user_center.users
        shard key: { "name" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1  9
          shard2  8
        { "name" : { "$minKey" : 1 } } -->> { "name" : "ABXEw" } on : shard1 Timestamp(2, 0)
        { "name" : "ABXEw" } -->> { "name" : "EKdCt" } on : shard1 Timestamp(3, 11)
        { "name" : "EKdCt" } -->> { "name" : "ITgcx" } on : shard1 Timestamp(3, 12)
        { "name" : "ITgcx" } -->> { "name" : "JKoOz" } on : shard1 Timestamp(3, 13)
        { "name" : "JKoOz" } -->> { "name" : "NSlcY" } on : shard1 Timestamp(4, 2)
        { "name" : "NSlcY" } -->> { "name" : "RbrAy" } on : shard1 Timestamp(4, 3)
        { "name" : "RbrAy" } -->> { "name" : "SQvZq" } on : shard1 Timestamp(4, 4)
        { "name" : "SQvZq" } -->> { "name" : "TxpPM" } on : shard1 Timestamp(3, 4)
        { "name" : "TxpPM" } -->> { "name" : "YEujn" } on : shard1 Timestamp(4, 0)
        { "name" : "YEujn" } -->> { "name" : "cOlra" } on : shard2 Timestamp(3, 9)
        { "name" : "cOlra" } -->> { "name" : "dFTNS" } on : shard2 Timestamp(3, 10)
        { "name" : "dFTNS" } -->> { "name" : "hLwFZ" } on : shard2 Timestamp(3, 14)
        { "name" : "hLwFZ" } -->> { "name" : "lVQzu" } on : shard2 Timestamp(3, 15)
        { "name" : "lVQzu" } -->> { "name" : "mNLGP" } on : shard2 Timestamp(3, 16)
        { "name" : "mNLGP" } -->> { "name" : "oILav" } on : shard2 Timestamp(3, 7)
        { "name" : "oILav" } -->> { "name" : "wJWQI" } on : shard2 Timestamp(4, 1)
        { "name" : "wJWQI" } -->> { "name" : { "$maxKey" : 1 } } on : shard2 Timestamp(3, 1)
```

As the output shows, the data is now spread across Shard1 and Shard2.

Next, add Shard3 to the cluster as well:

mongos> sh.addShard("shard3/mongo1.example.net:27019,mongo2.example.net:27019,mongo3.example.net:27019")在查看集群的状态:

```
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5d0af6ed4fa51757cd032108")
  }
  shards:
    { "_id" : "shard1", "host" : "shard1/mongo1.example.net:27017,mongo2.example.net:27017", "state" : 1 }
    { "_id" : "shard2", "host" : "shard2/mongo2.example.net:27018,mongo3.example.net:27018", "state" : 1 }
    { "_id" : "shard3", "host" : "shard3/mongo1.example.net:27019,mongo3.example.net:27019", "state" : 1 }
  active mongoses:
    "4.0.10" : 1
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      8 : Success
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
      config.system.sessions
        shard key: { "_id" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1  1
        { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
    { "_id" : "user_center", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("33c79b3f-aa18-4755-a5e8-b8f7f3d05893"), "lastMod" : 1 } }
      user_center.users
        shard key: { "name" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1  6
          shard2  6
          shard3  5
        { "name" : { "$minKey" : 1 } } -->> { "name" : "ABXEw" } on : shard3 Timestamp(5, 0)
        { "name" : "ABXEw" } -->> { "name" : "EKdCt" } on : shard3 Timestamp(7, 0)
        { "name" : "EKdCt" } -->> { "name" : "ITgcx" } on : shard3 Timestamp(9, 0)
        { "name" : "ITgcx" } -->> { "name" : "JKoOz" } on : shard1 Timestamp(9, 1)
        { "name" : "JKoOz" } -->> { "name" : "NSlcY" } on : shard1 Timestamp(4, 2)
        { "name" : "NSlcY" } -->> { "name" : "RbrAy" } on : shard1 Timestamp(4, 3)
        { "name" : "RbrAy" } -->> { "name" : "SQvZq" } on : shard1 Timestamp(4, 4)
        { "name" : "SQvZq" } -->> { "name" : "TxpPM" } on : shard1 Timestamp(5, 1)
        { "name" : "TxpPM" } -->> { "name" : "YEujn" } on : shard1 Timestamp(4, 0)
        { "name" : "YEujn" } -->> { "name" : "cOlra" } on : shard3 Timestamp(6, 0)
        { "name" : "cOlra" } -->> { "name" : "dFTNS" } on : shard3 Timestamp(8, 0)
        { "name" : "dFTNS" } -->> { "name" : "hLwFZ" } on : shard2 Timestamp(3, 14)
        { "name" : "hLwFZ" } -->> { "name" : "lVQzu" } on : shard2 Timestamp(3, 15)
        { "name" : "lVQzu" } -->> { "name" : "mNLGP" } on : shard2 Timestamp(3, 16)
        { "name" : "mNLGP" } -->> { "name" : "oILav" } on : shard2 Timestamp(8, 1)
        { "name" : "oILav" } -->> { "name" : "wJWQI" } on : shard2 Timestamp(4, 1)
        { "name" : "wJWQI" } -->> { "name" : { "$maxKey" : 1 } } on : shard2 Timestamp(6, 1)
```

Once Shard3 has joined, the balancer redistributes the chunks and part of the data moves to Shard3.

9. Backup and restore

Backup

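During the backup, the config server (Config Server) and the shard servers (Shard) must be locked.

Before backing up, check the total number of documents in the collection:

```
mongos> db.users.find().count()
99999
```

Then start the Python script from above again so that writes keep arriving during the backup; adding a `time.sleep()` call to its loop controls the insert rate.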

Stop the balancer from the mongos server:

```
mongos> sh.stopBalancer()
```

Lock the config server and each shard: log in to a Secondary member of the config server replica set and of each shard replica set, and run:

```
db.fsyncLock()
```

Start the backup:

```
mongodump -h mongo2.example.net --port 27027 --authenticationDatabase admin -u myUserAdmin -p abc123 -o /data/backup/config
mongodump -h mongo2.example.net --port 27017 --authenticationDatabase admin -u myUserAdmin -p abc123 -o /data/backup/shard1
mongodump -h mongo3.example.net --port 27018 --authenticationDatabase admin -u myUserAdmin -p abc123 -o /data/backup/shard2
mongodump -h mongo1.example.net --port 27019 --authenticationDatabase admin -u myUserAdmin -p abc123 -o /data/backup/shard3
```

Unlock the config server and each shard server (on the same Secondary members):

```
db.fsyncUnlock()
```

Re-enable the balancer in mongos:

```
sh.setBalancerState(true);
```

Because only Secondary members are locked, writes are not interrupted during the backup. After the backup, the count has grown:

```
mongos> db.users.find().count()
107874
```
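Since the Secondaries stay locked until `db.fsyncUnlock()` runs, a scripted version of this backup should make sure the unlock and the balancer restart happen even if one mongodump fails. A sketch of that ordering, under stated assumptions: the balancer and lock operations are passed in as callables (with PyMongo they could be `admin.command("fsync", lock=True)` and `admin.command("fsyncUnlock")` against each Secondary, plus the `balancerStop` / `balancerStart` admin commands on mongos), `backup_cluster` and `dump_argv` are hypothetical names, and `TARGETS` simply repeats the hosts, ports, and output directories of the mongodump commands above:

```python
import subprocess

# (host, port, output directory) per component, taken from the mongodump commands
TARGETS = {
    "config": ("mongo2.example.net", 27027, "/data/backup/config"),
    "shard1": ("mongo2.example.net", 27017, "/data/backup/shard1"),
    "shard2": ("mongo3.example.net", 27018, "/data/backup/shard2"),
    "shard3": ("mongo1.example.net", 27019, "/data/backup/shard3"),
}


def dump_argv(host, port, out_dir, user, password):
    """Build a mongodump argument list (passed without a shell, so no quoting issues)."""
    return ["mongodump", "-h", host, "--port", str(port),
            "--authenticationDatabase", "admin", "-u", user, "-p", password,
            "-o", out_dir]


def backup_cluster(stop_balancer, start_balancer, lock, unlock,
                   user, password, run=subprocess.run):
    """Stop balancer -> lock every target -> dump -> unlock -> start balancer."""
    stop_balancer()
    locked = []
    try:
        for name in TARGETS:
            lock(name)
            locked.append(name)
        for host, port, out_dir in TARGETS.values():
            run(dump_argv(host, port, out_dir, user, password), check=True)
    finally:
        # Always release whatever was locked and re-enable balancing
        for name in reversed(locked):
            unlock(name)
        start_balancer()
```

The `try`/`finally` is the main design point: a failed dump raises, but only after every locked member has been unlocked and the balancer turned back on.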

Restore

On the Primary of the Shard1 replica set, drop the `user_center` database:

```
shard1:PRIMARY> use user_center
switched to db user_center
shard1:PRIMARY> db.dropDatabase()
{
    "dropped" : "user_center",
    "ok" : 1,
    "operationTime" : Timestamp(1561022404, 2),
    "$gleStats" : {
        "lastOpTime" : {
            "ts" : Timestamp(1561022404, 2),
            "t" : NumberLong(2)
        },
        "electionId" : ObjectId("7fffffff0000000000000002")
    },
    "lastCommittedOpTime" : Timestamp(1561022404, 1),
    "$configServerState" : {
        "opTime" : {
            "ts" : Timestamp(1561022395, 1),
            "t" : NumberLong(2)
        }
    },
    "$clusterTime" : {
        "clusterTime" : Timestamp(1561022404, 2),
        "signature" : {
            "hash" : BinData(0,"GO1yQDvdZ6oJBXdvM94noPNnJTM="),
            "keyId" : NumberLong("6704442493062086684")
        }
    }
}
```

Then restore it from the backup just taken:

```
mongorestore -h mongo1.example.net --port 27017 --authenticationDatabase admin -u myUserAdmin -p abc123 -d user_center /data/backup/shard1/user_center
2019-06-20T17:20:34.325+0800 the --db and --collection args should only be used when restoring from a BSON file. Other uses are deprecated and will not exist in the future; use --nsInclude instead
2019-06-20T17:20:34.326+0800 building a list of collections to restore from /data/backup/shard1/user_center dir
2019-06-20T17:20:34.356+0800 reading metadata for user_center.users from /data/backup/shard1/user_center/users.metadata.json
2019-06-20T17:20:34.410+0800 restoring user_center.users from /data/backup/shard1/user_center/users.bson
2019-06-20T17:20:36.836+0800 restoring indexes for collection user_center.users from metadata
2019-06-20T17:20:37.093+0800 finished restoring user_center.users (30273 documents)
2019-06-20T17:20:37.093+0800 done
```

Restore the Shard2 and Shard3 data following the same steps.
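The log above warns that `-d` combined with a directory is deprecated in favour of `--nsInclude`. A hedged sketch of the same per-shard restore in that style, pointing mongorestore at the dump root and selecting the database with a namespace pattern; `restore_argv` is a hypothetical helper, and the Shard2/Shard3 targets are an assumption taken from the Primaries in the environment table (writes have to go to the Primary):

```python
# Primaries from the environment table (assumed unchanged since deployment)
RESTORE_TARGETS = {
    "shard1": ("mongo1.example.net", 27017),
    "shard2": ("mongo2.example.net", 27018),
    "shard3": ("mongo3.example.net", 27019),
}


def restore_argv(shard, db_name, user, password, backup_root="/data/backup"):
    """Build a mongorestore argv that selects one database with --nsInclude."""
    host, port = RESTORE_TARGETS[shard]
    return ["mongorestore", "-h", host, "--port", str(port),
            "--authenticationDatabase", "admin", "-u", user, "-p", password,
            "--nsInclude", f"{db_name}.*",
            # the dump root, not the per-database subdirectory used with -d
            f"{backup_root}/{shard}"]
```

Typed directly into a shell, the Shard1 variant would be `mongorestore -h mongo1.example.net --port 27017 --authenticationDatabase admin -u myUserAdmin -p abc123 --nsInclude 'user_center.*' /data/backup/shard1`, with the pattern quoted so the shell does not expand it.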

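Result after the restore:

```
mongos> db.users.find().count()
100013
```

The extra documents compared with the 99999 counted before the backup are probably the ones I inserted while the servers were locked.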
