共计 22460 个字符,预计需要花费 57 分钟才能阅读完成。

1. 什么是 Sqoop

Sqoop 即 SQL to Hadoop,是一款方便的在传统型数据库与 Hadoop 之间进行数据迁移的工具,充分利用 MapReduce 并行特点以批处理的方式加快数据传输,发展至今主要演化了二大版本,Sqoop1 和 Sqoop2。

Sqoop 工具是 hadoop 下连接关系型数据库和 Hadoop 的桥梁,支持关系型数据库和 hive、hdfs,hbase 之间数据的相互导入,可以使用全表导入和增量导入。

那么为什么选择 Sqoop 呢?

高效可控的利用资源,任务并行度,超时时间。数据类型映射与转化,可自动进行,用户也可自定义 支持多种主流数据库,MySQL,Oracle,SQL Server,DB2 等等

2.Sqoop1 和 Sqoop2 对比的异同之处

两个不同的版本,完全不兼容 版本号划分区别,Apache 版本:1.4.x(Sqoop1); 1.99.x(Sqoop2) CDH 版本 : Sqoop-1.4.3-cdh4(Sqoop1) ; Sqoop2-1.99.2-cdh4.5.0 (Sqoop2)Sqoop2 比 Sqoop1 的改进 引入 Sqoop server,集中化管理 connector 等 多种访问方式:CLI,Web UI,REST API 引入基于角色的安全机制

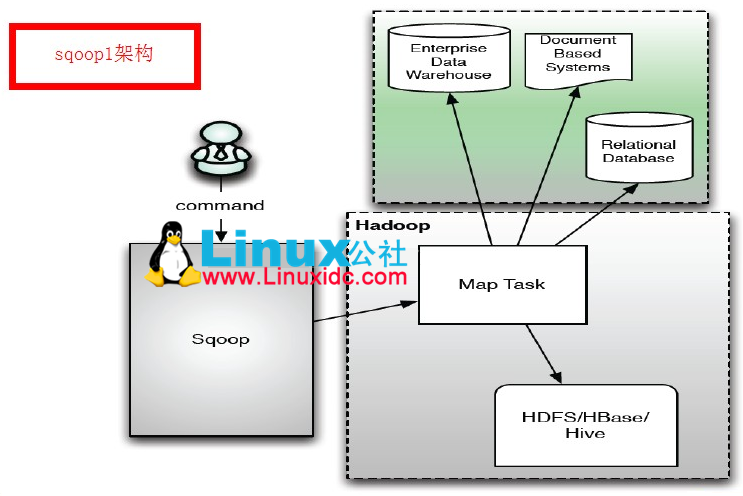

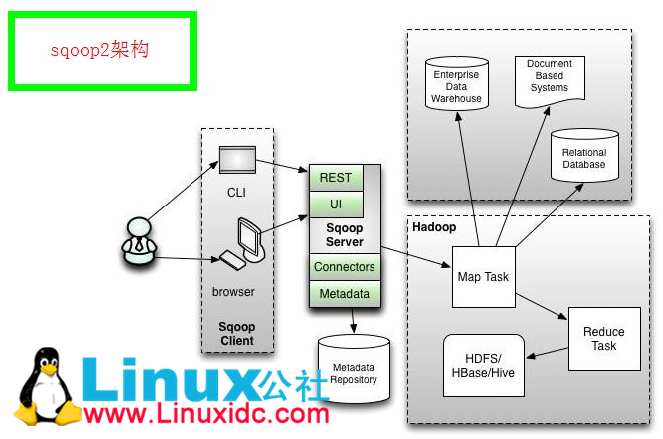

3.Sqoop1 与 Sqoop2 的架构图

Sqoop 架构图 1

Sqoop 架构图 2

通过 Sqoop 实现 Mysql / Oracle 与 HDFS / Hbase 互导数据 http://www.linuxidc.com/Linux/2013-06/85817.htm

[Hadoop] Sqoop 安装过程详解 http://www.linuxidc.com/Linux/2013-05/84082.htm

用 Sqoop 进行 MySQL 和 HDFS 系统间的数据互导 http://www.linuxidc.com/Linux/2013-04/83447.htm

Hadoop Oozie 学习笔记 Oozie 不支持 Sqoop 问题解决 http://www.linuxidc.com/Linux/2012-08/67027.htm

Hadoop 生态系统搭建(hadoop hive hbase zookeeper oozie Sqoop)http://www.linuxidc.com/Linux/2012-03/55721.htm

Hadoop 学习全程记录——使用 Sqoop 将 MySQL 中数据导入到 Hive 中 http://www.linuxidc.com/Linux/2012-01/51993.htm

4.Sqoop1 与 Sqoop2 的优缺点

比较 | Sqoop1 | Sqoop2 |

架构 | 仅仅使用一个 Sqoop 客户端 | 引入了 Sqoop server 集中化管理 connector,以及 rest api,web,UI,并引入权限安全机制 |

部署 | 部署简单,安装需要 root 权限,connector 必须符合 JDBC 模型 | 架构稍复杂,配置部署更繁琐 |

使用 | 命令行方式容易出错,格式紧耦合,无法支持所有数据类型,安全机制不够完善,例如密码暴漏 | 多种交互方式,命令行,web UI,rest API,conncetor 集中化管理,所有的链接安装在 Sqoop server 上,完善权限管理机制,connector 规范化,仅仅负责数据的读写 |

5.Sqoop1 的安装部署

5.0 安装环境

hadoop:hadoop-2.3.0-cdh5.1.2

sqoop:sqoop-1.4.4-cdh5.1.2

5.1 下载安装包及解压

tar -zxvf sqoop-1.4.4-cdh5.1.2.tar.gz

ln -s sqoop-1.4.4-cdh5.1.2 sqoop

5.2 配置环境变量和配置文件

cd sqoop/conf/

cat sqoop-env-template.sh >> sqoop-env.sh

vi sqoop-env.sh

在 sqoop-env.sh 中添加如下代码

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the “License”); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an “AS IS” BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# included in all the hadoop scripts with source command

# should not be executable directly

# also should not be passed any arguments, since we need original $*

# Set Hadoop-specific environment variables here.

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/home/hadoop/hadoop

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/home/hadoop/hadoop

#set the path to where bin/hbase is available

export HBASE_HOME=/home/hadoop/hbase

#Set the path to where bin/hive is available

export HIVE_HOME=/home/hadoop/hive

#Set the path for where zookeper config dir is

export ZOOCFGDIR=/home/hadoop/zookeeper

该配置文件中只有 HADOOP_COMMON_HOME 的配置是必须的 另外关于 hbase 和 hive 的配置 如果用到需要配置 不用的话就不用配置

更多详情见请继续阅读下一页的精彩内容:http://www.linuxidc.com/Linux/2014-10/108337p2.htm

5.3 添加需要的 jar 包到 lib 下面

这里的 jar 包指的是连接关系型数据库的 jar 比如 mysql Oracle 这些 jar 包是需要自己添加到 lib 目录下面去的

cp ~/hive/lib/mysql-connector-java-5.1.30.jar ~/sqoop/lib/

5.4 添加环境变量

vi ~/.profile

添加如下内容

export SQOOP_HOME=/home/Hadoop/sqoop

export SBT_HOME=/home/hadoop/sbt

export PATH=$PATH:$SBT_HOME/bin:$SQOOP_HOME/bin

export CLASSPATH=$CLASSPATH:$SQOOP_HOME/lib

source ~/.profile 使配置文件生效

5.5 测试 mysql 数据库的连接使用

①连接 mysql 数据库,列出所有的数据库

hadoop@linuxidc:~/sqoop/conf$ sqoop list-databases –connect jdbc:mysql://127.0.0.1:3306/ –username root -P

Warning: /home/hadoop/sqoop/../hive-hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

14/10/21 18:15:15 INFO sqoop.Sqoop: Running Sqoop version: 1.4.4-cdh5.1.2

Enter password:

14/10/21 18:15:19 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

information_schema

XINGXUNTONG

XINGXUNTONG_HIVE

amon

hive

hmon

mahout

mysql

oozie

performance_schema

realworld

rman

scm

smon

- P 表示输入密码 可以直接使用 –password 来制定密码

②mysql 数据库的表导入到 HDFS

hadoop@linuxidc:~/sqoop/conf$ sqoop import -m 1 –connect jdbc:mysql://127.0.0.1:3306/realworld –username root -P –table weblogs –target-dir /user/sqoop/test1

Warning: /home/hadoop/sqoop/../hive-hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

14/10/21 18:19:18 INFO sqoop.Sqoop: Running Sqoop version: 1.4.4-cdh5.1.2

Enter password:

14/10/21 18:19:21 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

14/10/21 18:19:21 INFO tool.CodeGenTool: Beginning code generation

14/10/21 18:19:22 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `weblogs` AS t LIMIT 1

14/10/21 18:19:22 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `weblogs` AS t LIMIT 1

14/10/21 18:19:22 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/hadoop

Note: /tmp/sqoop-hadoop/compile/15cb67e2b315154cdf02e3a17cf32bbe/weblogs.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

14/10/21 18:19:23 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/15cb67e2b315154cdf02e3a17cf32bbe/weblogs.jar

14/10/21 18:19:23 WARN manager.MySQLManager: It looks like you are importing from mysql.

14/10/21 18:19:23 WARN manager.MySQLManager: This transfer can be faster! Use the –direct

14/10/21 18:19:23 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

14/10/21 18:19:23 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

14/10/21 18:19:23 INFO mapreduce.ImportJobBase: Beginning import of weblogs

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.3.0-cdh5.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hbase-0.98.1-cdh5.1.2/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

14/10/21 18:19:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

14/10/21 18:19:24 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

14/10/21 18:19:25 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

14/10/21 18:19:25 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

14/10/21 18:19:40 INFO db.DBInputFormat: Using read commited transaction isolation

14/10/21 18:19:41 INFO mapreduce.JobSubmitter: number of splits:1

14/10/21 18:19:42 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1413879907572_0002

14/10/21 18:19:46 INFO impl.YarnClientImpl: Submitted application application_1413879907572_0002

14/10/21 18:19:46 INFO mapreduce.Job: The url to track the job: N/A

14/10/21 18:19:46 INFO mapreduce.Job: Running job: job_1413879907572_0002

14/10/21 18:20:12 INFO mapreduce.Job: Job job_1413879907572_0002 running in uber mode : false

14/10/21 18:20:12 INFO mapreduce.Job: map 0% reduce 0%

14/10/21 18:20:41 INFO mapreduce.Job: map 100% reduce 0%

14/10/21 18:20:45 INFO mapreduce.Job: Job job_1413879907572_0002 completed successfully

14/10/21 18:20:46 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=107189

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=87

HDFS: Number of bytes written=251130

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=22668

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=22668

Total vcore-seconds taken by all map tasks=22668

Total megabyte-seconds taken by all map tasks=23212032

Map-Reduce Framework

Map input records=3000

Map output records=3000

Input split bytes=87

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=41

CPU time spent (ms)=1540

Physical memory (bytes) snapshot=133345280

Virtual memory (bytes) snapshot=1201442816

Total committed heap usage (bytes)=76021760

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=251130

14/10/21 18:20:46 INFO mapreduce.ImportJobBase: Transferred 245.2441 KB in 80.7974 seconds (3.0353 KB/sec)

14/10/21 18:20:46 INFO mapreduce.ImportJobBase: Retrieved 3000 records.

-m 表示启动几个 map 任务来读取数据 如果数据库中的表没有主键这个参数是必须设置的而且只能设定为 1 否则会提示

14/10/21 18:18:27 ERROR tool.ImportTool: Error during import: No primary key could be found for table weblogs. Please specify one with –split-by or perform a sequential import with ‘-m 1’.

而这个参数设置为几会直接决定导入的文件在 hdfs 上面是分成几块的 比如 设置为 1 则会产生一个数据文件

14/10/21 18:23:54 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Found 2 items

-rw-r–r– 1 hadoop supergroup 0 2014-10-21 18:20 /user/sqoop/test1/_SUCCESS

-rw-r–r– 1 hadoop supergroup 251130 2014-10-21 18:20 /user/sqoop/test1/part-m-00000

这里添加主键:

mysql> desc weblogs;

+————–+————-+——+—–+———+——-+

| Field | Type | Null | Key | Default | Extra |

+————–+————-+——+—–+———+——-+

| md5 | varchar(32) | YES | | NULL | |

| url | varchar(64) | YES | | NULL | |

| request_date | date | YES | | NULL | |

| request_time | time | YES | | NULL | |

| ip | varchar(15) | YES | | NULL | |

+————–+————-+——+—–+———+——-+

5 rows in set (0.00 sec)

mysql> alter table weblogs add primary key(md5,ip);

Query OK, 3000 rows affected (1.60 sec)

Records: 3000 Duplicates: 0 Warnings: 0

mysql> desc weblogs;

+————–+————-+——+—–+———+——-+

| Field | Type | Null | Key | Default | Extra |

+————–+————-+——+—–+———+——-+

| md5 | varchar(32) | NO | PRI | | |

| url | varchar(64) | YES | | NULL | |

| request_date | date | YES | | NULL | |

| request_time | time | YES | | NULL | |

| ip | varchar(15) | NO | PRI | | |

+————–+————-+——+—–+———+——-+

5 rows in set (0.02 sec)

然后指定 -m

hadoop@linuxidc:~/sqoop/conf$ sqoop import -m 2 –connect jdbc:mysql://127.0.0.1:3306/realworld –username root -P –table weblogs –target-dir /user/sqoop/test2

Warning: /home/hadoop/sqoop/../hive-hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

14/10/21 18:22:40 INFO sqoop.Sqoop: Running Sqoop version: 1.4.4-cdh5.1.2

Enter password:

14/10/21 18:24:04 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

14/10/21 18:24:04 INFO tool.CodeGenTool: Beginning code generation

14/10/21 18:24:04 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `weblogs` AS t LIMIT 1

14/10/21 18:24:04 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `weblogs` AS t LIMIT 1

14/10/21 18:24:04 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/hadoop

Note: /tmp/sqoop-hadoop/compile/7061f445f29510afa2b89729126a57b9/weblogs.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

14/10/21 18:24:07 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/7061f445f29510afa2b89729126a57b9/weblogs.jar

14/10/21 18:24:07 WARN manager.MySQLManager: It looks like you are importing from mysql.

14/10/21 18:24:07 WARN manager.MySQLManager: This transfer can be faster! Use the –direct

14/10/21 18:24:07 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

14/10/21 18:24:07 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

14/10/21 18:24:07 ERROR tool.ImportTool: Error during import: No primary key could be found for table weblogs. Please specify one with –split-by or perform a sequential import with ‘-m 1’.

hadoop@linuxidc:~/sqoop/conf$ sqoop import -m 2 –connect jdbc:mysql://127.0.0.1:3306/realworld –username root -P –table weblogs –target-dir /user/sqoop/test2

Warning: /home/hadoop/sqoop/../hive-hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

14/10/21 18:30:04 INFO sqoop.Sqoop: Running Sqoop version: 1.4.4-cdh5.1.2

Enter password:

14/10/21 18:30:07 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

14/10/21 18:30:07 INFO tool.CodeGenTool: Beginning code generation

14/10/21 18:30:07 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `weblogs` AS t LIMIT 1

14/10/21 18:30:07 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `weblogs` AS t LIMIT 1

14/10/21 18:30:07 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /home/hadoop/hadoop

Note: /tmp/sqoop-hadoop/compile/6dbf2401c1a51b81c5b885e6f7d43137/weblogs.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

14/10/21 18:30:09 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-hadoop/compile/6dbf2401c1a51b81c5b885e6f7d43137/weblogs.jar

14/10/21 18:30:09 WARN manager.MySQLManager: It looks like you are importing from mysql.

14/10/21 18:30:09 WARN manager.MySQLManager: This transfer can be faster! Use the –direct

14/10/21 18:30:09 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

14/10/21 18:30:09 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

14/10/21 18:30:09 WARN manager.CatalogQueryManager: The table weblogs contains a multi-column primary key. Sqoop will default to the column md5 only for this job.

14/10/21 18:30:09 WARN manager.CatalogQueryManager: The table weblogs contains a multi-column primary key. Sqoop will default to the column md5 only for this job.

14/10/21 18:30:09 INFO mapreduce.ImportJobBase: Beginning import of weblogs

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/hadoop-2.3.0-cdh5.1.2/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/hbase-0.98.1-cdh5.1.2/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

14/10/21 18:30:09 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

14/10/21 18:30:09 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

14/10/21 18:30:10 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

14/10/21 18:30:10 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

14/10/21 18:30:17 INFO db.DBInputFormat: Using read commited transaction isolation

14/10/21 18:30:17 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`md5`), MAX(`md5`) FROM `weblogs`

14/10/21 18:30:17 WARN db.TextSplitter: Generating splits for a textual index column.

14/10/21 18:30:17 WARN db.TextSplitter: If your database sorts in a case-insensitive order, this may result in a partial import or duplicate records.

14/10/21 18:30:17 WARN db.TextSplitter: You are strongly encouraged to choose an integral split column.

14/10/21 18:30:18 INFO mapreduce.JobSubmitter: number of splits:4

14/10/21 18:30:18 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1413879907572_0003

14/10/21 18:30:19 INFO impl.YarnClientImpl: Submitted application application_1413879907572_0003

14/10/21 18:30:19 INFO mapreduce.Job: The url to track the job: N/A

14/10/21 18:30:19 INFO mapreduce.Job: Running job: job_1413879907572_0003

14/10/21 18:30:32 INFO mapreduce.Job: Job job_1413879907572_0003 running in uber mode : false

14/10/21 18:30:32 INFO mapreduce.Job: map 0% reduce 0%

14/10/21 18:31:12 INFO mapreduce.Job: map 50% reduce 0%

14/10/21 18:31:13 INFO mapreduce.Job: map 75% reduce 0%

14/10/21 18:31:15 INFO mapreduce.Job: map 100% reduce 0%

14/10/21 18:31:21 INFO mapreduce.Job: Job job_1413879907572_0003 completed successfully

14/10/21 18:31:22 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=429312

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=532

HDFS: Number of bytes written=251209

HDFS: Number of read operations=16

HDFS: Number of large read operations=0

HDFS: Number of write operations=8

Job Counters

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=160326

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=160326

Total vcore-seconds taken by all map tasks=160326

Total megabyte-seconds taken by all map tasks=164173824

Map-Reduce Framework

Map input records=3001

Map output records=3001

Input split bytes=532

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=806

CPU time spent (ms)=5450

Physical memory (bytes) snapshot=494583808

Virtual memory (bytes) snapshot=4805771264

Total committed heap usage (bytes)=325058560

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=251209

14/10/21 18:31:22 INFO mapreduce.ImportJobBase: Transferred 245.3213 KB in 72.5455 seconds (3.3816 KB/sec)

这里产生的文件跟主键的字段个数以及 - m 的参数是相关的 大致是 - m 的值乘以主键字段数,有待考证

hadoop@linuxidc:~/study/cdh5$ hadoop fs -ls /user/sqoop/test2/

14/10/21 18:32:01 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform… using builtin-java classes where applicable

Found 5 items

-rw-r–r– 1 hadoop supergroup 0 2014-10-21 18:31 /user/sqoop/test2/_SUCCESS

-rw-r–r– 1 hadoop supergroup 0 2014-10-21 18:31 /user/sqoop/test2/part-m-00000

-rw-r–r– 1 hadoop supergroup 251130 2014-10-21 18:31 /user/sqoop/test2/part-m-00001

-rw-r–r– 1 hadoop supergroup 0 2014-10-21 18:31 /user/sqoop/test2/part-m-00002

-rw-r–r– 1 hadoop supergroup 79 2014-10-21 18:31 /user/sqoop/test2/part-m-00003

这里的主键设计的不合理导致数据分布不均匀~~ 有待改进

③数据导出 Oracle 和 HBase

使用 export 可将 hdfs 中数据导入到远程数据库中

export –connect jdbc:oracle:thin:@192.168.**.**:**:**–username **–password=** -m1table VEHICLE–export-dir /user/root/VEHICLE

向 Hbase 导入数据

sqoop import –connect jdbc:oracle:thin:@192.168.**.**:**:**–username**–password=**–m 1 –table VEHICLE –hbase-create-table –hbase-table VEHICLE–hbase-row-key ID –column-family VEHICLEINFO –split-by ID

5.6 测试 Mysql 数据库的使用

前提:导入 mysql jdbc 的 jar 包

①测试数据库连接

sqoop list-databases –connect jdbc:mysql://192.168.10.63 –username root–password 123456

②Sqoop 的使用

以下所有的命令每行之后都存在一个空格,不要忘记

(以下 6 中命令都没有进行过成功测试)

<1>mysql–>hdfs

sqoop export –connect

jdbc:mysql://192.168.10.63/ipj

–username root

–password 123456

–table ipj_flow_user

–export-dir hdfs://192.168.10.63:8020/user/flow/part-m-00000

前提:

(1)hdfs 中目录 /user/flow/part-m-00000 必须存在

(2) 如果集群设置了压缩方式 lzo,那么本机必须得安装且配置成功 lzo

(3)hadoop 集群中每个节点都要有对 mysql 的操作权限

<2>hdfs–>mysql

sqoop import –connect

jdbc:mysql://192.168.10.63/ipj

–table ipj_flow_user

<3>mysql–>hbase

sqoop import –connect

jdbc:mysql://192.168.10.63/ipj

–table ipj_flow_user

–hbase-table ipj_statics_test

–hbase-create-table

–hbase-row-key id

–column-family imei

<4>hbase–>mysql

关于将 Hbase 的数据导入到 mysql 里,Sqoop 并不是直接支持的,一般采用如下 3 种方法:

第一种:将 Hbase 数据扁平化成 HDFS 文件,然后再由 Sqoop 导入.

第二种:将 Hbase 数据导入 Hive 表中,然后再导入 mysql。

第三种:直接使用 Hbase 的 Java API 读取表数据,直接向 mysql 导入

不需要使用 Sqoop。

<5>mysql–>hive

sqoop import –connect

jdbc:mysql://192.168.10.63/ipj

–table hive_table_test

–hive-import

–hive-table hive_test_table 或–create-hive-table hive_test_table

<6>hive–>mysql

sqoop export –connect

jdbc:mysql://192.168.10.63/ipj

–username hive

–password 123456

–table target_table

–export-dir /user/hive/warehouse/uv/dt=mytable

前提:mysql 中表必须存在

③Sqoop 其他操作

<1> 列出 mysql 中的所有数据库

sqoop list-databases –connect jdbc:mysql://192.168.10.63:3306/ –usernameroot –password 123456

<2> 列出 mysql 中某个库下所有表

sqoop list-tables –connect jdbc:mysql://192.168.10.63:3306/ipj –usernameroot –password 123456

6 Sqoop1 的性能

测试数据:

表名:tb_keywords

行数:11628209

数据文件大小:1.4G

测试结果:

HDFS—>DB | HDFS<—DB | |

Sqoop | 428s | 166s |

HDFS<->FILE<->DB | 209s | 105s |

从结果上来看,以 FILE 作为中转方式性能是要高于 SQOOP 的, 原因如下:

本质上 SQOOP 使用的是 JDBC,效率不会比 MYSQL 自带的导入 \ 导出工具效率高以导入数据到 DB 为例,SQOOP 的设计思想是分阶段提交,也就是说假设一个表有 1K 行,那么它会先读出 100 行(默认值),然后插入,提交,再读取 100 行……如此往复

即便如此,SQOOP 也是有优势的,比如说使用的便利性,任务执行的容错性等。在一些测试环境中如果需要的话可以考虑把它拿来作为一个工具使用。

Sqoop 的详细介绍:请点这里

Sqoop 的下载地址:请点这里

1. 什么是 Sqoop

Sqoop 即 SQL to Hadoop,是一款方便的在传统型数据库与 Hadoop 之间进行数据迁移的工具,充分利用 MapReduce 并行特点以批处理的方式加快数据传输,发展至今主要演化了二大版本,Sqoop1 和 Sqoop2。

Sqoop 工具是 hadoop 下连接关系型数据库和 Hadoop 的桥梁,支持关系型数据库和 hive、hdfs,hbase 之间数据的相互导入,可以使用全表导入和增量导入。

那么为什么选择 Sqoop 呢?

高效可控的利用资源,任务并行度,超时时间。数据类型映射与转化,可自动进行,用户也可自定义 支持多种主流数据库,MySQL,Oracle,SQL Server,DB2 等等

2.Sqoop1 和 Sqoop2 对比的异同之处

两个不同的版本,完全不兼容 版本号划分区别,Apache 版本:1.4.x(Sqoop1); 1.99.x(Sqoop2) CDH 版本 : Sqoop-1.4.3-cdh4(Sqoop1) ; Sqoop2-1.99.2-cdh4.5.0 (Sqoop2)Sqoop2 比 Sqoop1 的改进 引入 Sqoop server,集中化管理 connector 等 多种访问方式:CLI,Web UI,REST API 引入基于角色的安全机制

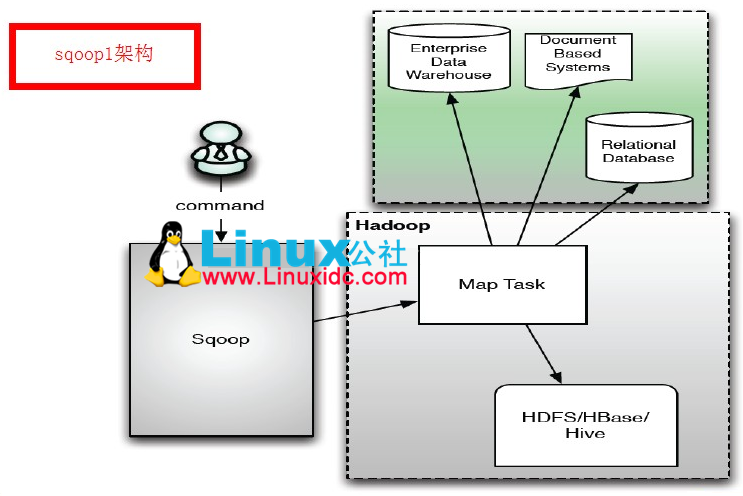

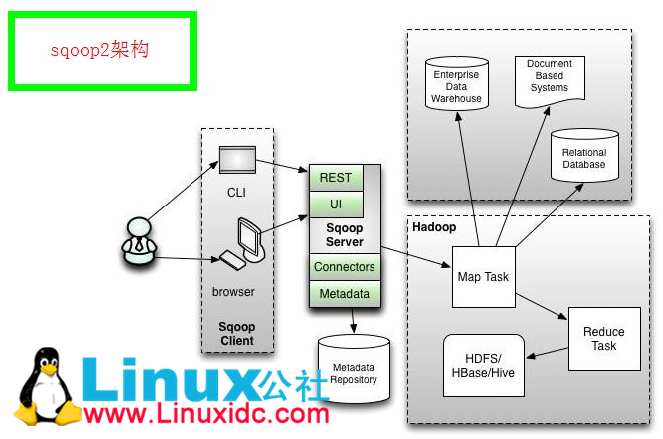

3.Sqoop1 与 Sqoop2 的架构图

Sqoop 架构图 1

Sqoop 架构图 2

通过 Sqoop 实现 Mysql / Oracle 与 HDFS / Hbase 互导数据 http://www.linuxidc.com/Linux/2013-06/85817.htm

[Hadoop] Sqoop 安装过程详解 http://www.linuxidc.com/Linux/2013-05/84082.htm

用 Sqoop 进行 MySQL 和 HDFS 系统间的数据互导 http://www.linuxidc.com/Linux/2013-04/83447.htm

Hadoop Oozie 学习笔记 Oozie 不支持 Sqoop 问题解决 http://www.linuxidc.com/Linux/2012-08/67027.htm

Hadoop 生态系统搭建(hadoop hive hbase zookeeper oozie Sqoop)http://www.linuxidc.com/Linux/2012-03/55721.htm

Hadoop 学习全程记录——使用 Sqoop 将 MySQL 中数据导入到 Hive 中 http://www.linuxidc.com/Linux/2012-01/51993.htm

4.Sqoop1 与 Sqoop2 的优缺点

比较 | Sqoop1 | Sqoop2 |

架构 | 仅仅使用一个 Sqoop 客户端 | 引入了 Sqoop server 集中化管理 connector,以及 rest api,web,UI,并引入权限安全机制 |

部署 | 部署简单,安装需要 root 权限,connector 必须符合 JDBC 模型 | 架构稍复杂,配置部署更繁琐 |

使用 | 命令行方式容易出错,格式紧耦合,无法支持所有数据类型,安全机制不够完善,例如密码暴漏 | 多种交互方式,命令行,web UI,rest API,conncetor 集中化管理,所有的链接安装在 Sqoop server 上,完善权限管理机制,connector 规范化,仅仅负责数据的读写 |

5.Sqoop1 的安装部署

5.0 安装环境

hadoop:hadoop-2.3.0-cdh5.1.2

sqoop:sqoop-1.4.4-cdh5.1.2

5.1 下载安装包及解压

tar -zxvf sqoop-1.4.4-cdh5.1.2.tar.gz

ln -s sqoop-1.4.4-cdh5.1.2 sqoop

5.2 配置环境变量和配置文件

cd sqoop/conf/

cat sqoop-env-template.sh >> sqoop-env.sh

vi sqoop-env.sh

在 sqoop-env.sh 中添加如下代码

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the “License”); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an “AS IS” BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# included in all the hadoop scripts with source command

# should not be executable directly

# also should not be passed any arguments, since we need original $*

# Set Hadoop-specific environment variables here.

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/home/hadoop/hadoop

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/home/hadoop/hadoop

#set the path to where bin/hbase is available

export HBASE_HOME=/home/hadoop/hbase

#Set the path to where bin/hive is available

export HIVE_HOME=/home/hadoop/hive

#Set the path for where zookeper config dir is

export ZOOCFGDIR=/home/hadoop/zookeeper

该配置文件中只有 HADOOP_COMMON_HOME 的配置是必须的 另外关于 hbase 和 hive 的配置 如果用到需要配置 不用的话就不用配置

更多详情见请继续阅读下一页的精彩内容:http://www.linuxidc.com/Linux/2014-10/108337p2.htm