
For installing and configuring Hadoop, see my earlier article: Setting Up a Hadoop 2.6.0 Pseudo-Distributed Environment in a Win7 Virtual Machine http://www.linuxidc.com/Linux/2015-08/120942.htm.

This article shows how to set up a single-machine Spark 1.4.0 environment on top of Hadoop 2.6.0.

1. Software preparation

scala-2.11.7.tgz

spark-1.4.0-bin-hadoop2.6.tgz

Both packages can be downloaded from their official websites.

2. Installing and configuring Scala

Installing scala-2.11.7.tgz only requires extracting it. I extracted it to /home/vm/tools/scala and then added the environment variables to ~/.bash_profile:

```shell
#scala
export SCALA_HOME=/home/vm/tools/scala
export PATH=$SCALA_HOME/bin:$PATH
```

Run `source ~/.bash_profile` to apply the changes.
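Adding these lines by hand more than once, or re-running a setup script, leaves duplicate entries in ~/.bash_profile. Below is a minimal bash sketch of an idempotent append. The function name `add_home_to_profile` and its argument order are hypothetical helpers of my own, not part of Scala or Spark.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "export VAR=DIR" plus a PATH entry for DIR/bin
# to a profile file, unless an "export VAR=" line is already present.
# Usage: add_home_to_profile PROFILE_FILE VAR_NAME INSTALL_DIR
add_home_to_profile() {
  local profile="$1" var="$2" dir="$3"
  touch "$profile" || return 1
  # Already configured: leave the file untouched.
  if grep -q "^export ${var}=" "$profile"; then
    return 0
  fi
  # The format string is single-quoted, so "$PATH" is written literally and
  # only expands when the profile is sourced.
  printf '# %s\nexport %s=%s\nexport PATH=$%s/bin:$PATH\n' \
    "$var" "$var" "$dir" "$var" >> "$profile"
}
```

You can run `add_home_to_profile ~/.bash_profile SCALA_HOME /home/vm/tools/scala` repeatedly and still get exactly one block for that variable. The same applies to `SPARK_HOME` with `/home/vm/tools/spark` in the Spark section. You still need to run `source ~/.bash_profile` afterwards.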

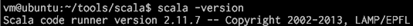

Verify that Scala is installed correctly:

Using Scala interactively:
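The installation check can also be scripted. The bash sketch below assumes the profile has already been sourced. It confirms three things about `$SCALA_HOME/bin/scala`: the file exists, it is executable, and it is the `scala` that PATH actually resolves to, so another Scala install cannot silently shadow it. The function name `check_tool_on_path` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: HOME_DIR/bin/TOOL must exist, be executable, and be the
# copy that PATH resolves TOOL to. Prints a short diagnosis; 0 means success.
# Usage: check_tool_on_path HOME_DIR TOOL
check_tool_on_path() {
  local home="$1" tool="$2"
  local expected="${home}/bin/${tool}" found
  if [ ! -x "$expected" ]; then
    echo "missing or not executable: $expected"
    return 1
  fi
  found=$(command -v "$tool") || { echo "$tool is not on PATH"; return 1; }
  if [ "$found" != "$expected" ]; then
    echo "PATH resolves $tool to $found, expected $expected"
    return 1
  fi
  echo "ok: $found"
}
```

For example, run `check_tool_on_path "$SCALA_HOME" scala`. The same call with `"$SPARK_HOME" spark-shell` works once Spark is configured.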

3. Installing and configuring Spark

Extract spark-1.4.0-bin-hadoop2.6.tgz to /home/vm/tools/spark, then add the environment variables to ~/.bash_profile:

```shell
#spark
export SPARK_HOME=/home/vm/tools/spark
export PATH=$SPARK_HOME/bin:$PATH
```

Edit $SPARK_HOME/conf/spark-env.sh:

```shell
export SPARK_HOME=/home/vm/tools/spark
export SCALA_HOME=/home/vm/tools/scala
export JAVA_HOME=/home/vm/tools/jdk
export SPARK_MASTER_IP=192.168.62.129
export SPARK_WORKER_MEMORY=512m
```
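The binary package ships template files in conf/, such as spark-env.sh.template, spark-defaults.conf.template and slaves.template, rather than the real files. The real files therefore usually have to be created first. Below is a bash sketch that assumes the paths used in this article. The function name `init_spark_env` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create conf/spark-env.sh from the shipped template when
# it does not exist yet, then append the settings shown above.
# SCALA_HOME and JAVA_HOME are this article's paths; adjust them as needed.
# Usage: init_spark_env SPARK_HOME MASTER_IP
init_spark_env() {
  local home="$1" ip="$2"
  local env_file="$home/conf/spark-env.sh"
  if [ ! -f "$env_file" ]; then
    cp "$home/conf/spark-env.sh.template" "$env_file" || return 1
  fi
  # Do not append a second copy when the function is re-run.
  if grep -q '^export SPARK_MASTER_IP=' "$env_file"; then
    return 0
  fi
  cat >> "$env_file" <<EOF
export SPARK_HOME=$home
export SCALA_HOME=/home/vm/tools/scala
export JAVA_HOME=/home/vm/tools/jdk
export SPARK_MASTER_IP=$ip
export SPARK_WORKER_MEMORY=512m
EOF
}
```

In this setup the call would be `init_spark_env /home/vm/tools/spark 192.168.62.129`.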

Edit $SPARK_HOME/conf/spark-defaults.conf:

```
spark.master            spark://192.168.62.129:7077
spark.serializer        org.apache.spark.serializer.KryoSerializer
```
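spark-defaults.conf consists of whitespace-separated `key value` lines, and the template contains commented-out examples. A naive append can therefore leave two active `spark.master` lines. Below is a bash/awk sketch that replaces an existing key or appends it. The function name `set_spark_default` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY to VALUE in a spark-defaults.conf-style file.
# An uncommented line whose first field is KEY is replaced (extra duplicates
# are dropped); commented lines are kept; otherwise the pair is appended.
# Usage: set_spark_default FILE KEY VALUE
set_spark_default() {
  local file="$1" key="$2" value="$3" tmp
  touch "$file" || return 1
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    $1 == k { if (!done) print k "  " v; done = 1; next }
    { print }
    END { if (!done) print k "  " v }
  ' "$file" > "$tmp" && mv "$tmp" "$file"
}
```

Usage: `set_spark_default "$SPARK_HOME/conf/spark-defaults.conf" spark.master spark://192.168.62.129:7077`.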

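Edit $SPARK_HOME/conf/slaves, which lists the hosts that should run a Worker. On this single machine it contains only the machine's own address (192.168.62.129 is the IP address of my test machine):

```
192.168.62.129
```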
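The address 192.168.62.129 now appears in both spark-env.sh (SPARK_MASTER_IP) and spark-defaults.conf (spark.master). If the VM's address changes, for instance after a DHCP renewal, both files have to be updated together. Below is a bash sketch of a consistency check. The function name is hypothetical, and it only looks at the last matching line in each file.

```shell
#!/usr/bin/env bash
# Hypothetical consistency check: SPARK_MASTER_IP in spark-env.sh should match
# the host part of spark.master (spark://HOST:PORT) in spark-defaults.conf.
# Usage: check_master_ip CONF_DIR
check_master_ip() {
  local conf="$1" env_ip url_ip
  env_ip=$(sed -n 's/^export SPARK_MASTER_IP=//p' "$conf/spark-env.sh" | tail -n1)
  # Strip the "spark://" prefix and the ":PORT" suffix to keep only HOST.
  url_ip=$(awk '$1 == "spark.master" { print $2 }' "$conf/spark-defaults.conf" \
           | tail -n1 | sed -e 's#^spark://##' -e 's#:[0-9]*$##')
  if [ -z "$env_ip" ] || [ "$env_ip" != "$url_ip" ]; then
    echo "mismatch: SPARK_MASTER_IP='$env_ip', spark.master host='$url_ip'"
    return 1
  fi
  echo "ok: $env_ip"
}
```

Usage: `check_master_ip "$SPARK_HOME/conf"`.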

Start Spark:

```shell
cd /home/vm/tools/spark/sbin
sh start-all.sh
```
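start-all.sh starts a standalone Master on this machine and a Worker on each host listed in conf/slaves. The JDK's `jps` tool lists running JVMs by main class name. The two Spark daemons appear there as `Master` and `Worker`, next to any Hadoop daemons. Below is a small bash sketch that checks for both. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: read `jps` output ("PID MainClass" per line) on stdin and
# confirm that both standalone daemons, Master and Worker, are listed.
# Usage: jps | check_spark_daemons
check_spark_daemons() {
  local procs d missing=""
  procs=$(awk '{ print $2 }')
  for d in Master Worker; do
    if ! printf '%s\n' "$procs" | grep -qx "$d"; then
      missing="$missing $d"
    fi
  done
  if [ -n "$missing" ]; then
    echo "not running:$missing"
    return 1
  fi
  echo "ok: Master and Worker are running"
}
```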

Check that Spark works:

```shell
cd $SPARK_HOME/bin/
./run-example SparkPi
```
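For a scripted smoke test, only the result line matters. As far as I can tell, Spark's default log4j profile sends the INFO lines to stderr while `Pi is roughly ...` goes to stdout, so `2>/dev/null` leaves just the result. SparkPi is a Monte Carlo estimate, so the number varies from run to run. Below is a bash/awk sketch. The function name and its default tolerance of 0.05 are arbitrary choices of mine.

```shell
#!/usr/bin/env bash
# Hypothetical check: read SparkPi output on stdin, take the number from the
# "Pi is roughly X" line and require |X - pi| <= TOL (default 0.05).
# Usage: ./run-example SparkPi 2>/dev/null | check_pi_estimate [TOL]
check_pi_estimate() {
  local tol="${1:-0.05}"
  awk -v tol="$tol" '
    /^Pi is roughly / { est = $4; found = 1 }
    END {
      if (!found) { print "no estimate found"; exit 1 }
      pi = atan2(0, -1)    # awk has no built-in pi constant
      diff = est - pi
      if (diff < 0) diff = -diff
      printf "estimate %s, error %.5f\n", est, diff
      if (diff <= tol) exit 0
      exit 1
    }'
}
```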

The SparkPi execution log:

```
vm@Ubuntu:~/tools/spark/bin$ ./run-example SparkPi
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
15/07/29 00:02:32 INFO SparkContext: Running Spark version 1.4.0
15/07/29 00:02:33 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
15/07/29 00:02:34 INFO SecurityManager: Changing view acls to: vm
15/07/29 00:02:34 INFO SecurityManager: Changing modify acls to: vm
15/07/29 00:02:34 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(vm); users with modify permissions: Set(vm)
15/07/29 00:02:35 INFO Slf4jLogger: Slf4jLogger started
15/07/29 00:02:35 INFO Remoting: Starting remoting
15/07/29 00:02:36 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@192.168.62.129:34337]
15/07/29 00:02:36 INFO Utils: Successfully started service 'sparkDriver' on port 34337.
15/07/29 00:02:36 INFO SparkEnv: Registering MapOutputTracker
15/07/29 00:02:36 INFO SparkEnv: Registering BlockManagerMaster
15/07/29 00:02:36 INFO DiskBlockManager: Created local directory at /tmp/spark-78277899-e4c4-4dcc-8c16-f46fce5e657d/blockmgr-be03da6d-31fe-43dd-959c-6cfa4307b269
15/07/29 00:02:36 INFO MemoryStore: MemoryStore started with capacity 267.3 MB
15/07/29 00:02:36 INFO HttpFileServer: HTTP File server directory is /tmp/spark-78277899-e4c4-4dcc-8c16-f46fce5e657d/httpd-fdc26a4d-c0b6-4fc9-9dee-fb085191ee5a
15/07/29 00:02:36 INFO HttpServer: Starting HTTP Server
15/07/29 00:02:37 INFO Utils: Successfully started service 'HTTP file server' on port 56880.
15/07/29 00:02:37 INFO SparkEnv: Registering OutputCommitCoordinator
15/07/29 00:02:37 INFO Utils: Successfully started service 'SparkUI' on port 4040.
15/07/29 00:02:37 INFO SparkUI: Started SparkUI at http://192.168.62.129:4040
15/07/29 00:02:40 INFO SparkContext: Added JAR file:/home/vm/tools/spark/lib/spark-examples-1.4.0-hadoop2.6.0.jar at http://192.168.62.129:56880/jars/spark-examples-1.4.0-hadoop2.6.0.jar with timestamp 1438099360726
15/07/29 00:02:41 INFO Executor: Starting executor ID driver on host localhost
15/07/29 00:02:41 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 44722.
15/07/29 00:02:41 INFO NettyBlockTransferService: Server created on 44722
15/07/29 00:02:41 INFO BlockManagerMaster: Trying to register BlockManager
15/07/29 00:02:41 INFO BlockManagerMasterEndpoint: Registering block manager localhost:44722 with 267.3 MB RAM, BlockManagerId(driver, localhost, 44722)
15/07/29 00:02:41 INFO BlockManagerMaster: Registered BlockManager
15/07/29 00:02:43 INFO SparkContext: Starting job: reduce at SparkPi.scala:35
15/07/29 00:02:43 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:35) with 2 output partitions (allowLocal=false)
15/07/29 00:02:43 INFO DAGScheduler: Final stage: ResultStage 0(reduce at SparkPi.scala:35)
15/07/29 00:02:43 INFO DAGScheduler: Parents of final stage: List()
15/07/29 00:02:43 INFO DAGScheduler: Missing parents: List()
15/07/29 00:02:43 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:31), which has no missing parents
15/07/29 00:02:44 INFO MemoryStore: ensureFreeSpace(1888) called with curMem=0, maxMem=280248975
15/07/29 00:02:44 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1888.0 B, free 267.3 MB)
15/07/29 00:02:44 INFO MemoryStore: ensureFreeSpace(1186) called with curMem=1888, maxMem=280248975
15/07/29 00:02:44 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1186.0 B, free 267.3 MB)
15/07/29 00:02:44 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on localhost:44722 (size: 1186.0 B, free: 267.3 MB)
15/07/29 00:02:44 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:874
15/07/29 00:02:44 INFO DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:31)
15/07/29 00:02:44 INFO TaskSchedulerImpl: Adding task set 0.0 with 2 tasks
15/07/29 00:02:45 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, PROCESS_LOCAL, 1344 bytes)
15/07/29 00:02:45 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, PROCESS_LOCAL, 1346 bytes)
15/07/29 00:02:45 INFO Executor: Running task 1.0 in stage 0.0 (TID 1)
15/07/29 00:02:45 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)
15/07/29 00:02:45 INFO Executor: Fetching http://192.168.62.129:56880/jars/spark-examples-1.4.0-hadoop2.6.0.jar with timestamp 1438099360726
15/07/29 00:02:45 INFO Utils: Fetching http://192.168.62.129:56880/jars/spark-examples-1.4.0-hadoop2.6.0.jar to /tmp/spark-78277899-e4c4-4dcc-8c16-f46fce5e657d/userFiles-27c8dd76-e417-4d13-9bfd-a978cbbaacd1/fetchFileTemp5302506499464337647.tmp
15/07/29 00:02:47 INFO Executor: Adding file:/tmp/spark-78277899-e4c4-4dcc-8c16-f46fce5e657d/userFiles-27c8dd76-e417-4d13-9bfd-a978cbbaacd1/spark-examples-1.4.0-hadoop2.6.0.jar to class loader
15/07/29 00:02:47 INFO Executor: Finished task 1.0 in stage 0.0 (TID 1). 582 bytes result sent to driver
15/07/29 00:02:47 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 582 bytes result sent to driver
15/07/29 00:02:47 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 2641 ms on localhost (1/2)
15/07/29 00:02:47 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 2718 ms on localhost (2/2)
15/07/29 00:02:47 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:35) finished in 2.817 s
15/07/29 00:02:47 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
15/07/29 00:02:47 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:35, took 4.244145 s
Pi is roughly 3.14622
15/07/29 00:02:47 INFO SparkUI: Stopped Spark web UI at http://192.168.62.129:4040
15/07/29 00:02:47 INFO DAGScheduler: Stopping DAGScheduler
15/07/29 00:02:48 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
15/07/29 00:02:48 INFO Utils: path = /tmp/spark-78277899-e4c4-4dcc-8c16-f46fce5e657d/blockmgr-be03da6d-31fe-43dd-959c-6cfa4307b269, already present as root for deletion.
15/07/29 00:02:48 INFO MemoryStore: MemoryStore cleared
15/07/29 00:02:48 INFO BlockManager: BlockManager stopped
15/07/29 00:02:48 INFO BlockManagerMaster: BlockManagerMaster stopped
15/07/29 00:02:48 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
15/07/29 00:02:48 INFO SparkContext: Successfully stopped SparkContext
15/07/29 00:02:48 INFO Utils: Shutdown hook called
15/07/29 00:02:48 INFO Utils: Deleting directory /tmp/spark-78277899-e4c4-4dcc-8c16-f46fce5e657d
```
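Note the line `Starting executor ID driver on host localhost` in this log. It indicates that SparkPi ran in local mode inside the driver JVM, not on the standalone Master started above. As far as I know, Spark 1.x's bin/run-example takes its master from the MASTER environment variable and falls back to `local[*]` when MASTER is unset. Running something like `MASTER=spark://192.168.62.129:7077 ./run-example SparkPi` should therefore exercise the cluster instead. Below is a bash sketch that flags local-mode runs in a saved log. The function name is hypothetical, and it only recognizes the local-mode line shown above.

```shell
#!/usr/bin/env bash
# Hypothetical check: classify a Spark driver log read from stdin. The line
# "Starting executor ID driver on host ..." is logged when the executor runs
# inside the driver JVM, i.e. in local mode.
# Usage: ./run-example SparkPi 2>&1 | spark_log_mode
spark_log_mode() {
  if grep -q 'INFO Executor: Starting executor ID driver on host'; then
    echo "local"
  else
    echo "not local (or not determinable from this log)"
  fi
}
```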

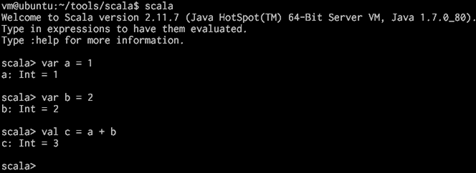

Open http://192.168.62.129:8080 (the standalone master's web UI) in a browser to see an overview of the Spark cluster and its applications:
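If the page does not load, first check whether anything is listening at all. Port 7077 is the one in spark.master above, and 8080 is the default port of the master web UI. Below is a bash sketch that relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical probe: succeed if a TCP connection to HOST:PORT opens within
# 3 seconds, using bash's /dev/tcp pseudo-device and coreutils `timeout`.
# Usage: port_open HOST PORT
port_open() {
  local host="$1" port="$2"
  # The inner bash receives HOST and PORT as $1 and $2 ("_" fills $0).
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```

For example: `port_open 192.168.62.129 7077 && port_open 192.168.62.129 8080 && echo "master is listening"`.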

4. The spark-shell tool

Run ./spark-shell in /home/vm/tools/spark/bin to open the interactive spark-shell, which is handy for debugging.
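spark-shell is a Scala REPL, so it also accepts a snippet on stdin, which is handy for scripted smoke tests. Below is a bash sketch that sends one snippet followed by the REPL's `:quit` command. The function name is hypothetical, and the example expression relies on the `sc` variable that the startup log below reports as available.

```shell
#!/usr/bin/env bash
# Hypothetical helper: feed a Scala snippet to a REPL binary on stdin and quit
# afterwards, instead of typing it interactively.
# Usage: run_in_spark_shell SHELL_BIN 'SCALA CODE'
run_in_spark_shell() {
  local shell_bin="$1" code="$2"
  # ":quit" is the Scala REPL command that ends the session.
  printf '%s\n:quit\n' "$code" | "$shell_bin"
}
```

For example: `run_in_spark_shell "$SPARK_HOME/bin/spark-shell" 'sc.parallelize(1 to 100).sum()'`.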

```
vm@ubuntu:~/tools/spark/bin$ ./spark-shell
log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).
log4j:WARN Please initialize the log4j system properly.
log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
15/07/29 00:08:02 INFO SecurityManager: Changing view acls to: vm
15/07/29 00:08:02 INFO SecurityManager: Changing modify acls to: vm
15/07/29 00:08:02 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(vm); users with modify permissions: Set(vm)
15/07/29 00:08:03 INFO HttpServer: Starting HTTP Server
15/07/29 00:08:04 INFO Utils: Successfully started service 'HTTP class server' on port 56464.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 1.4.0
      /_/

Using Scala version 2.10.4 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_80)
Type in expressions to have them evaluated.
Type :help for more information.
15/07/29 00:08:37 INFO SparkContext: Running Spark version 1.4.0
15/07/29 00:08:38 INFO SecurityManager: Changing view acls to: vm
15/07/29 00:08:38 INFO SecurityManager: Changing modify acls to: vm
15/07/29 00:08:38 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(vm); users with modify permissions: Set(vm)
15/07/29 00:08:40 INFO Slf4jLogger: Slf4jLogger started
15/07/29 00:08:41 INFO Remoting: Starting remoting
15/07/29 00:08:42 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@192.168.62.129:59312]
15/07/29 00:08:42 INFO Utils: Successfully started service 'sparkDriver' on port 59312.
15/07/29 00:08:42 INFO SparkEnv: Registering MapOutputTracker
15/07/29 00:08:42 INFO SparkEnv: Registering BlockManagerMaster
15/07/29 00:08:43 INFO DiskBlockManager: Created local directory at /tmp/spark-621ebed4-8bd8-4e87-9ea5-08b5c7f05e98/blockmgr-a12211dd-e0ba-4556-999c-6249b9c44d8a
15/07/29 00:08:43 INFO MemoryStore: MemoryStore started with capacity 267.3 MB
15/07/29 00:08:43 INFO HttpFileServer: HTTP File server directory is /tmp/spark-621ebed4-8bd8-4e87-9ea5-08b5c7f05e98/httpd-8512d909-5a81-4935-8fbd-2b2ed741ae26
15/07/29 00:08:43 INFO HttpServer: Starting HTTP Server
15/07/29 00:08:57 INFO Utils: Successfully started service 'HTTP file server' on port 43678.
15/07/29 00:09:00 INFO SparkEnv: Registering OutputCommitCoordinator
15/07/29 00:09:02 INFO Utils: Successfully started service 'SparkUI' on port 4040.
15/07/29 00:09:02 INFO SparkUI: Started SparkUI at http://192.168.62.129:4040
15/07/29 00:09:03 INFO Executor: Starting executor ID driver on host localhost
15/07/29 00:09:03 INFO Executor: Using REPL class URI: http://192.168.62.129:56464
15/07/29 00:09:04 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 50636.
15/07/29 00:09:04 INFO NettyBlockTransferService: Server created on 50636
15/07/29 00:09:04 INFO BlockManagerMaster: Trying to register BlockManager
15/07/29 00:09:04 INFO BlockManagerMasterEndpoint: Registering block manager localhost:50636 with 267.3 MB RAM, BlockManagerId(driver, localhost, 50636)
15/07/29 00:09:04 INFO BlockManagerMaster: Registered BlockManager
15/07/29 00:09:05 INFO SparkILoop: Created spark context..
Spark context available as sc.
15/07/29 00:09:07 INFO HiveContext: Initializing execution hive, version 0.13.1
15/07/29 00:09:09 INFO HiveMetaStore: 0: Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore
15/07/29 00:09:09 INFO ObjectStore: ObjectStore, initialize called
15/07/29 00:09:10 INFO Persistence: Property datanucleus.cache.level2 unknown - will be ignored
15/07/29 00:09:10 INFO Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored
15/07/29 00:09:11 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
15/07/29 00:09:13 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)
15/07/29 00:09:19 INFO ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"
15/07/29 00:09:20 INFO MetaStoreDirectSql: MySQL check failed, assuming we are not on mysql: Lexical error at line 1, column 5. Encountered: "@" (64), after : "".
15/07/29 00:09:23 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.
15/07/29 00:09:23 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.
15/07/29 00:09:28 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.
15/07/29 00:09:28 INFO Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.
15/07/29 00:09:29 INFO ObjectStore: Initialized ObjectStore
15/07/29 00:09:29 WARN ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 0.13.1aa
15/07/29 00:09:31 INFO HiveMetaStore: Added admin role in metastore
15/07/29 00:09:31 INFO HiveMetaStore: Added public role in metastore
15/07/29 00:09:31 INFO HiveMetaStore: No user is added in admin role, since config is empty
15/07/29 00:09:32 INFO SessionState: No Tez session required at this point. hive.execution.engine=mr.
15/07/29 00:09:32 INFO SparkILoop: Created sql context (with Hive support)..
SQL context available as sqlContext.

scala>
```
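Note the banner line `Using Scala version 2.10.4`. The prebuilt spark-1.4.0-bin-hadoop2.6 package is compiled against Scala 2.10 and bundles that runtime, so spark-shell does not use the Scala 2.11.7 installed in step 2. Scala 2.10 and 2.11 are not binary compatible. Applications built for this installation, such as those in the IDE setup that follows, would therefore generally need Scala 2.10.x. Below is a bash sketch that compares the Scala binary versions found in two version lines, such as the one `scala -version` prints (something like "Scala code runner version 2.11.7 ...") and the banner line above. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: extract MAJOR.MINOR from the last "version X.Y.Z" in a
# line of text, and compare two such lines.
# Usage: scala_binary_version 'TEXT'; same_scala_binary 'TEXT1' 'TEXT2'
scala_binary_version() {
  printf '%s\n' "$1" \
    | sed -n 's/.*version \([0-9]*\.[0-9]*\)\.[0-9].*/\1/p' | head -n1
}

same_scala_binary() {
  local a b
  a=$(scala_binary_version "$1")
  b=$(scala_binary_version "$2")
  if [ -n "$a" ] && [ "$a" = "$b" ]; then
    echo "same Scala binary version: $a"
  else
    echo "different Scala binary versions: '$a' vs '$b'"
    return 1
  fi
}
```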

The next article will cover setting up a Spark development environment with Eclipse and with IDEA.


Permanent link to this article: http://www.linuxidc.com/Linux/2015-08/120945.htm