
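- Summary

- Apart from configuring hosts and passwordless SSH between the nodes, install everything on a single machine first

- Once that machine is configured, clone the virtual machine, then configure hosts and passwordless SSH

- When I installed this at work I used a 32-bit JDK; Hadoop's native libraries would not load and I lost a lot of time compiling them myself, so make sure the JDK is 64-bit

- Set up passwordless SSH between the nodes

- sudo chown -R hadoop /opt

- sudo chgrp -R hadoop /opt

That is about it. Pay attention to the owner and group of the files: they need not be "hadoop", so set them to match your own setup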

- Files to prepare

- linuxmint17x64

- jdk1.8 x64 (it must be 64-bit, unless you want to compile Hadoop's native libraries yourself)

- hadoop2.7.1

- VirtualBox

- MobaXterm as the SSH client (PuTTY works just as well)
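Because the 64-bit requirement is easy to get wrong, it can be checked as soon as a JDK is on the machine. The following is only a minimal sketch: it assumes the JVM banner contains the string `64-Bit`, as HotSpot builds print it, and the function name `jvm_is_64bit` is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when the `java -version` banner read from
# stdin mentions a 64-bit VM (HotSpot prints "64-Bit Server VM").
jvm_is_64bit() {
    grep -q '64-Bit'
}

# Typical use once java is on the PATH
# (java -version writes to stderr, hence the 2>&1):
#   java -version 2>&1 | jvm_is_64bit && echo "64-bit JVM" || echo "not a 64-bit JVM"
```

A JVM from another vendor may word its banner differently, so a failed match is a prompt to look at the banner by hand rather than proof of a 32-bit build.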

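- Virtual machine installation and configuration

We need three virtual machines. Install one first, download Hadoop, configure the JDK and set the environment variables, then clone it

- Installing the first virtual machine

- I won't go through the installation steps, only the points to watch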

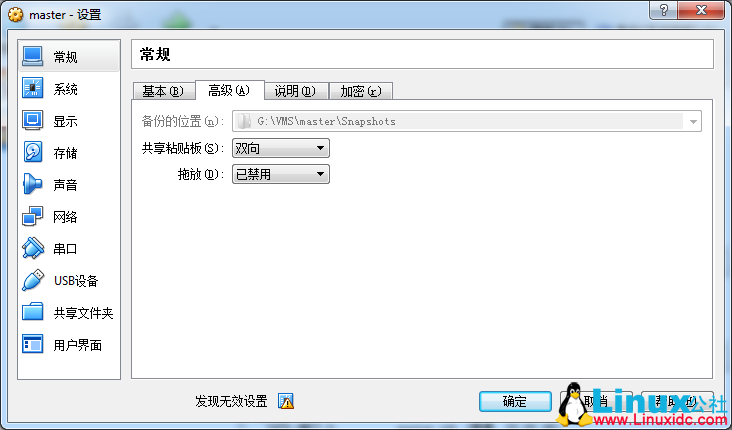

- Enable the shared clipboard

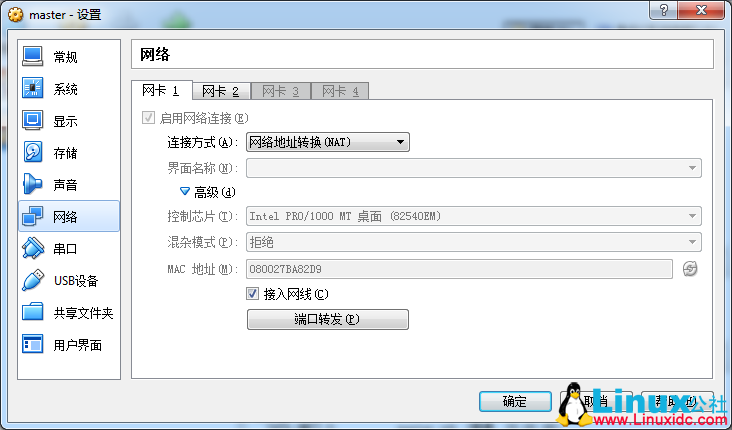

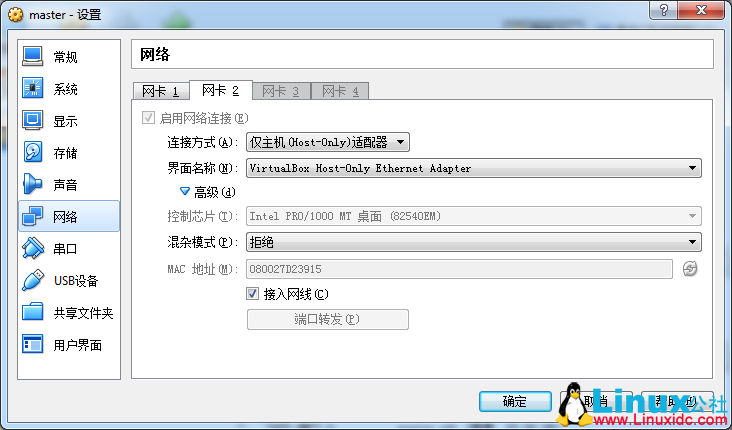

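- Add a second network adapter and choose "Host-only", so that the machine has two adapters: one NAT and one host-only.

- During installation there is a step that downloads language packs; just skip it.

- Install the required software and apply some settings; this is actually the most tedious part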

- Check which groups your user belongs to: groups

- sudo chown -R hadoop /opt

- sudo chgrp -R hadoop /opt

- Install openssh-server, which Linux Mint does not appear to ship by default: sudo apt-get install openssh-server

- Disable the firewall: sudo ufw disable

- Check the firewall status: sudo ufw status (it should report inactive)

- Install vim: sudo apt-get install vim

- Change the hostname (give each of the three machines a different one so they are easy to tell apart; mine are master-hadoop, slave1-hadoop and slave2-hadoop)

- Debian family: sudo vi /etc/hostname

- Red Hat family: sudo vi /etc/sysconfig/network

- Reboot

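- Install the JDK

- Connect to the virtual machine with MobaXterm (check the IP yourself; the first machine should be 192.168.56.101)

- Create a lib directory for the components that will be needed, such as the JDK

- mkdir /opt/lib

- Upload the downloaded JDK to /opt/lib (with MobaXterm you can drag and drop it)

- Unpack the JDK: tar -zxvf jdk-8u92-linux-x64.tar.gz

- Rename the directory: mv jdk1.8.0_92 jdk8

- Look at the resulting directory layout and note that owner and group are both hadoop (they need not be hadoop, but it is best if all Hadoop-related files and directories share one group, to avoid permission problems)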

    hadoop@hadoop-pc / $ cd /opt/
    hadoop@hadoop-pc /opt $ ll
    total 16
    drwxr-xr-x 4 hadoop hadoop 4096 Jul 2 00:33 ./
    drwxr-xr-x 23 root root 4096 Jul 1 23:23 ../
    drwxr-xr-x 3 hadoop hadoop 4096 Nov 29 2015 firefox/
    drwxr-xr-x 3 hadoop hadoop 4096 Jul 2 01:04 lib/
    hadoop@hadoop-pc /opt $ cd lib/
    hadoop@hadoop-pc /opt/lib $ ll
    total 177156
    drwxr-xr-x 3 hadoop hadoop 4096 Jul 2 01:04 ./
    drwxr-xr-x 4 hadoop hadoop 4096 Jul 2 00:33 ../
    drwxr-xr-x 8 hadoop hadoop 4096 Apr 1 12:20 jdk8/
    -rw-rw-r-- 1 hadoop hadoop 181389058 Jul 2 01:00 jdk-8u92-linux-x64.tar.gz
    hadoop@hadoop-pc /opt/lib $ mkdir package
    hadoop@hadoop-pc /opt/lib $ mv jdk-8u92-linux-x64.tar.gz package/
    hadoop@hadoop-pc /opt/lib $ ll
    total 16
    drwxr-xr-x 4 hadoop hadoop 4096 Jul 2 01:08 ./
    drwxr-xr-x 4 hadoop hadoop 4096 Jul 2 00:33 ../
    drwxr-xr-x 8 hadoop hadoop 4096 Apr 1 12:20 jdk8/
    drwxrwxr-x 2 hadoop hadoop 4096 Jul 2 01:08 package/
    hadoop@hadoop-pc /opt/lib $ cd jdk8/
    hadoop@hadoop-pc /opt/lib/jdk8 $ ll
    total 25916
    drwxr-xr-x 8 hadoop hadoop 4096 Apr 1 12:20 ./
    drwxr-xr-x 4 hadoop hadoop 4096 Jul 2 01:08 ../
    drwxr-xr-x 2 hadoop hadoop 4096 Apr 1 12:17 bin/
    -r--r--r-- 1 hadoop hadoop 3244 Apr 1 12:17 COPYRIGHT
    drwxr-xr-x 4 hadoop hadoop 4096 Apr 1 12:17 db/
    drwxr-xr-x 3 hadoop hadoop 4096 Apr 1 12:17 include/
    -rwxr-xr-x 1 hadoop hadoop 5090294 Apr 1 11:33 javafx-src.zip*
    drwxr-xr-x 5 hadoop hadoop 4096 Apr 1 12:17 jre/
    drwxr-xr-x 5 hadoop hadoop 4096 Apr 1 12:17 lib/
    -r--r--r-- 1 hadoop hadoop 40 Apr 1 12:17 LICENSE
    drwxr-xr-x 4 hadoop hadoop 4096 Apr 1 12:17 man/
    -r--r--r-- 1 hadoop hadoop 159 Apr 1 12:17 README.html
    -rw-r--r-- 1 hadoop hadoop 525 Apr 1 12:17 release
    -rw-r--r-- 1 hadoop hadoop 21104834 Apr 1 12:17 src.zip
    -rwxr-xr-x 1 hadoop hadoop 110114 Apr 1 11:33 THIRDPARTYLICENSEREADME-JAVAFX.txt*
    -r--r--r-- 1 hadoop hadoop 177094 Apr 1 12:17 THIRDPARTYLICENSEREADME.txt
    hadoop@hadoop-pc /opt/lib/jdk8 $

- Set JAVA_HOME and the environment variables (edit /etc/profile and append the lines after "#ADD HERE")

    # /etc/profile: system-wide .profile file for the Bourne shell (sh(1))
    # and Bourne compatible shells (bash(1), ksh(1), ash(1), ...).

    if [ "$PS1" ]; then
      if [ "$BASH" ] && [ "$BASH" != "/bin/sh" ]; then
        # The file bash.bashrc already sets the default PS1.
        # PS1='\h:\w\$'
        if [ -f /etc/bash.bashrc ]; then
          . /etc/bash.bashrc
        fi
      else
        if [ "`id -u`" -eq 0 ]; then
          PS1='#'
        else
          PS1='$'
        fi
      fi
    fi

    # The default umask is now handled by pam_umask.
    # See pam_umask(8) and /etc/login.defs.

    if [ -d /etc/profile.d ]; then
      for i in /etc/profile.d/*.sh; do
        if [ -r $i ]; then
          . $i
        fi
      done
      unset i
    fi

    #ADD HERE
    JAVA_HOME=/opt/lib/jdk8
    CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
    PATH=$JAVA_HOME/bin:$PATH
    export JAVA_HOME
    export CLASSPATH
    export PATH

- Check the Java version and the environment variables

    hadoop@hadoop-pc / $ java -version
    java version "1.8.0_92"
    Java(TM) SE Runtime Environment (build 1.8.0_92-b14)
    Java HotSpot(TM) 64-Bit Server VM (build 25.92-b14, mixed mode)
    hadoop@hadoop-pc / $ echo $JAVA_HOME
    /opt/lib/jdk8
    hadoop@hadoop-pc / $ echo $CLASSPATH
    .:/opt/lib/jdk8/lib/dt.jar:/opt/lib/jdk8/lib/tools.jar
    hadoop@hadoop-pc / $ echo $PATH
    /opt/lib/jdk8/bin:/opt/lib/jdk8/bin:/opt/lib/jdk8/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games
    hadoop@hadoop-pc / $
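The PATH printed above lists /opt/lib/jdk8/bin three times, which is what happens when the profile is sourced more than once and each pass prepends the directory again. It is harmless, but it can be avoided. The sketch below is one possible way to do that; `path_prepend` is a made-up helper name, not something the system provides.

```shell
#!/bin/sh
# Hypothetical helper: print a PATH-style string with directory $1 placed in
# front of the list $2, unless $1 is already one of the entries of $2.
path_prepend() {
    case ":$2:" in
        *":$1:"*) printf '%s\n' "$2" ;;            # already present: unchanged
        *)        printf '%s\n' "$1${2:+:$2}" ;;   # absent: put it in front
    esac
}

# In /etc/profile this could stand in for the plain PATH=$JAVA_HOME/bin:$PATH line:
#   PATH=$(path_prepend "$JAVA_HOME/bin" "$PATH")
```

Wrapping both the directory and the list in colons lets a single pattern match an entry at the start, middle or end of the list.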

- Create the directories Hadoop needs

- tmp directory

- mkdir /opt/hadoop-tmp

- HDFS directory

- mkdir /opt/hadoop-dfs

- name directory

- mkdir /opt/hadoop-dfs/name

- data directory

- mkdir /opt/hadoop-dfs/data
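The four mkdir calls can also be collapsed into a single step. This is a minimal sketch under the assumption that the directories live under one base directory, /opt in this article; the function name `make_hadoop_dirs` is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the Hadoop working directories under a base
# directory (default /opt). mkdir -p creates missing parent directories and
# does not fail when a directory already exists, so it is safe to re-run.
make_hadoop_dirs() {
    local base=${1:-/opt}
    mkdir -p "$base/hadoop-tmp" \
             "$base/hadoop-dfs/name" \
             "$base/hadoop-dfs/data"
}

# Usage on the real machine:
#   make_hadoop_dirs /opt
```

Because /opt/hadoop-dfs is the parent of name and data, it does not need to be listed separately.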

- Upload Hadoop

- Use MobaXterm to upload the Hadoop archive to /opt, or run this in /opt: wget http://mirrors.cnnic.cn/apache/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz

- tar -zxvf hadoop-2.7.1.tar.gz

- Move the archive to package as a backup: mv hadoop-2.7.1.tar.gz lib/package/

- Rename the directory: mv hadoop-2.7.1 hadoop

- Edit Hadoop's configuration files

- The configuration files are under /opt/hadoop/etc/hadoop

- core-site.xml

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://master:9000</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>file:/opt/hadoop-tmp</value>
        <description>A base for other temporary directories.</description>
      </property>
    </configuration>

- hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>master:9001</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/opt/hadoop-dfs/name</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/opt/hadoop-dfs/data</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
      </property>
    </configuration>

- mapred-site.xml

- cp mapred-site.xml.template mapred-site.xml

    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>master:10020</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>master:19888</value>
      </property>
    </configuration>

- yarn-site.xml

    <configuration>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>master:8032</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>master:8030</value>
      </property>
      <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>master:8035</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>master:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>master:8088</value>
      </property>
    </configuration>

- slaves

    slave1
    slave2

- hadoop-env.sh

- Set JAVA_HOME

export JAVA_HOME=/opt/lib/jdk8

- yarn-env.sh

- Add the JAVA_HOME environment variable

export JAVA_HOME=/opt/lib/jdk8
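hadoop-env.sh and yarn-env.sh are both expected to export the same JAVA_HOME, and a typo in one of them is easy to miss. As a rough sketch, the two files can be compared like this; the helper names `java_home_in` and `same_java_home` are made up for illustration, and only uncommented lines of the form `export JAVA_HOME=...` are considered.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of the last uncommented
# `export JAVA_HOME=...` line found in file $1.
java_home_in() {
    sed -n 's/^[[:space:]]*export[[:space:]]\{1,\}JAVA_HOME=//p' "$1" | tail -n 1
}

# Hypothetical helper: succeed only when files $1 and $2 export the same,
# non-empty JAVA_HOME value.
same_java_home() {
    local a b
    a=$(java_home_in "$1")
    b=$(java_home_in "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Usage:
#   cd /opt/hadoop/etc/hadoop
#   same_java_home hadoop-env.sh yarn-env.sh || echo "JAVA_HOME differs between the two files"
```

The comparison is purely textual, so two different spellings of the same directory (for example with and without a trailing slash) would be reported as a mismatch.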

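- The first virtual machine is now more or less configured. Clone it twice (make each a full clone and reinitialize the MAC addresses) to get three virtual machines: master, slave1 and slave2

- Change the hostnames of slave1 and slave2 to slave1-hadoop and slave2-hadoop

- Edit /etc/hosts on all three machines

    192.168.56.101 master
    192.168.56.102 slave1
    192.168.56.103 slave2

- The IPs may differ; check the addresses of your own virtual machines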
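Since the same three entries have to go into the hosts file of every machine, a small helper that appends an entry only when the name is not mapped yet can save some repetition. This is a sketch, not a polished tool: `add_host_entry` is a made-up name, and the host name is used inside a regular expression, so it should contain only letters, digits and hyphens.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts-format file unless an
# uncommented line already maps that name.
#   $1 = file, $2 = IP address, $3 = host name
add_host_entry() {
    local file=$1 ip=$2 name=$3
    # Match NAME as a whole field that appears before any '#' comment.
    if ! grep -qE "^[^#]*[[:space:]]$name([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Example against a scratch copy (writing /etc/hosts itself needs root):
#   cp /etc/hosts /tmp/hosts.new
#   add_host_entry /tmp/hosts.new 192.168.56.101 master
#   add_host_entry /tmp/hosts.new 192.168.56.102 slave1
#   add_host_entry /tmp/hosts.new 192.168.56.103 slave2
```

An existing line such as `127.0.1.1 master-hadoop` does not count as a mapping for `master`, because the name has to be followed by whitespace or the end of the line.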

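- Configure master so that it can log in to the other two machines, and to itself, without a password

- Run the following on master

- ssh-keygen -t rsa -P '' (accept the defaults throughout; enter the account password when ssh-copy-id asks for it)

- ssh-copy-id hadoop@master

- ssh-copy-id hadoop@slave1

- ssh-copy-id hadoop@slave2

- When this is done, test it with ssh slave1; it should connect to slave1 without asking for a password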

    hadoop@master-hadoop ~ $ ssh-keygen -t rsa -P ''
    Generating public/private rsa key pair.
    Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
    Created directory '/home/hadoop/.ssh'.
    Your identification has been saved in /home/hadoop/.ssh/id_rsa.
    Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
    The key fingerprint is:
    5c:c9:4c:0c:b6:28:eb:21:b9:6f:db:6e:3f:ee:0d:9a hadoop@master-hadoop
    The key's randomart image is:
    +--[RSA 2048]----+
    | oo. |
    | o =.. |
    | . . . = |
    | . o . . |
    | o o S |
    | + . |
    | . . . |
    | ....o.o |
    | .o+E++.. |
    +-----------------+
    hadoop@master-hadoop ~ $ ssh-copy-id hadoop@slave1
    The authenticity of host 'slave1 (192.168.56.102)' can't be established.
    ECDSA key fingerprint is d8:fc:32:ed:a7:2c:e1:c7:d7:15:89:b9:f6:97:fb:c3.
    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    hadoop@slave1's password:

    Number of key(s) added: 1

    Now try logging into the machine, with: "ssh 'hadoop@slave1'"
    and check to make sure that only the key(s) you wanted were added.
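After the keys are copied, the passwordless logins can be verified for all three hosts in one pass instead of trying each by hand. The sketch below relies on the OpenSSH option `BatchMode=yes`, which makes ssh fail instead of prompting. The function name `hosts_failing_batch_login` and the `SSH_CMD` override hook are made up for illustration and are not part of ssh; the `hadoop` user is the one used throughout this article.

```shell
#!/usr/bin/env bash
# SSH_CMD is an illustrative override hook (handy for dry runs), not an ssh feature.
SSH_CMD=${SSH_CMD:-ssh}

# Hypothetical helper: try a non-interactive login to each host given as an
# argument and print the hosts where that login fails.
hosts_failing_batch_login() {
    local host
    for host in "$@"; do
        # BatchMode=yes: never prompt for a password; ConnectTimeout: do not hang.
        if ! "$SSH_CMD" -o BatchMode=yes -o ConnectTimeout=5 "hadoop@$host" true \
                </dev/null >/dev/null 2>&1; then
            printf '%s\n' "$host"
        fi
    done
}

# Usage on master (no output means every login worked without a password):
#   hosts_failing_batch_login master slave1 slave2
```

A host is also reported when its host key has not been accepted yet or when it is unreachable, so a name in the output means "needs a look", not necessarily "key missing".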

- Format the NameNode (run from /opt/hadoop)

- ./bin/hdfs namenode -format

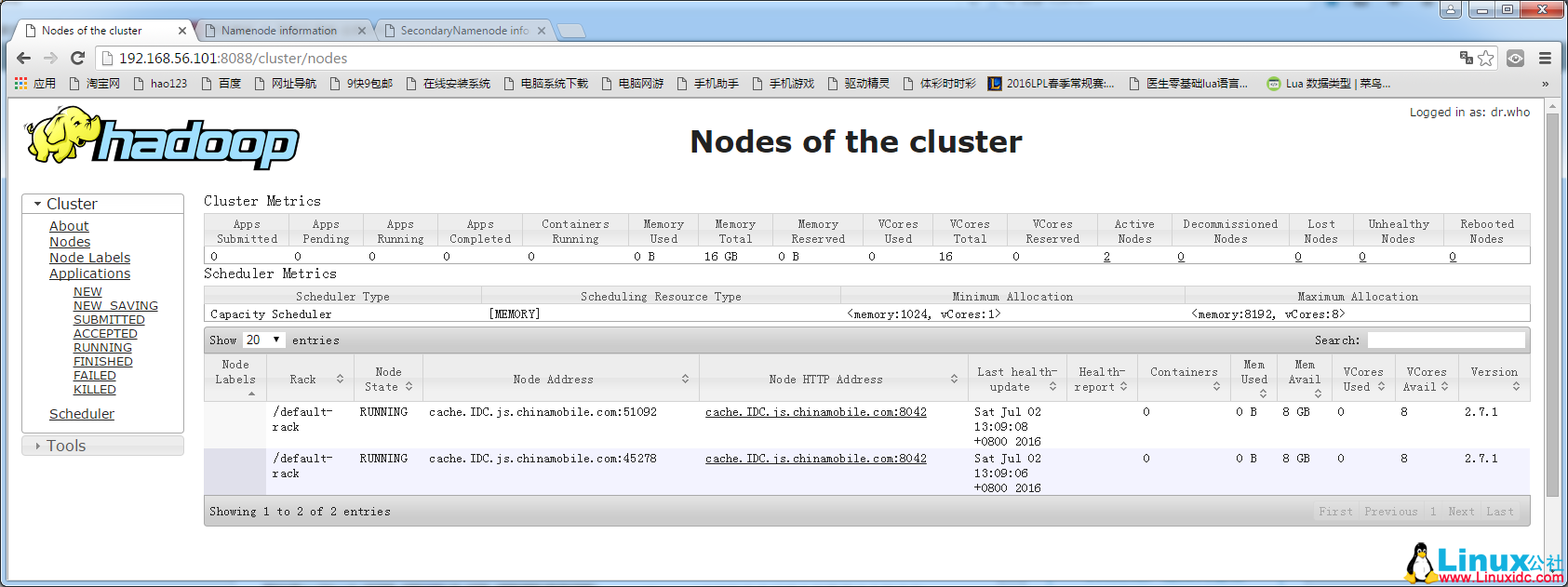

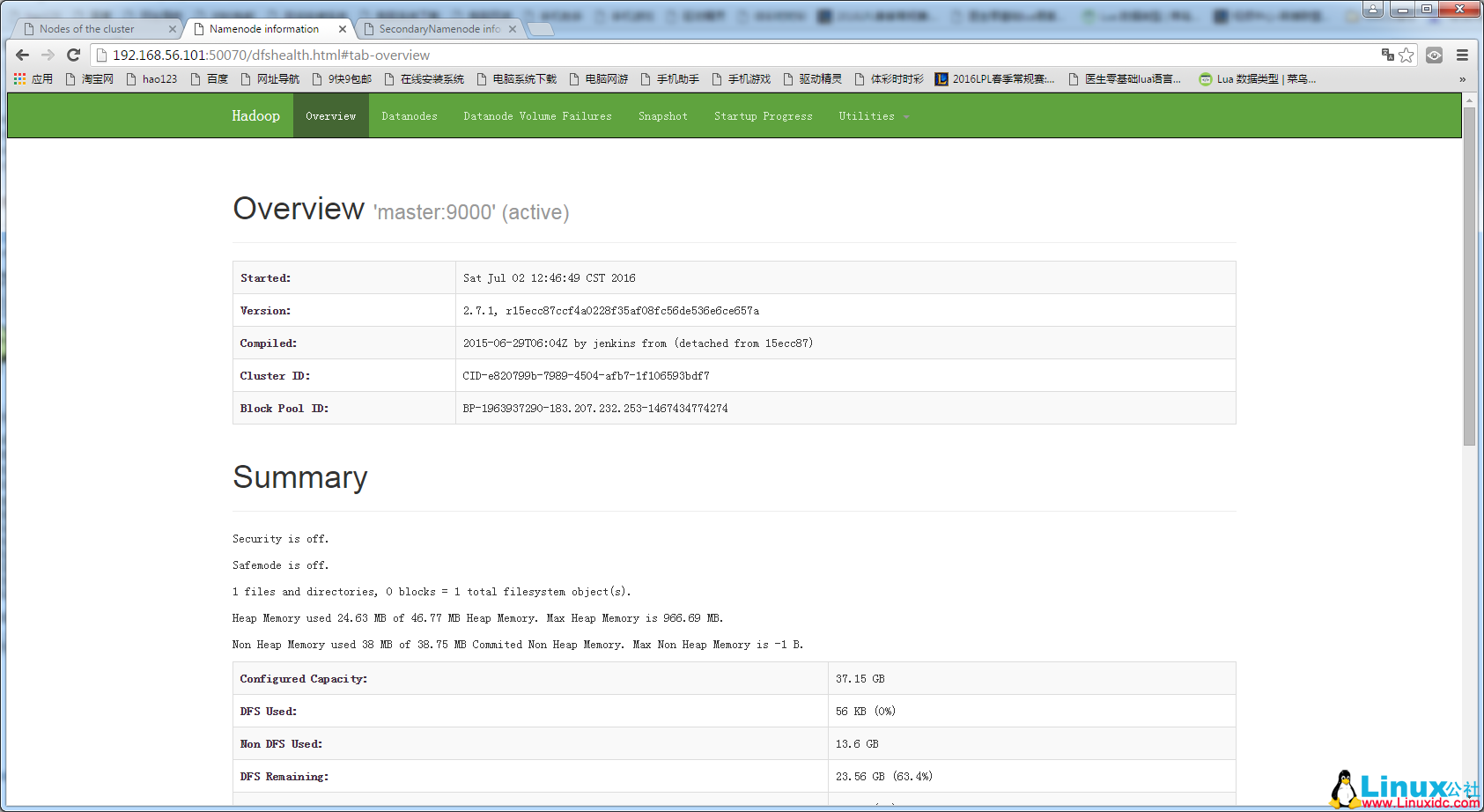

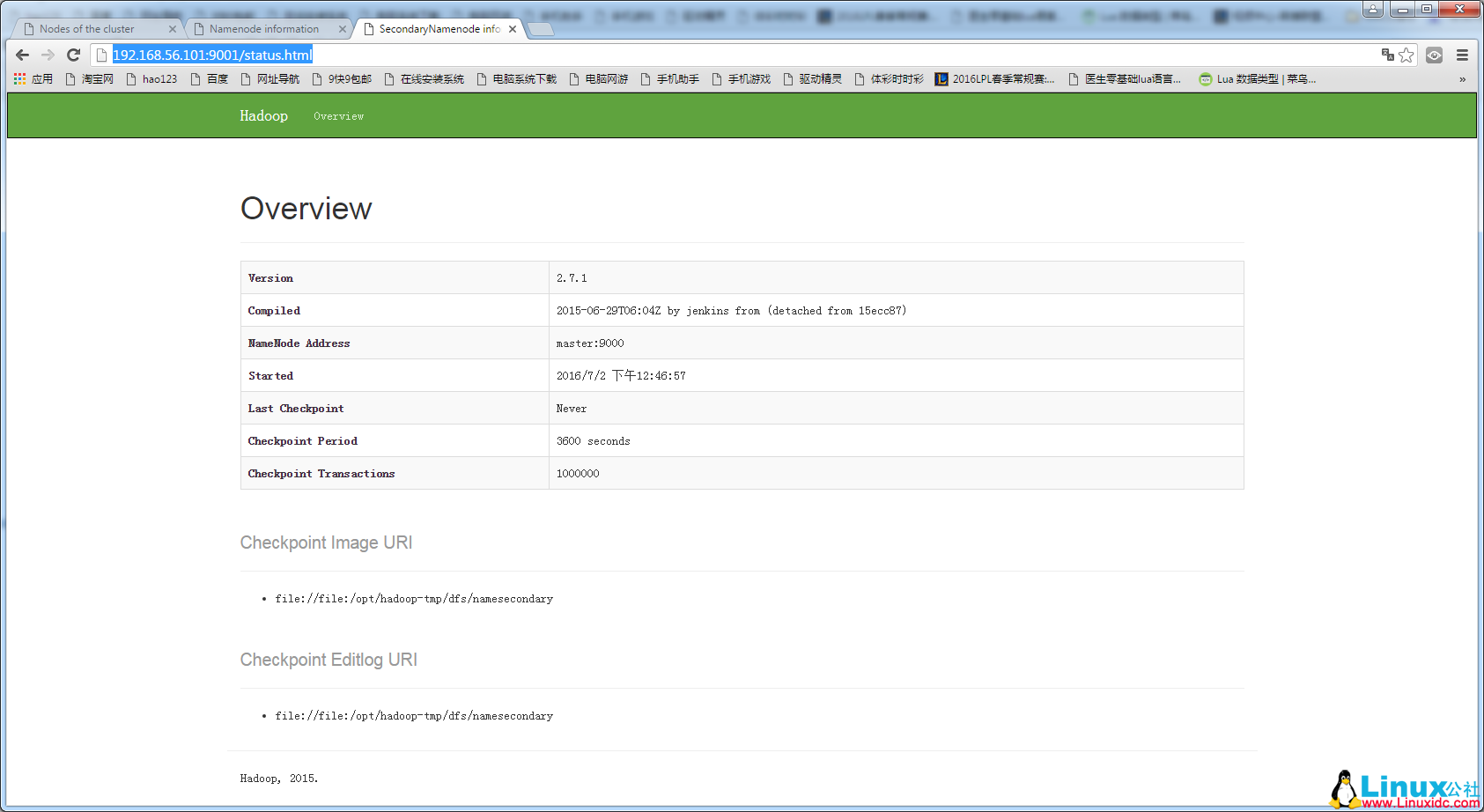

- Start Hadoop to verify the setup

- ./sbin/start-all.sh

- A normal log looks like this:

    hadoop@master-hadoop /opt/hadoop/sbin $ ./start-all.sh
    This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
    Starting namenodes on [master]
    master: starting namenode, logging to /opt/hadoop/logs/hadoop-hadoop-namenode-master-hadoop.out
    slave1: starting datanode, logging to /opt/hadoop/logs/hadoop-hadoop-datanode-slave1-hadoop.out
    slave2: starting datanode, logging to /opt/hadoop/logs/hadoop-hadoop-datanode-slave2-hadoop.out
    Starting secondary namenodes [master]
    master: starting secondarynamenode, logging to /opt/hadoop/logs/hadoop-hadoop-secondarynamenode-master-hadoop.out
    starting yarn daemons
    starting resourcemanager, logging to /opt/hadoop/logs/yarn-hadoop-resourcemanager-master-hadoop.out
    slave1: starting nodemanager, logging to /opt/hadoop/logs/yarn-hadoop-nodemanager-slave1-hadoop.out
    slave2: starting nodemanager, logging to /opt/hadoop/logs/yarn-hadoop-nodemanager-slave2-hadoop.out

- Check jps on all three nodes

    hadoop@master-hadoop /opt/hadoop/sbin $ jps
    5858 ResourceManager
    5706 SecondaryNameNode
    5514 NameNode
    6108 Jps

    hadoop@slave2-hadoop ~ $ jps
    3796 Jps
    3621 NodeManager
    3510 DataNode

    hadoop@slave1-hadoop ~ $ jps
    3786 Jps
    3646 NodeManager
    3535 DataNode
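The jps listings above can also be checked mechanically: master should show NameNode, SecondaryNameNode and ResourceManager, and each slave should show DataNode and NodeManager. A minimal sketch, with `missing_daemons` as a made-up helper name that reads jps output on standard input:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `jps` output ("PID Name" per line) on stdin and
# print every expected daemon name (given as arguments) that is not listed.
# Returns non-zero when at least one daemon is missing.
missing_daemons() {
    local listing name missing=0
    listing=$(cat)
    for name in "$@"; do
        # Compare the whole second field, so NameNode does not match
        # SecondaryNameNode.
        if ! printf '%s\n' "$listing" |
                awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            printf '%s\n' "$name"
            missing=1
        fi
    done
    return "$missing"
}

# Usage:
#   jps | missing_daemons NameNode SecondaryNameNode ResourceManager   # on master
#   jps | missing_daemons DataNode NodeManager                         # on a slave
```

No output and a zero exit status mean all expected daemons are running on that node.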

- Everything is working; the installation is complete


Permanent link to this article: http://www.linuxidc.com/Linux/2016-07/132850.htm
