
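For developers building GPU-accelerated applications in C and C++, the NVIDIA CUDA Toolkit provides a comprehensive development environment. The toolkit includes a compiler for NVIDIA GPUs, a set of math libraries, and tools for debugging applications and optimizing their performance. It also comes with programming guides, user manuals, API references, and other documentation to help you get started quickly with GPU-accelerated development.

Note: NVIDIA has not released a CUDA Toolkit for Ubuntu 16.04, so the method described here may not work for everyone. It worked on my machine, though I suspect that was pure luck. I did not install the samples; the only outcome is that other programs can now use the GPU.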

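1. Following the first reference link, install the CUDA toolkit with

sudo apt-get install nvidia-cuda-toolkit

The download can be very slow, depending on your connection. The linked page also reports a problem after rebooting Ubuntu ("I can't log in to my computer and end up in infinite login screen"). After installing, I was able to log in normally and did not run into this problem.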

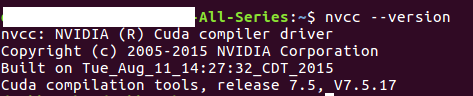

2. Information after installation:

The installed version is 7.5.17 rather than the latest 7.5.18, but it works, which is good enough.

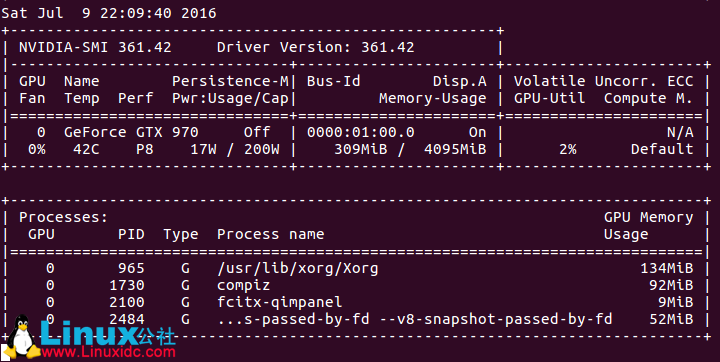
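To confirm which toolkit version apt actually installed, one option is to read it from the output of `nvcc --version`. The sketch below is a minimal example, assuming the release line of that output ends in `, V<version>` (for example `Cuda compilation tools, release 7.5, V7.5.17`); the helper name `cuda_version_from_nvcc` is hypothetical and not part of any NVIDIA tool.

```shell
# Extract the toolkit version (e.g. "7.5.17") from `nvcc --version` output on stdin.
# Assumption: the release line ends in ", V<major>.<minor>.<patch>".
cuda_version_from_nvcc() {
  sed -n 's/.*, V\([0-9][0-9.]*\)$/\1/p'
}

# Only call the real compiler if it is on PATH.
if command -v nvcc >/dev/null 2>&1; then
  echo "Installed CUDA toolkit: $(nvcc --version | cuda_version_from_nvcc)"
else
  echo "nvcc not found; is nvidia-cuda-toolkit installed?"
fi
```

If the helper prints nothing, the output format probably differs from the one assumed here, and reading the raw `nvcc --version` output is the safer check.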

3. In the second reference link, qed gives a command that continuously displays the current GPU utilization in the terminal (NVIDIA cards only):

nvidia-smi -l 1

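Result:

Note: the command above appears to require support from the graphics card. Alternatively, you can use the command provided by Jonathan (not tested yet):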

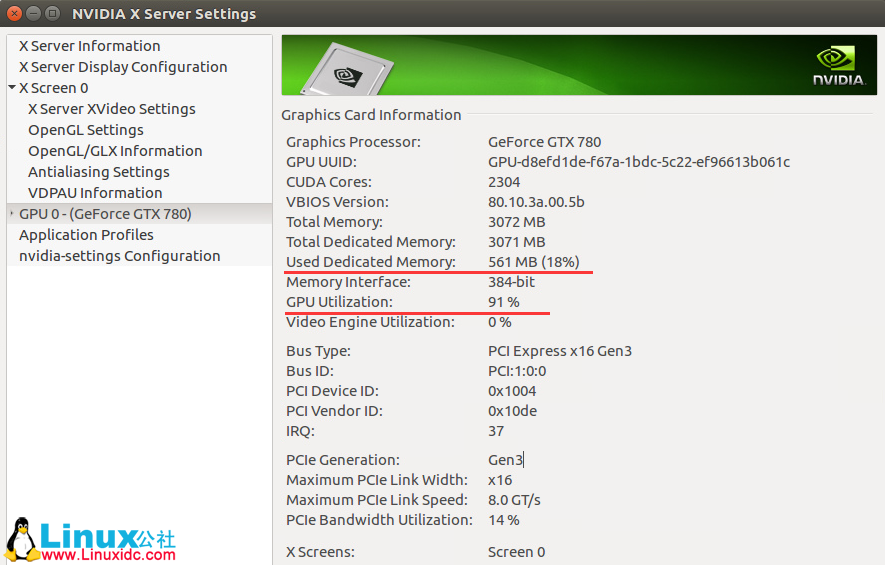

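watch -n0.1 "nvidia-settings -q GPUUtilization -q useddedicatedgpumemory"

Note (160713):

a. This command displays the following information:

b. The command simply prints, in the terminal, some of the information shown in 'NVIDIA X Server Settings' (its launcher is located in /usr/share/applications; you can also open that application directly to view the values):

c. The GPU in this screenshot is not the one used earlier, so the parameters (for example, the amount of video memory) differ.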
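The same utilization and memory figures can also be requested from nvidia-smi as CSV, which is easier to log or post-process than the full status table. The sketch below assumes a driver whose nvidia-smi supports `--query-gpu` with the `utilization.gpu`, `memory.used` and `memory.total` fields; the helper name `format_gpu_usage` is hypothetical.

```shell
# Turn one CSV record "util, used, total" (as printed with
# --format=csv,noheader,nounits) into a readable line. Reads stdin.
format_gpu_usage() {
  awk -F', *' '{ printf "GPU %d%% busy, %d / %d MiB memory used\n", $1, $2, $3 }'
}

# Query the real GPU only when nvidia-smi is available.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi --query-gpu=utilization.gpu,memory.used,memory.total \
             --format=csv,noheader,nounits | format_gpu_usage
fi
```

Adding `-l 1` to the nvidia-smi call should repeat the query every second, in the same way as `nvidia-smi -l 1` above.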

4. After installing CUDA, I installed cutorch and then cunn. Both installed successfully, and programs that use the GPU run correctly.
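The post does not show the install commands themselves. A minimal sketch, assuming a Torch7 installation whose `luarocks` and `th` are on PATH, might look like the following; the function names and the `LUAROCKS` override variable are hypothetical and exist only to make a dry run possible.

```shell
# Install the Torch7 CUDA packages in the order used above: cutorch, then cunn.
# LUAROCKS is a hypothetical override (e.g. LUAROCKS=echo for a dry run).
install_torch_cuda() {
  for rock in cutorch cunn; do
    "${LUAROCKS:-luarocks}" install "$rock" || return 1
  done
}

# Ask Torch how many CUDA devices it can see.
check_torch_cuda() {
  th -e "require 'cutorch'; print(cutorch.getDeviceCount())"
}
```

Running `install_torch_cuda` followed by `check_torch_cuda` should print the number of visible GPUs if both packages built against the installed toolkit.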

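5. The third reference link provides a test program. I modified it slightly so that it prints the time taken by each epoch. The CPU and GPU versions of the code are almost identical: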

① CPU version:

require 'torch'
require 'nn'
require 'optim'
--require 'cunn'
--require 'cutorch'

mnist = require 'mnist'

fullset = mnist.traindataset()
testset = mnist.testdataset()

-- first 50000 samples for training, remaining 10000 for validation
trainset = {
    size = 50000,
    data = fullset.data[{{1,50000}}]:double(),
    label = fullset.label[{{1,50000}}]
}

validationset = {
    size = 10000,
    data = fullset.data[{{50001,60000}}]:double(),
    label = fullset.label[{{50001,60000}}]
}

-- subtract the mean pixel value
trainset.data = trainset.data - trainset.data:mean()
validationset.data = validationset.data - validationset.data:mean()

-- two convolution/pooling stages followed by two linear layers
model = nn.Sequential()
model:add(nn.Reshape(1, 28, 28))
model:add(nn.MulConstant(1/256.0*3.2))
model:add(nn.SpatialConvolutionMM(1, 20, 5, 5, 1, 1, 0, 0))
model:add(nn.SpatialMaxPooling(2, 2 , 2, 2, 0, 0))
model:add(nn.SpatialConvolutionMM(20, 50, 5, 5, 1, 1, 0, 0))
model:add(nn.SpatialMaxPooling(2, 2 , 2, 2, 0, 0))
model:add(nn.Reshape(4*4*50))
model:add(nn.Linear(4*4*50, 500))
model:add(nn.ReLU())
model:add(nn.Linear(500, 10))
model:add(nn.LogSoftMax())

model = require('weight-init')(model, 'xavier')

criterion = nn.ClassNLLCriterion()

--model = model:cuda()
--criterion = criterion:cuda()
--trainset.data = trainset.data:cuda()
--trainset.label = trainset.label:cuda()
--validationset.data = validationset.data:cuda()
--validationset.label = validationset.label:cuda()

sgd_params = {
    learningRate = 1e-2,
    learningRateDecay = 1e-4,
    weightDecay = 1e-3,
    momentum = 1e-4
}

x, dl_dx = model:getParameters()

-- run one epoch of mini-batch SGD and return the mean loss
step = function(batch_size)
    local current_loss = 0
    local count = 0
    local shuffle = torch.randperm(trainset.size)
    batch_size = batch_size or 200

    for t = 1,trainset.size,batch_size do
        -- setup inputs and targets for this mini-batch
        -- (size is the number of samples; the last batch may be smaller)
        local size = math.min(t + batch_size - 1, trainset.size) - t + 1
        local inputs = torch.Tensor(size, 28, 28)--:cuda()
        local targets = torch.Tensor(size)--:cuda()
        for i = 1,size do
            local input = trainset.data[shuffle[t + i - 1]]
            local target = trainset.label[shuffle[t + i - 1]]
            -- if target == 0 then target = 10 end
            inputs[i] = input
            targets[i] = target
        end
        -- MNIST labels are 0..9, ClassNLLCriterion expects 1..10
        targets:add(1)

        local feval = function(x_new)
            -- reset data
            if x ~= x_new then x:copy(x_new) end
            dl_dx:zero()

            -- perform mini-batch gradient descent
            local loss = criterion:forward(model:forward(inputs), targets)
            model:backward(inputs, criterion:backward(model.output, targets))

            return loss, dl_dx
        end

        _, fs = optim.sgd(feval, x, sgd_params)
        -- fs is a table containing value of the loss function
        -- (just 1 value for the SGD optimization)
        count = count + 1
        current_loss = current_loss + fs[1]
    end

    -- normalize loss
    return current_loss / count
end

-- return the fraction of correctly classified samples in dataset
eval = function(dataset, batch_size)
    local count = 0
    batch_size = batch_size or 200

    for i = 1,dataset.size,batch_size do
        local size = math.min(i + batch_size - 1, dataset.size) - i + 1
        local inputs = dataset.data[{{i,i+size-1}}]--:cuda()
        local targets = dataset.label[{{i,i+size-1}}]:long()--:cuda()
        local outputs = model:forward(inputs)
        local _, indices = torch.max(outputs, 2)
        -- map predicted classes 1..10 back to labels 0..9
        indices:add(-1)
        local guessed_right = indices:eq(targets):sum()
        count = count + guessed_right
    end

    return count / dataset.size
end

max_iters = 5

do
    local last_accuracy = 0
    local decreasing = 0
    local threshold = 1 -- how many decreasing epochs we allow
    for i = 1,max_iters do
        timer = torch.Timer()
        local loss = step()
        print(string.format('Epoch: %d Current loss: %4f', i, loss))
        local accuracy = eval(validationset)
        print(string.format('Accuracy on the validation set: %4f', accuracy))
        if accuracy < last_accuracy then
            if decreasing > threshold then break end
            decreasing = decreasing + 1
        else
            decreasing = 0
        end
        last_accuracy = accuracy
        print('Time elapsed: ' .. i .. 'iter: ' .. timer:time().real .. ' seconds')
    end
end

testset.data = testset.data:double()
eval(testset)

② GPU version:

require 'torch'
require 'nn'
require 'optim'
require 'cunn'
require 'cutorch'

mnist = require 'mnist'

fullset = mnist.traindataset()
testset = mnist.testdataset()

-- first 50000 samples for training, remaining 10000 for validation
trainset = {
    size = 50000,
    data = fullset.data[{{1,50000}}]:double(),
    label = fullset.label[{{1,50000}}]
}

validationset = {
    size = 10000,
    data = fullset.data[{{50001,60000}}]:double(),
    label = fullset.label[{{50001,60000}}]
}

-- subtract the mean pixel value
trainset.data = trainset.data - trainset.data:mean()
validationset.data = validationset.data - validationset.data:mean()

-- two convolution/pooling stages followed by two linear layers
model = nn.Sequential()
model:add(nn.Reshape(1, 28, 28))
model:add(nn.MulConstant(1/256.0*3.2))
model:add(nn.SpatialConvolutionMM(1, 20, 5, 5, 1, 1, 0, 0))
model:add(nn.SpatialMaxPooling(2, 2 , 2, 2, 0, 0))
model:add(nn.SpatialConvolutionMM(20, 50, 5, 5, 1, 1, 0, 0))
model:add(nn.SpatialMaxPooling(2, 2 , 2, 2, 0, 0))
model:add(nn.Reshape(4*4*50))
model:add(nn.Linear(4*4*50, 500))
model:add(nn.ReLU())
model:add(nn.Linear(500, 10))
model:add(nn.LogSoftMax())

model = require('weight-init')(model, 'xavier')

criterion = nn.ClassNLLCriterion()

-- move the model, the criterion and the data to the GPU
model = model:cuda()
criterion = criterion:cuda()
trainset.data = trainset.data:cuda()
trainset.label = trainset.label:cuda()
validationset.data = validationset.data:cuda()
validationset.label = validationset.label:cuda()

sgd_params = {
    learningRate = 1e-2,
    learningRateDecay = 1e-4,
    weightDecay = 1e-3,
    momentum = 1e-4
}

x, dl_dx = model:getParameters()

-- run one epoch of mini-batch SGD and return the mean loss
step = function(batch_size)
    local current_loss = 0
    local count = 0
    local shuffle = torch.randperm(trainset.size)
    batch_size = batch_size or 200

    for t = 1,trainset.size,batch_size do
        -- setup inputs and targets for this mini-batch
        -- (size is the number of samples; the last batch may be smaller)
        local size = math.min(t + batch_size - 1, trainset.size) - t + 1
        local inputs = torch.Tensor(size, 28, 28):cuda()
        local targets = torch.Tensor(size):cuda()
        for i = 1,size do
            local input = trainset.data[shuffle[t + i - 1]]
            local target = trainset.label[shuffle[t + i - 1]]
            -- if target == 0 then target = 10 end
            inputs[i] = input
            targets[i] = target
        end
        -- MNIST labels are 0..9, ClassNLLCriterion expects 1..10
        targets:add(1)

        local feval = function(x_new)
            -- reset data
            if x ~= x_new then x:copy(x_new) end
            dl_dx:zero()

            -- perform mini-batch gradient descent
            local loss = criterion:forward(model:forward(inputs), targets)
            model:backward(inputs, criterion:backward(model.output, targets))

            return loss, dl_dx
        end

        _, fs = optim.sgd(feval, x, sgd_params)
        -- fs is a table containing value of the loss function
        -- (just 1 value for the SGD optimization)
        count = count + 1
        current_loss = current_loss + fs[1]
    end

    -- normalize loss
    return current_loss / count
end

-- return the fraction of correctly classified samples in dataset
eval = function(dataset, batch_size)
    local count = 0
    batch_size = batch_size or 200

    for i = 1,dataset.size,batch_size do
        local size = math.min(i + batch_size - 1, dataset.size) - i + 1
        local inputs = dataset.data[{{i,i+size-1}}]:cuda()
        local targets = dataset.label[{{i,i+size-1}}]:long():cuda()
        local outputs = model:forward(inputs)
        local _, indices = torch.max(outputs, 2)
        -- map predicted classes 1..10 back to labels 0..9
        indices:add(-1)
        local guessed_right = indices:eq(targets):sum()
        count = count + guessed_right
    end

    return count / dataset.size
end

max_iters = 5

do
    local last_accuracy = 0
    local decreasing = 0
    local threshold = 1 -- how many decreasing epochs we allow
    for i = 1,max_iters do
        timer = torch.Timer()
        local loss = step()
        print(string.format('Epoch: %d Current loss: %4f', i, loss))
        local accuracy = eval(validationset)
        print(string.format('Accuracy on the validation set: %4f', accuracy))
        if accuracy < last_accuracy then
            if decreasing > threshold then break end
            decreasing = decreasing + 1
        else
            decreasing = 0
        end
        last_accuracy = accuracy
        print('Time elapsed: ' .. i .. 'iter: ' .. timer:time().real .. ' seconds')
    end
end

testset.data = testset.data:double()
eval(testset)

6. CPU and GPU utilization

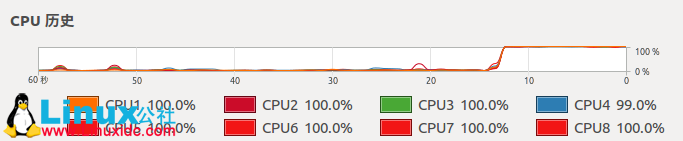

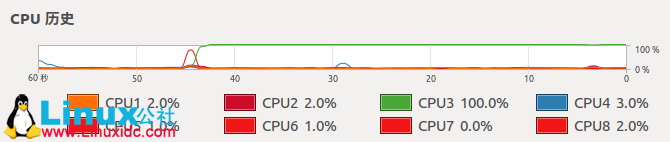

① CPU 版本

CPU 情况:

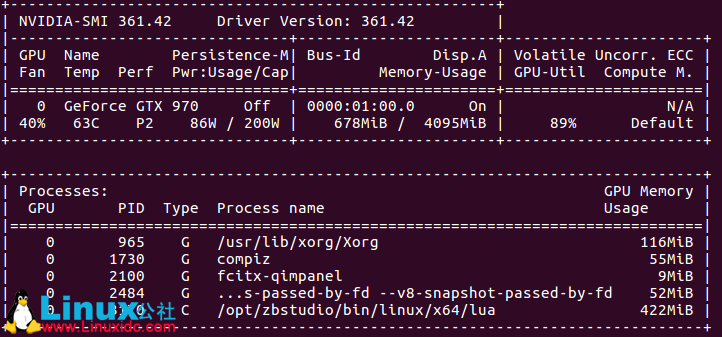

GPU 情况:

② GPU 版本

CPU 情况:

GPU 情况:

7. 可以看出,CPU 版本的程序,CPU 全部使用上了,GPU 则基本没用。GPU 版本,只有一个核心(线程)的 CPU 完全是用上了,其他的则在围观。。。而 GPU 使用率已经很高了。

8. Timing comparison

CPU version:

Epoch: 1 Current loss: 0.619644

Accuracy on the validation set: 0.924800

Time elapsed: 1iter: 895.69850516319 seconds

Epoch: 2 Current loss: 0.225129

Accuracy on the validation set: 0.949000

Time elapsed: 2iter: 914.15352702141 seconds

GPU version:

Epoch: 1 Current loss: 0.687380

Accuracy on the validation set: 0.925300

Time elapsed: 1iter: 14.031280994415 seconds

Epoch: 2 Current loss: 0.231011

Accuracy on the validation set: 0.944000

Time elapsed: 2iter: 13.848378896713 seconds

Epoch: 3 Current loss: 0.167991

Accuracy on the validation set: 0.959800

Time elapsed: 3iter: 14.071791887283 seconds

Epoch: 4 Current loss: 0.135209

Accuracy on the validation set: 0.963700

Time elapsed: 4iter: 14.238609790802 seconds

Epoch: 5 Current loss: 0.113471

Accuracy on the validation set: 0.966800

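Time elapsed: 5iter: 14.328102111816 seconds

Notes:

① The CPU is a 4790K @ 4.4 GHz (with all 8 threads loaded the clock is probably lower than that; I did not check the exact figure). The GPU is an NVIDIA GTX 970.

② The CPU version took so long that I began to suspect the program was broken, but with the CPU working flat out at 100% I could not bring myself to interrupt it. Only when the first epoch finished, after nearly 900 s, did I accept that the program was probably correct, and I stopped the test once the second epoch completed. The GPU version needs only about 14 s per epoch, so the CPU run takes roughly 64 times as long as the GPU run.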
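The factor of 64 can be reproduced from the per-epoch times printed above. The sketch below averages the two CPU epochs and the five GPU epochs and divides one mean by the other; the helper name `mean` is hypothetical, and the numbers are copied from the logs in this post.

```shell
# Mean of the numbers passed as arguments, printed with four decimals.
mean() {
  printf '%s\n' "$@" | awk '{ s += $1 } END { printf "%.4f", s / NR }'
}

# Per-epoch wall-clock times (seconds) from the CPU and GPU runs above.
cpu_mean=$(mean 895.69850516319 914.15352702141)
gpu_mean=$(mean 14.031280994415 13.848378896713 14.071791887283 \
                14.238609790802 14.328102111816)

# Ratio of the two means, rounded to the nearest integer.
speedup=$(awk -v c="$cpu_mean" -v g="$gpu_mean" 'BEGIN { printf "%.0f", c / g }')
echo "CPU ${cpu_mean} s/epoch, GPU ${gpu_mean} s/epoch, about ${speedup}x"
```

With these inputs the ratio rounds to 64, which matches the estimate in note ②.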


Permanent link to this article: http://www.linuxidc.com/Linux/2016-07/133200.htm