Introduction to ELK

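ELK Stack means Elasticsearch + Logstash + Kibana. Log monitoring and analysis play an important role in keeping a service running reliably. Take nginx as an example: nginx records the status of every request in its log files, so those files can be read and analyzed. Redis's list structure works well as a queue for holding the log data that Logstash ships, and Elasticsearch then makes that data available for analysis and querying.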

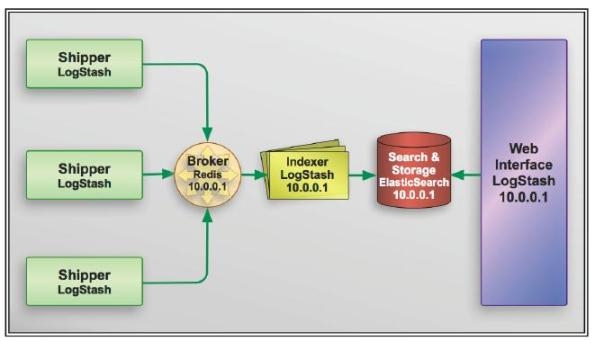

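This article builds a distributed log collection and analysis system. Logstash plays two roles here: agent and indexer. An agent runs on each web machine and continuously reads the nginx log file; whenever it reads new log entries, it sends them to a Redis queue on another machine on the network. Several Logstash indexers receive and parse the unprocessed entries on that queue, then store the results in Elasticsearch for search and analysis. A single Kibana instance presents the logs in a web interface [3].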
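The data flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: a `collections.deque` stands in for the Redis list, and the function names `agent_ship` and `indexer_drain` are hypothetical, not part of Logstash. It shows the queue behaviour the design relies on: agents append events to one end and indexers consume them from the other, in arrival order.

```python
import json
from collections import deque

# In-memory stand-in for the Redis list (assumption: a real deployment
# would use a Redis server shared by all agents and indexers).
queue = deque()

def agent_ship(line, host, target=queue):
    """Wrap one raw log line in an event and append it to the queue tail."""
    event = {"message": line, "host": host, "type": "nginx access log"}
    target.append(json.dumps(event))

def indexer_drain(target=queue):
    """Pop events from the queue head in arrival order and decode them."""
    events = []
    while target:
        events.append(json.loads(target.popleft()))
    return events

# Two agents on different machines ship one line each.
agent_ship("GET / HTTP/1.1", "hadoop-master")
agent_ship("GET /index.html HTTP/1.1", "hadoop-slave")

# The indexer sees the events in the order they were shipped.
for event in indexer_drain():
    print(event["host"], event["message"])
```

Because the queue sits between the two roles, agents can keep shipping while the indexers are busy or restarting, which is the main reason for placing a broker in the middle.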

I am currently testing with two machines. hadoop-master has nginx and a Logstash agent (installed from the tar package); hadoop-slave has a Logstash agent, Elasticsearch, Redis, and nginx.

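The nginx logs of both machines are analyzed together; see the documentation for configuration details. What follows records how ELK + Redis was configured to collect and analyze logs, drawing on the official documentation and on earlier articles.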

System environment

Host environment

    hadoop-master 192.168.186.128   # logstash agent, nginx
    hadoop-slave  192.168.186.129   # logstash agent and indexer, elasticsearch, redis, nginx

System information

    [root@hadoop-slave ~]# java -version    # Elasticsearch is written in Java and needs a JDK; JDK 1.8 is installed on this machine
    java version "1.8.0_20"
    Java(TM) SE Runtime Environment (build 1.8.0_20-b26)
    Java HotSpot(TM) 64-Bit Server VM (build 25.20-b23, mixed mode)
    [root@hadoop-slave ~]# cat /etc/issue
    CentOS release 6.4 (Final)
    Kernel \r on an \m

Installing Redis

    [root@hadoop-slave ~]# wget https:
    [root@hadoop-slave ~]# tar -zxf 2.8.20
    [root@hadoop-slave ~]# mv redis-2.8.20/ /usr/local/src/
    [root@hadoop-slave src]# cd redis-2.8.20/
    [root@hadoop-slave src]# make

When make finishes, the executables (redis-server, redis-cli, and so on) are generated in the src subdirectory of the current directory.

Next, create a Redis directory under /usr/local/, with subdirectories for data, configuration files, and so on.

    [root@hadoop-slave local]# mkdir /usr/local/redis/{conf,run,db} -pv
    [root@hadoop-slave local]# cd /usr/local/src/redis-2.8.20/
    [root@hadoop-slave redis-2.8.20]# cp redis.conf /usr/local/redis/conf/
    [root@hadoop-slave redis-2.8.20]# cd src/
    [root@hadoop-slave src]# cp redis-benchmark redis-check-aof redis-check-dump redis-cli redis-server mkreleasehdr.sh /usr/local/redis/

Redis is now installed.

Try starting it, then check that it is listening on its port:

    [root@hadoop-slave src]# /usr/local/redis/redis-server /usr/local/redis/conf/redis.conf &    # can be sent to the background
    [root@hadoop-slave redis]# netstat -antulp | grep 6379
    tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 72669/redis-server
    tcp 0 0 :::6379 :::* LISTEN 72669/redis-server

Redis starts without problems.
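The netstat check only works on the Redis machine itself. Since the agents connect over the network, it can also be useful to confirm from another machine that the port is reachable. The following is a minimal sketch using only the Python standard library; the helper name `port_open` is hypothetical, and the address in the usage comment is the one used in this article.

```python
import socket

def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False

# Example usage from hadoop-master (not executed here):
#   port_open("192.168.186.129", 6379)   # Redis
```

The same check applies to the other services in this setup, for example ports 9200 and 5601 on hadoop-slave. A False result from a remote machine while netstat shows the service listening usually points at a firewall rule.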

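Installing Elasticsearch

By default Elasticsearch serves HTTP on port 9200 and uses TCP port 9300 for communication between nodes; make sure these TCP ports are open.

Installation

Download the latest tar package from the official site.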

Search & Analyze in Real Time: Elasticsearch is a distributed, open source search and analytics engine, designed for horizontal scalability, reliability, and easy management.

    [root@hadoop-slave ~]# wget https://download.elastic.co/elasticsearch/elasticsearch/elasticsearch-1.7.1.tar.gz
    [root@hadoop-slave ~]# mkdir /usr/local/elk
    [root@hadoop-slave ~]# tar zxf elasticsearch-1.7.1.tar.gz -C /usr/local/elk/
    [root@hadoop-slave bin]# ln -s /usr/local/elk/elasticsearch-1.7.1/bin/elasticsearch /usr/bin
    [root@hadoop-slave bin]# elasticsearch start
    [2015-08-17 20:49:21,566][INFO ][node ] [Eliminator] version[1.7.1], pid[5828], build[b88f43f/2015-07-29T09:54:16Z]
    [2015-08-17 20:49:21,585][INFO ][node ] [Eliminator] initializing ...
    [2015-08-17 20:49:21,870][INFO ][plugins ] [Eliminator] loaded [], sites []
    [2015-08-17 20:49:22,101][INFO ][env ] [Eliminator] using [1] data paths, mounts [[/ (/dev/sda2)]], net usable_space [27.9gb], net total_space [37.1gb], types [ext4]
    [2015-08-17 20:50:08,097][INFO ][node ] [Eliminator] initialized
    [2015-08-17 20:50:08,099][INFO ][node ] [Eliminator] starting ...
    [2015-08-17 20:50:08,593][INFO ][transport ] [Eliminator] bound_address {inet[/0:0:0:0:0:0:0:0:9300]}, publish_address {inet[/192.168.186.129:9300]}
    [2015-08-17 20:50:08,764][INFO ][discovery ] [Eliminator] elasticsearch/XbpOYtsYQbO-6kwawxd7nQ
    [2015-08-17 20:50:12,648][INFO ][cluster.service ] [Eliminator] new_master [Eliminator][XbpOYtsYQbO-6kwawxd7nQ][hadoop-slave][inet[/192.168.186.129:9300]], reason: zen-disco-join (elected_as_master)
    [2015-08-17 20:50:12,683][INFO ][http ] [Eliminator] bound_address {inet[/0:0:0:0:0:0:0:0:9200]}, publish_address {inet[/192.168.186.129:9200]}
    [2015-08-17 20:50:12,683][INFO ][node ] [Eliminator] started
    [2015-08-17 20:50:12,771][INFO ][gateway ] [Eliminator] recovered [0] indices into cluster_state

Test

A status of 200 in the response means Elasticsearch is running correctly.

    [root@hadoop-slave ~]# elasticsearch start -d
    [root@hadoop-slave ~]# curl -X GET http://localhost:9200
    {
      "status" : 200,
      "name" : "Wasp",
      "cluster_name" : "elasticsearch",
      "version" : {
        "number" : "1.7.1",
        "build_hash" : "b88f43fc40b0bcd7f173a1f9ee2e97816de80b19",
        "build_timestamp" : "2015-07-29T09:54:16Z",
        "build_snapshot" : false,
        "lucene_version" : "4.10.4"
      },
      "tagline" : "You Know, for Search"
    }
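If this check needs to be scripted, the JSON body can be parsed instead of read by eye. The sketch below assumes the response has the shape shown above for Elasticsearch 1.7.1, with a top-level `status` field and a nested `version.number`; the function name `es_summary` is hypothetical, and other Elasticsearch versions may return a different shape.

```python
import json

def es_summary(body):
    """Return (ok, version) from the JSON body of GET / on Elasticsearch 1.x.

    ok is True only when the top-level "status" field equals 200.
    """
    info = json.loads(body)
    ok = info.get("status") == 200
    version = info.get("version", {}).get("number")
    return ok, version

# A shortened copy of the response shown above.
sample = '{"status": 200, "name": "Wasp", "version": {"number": "1.7.1"}}'
ok, version = es_summary(sample)
print("healthy:", ok, "version:", version)
```

In practice the body would come from the same URL that curl requests, for example by reading it with `urllib.request.urlopen`.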

Installing Logstash

Logstash is a flexible, open source, data collection, enrichment, and transport pipeline designed to efficiently process a growing list of log, event, and unstructured data sources for distribution into a variety of outputs, including Elasticsearch.

Logstash's default external port is 9292; if a firewall is enabled, open this TCP port.

Installing from the tar package

On host 192.168.186.128, install from the tar package and extract it under /usr/local/.

    [root@hadoop-master ~]# wget https:
    [root@hadoop-master ~]# tar -zxf logstash-1.5.3.tar.gz -C /usr/local/
    [root@hadoop-master logstash-1.5.3]# mkdir /usr/local/logstash-1.5.3/etc

Installing with yum

Host 192.168.186.129 uses yum.

    [root@hadoop-slave ~]
    [root@hadoop-slave ~]
    [logstash-1.5]
    name=Logstash repository for 1.5.x packages
    baseurl=http://packages.elasticsearch.org/logstash/1.5/centos
    gpgcheck=1
    gpgkey=http://packages.elasticsearch.org/GPG-KEY-elasticsearch
    enabled=1
    [root@hadoop-slave ~]

Test

    [root@hadoop-slave ~]# cd /opt/logstash/
    [root@hadoop-slave logstash]# ls
    bin CHANGELOG.md CONTRIBUTORS Gemfile Gemfile.jruby-1.9.lock lib LICENSE NOTICE.TXT vendor
    [root@hadoop-slave logstash]# bin/logstash -e 'input{stdin{}}output{stdout{codec=>rubydebug}}'

The terminal now waits for your input. Type Hello World, press Enter, and see what comes back.

    [root@hadoop-slave logstash]# vi logstash-simple.conf    # the elasticsearch host is the local machine
    input { stdin {} }
    output {
      elasticsearch { host => localhost }
      stdout { codec => rubydebug }
    }
    [root@hadoop-slave logstash]# ./bin/logstash -f logstash-simple.conf    # can be run in the background
    ……
    {
      "message" => "",
      "@version" => "1",
      "@timestamp" => "2015-08-18T06:26:19.348Z",
      "host" => "hadoop-slave"
    }
    ……

This shows that Elasticsearch has received the data sent by Logstash, so the two can communicate.

You can also check it this way:

    [root@hadoop-slave etc]# curl 'http://192.168.186.129:9200/_search?pretty'
    # a pile of returned data means it works
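Rather than scanning "a pile of data", the search response can be reduced to a hit count and the stored messages. The sketch below assumes the Elasticsearch 1.x response layout, in which `hits.total` is an integer and each entry of `hits.hits` carries the event under `_source`. The function name `summarize_search` is hypothetical, and the sample body is made up for illustration; it is not output captured from the system in this article.

```python
import json

def summarize_search(body):
    """Return (total_hits, messages) from an Elasticsearch 1.x _search response."""
    result = json.loads(body)
    hits = result["hits"]
    messages = [hit["_source"].get("message") for hit in hits["hits"]]
    return hits["total"], messages

# Illustrative response body (hypothetical data, trimmed to the fields used).
sample = json.dumps({
    "hits": {
        "total": 2,
        "hits": [
            {"_source": {"message": "Hello World", "host": "hadoop-slave"}},
            {"_source": {"message": "second line", "host": "hadoop-slave"}},
        ],
    }
})

total, messages = summarize_search(sample)
print(total, messages)
```

A total of zero after typing lines into Logstash would suggest that events are not reaching Elasticsearch, even though the HTTP request itself succeeded.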

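Configuring Logstash

Logstash syntax

Excerpted from the documentation:

The Logstash community usually uses the terms shipper, broker, and indexer to describe the roles of the different processes in the data flow, as in the figure below:

The broker is usually Redis. However, I have seen many deployments that do not use Logstash as the shipper (the same idea as the agent), or that do not use Elasticsearch for storage and therefore have no indexer either. So these concepts are not really necessary. It is enough to learn how to use and configure the Logstash process, and then place it wherever it fits best in your log management architecture.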

Setting the nginx log format

nginx is installed on both machines, so nginx.conf must be edited on both to set the log format.

    [root@hadoop-master ~]
    [root@hadoop-master conf]
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log logs/host.access.log main;
    [root@hadoop-master conf]

Do the same on the hadoop-slave machine.
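To make the structure of this log format concrete, here is a small Python sketch that splits one access log line into the variables named in log_format. It assumes the fields are separated by single spaces, as in the sample access log lines later in this article; the names `LOG_RE` and `parse_access_line` are hypothetical, and this is not the grok pattern used by the indexer.

```python
import re

# One named group per variable in the log_format definition.
LOG_RE = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) '
    r'\[(?P<time_local>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)" '
    r'"(?P<http_x_forwarded_for>[^"]*)"'
)

def parse_access_line(line):
    """Return a dict of named fields, or None if the line does not match."""
    match = LOG_RE.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Convert the numeric fields so they can be aggregated later.
    fields["status"] = int(fields["status"])
    fields["body_bytes_sent"] = int(fields["body_bytes_sent"])
    return fields

# A line taken from the host.access.log sample in this article.
sample = (
    '192.168.186.1 - - [18/Aug/2015:22:59:00 -0700] "GET / HTTP/1.1" 304 0 "-" '
    '"Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '
    'Chrome/44.0.2403.155 Safari/537.36" "-"'
)
fields = parse_access_line(sample)
print(fields["remote_addr"], fields["status"], fields["request"])
```

Testing a pattern against a real log line like this, before putting the equivalent pattern into a Logstash filter, makes mismatches between the log format and the parser easier to spot.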

Starting the Logstash agent

The Logstash agent collects log entries and sends them to the Redis queue.

    [root@hadoop-master ~]# cd /usr/local/logstash-1.5.3/
    [root@hadoop-master logstash-1.5.3]# mkdir etc
    [root@hadoop-master etc]# vi logstash_agent.conf
    input {
      file {
        type => "nginx access log"
        path => ["/usr/local/nginx/logs/host.access.log"]
      }
    }
    output {
      redis {
        host => "192.168.186.129"    # redis server
        data_type => "list"
        key => "logstash:redis"
      }
    }
    [root@hadoop-master etc]# nohup /usr/local/logstash-1.5.3/bin/logstash -f /usr/local/logstash-1.5.3/etc/logstash_agent.conf &
    # configure logstash_agent on the other machine in the same way

Starting the Logstash indexer

    [root@hadoop-slave conf]
    [root@hadoop-slave logstash]
    [root@hadoop-slave etc]
    input {
      redis {
        host => "192.168.186.129"
        data_type => "list"
        key => "logstash:redis"
        type => "redis-input"
      }
    }
    filter {
      grok {
        type => "nginx_access"
        match => [
          "message", "%{IPORHOST:http_host} %{IPORHOST:client_ip} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:http_verb} %{NOTSPACE:http_request}(?: HTTP/%{NUMBER:http_version})?|%{DATA:raw_http_request})\"%{NUMBER:http_status_code} (?:%{NUMBER:bytes_read}|-) %{QS:referrer} %{QS:agent} %{NUMBER:time_duration:float} %{NUMBER:time_backend_response:float}",
          "message", "%{IPORHOST:http_host} %{IPORHOST:client_ip} \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:http_verb} %{NOTSPACE:http_request}(?: HTTP/%{NUMBER:http_version})?|%{DATA:raw_http_request})\"%{NUMBER:http_status_code} (?:%{NUMBER:bytes_read}|-) %{QS:referrer} %{QS:agent} %{NUMBER:time_duration:float}"
        ]
      }
    }
    output {
      elasticsearch {
        embedded => false
        protocol => "http"
        host => "localhost"
        port => "9200"
      }
    }
    [root@hadoop-slave etc]

Configuration complete.

Installing Kibana

Explore and Visualize Your Data: Kibana is an open source data visualization platform that allows you to interact with your data through stunning, powerful graphics that can be combined into custom dashboards that help you share insights from your data far and wide.

    [root@hadoop-slave ~]# wget https://download.elastic.co/kibana/kibana/kibana-4.1.1-linux-x64.tar.gz
    [root@hadoop-slave elk]# tar -zxf kibana-4.1.1-linux-x64.tar.gz
    [root@hadoop-slave elk]# mv kibana-4.1.1-linux-x64 /usr/local/elk/kibana
    [root@hadoop-slave bin]# pwd
    /usr/local/elk/kibana/bin
    [root@hadoop-slave bin]# ./kibana &

Open http://192.168.186.129:5601/ in a browser.

For remote access, open TCP port 5601 in iptables.

Testing ELK + Redis

If ELK and Redis are not yet running, start them with the following commands:

    [root@hadoop-slave src]# /usr/local/redis/redis-server /usr/local/redis/conf/redis.conf &    # start redis
    [root@hadoop-slave ~]# elasticsearch start -d    # start elasticsearch
    [root@hadoop-master etc]# nohup /usr/local/logstash-1.5.3/bin/logstash -f /usr/local/logstash-1.5.3/etc/logstash_agent.conf &
    [root@hadoop-slave etc]# nohup /opt/logstash/bin/logstash -f /opt/logstash/etc/logstash_indexer.conf &
    [root@hadoop-slave etc]# nohup /opt/logstash/bin/logstash -f /opt/logstash/etc/logstash_agent.conf &
    [root@hadoop-slave bin]# ./kibana &    # start kibana

Open http://192.168.186.129/ and http://192.168.186.128/ in a browser.

Each page refresh produces one access record, which is written to host.access.log.

    [root@hadoop-master logs]# cat host.access.log
    ……
    192.168.186.1 - - [18/Aug/2015:22:59:00 -0700] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/44.0.2403.155 Safari/537.36" "-"
    192.168.186.1 - - [18/Aug/2015:23:00:21 -0700] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/44.0.2403.155 Safari/537.36" "-"
    192.168.186.1 - - [18/Aug/2015:23:06:38 -0700] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/44.0.2403.155 Safari/537.36" "-"
    192.168.186.1 - - [18/Aug/2015:23:15:52 -0700] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/44.0.2403.155 Safari/537.36" "-"
    192.168.186.1 - - [18/Aug/2015:23:16:52 -0700] "GET / HTTP/1.1" 304 0 "-" "Mozilla/5.0 (Windows NT 6.3; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/44.0.2403.155 Safari/537.36" "-"
    [root@hadoop-master logs]#

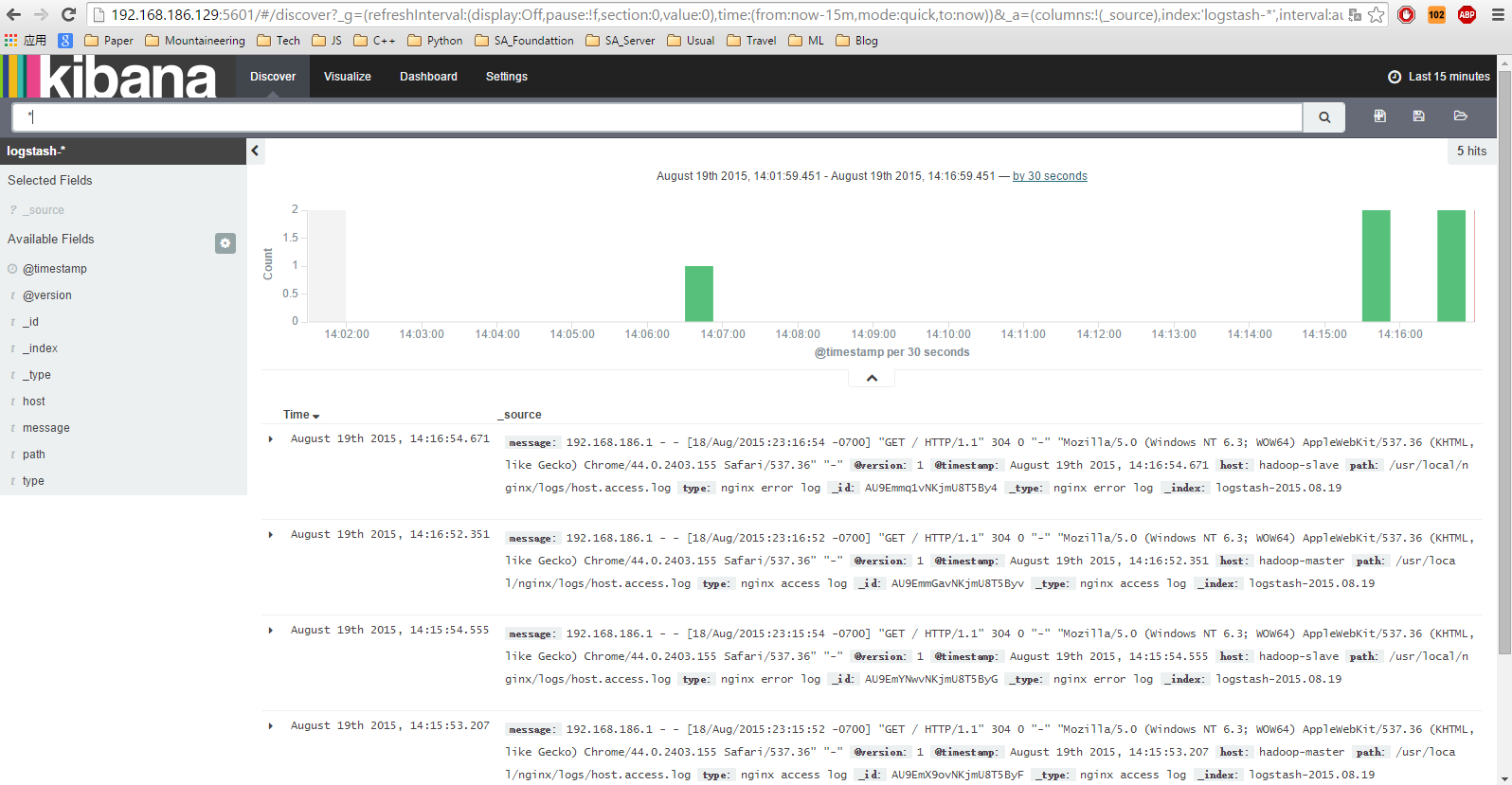

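The Kibana page now shows the nginx access logs of both machines. The displayed times look off because the virtual machine's time zone differs from that of the physical host; this does not affect the results.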
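The size of the offset can be checked by converting one of the nginx timestamps to UTC. The sketch below is only an illustration using the Python standard library; the function name `nginx_time_to_utc` is hypothetical, and it assumes the month abbreviation is in English, as in the log lines in this article.

```python
from datetime import datetime, timezone

def nginx_time_to_utc(time_local):
    """Parse an nginx $time_local value and return it as an aware UTC datetime."""
    parsed = datetime.strptime(time_local, "%d/%b/%Y:%H:%M:%S %z")
    return parsed.astimezone(timezone.utc)

# Timestamp taken from the host.access.log sample in this article.
utc = nginx_time_to_utc("18/Aug/2015:22:59:00 -0700")
print(utc.isoformat())
```

The virtual machine writes its log with a -0700 offset, so the same instant falls on the next calendar day in UTC. A viewer in a different time zone will therefore see a different wall-clock time for the same request.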

Visiting the page at this point shows the following screen.

Permanent link to this article: http://www.linuxidc.com/Linux/2016-09/135115.htm