共计 22534 个字符,预计需要花费 57 分钟才能阅读完成。

Hadoop 安装使用

| 1.1 Hadoop 简介 | |

| 1.2 HDFS 分布式存储系统 | |

| 1.3 单机安装 | |

| 1.4 Mapreduce 案例 | |

| 1.5 伪分布式安装 | |

| 1.6 课后作业 |

1.1 Hadoop 简介

了解 Hadoop 的简介以及生态圈,更多 Hadoop 相关信息见Hadoop 专题页面 http://www.linuxidc.com/topicnews.aspx?tid=13

1.2 HDFS 分布式存储系统(Hadoop Distributed File System)

HDFS 优点

- 高容错性

- 数据自动保存多个副本

- 副本都时候会自动恢复

- 适合批量处理

- 移动计算而非数据

- 数据位置暴露给计算框架

- 适合大数据处理

- GB/TB/ 甚至 PB 级数据

- 百万规模以上文件数量

- 10k+

- 可构建廉价的机器上

- 不管机器人有多么的垃圾, 只要有空间内存就可以搭建 Hadoop

*HDFS 缺点 *

- 低延迟数据访问

- 比如毫秒级

- 低延迟与高吞吐率

- 小文件存取

- 占用 NameNode 大量内存

- 寻道时间超过读取时间

- 并发写入 / 文件随机修改

- 一个文件只能有一个写者

- 只支持 append

HDFS 架构

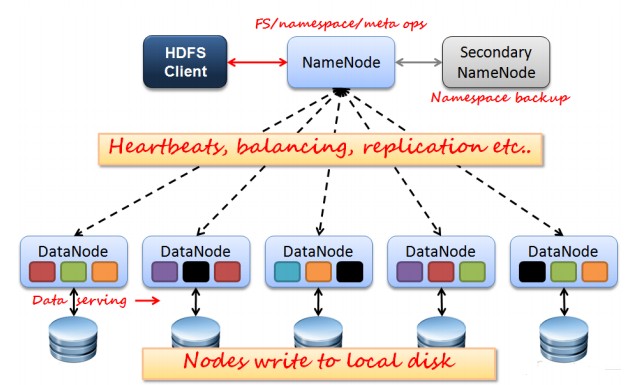

如图所示,HDFS 是按照 Master 和 Slave 的结构

NameNode:类似项目经理职位 下达需求, 监控团队进度, 工作分配。

管理数据块映射;处理客户端的读写请求;配置副本策略;管理 HDFS 的名称空间;

| NameNode 的主要功能: 接受客户端的读写服务。上传文件 读取文件都要联系 NameNode | |

| Namenode 保存了 metadate 信息, 而 metadate 保存着 datanode 的元数据 包含了 block 的位置信息(路径), 以及 datanode 的详细信息。Namenode 的 metadate 信息启动后会加载到内存,metadate 存储到磁盘的文件名;"fsimage", 但是其中不包含 Block 的位置信息, 而 block 的位置信息是在每一次 Datanode 启动的时候将 block 的位置信息发送给 NameNode 内存中。**edits** 记录对 metadate 的操作日志。 |

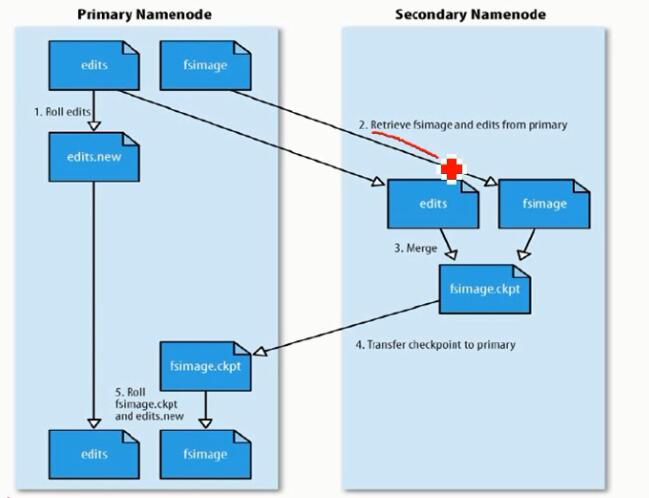

SecondaryNameNode:类似经理助理的职位 分担领导 (Namenode) 的工作量;

| SecondaryNameNode 他不是主节点也不是从节点, 它是用来辅助 NameNode 来完成 editslog 操作日志的。合并 edits 的操作可以配置: | |

| 设置 fs.checkpoint.period 默认 3600 秒 | |

| 设置 edits log 大小 fs.checkpoint.size 规定 edits 文件的最大默认 64MB |

DataNode: 程序员干活的, 上头 (NameNode) 下达一个需求功能需要你 (DataNode) 实现。存储 client 发来的数据块 block;执行数据块的读写操作。

| dataNode(block)它是数据存储单元, 就是数据真正存储的地方。每一个 block 都会被固定大小的数 | |

| 据块, 默认数据块的大小是 64MB** 可配置 **。问: 我这个文件大小没有达到 64MB 怎么办呢? | |

| 答: 如果该文件还不到 64MB 的时候, 则单独成一个 block | |

| 问: 那如果大于 64MB 怎么办? | |

| 答: 如果大了就在切分一个 block 出来, 就像我们切肉 / 切菜一样, 想把他们切的均衡(64MB), 假如肉切的 1 厘米, 到最后还剩下 3 厘米怎么办? 那就继续再切分。 |

如图所示

假如我上传文件大小为 "50G"--> 切分为多个 block。

每一个 Block 都会有一个 3 个副本(可配置)

每一个 block 的 3 个副本都存在不同的节点 (机器) 上, 如图 "block1"。

HDFS 写流程

简单易懂

| 1.HDFS Client-->NameNode: 我要上次新文件 | |

| 2.NameNode 检查 DataNode 空间, 检查出: xxx 机器上的节点可以存储 | |

| 3.NameNode 上传 | |

| 4. 记录 log 日志 |

下面是专业术语

| 客户端调用 create()来创建文件 | |

| DistributedFileSystem 用 RPC 调用元数据节点,在文件系统的命名空间中创建一个新的文件。元数据节点首先确定文件原来不存在,并且客户端有创建文件的权限,然后创建新文件。DistributedFileSystem 返回 DFSOutputStream,客户端用于写数据。客户端开始写入数据,DFSOutputStream 将数据分成块,写入 data queue。Data queue 由 Data Streamer 读取,并通知元数据节点分配数据节点,用来存储数据块(每块默认复制 3 块)。分配的数据节点放在一个 pipeline 里。Data Streamer 将数据块写入 pipeline 中的第一个数据节点。第一个数据节点将数据块发送给第二个数据节点。第二个数据节点将数据发送给第三个数据节点。DFSOutputStream 为发出去的数据块保存了 ack queue,等待 pipeline 中的数据节点告知数据已经写入成功。如果数据节点在写入的过程中失败:关闭 pipeline,将 ack queue 中的数据块放入 data queue 的开始。当前的数据块在已经写入的数据节点中被元数据节点赋予新的标示,则错误节点重启后能够察觉其数据块是过时的,会被删除。失败的数据节点从 pipeline 中移除,另外的数据块则写入 pipeline 中的另外两个数据节点。元数据节点则被通知此数据块是复制块数不足,将来会再创建第三份备份。当客户端结束写入数据,则调用 stream 的 close 函数。此操作将所有的数据块写入 pipeline 中的数据节点,并等待 ack queue 返回成功。最后通知元数据节点写入完毕。 |

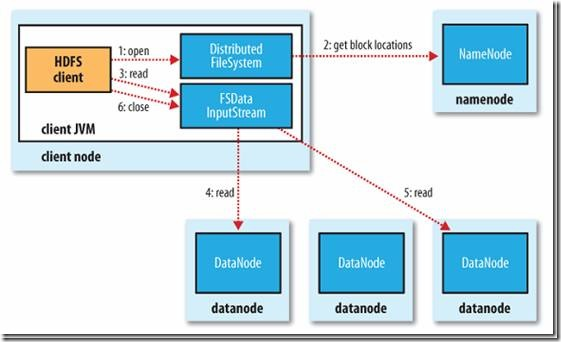

读文件

| 客户端 (client) 用 FileSystem 的 open()函数打开文件 | |

| DistributedFileSystem 用 RPC 调用元数据节点,得到文件的数据块信息。对于每一个数据块,元数据节点返回保存数据块的数据节点的地址。DistributedFileSystem 返回 FSDataInputStream 给客户端,用来读取数据。客户端调用 stream 的 read()函数开始读取数据。DFSInputStream 连接保存此文件第一个数据块的最近的数据节点。Data 从数据节点读到客户端(client) | |

| 当此数据块读取完毕时,DFSInputStream 关闭和此数据节点的连接,然后连接此文件下一个数据块的最近的数据节点。当客户端读取完毕数据的时候,调用 FSDataInputStream 的 close 函数。在读取数据的过程中,如果客户端在与数据节点通信出现错误,则尝试连接包含此数据块的下一个数据节点。失败的数据节点将被记录,以后不再连接。 |

安全模式

Name 启动的时候, 首先将映像文件 (fsimage) 载入内存, 并执行编辑日志 (edits) 中的各项操作, 一旦在内存中成功建立文件系统元数据映射, 则创建一个新的 fsimage 文件 (SecondaryNameNode) 和一个空的 (edits) 编辑日志。此刻 Namenode 运行 "安全模式", 即 Namenode 的文件系统对于客户端来说是只读的。(显示目录, 显示文件内容等。但是 增删改都会失败)。

1.3 单机安装

系统环境

| 名称 | 版本 | 下载 |

|---|---|---|

| linux | CentOS | 百度 |

| jdk | 1.7 | 百度 |

| hadoop | 2.6.0 | 百度 |

jdk 安装

SSH 免验证

| $ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa | |

| $ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys |

配置 Hadoop

一.Hadoop-env.sh

| Hadoop 也是需要 java 的, 所以我们需要配置 (hadoop-env.sh) 它调用的 java。vi hadoop-env.sh | |

| 它默认的配置是:export JAVA_HOME=${JAVA_HOME}。这样的话必须定义变量才能启动 JAVA, 所以我们需要将 (hadoop-env.sh) 里面的 JAVA_HOME 变量指定的内容改成我们的 JAVA 安装的绝对路径, 我 JDK 安装的路径在 "/usr/bin/java" 目录下。export JAVA_HOME=/usr/bin/java |

二.core-site.xml

| 我们需要配置两个参数 defaultFS(HDFS 默认名称) | |

| // 配置 HDFS 名称 | |

| hdfs: 协议头, 如:http https 等 | |

| localhost: 主机名 | |

| 9000: 端口 | |

| <property> | |

| <name>fs.defaultFS</name> | |

| <value>hdfs://localhost:9000</value> | |

| </property> |

三.hdfs-site.xml

| replication(副本个数 默认为 3 个), 因为我这里搭建的是单机所以只要一个。replication: 设置的副本个数 | |

| <property> | |

| <name>dfs.replication</name> | |

| <value>1</value> | |

| </property> |

四.mapred-site.xml

== 注意:mapred.site.xml 开始并不存在, 而是 mapred-site.xml.template。我们需要使用 cp 命令一份命名为 mapred.site.xml==

| <property> | |

| <name>mapreduce.framework.name</name> | |

| <value>yarn</value> | |

| </property> |

五.yarn-site.xml

| <property> | |

| <name>yarn.nodemanager.aux-services</name> | |

| <value>mapreduce_shuffle</value> | |

| </property> | |

| <property> | |

| <name>yarn.resourcemanager.address</name> | |

| <value>localhost:8032</value> | |

| </property> |

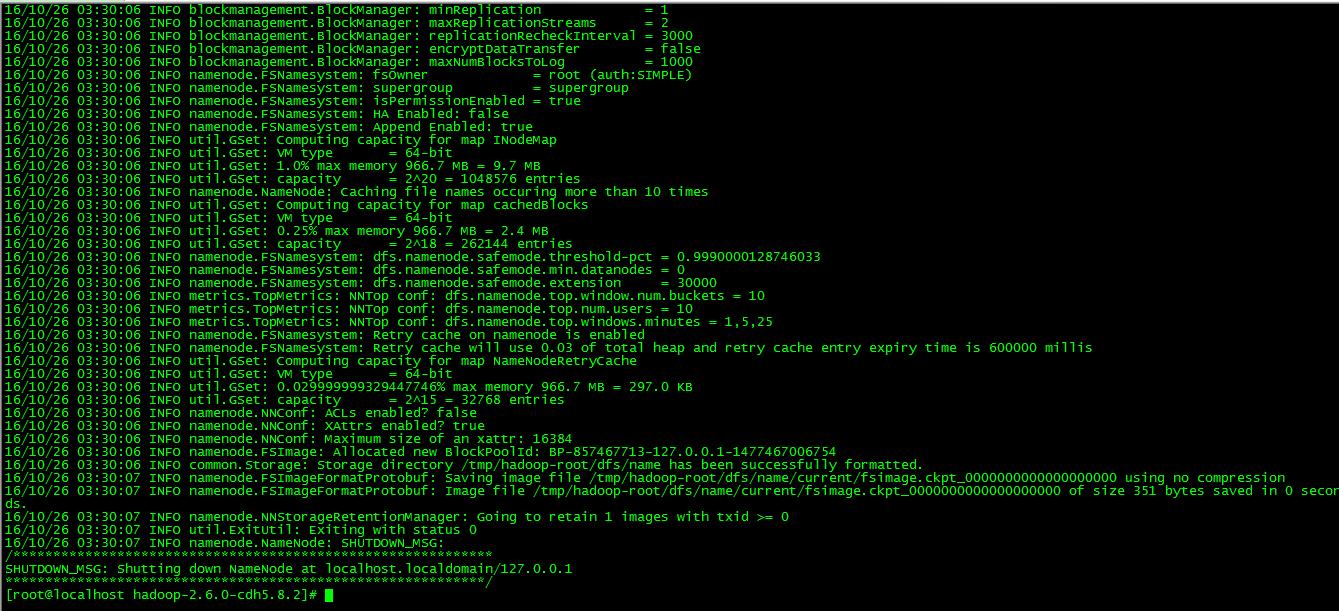

格式化 HDFS

| 1. $ bin/hdfs namenode -format | |

| 格式化 NameNode, 不需要每次启动都需要格式化, 机器第一次启动的时候需要格式化一次 | |

| 16/10/26 03:30:03 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT] | |

| 16/10/26 03:30:03 INFO namenode.NameNode: createNameNode [-format] | |

| 16/10/26 03:30:05 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| Formatting using clusterid: CID-2eba6061-bea3-4e86-85cc-ebb1b51cf730 | |

| 16/10/26 03:30:05 INFO namenode.FSNamesystem: No KeyProvider found. | |

| 16/10/26 03:30:05 INFO namenode.FSNamesystem: fsLock is fair:true | |

| 16/10/26 03:30:06 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000 | |

| 16/10/26 03:30:06 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000 | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: The block deletion will start around 2016 Oct 26 03:30:06 | |

| 16/10/26 03:30:06 INFO util.GSet: Computing capacity for map BlocksMap | |

| 16/10/26 03:30:06 INFO util.GSet: VM type = 64-bit | |

| 16/10/26 03:30:06 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB | |

| 16/10/26 03:30:06 INFO util.GSet: capacity = 2^21 = 2097152 entries | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: defaultReplication = 1 | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: maxReplication = 512 | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: minReplication = 1 | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: maxReplicationStreams = 2 | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000 | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: encryptDataTransfer = false | |

| 16/10/26 03:30:06 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000 | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE) | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: supergroup = supergroup | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: isPermissionEnabled = true | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: HA Enabled: false | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: Append Enabled: true | |

| 16/10/26 03:30:06 INFO util.GSet: Computing capacity for map INodeMap | |

| 16/10/26 03:30:06 INFO util.GSet: VM type = 64-bit | |

| 16/10/26 03:30:06 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB | |

| 16/10/26 03:30:06 INFO util.GSet: capacity = 2^20 = 1048576 entries | |

| 16/10/26 03:30:06 INFO namenode.NameNode: Caching file names occuring more than 10 times | |

| 16/10/26 03:30:06 INFO util.GSet: Computing capacity for map cachedBlocks | |

| 16/10/26 03:30:06 INFO util.GSet: VM type = 64-bit | |

| 16/10/26 03:30:06 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB | |

| 16/10/26 03:30:06 INFO util.GSet: capacity = 2^18 = 262144 entries | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033 | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0 | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000 | |

| 16/10/26 03:30:06 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10 | |

| 16/10/26 03:30:06 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10 | |

| 16/10/26 03:30:06 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25 | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: Retry cache on namenode is enabled | |

| 16/10/26 03:30:06 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis | |

| 16/10/26 03:30:06 INFO util.GSet: Computing capacity for map NameNodeRetryCache | |

| 16/10/26 03:30:06 INFO util.GSet: VM type = 64-bit | |

| 16/10/26 03:30:06 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB | |

| 16/10/26 03:30:06 INFO util.GSet: capacity = 2^15 = 32768 entries | |

| 16/10/26 03:30:06 INFO namenode.NNConf: ACLs enabled? false | |

| 16/10/26 03:30:06 INFO namenode.NNConf: XAttrs enabled? true | |

| 16/10/26 03:30:06 INFO namenode.NNConf: Maximum size of an xattr: 16384 | |

| 16/10/26 03:30:06 INFO namenode.FSImage: Allocated new BlockPoolId: BP-857467713-127.0.0.1-1477467006754 | |

| 16/10/26 03:30:06 INFO common.Storage: Storage directory /tmp/hadoop-root/dfs/name has been successfully formatted. | |

| 16/10/26 03:30:07 INFO namenode.FSImageFormatProtobuf: Saving image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression | |

| 16/10/26 03:30:07 INFO namenode.FSImageFormatProtobuf: Image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 351 bytes saved in 0 seconds. | |

| 16/10/26 03:30:07 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0 | |

| 16/10/26 03:30:07 INFO util.ExitUtil: Exiting with status 0 | |

| 16/10/26 03:30:07 INFO namenode.NameNode: SHUTDOWN_MSG: | |

| /************************************************************ | |

| SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1 | |

| ************************************************************/ | |

| [root@localhost hadoop-2.6.0-cdh5.8.2]# |

| 截取后半部分, 这样就表示格式化完成 | |

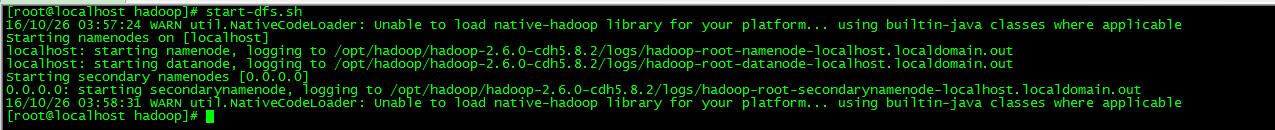

| 2. sbin/start-dfs.sh | |

| 启动 HDFS(NameNode,secondarynamenode,DataNode) | |

| [root@localhost hadoop]# start-dfs.sh | |

| 16/10/26 03:57:24 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| Starting namenodes on [localhost] | |

| localhost: starting namenode, logging to /opt/hadoop/hadoop-2.6.0-cdh5.8.2/logs/hadoop-root-namenode-localhost.localdomain.out | |

| localhost: starting datanode, logging to /opt/hadoop/hadoop-2.6.0-cdh5.8.2/logs/hadoop-root-datanode-localhost.localdomain.out | |

| Starting secondary namenodes [0.0.0.0] | |

| 0.0.0.0: starting secondarynamenode, logging to /opt/hadoop/hadoop-2.6.0-cdh5.8.2/logs/hadoop-root-secondarynamenode-localhost.localdomain.out | |

| 16/10/26 03:58:31 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable |

| 启动完成以后我们调用 "jps" 命令看是否真的启动成功了 | |

| [] | |

| 28442 Jps | |

| 27137 NameNode | |

| 27401 SecondaryNameNode | |

| 27246 DataNode |

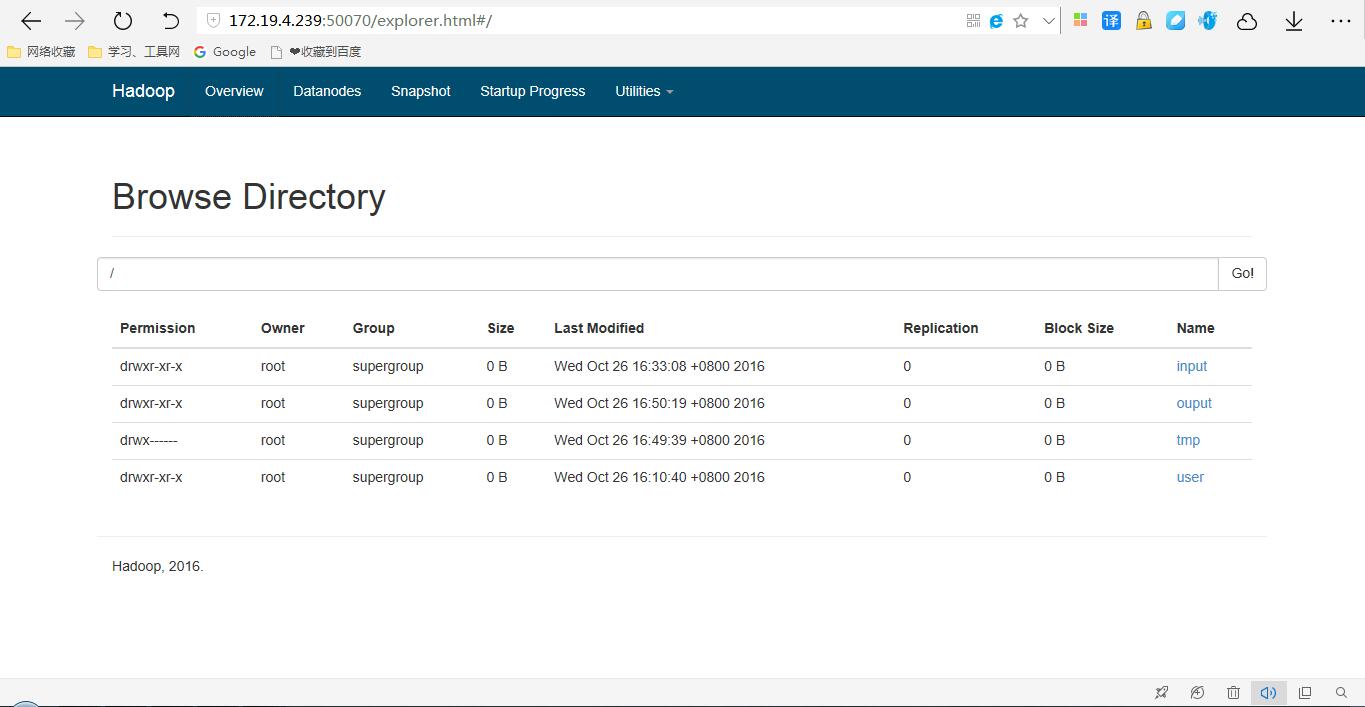

Web 浏览 HDFS 文件

| 到这一步我们可以通过 ip 地址访问 HDFS 文件系统。[root@localhost hadoop]# ifconfig | |

| inet **172.19.4.239** netmask 255.255.255.0 broadcast 172.19.4.255 | |

| inet6 fe80::250:56ff:fe8d:1144 prefixlen 64 scopeid 0x20<link> | |

| ether 00:50:56:8d:11:44 txqueuelen 1000 (Ethernet) | |

| RX packets 1638661 bytes 777256881 (741.2 MiB) | |

| RX errors 0 dropped 8125 overruns 0 frame 0 | |

| TX packets 333206 bytes 24964212 (23.8 MiB) | |

| TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0 | |

| 我这里的地址为 172.19.4.239 那么外部访问的地址是:http://172.19.4.239:50070(默认的端口号)/ 就可以访问。 |

命令操作 HDFS 文件

| 我们讲到了配置 --> 启动, 现在我们来讲讲对 HDFS 文件系统的操作, 操作 HDFS 在 linux 中的命令: | |

| [root@localhost hadoop]# hdfs dfs | |

| Usage: hadoop fs [generic options] | |

| [-appendToFile <localsrc> ... <dst>] | |

| [-cat [-ignoreCrc] <src> ...] | |

| [-checksum <src> ...] | |

| [-chgrp [-R] GROUP PATH...] | |

| [-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...] | |

| [-chown [-R] [OWNER][:[GROUP]] PATH...] | |

| [-copyFromLocal [-f] [-p] [-l] <localsrc> ... <dst>] | |

| [-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>] | |

| [-count [-q] [-h] [-v] <path> ...] | |

| [-cp [-f] [-p | -p[topax]] <src> ... <dst>] | |

| [-createSnapshot <snapshotDir> [<snapshotName>]] | |

| [-deleteSnapshot <snapshotDir> <snapshotName>] | |

| [-df [-h] [<path> ...]] | |

| [-du [-s] [-h] <path> ...] | |

| [-expunge] | |

| [-find <path> ... <expression> ...] | |

| [-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>] | |

| [-getfacl [-R] <path>] | |

| [-getfattr [-R] {-n name | -d} [-e en] <path>] | |

| [-getmerge [-nl] <src> <localdst>] | |

| [-help [cmd ...]] | |

| [-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [<path> ...]] | |

| [-mkdir [-p] <path> ...] | |

| [-moveFromLocal <localsrc> ... <dst>] | |

| [-moveToLocal <src> <localdst>] | |

| [-mv <src> ... <dst>] | |

| [-put [-f] [-p] [-l] <localsrc> ... <dst>] | |

| [-renameSnapshot <snapshotDir> <oldName> <newName>] | |

| [-rm [-f] [-r|-R] [-skipTrash] <src> ...] | |

| [-rmdir [--ignore-fail-on-non-empty] <dir> ...] | |

| [-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]] | |

| [-setfattr {-n name [-v value] | -x name} <path>] | |

| [-setrep [-R] [-w] <rep> <path> ...] | |

| [-stat [format] <path> ...] | |

| [-tail [-f] <file>] | |

| [-test -[defsz] <path>] | |

| [-text [-ignoreCrc] <src> ...] | |

| [-touchz <path> ...] | |

| [-usage [cmd ...]] | |

| Generic options supported are | |

| -conf <configuration file> specify an application configuration file | |

| -D <property=value> use value for given property | |

| -fs <local|namenode:port> specify a namenode | |

| -jt <local|resourcemanager:port> specify a ResourceManager | |

| -files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster | |

| -libjars <comma separated list of jars> specify comma separated jar files to include in the classpath. | |

| -archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines. | |

| The general command line syntax is | |

| bin/hadoop command [genericOptions] [commandOptions] | |

| ** 建立一个文件夹 ** | |

| [root@localhost hadoop]# hdfs dfs -mkdir /user | |

| 16/10/26 04:10:38 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| ** 查看是否创建成功 ** | |

| [root@localhost hadoop]# hdfs dfs -ls / | |

| 16/10/26 04:12:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| Found 1 items | |

| drwxr-xr-x - root supergroup 0 2016-10-26 04:10 /user | |

| 简单命令就讲到这里以后会更单独讲解 HDFS |

Mapreduce 案例

| 首先运行计算就要启动 mapreduce, 我们刚刚才启动了 start-dfs.sh (HDFS 文件系统), 所以不能计算那么我们把 mapreduce 的程序也启动起来。启动 mapreduce 命令 | |

| [] | |

| starting yarn daemons | |

| starting resourcemanager, logging to /opt/hadoop/hadoop-2.6.0-cdh5.8.2/logs/yarn-root-resourcemanager-localhost.localdomain.out | |

| localhost: starting nodemanager, logging to /opt/hadoop/hadoop-2.6.0-cdh5.8.2/logs/yarn-root-nodemanager-localhost.localdomain.out | |

| 启动完成以后我们调用 "jps" 命令看是否真的启动成功了 | |

| 28113 NodeManager | |

| 28011 ResourceManager | |

| 28442 Jps | |

| 27137 NameNode | |

| 27401 SecondaryNameNode | |

| 27246 DataNode | |

| 可以很明显的看到多出来两个程序。 |

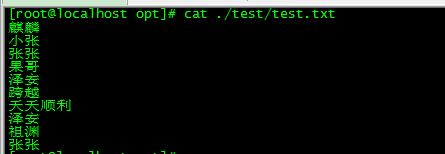

创建测试文件

| 创建一个测试的数据: | |

| vi /opt/test/test.txt | |

| 麒麟 | |

| 小张 | |

| 张张 | |

| 果哥 | |

| 泽安 | |

| 跨越 | |

| 天天顺利 | |

| 泽安 | |

| 祖渊 | |

| 张张 |

将测试文件上传到 HDFS

首先我们要在 HDFS 上再创建两个文件, 一个 input(输入) / ouput(输出)的文件夹。

| [] | |

| 16/10/26 04:30:33 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| 将测试数据上传到 "input" 文件夹 | |

| [] | |

| 16/10/26 04:33:03 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| 查看是上传成功 | |

| [] | |

| 16/10/26 04:34:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| 麒麟 | |

| 小张 | |

| 张张 | |

| 果哥 | |

| 泽安 | |

| 跨越 | |

| 天天顺利 | |

| 泽安 | |

| 祖渊 | |

| 张张 |

调用 Hadoop 自带的 WordCount 方法

| [root@localhost ~]# hadoop jar /opt/hadoop/hadoop-2.6.0-cdh5.8.2/share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.8.2.jar wordcount /input /ouput/test | |

| 16/10/26 04:49:37 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| 16/10/26 04:49:38 INFO client.RMProxy: Connecting to ResourceManager at localhost/127.0.0.1:8032 | |

| 16/10/26 04:49:42 INFO input.FileInputFormat: Total input paths to process : 1 | |

| 16/10/26 04:49:43 INFO mapreduce.JobSubmitter: number of splits:1 | |

| 16/10/26 04:49:44 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1477471653063_0001 | |

| 16/10/26 04:49:46 INFO impl.YarnClientImpl: Submitted application application_1477471653063_0001 | |

| 16/10/26 04:49:47 INFO mapreduce.Job: The url to track the job: http://localhost:8088/proxy/application_1477471653063_0001/ | |

| 16/10/26 04:49:47 INFO mapreduce.Job: Running job: job_1477471653063_0001 | |

| 16/10/26 04:50:21 INFO mapreduce.Job: Job job_1477471653063_0001 running in uber mode : false | |

| 16/10/26 04:50:21 INFO mapreduce.Job: map 0% reduce 0% | |

| 16/10/26 04:50:44 INFO mapreduce.Job: map 100% reduce 0% | |

| 16/10/26 04:51:04 INFO mapreduce.Job: map 100% reduce 100% | |

| 16/10/26 04:51:06 INFO mapreduce.Job: Job job_1477471653063_0001 completed successfully | |

| 16/10/26 04:51:06 INFO mapreduce.Job: Counters: 49 | |

| File System Counters | |

| FILE: Number of bytes read=116 | |

| FILE: Number of bytes written=232107 | |

| FILE: Number of read operations=0 | |

| FILE: Number of large read operations=0 | |

| FILE: Number of write operations=0 | |

| HDFS: Number of bytes read=177 | |

| HDFS: Number of bytes written=78 | |

| HDFS: Number of read operations=6 | |

| HDFS: Number of large read operations=0 | |

| HDFS: Number of write operations=2 | |

| Job Counters | |

| Launched map tasks=1 | |

| Launched reduce tasks=1 | |

| Data-local map tasks=1 | |

| Total time spent by all maps in occupied slots (ms)=18128 | |

| Total time spent by all reduces in occupied slots (ms)=17756 | |

| Total time spent by all map tasks (ms)=18128 | |

| Total time spent by all reduce tasks (ms)=17756 | |

| Total vcore-seconds taken by all map tasks=18128 | |

| Total vcore-seconds taken by all reduce tasks=17756 | |

| Total megabyte-seconds taken by all map tasks=18563072 | |

| Total megabyte-seconds taken by all reduce tasks=18182144 | |

| Map-Reduce Framework | |

| Map input records=10 | |

| Map output records=10 | |

| Map output bytes=116 | |

| Map output materialized bytes=116 | |

| Input split bytes=101 | |

| Combine input records=10 | |

| Combine output records=8 | |

| Reduce input groups=8 | |

| Reduce shuffle bytes=116 | |

| Reduce input records=8 | |

| Reduce output records=8 | |

| Spilled Records=16 | |

| Shuffled Maps =1 | |

| Failed Shuffles=0 | |

| Merged Map outputs=1 | |

| GC time elapsed (ms)=454 | |

| CPU time spent (ms)=3450 | |

| Physical memory (bytes) snapshot=306806784 | |

| Virtual memory (bytes) snapshot=3017633792 | |

| Total committed heap usage (bytes)=163450880 | |

| Shuffle Errors | |

| BAD_ID=0 | |

| CONNECTION=0 | |

| IO_ERROR=0 | |

| WRONG_LENGTH=0 | |

| WRONG_MAP=0 | |

| WRONG_REDUCE=0 | |

| File Input Format Counters | |

| Bytes Read=76 | |

| File Output Format Counters | |

| Bytes Written=78 | |

| 运行完成我们看看计算出来的结果: | |

| [root@localhost ~]# hdfs dfs -ls /ouput/test | |

| 16/10/26 04:53:22 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| Found 2 items | |

| -rw-r--r-- 1 root supergroup 0 2016-10-26 04:51 /ouput/test/_SUCCESS | |

| -rw-r--r-- 1 root supergroup 78 2016-10-26 04:51 /ouput/test/part-r-00000 | |

| [root@localhost ~]# hdfs dfs -cat /ouput/test/part-r-00000 | |

| 16/10/26 04:53:41 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| 天天顺利 1 | |

| 小张 1 | |

| 张张 2 | |

| 果哥 1 | |

| 泽安 2 | |

| 祖渊 1 | |

| 跨越 1 | |

| 麒麟 1 |

错误记录

| [root@localhost ~]# hadoop jar /opt/hadoop/hadoop-2.6.0-cdh5.8.2/share/hadoop/mapreduce2/hadoop-mapreduce-examples-2.6.0-cdh5.8.2.jar wordcount /input /ouput | |

| 16/10/26 04:40:32 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable | |

| 16/10/26 04:40:33 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032 | |

| 16/10/26 04:40:34 WARN security.UserGroupInformation: PriviledgedActionException as:root (auth:SIMPLE) cause:java.net.ConnectException: Call From localhost.localdomain/127.0.0.1 to localhost:9001 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused | |

| java.net.ConnectException: Call From localhost.localdomain/127.0.0.1 to localhost:9001 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused | |

| at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) | |

| at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57) | |

| at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) | |

| at java.lang.reflect.Constructor.newInstance(Constructor.java:526) | |

| at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:791) | |

| at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:731) | |

| at org.apache.hadoop.ipc.Client.call(Client.java:1475) | |

| at org.apache.hadoop.ipc.Client.call(Client.java:1408) | |

| at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:230) | |

| at com.sun.proxy.$Proxy9.getFileInfo(Unknown Source) | |

| at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:762) | |

| at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) | |

| at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) | |

| at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) | |

| at java.lang.reflect.Method.invoke(Method.java:606) | |

| at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:256) | |

| at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:104) | |

| at com.sun.proxy.$Proxy10.getFileInfo(Unknown Source) | |

| at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2121) | |

| at org.apache.hadoop.hdfs.DistributedFileSystem$19.doCall(DistributedFileSystem.java:1215) | |

| at org.apache.hadoop.hdfs.DistributedFileSystem$19.doCall(DistributedFileSystem.java:1211) | |

| at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81) | |

| at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1211) | |

| at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1412) | |

| at org.apache.hadoop.mapreduce.lib.output.FileOutputFormat.checkOutputSpecs(FileOutputFormat.java:145) | |

| at org.apache.hadoop.mapreduce.JobSubmitter.checkSpecs(JobSubmitter.java:270) | |

| at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:143) | |

| at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1307) | |

| at org.apache.hadoop.mapreduce.Job$10.run(Job.java:1304) | |

| at java.security.AccessController.doPrivileged(Native Method) | |

| at javax.security.auth.Subject.doAs(Subject.java:415) | |

| at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693) | |

| at org.apache.hadoop.mapreduce.Job.submit(Job.java:1304) | |

| at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1325) | |

| at org.apache.hadoop.examples.WordCount.main(WordCount.java:87) | |

| at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) | |

| at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) | |

| at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) | |

| at java.lang.reflect.Method.invoke(Method.java:606) | |

| at org.apache.hadoop.util.ProgramDriver$ProgramDescription.invoke(ProgramDriver.java:71) | |

| at org.apache.hadoop.util.ProgramDriver.run(ProgramDriver.java:144) | |

| at org.apache.hadoop.examples.ExampleDriver.main(ExampleDriver.java:74) | |

| at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) | |

| at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) | |

| at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) | |

| at java.lang.reflect.Method.invoke(Method.java:606) | |

| at org.apache.hadoop.util.RunJar.run(RunJar.java:221) | |

| at org.apache.hadoop.util.RunJar.main(RunJar.java:136) | |

| Caused by: java.net.ConnectException: Connection refused | |

| at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) | |

| at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:739) | |

| at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206) | |

| at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:530) | |

| at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:494) | |

| at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:614) | |

| at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:713) | |

| at org.apache.hadoop.ipc.Client$Connection.access$2900(Client.java:375) | |

| at org.apache.hadoop.ipc.Client.getConnection(Client.java:1524) | |

| at org.apache.hadoop.ipc.Client.call(Client.java:1447) | |

| ... 41 more | |

| [root@localhost ~]# | |

| 问题: 没有关闭防火墙 | |

| service iptables stop 关闭 | |

| chkconfig iptables off 永久关闭 |

下面关于 Hadoop 的文章您也可能喜欢,不妨看看:

Ubuntu14.04 下 Hadoop2.4.1 单机 / 伪分布式安装配置教程 http://www.linuxidc.com/Linux/2015-02/113487.htm

CentOS 安装和配置 Hadoop2.2.0 http://www.linuxidc.com/Linux/2014-01/94685.htm

Ubuntu 14.04 LTS 下安装 Hadoop 1.2.1(伪分布模式)http://www.linuxidc.com/Linux/2016-09/135406.htm

Ubuntu 上搭建 Hadoop 环境(单机模式 + 伪分布模式)http://www.linuxidc.com/Linux/2013-01/77681.htm

Ubuntu 下 Hadoop 环境的配置 http://www.linuxidc.com/Linux/2012-11/74539.htm

单机版搭建 Hadoop 环境图文教程详解 http://www.linuxidc.com/Linux/2012-02/53927.htm

Hadoop 2.6.0 HA 高可用集群配置详解 http://www.linuxidc.com/Linux/2016-08/134180.htm

Spark 1.5、Hadoop 2.7 集群环境搭建 http://www.linuxidc.com/Linux/2016-09/135067.htm

本文永久更新链接地址:http://www.linuxidc.com/Linux/2016-10/136498.htm