
I. Preparation

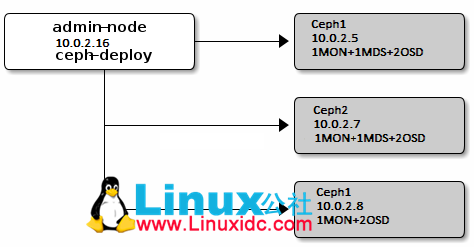

1. Environment

| Node | IP address | Daemons | Data disks |
| --- | --- | --- | --- |
| ceph1 | 10.0.2.5 | 1 MON + 1 MDS + 2 OSD | /dev/vdb, /dev/vdc |
| ceph2 | 10.0.2.7 | 1 MON + 1 MDS + 2 OSD | /dev/vdb, /dev/vdc |
| ceph3 | 10.0.2.8 | 1 MON + 1 RGW + 2 OSD | /dev/vdb, /dev/vdc |

2. Setting up the ceph-deploy admin node

Enable the EPEL repository on the admin node:

```
yum install -y yum-utils && \
  yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/ && \
  yum install --nogpgcheck -y epel-release && \
  rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7 && \
  rm /etc/yum.repos.d/dl.fedoraproject.org*
```

Create a yum repository file for Ceph:

```
vi /etc/yum.repos.d/ceph.repo
```

```
[Ceph]
name=Ceph packages for $basearch
baseurl=http://download.ceph.com/rpm-infernalis/el7/$basearch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1

[Ceph-noarch]
name=Ceph noarch packages
baseurl=http://download.ceph.com/rpm-infernalis/el7/noarch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1

[ceph-source]
name=Ceph source packages
baseurl=http://download.ceph.com/rpm-infernalis/el7/SRPMS
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1
```
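You can also create the repository file without an interactive editor. The sketch below assumes the same three sections and URLs as the file above, with the Ceph release name turned into a parameter. `write_ceph_repo` is a hypothetical helper name. The target path is also a parameter, so you can write to a scratch file and inspect the result first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the Ceph yum repo file shown above.
# $1 = release name used in the rpm-<release> URL (default: infernalis)
# $2 = target file (default: /etc/yum.repos.d/ceph.repo)
write_ceph_repo() {
  local release="${1:-infernalis}"
  local target="${2:-/etc/yum.repos.d/ceph.repo}"
  local base="http://download.ceph.com/rpm-${release}/el7"

  # \$basearch is escaped so that yum, not the shell, expands it.
  cat > "$target" <<EOF
[Ceph]
name=Ceph packages for \$basearch
baseurl=${base}/\$basearch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1

[Ceph-noarch]
name=Ceph noarch packages
baseurl=${base}/noarch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1

[ceph-source]
name=Ceph source packages
baseurl=${base}/SRPMS
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1
EOF
}
```

Run as root: `write_ceph_repo infernalis`. Alternatively, run `write_ceph_repo infernalis /tmp/ceph.repo` first to review the output.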

```
yum update && yum install ceph-deploy
```

3. Preparing the Ceph nodes

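Install and start NTP on every node. The monitors need synchronized clocks, and clock skew between them makes the cluster report warnings.

```
yum -y install ntp ntpdate ntp-doc
systemctl enable ntpd
systemctl start ntpd
```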

Add every node to `/etc/hosts`:

```
vi /etc/hosts
```

```
10.0.2.5   ceph1
10.0.2.7   ceph2
10.0.2.8   ceph3
10.0.2.16  ceph-mgmt
```
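The hosts entries above must go onto several machines. The following sketch appends them idempotently, so re-running it adds nothing that is already present. `add_host_entries` is a hypothetical helper, and the `IP=NAME` argument format is an assumption made for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" lines to a hosts file, skipping any
# NAME that already appears as a host-name field on a non-comment line.
# Usage: add_host_entries FILE IP=NAME [IP=NAME ...]
add_host_entries() {
  local hosts_file="$1"; shift
  local pair ip name
  for pair in "$@"; do
    ip="${pair%%=*}"     # text before '='
    name="${pair#*=}"    # text after '='
    # awk exits 0 if the name is found in field 2 or later, 1 otherwise.
    if ! awk -v n="$name" '
          /^[[:space:]]*#/ { next }
          { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
          END { exit !found }' "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Example, run as root on each node:

`add_host_entries /etc/hosts 10.0.2.5=ceph1 10.0.2.7=ceph2 10.0.2.8=ceph3 10.0.2.16=ceph-mgmt`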

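On each Ceph node, create a deployment user `dpadmin` with passwordless sudo. Give it a password as well, because `ssh-copy-id` needs one to install the key:

```
# useradd -d /home/dpadmin -m dpadmin
# passwd dpadmin
# echo "dpadmin ALL = (root) NOPASSWD:ALL" | tee /etc/sudoers.d/dpadmin
# chmod 0440 /etc/sudoers.d/dpadmin
```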
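The sudoers drop-in above can also be written atomically by a script. The sketch below assumes you want the `requiretty` exemption for the deploy user in the same drop-in, rather than in the main sudoers file edited through `visudo` as described later in this section. `write_sudoers_dropin` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write <dir>/<user> with passwordless sudo and a
# per-user "!requiretty" default (an assumption, see the lead-in).
# Usage: write_sudoers_dropin USER [DIR]   (DIR defaults to /etc/sudoers.d)
write_sudoers_dropin() {
  local user="$1" dir="${2:-/etc/sudoers.d}"
  local file="$dir/$user" tmp="$dir/.$user.tmp"
  # sudo ignores files in sudoers.d whose names contain a ".", so it never
  # reads the temporary file while it is being written.
  rm -f "$tmp"
  printf '%s ALL = (root) NOPASSWD:ALL\nDefaults:%s !requiretty\n' \
    "$user" "$user" > "$tmp" || return 1
  chmod 0440 "$tmp" || return 1   # same mode as the chmod above
  mv -f "$tmp" "$file"            # rename into place in one step
}
```

Run it as root with `write_sudoers_dropin dpadmin`. Afterwards, `visudo -cf /etc/sudoers.d/dpadmin` checks the file's syntax.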

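On the admin node (ceph-mgmt), create the same user and switch to it. Generate an SSH key pair and copy the public key to every Ceph node. Leave the key passphrase empty so that ceph-deploy can log in without prompting:

```
# useradd -d /home/dpadmin -m dpadmin
# su - dpadmin
$ ssh-keygen
$ ssh-copy-id dpadmin@ceph1
$ ssh-copy-id dpadmin@ceph2
$ ssh-copy-id dpadmin@ceph3
```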
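The Ceph preflight documentation recommends an `~/.ssh/config` on the admin node. With it, ceph-deploy logs in as the deploy user without `--username` on every call. The sketch below prints those stanzas. `ssh_config_for` is a hypothetical helper, and it assumes that host aliases and host names are identical, as in `/etc/hosts` above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one ~/.ssh/config stanza per host.
# Usage: ssh_config_for USER HOST [HOST ...]
ssh_config_for() {
  local user="$1"; shift
  local host
  for host in "$@"; do
    printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
  done
}
```

Example, as dpadmin on the admin node:

`ssh_config_for dpadmin ceph1 ceph2 ceph3 >> ~/.ssh/config && chmod 600 ~/.ssh/config`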

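Open the ports that Ceph uses:

- 6789/tcp for the monitors.
- 6800-7300/tcp for the OSD and MDS daemons.
- 7480/tcp for the RGW gateway.

firewalld writes port ranges with a hyphen, not a colon. Reload firewalld afterwards so that the `--permanent` rules also take effect in the running configuration:

```
firewall-cmd --zone=public --add-port=6789/tcp --permanent
firewall-cmd --zone=public --add-port=7480/tcp --permanent
firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent
firewall-cmd --reload
```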
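Not every node runs every daemon (see the environment table), so you might prefer to open only the ports a node's roles need. In the sketch below, `ceph_ports`, `open_ceph_ports` and `run_fw` are hypothetical helpers. The role-to-port map follows the list above, and `DRY_RUN=1` prints the `firewall-cmd` calls instead of running them.

```shell
#!/usr/bin/env bash
# Map a Ceph role to the port (range) it needs, per the list above.
ceph_ports() {
  case "$1" in
    mon)     echo "6789/tcp" ;;
    osd|mds) echo "6800-7300/tcp" ;;   # OSDs and MDSs share this range
    rgw)     echo "7480/tcp" ;;
    *)       echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Run firewall-cmd, or just print the call when DRY_RUN is set.
run_fw() {
  if [ -n "${DRY_RUN:-}" ]; then echo "firewall-cmd $*"; else firewall-cmd "$@"; fi
}

# Usage: open_ceph_ports ROLE [ROLE ...]
open_ceph_ports() {
  local seen=" " role port
  for role in "$@"; do
    port=$(ceph_ports "$role") || return 1
    case "$seen" in *" $port "*) continue ;; esac   # skip duplicate ranges
    seen="$seen$port "
    run_fw --zone=public --add-port="$port" --permanent
  done
  run_fw --reload   # apply the permanent rules to the runtime config
}
```

For example, ceph1 and ceph2 (MON + MDS + OSD) would run `open_ceph_ports mon mds osd`, and ceph3 would run `open_ceph_ports mon rgw osd`.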

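Run `visudo` and add the following line. It lets the deploy user run sudo over SSH sessions that have no terminal:

```
Defaults:dpadmin !requiretty
```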

II. Creating the Ceph storage cluster

1. On the admin node, create a directory to hold the cluster's configuration and keyring files.

```
mkdir my-cluster
cd my-cluster
```

2. Create the new cluster, which generates its configuration file and monitor keyring

```
$ ceph-deploy new ceph{1,2,3}
$ ls
ceph.conf  ceph.log  ceph.mon.keyring
```

Edit `ceph.conf` (`vi ceph.conf`). Add `osd pool default size = 2` to the `[global]` section so that new pools keep two replicas instead of the default three:

```
[global]
fsid = c10f9ac8-6524-48ea-bb52-257936e42b35
mon_initial_members = ceph1, ceph2, ceph3
mon_host = 10.0.2.5,10.0.2.7,10.0.2.8
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
filestore_xattr_use_omap = true
osd pool default size = 2
```
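The `ceph.conf` edit above can be scripted. The sketch below sets the replica count in place: it replaces an existing `osd pool default size` line (written with spaces or underscores), or inserts one directly after `[global]`. `set_pool_default_size` is a hypothetical helper, and it assumes GNU sed (`-i` and the one-line `a` command).

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "osd pool default size" in ceph.conf.
# Usage: set_pool_default_size CONF_FILE SIZE   (requires GNU sed)
set_pool_default_size() {
  local conf="$1" size="$2"
  local key='^osd[ _]pool[ _]default[ _]size[[:space:]]*='
  if grep -q "$key" "$conf"; then
    # Replace the existing value.
    sed -i "s/${key}.*/osd pool default size = ${size}/" "$conf"
  else
    # Insert a new line right after the [global] header.
    sed -i "/^\[global]/a osd pool default size = ${size}" "$conf"
  fi
}
```

Usage, inside `my-cluster`: `set_pool_default_size ceph.conf 2`.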

3. Run `ceph-deploy install` to install the Ceph packages on each node

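Install Ceph on the three cluster nodes and on the admin node, then check the installed version:

```
$ ceph-deploy install ceph{1,2,3}
$ ceph-deploy install ceph-mgmt
$ ceph -v
```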
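To confirm that all nodes ended up on the same release, compare the version that `ceph -v` reports on each one. In the sketch below, `ceph_version_number` and `same_ceph_version` are hypothetical helpers. The parser assumes the output line starts with `ceph version <number>`.

```shell
#!/usr/bin/env bash
# Print the version number from `ceph -v` output read on stdin.
ceph_version_number() {
  awk '$1 == "ceph" && $2 == "version" { print $3; exit }'
}

# Read "HOST VERSION" lines on stdin; succeed only if every host reported
# a version and all versions are identical.
same_ceph_version() {
  awk '$2 == "" { bad = 1; next }
       { v[$2] = 1 }
       END { n = 0; for (k in v) n++; exit (bad || n != 1) }'
}
```

Usage from the admin node, relying on the SSH access set up earlier:

```
for h in ceph1 ceph2 ceph3; do
  printf '%s %s\n' "$h" "$(ssh "$h" ceph -v | ceph_version_number)"
done | same_ceph_version && echo "all nodes run the same Ceph version"
```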

4. Initialize the monitors and gather the keyrings

```
$ cd my-cluster
$ ceph-deploy mon create-initial
$ ls
ceph.bootstrap-mds.keyring  ceph.bootstrap-rgw.keyring  ceph.conf                  ceph.mon.keyring
ceph.bootstrap-osd.keyring  ceph.client.admin.keyring   ceph.log
```
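Before moving on, you can check that `mon create-initial` gathered every keyring in the listing above. `missing_keyrings` is a hypothetical helper. It prints each file that is missing or empty, and returns non-zero if any are absent.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report keyrings missing from a ceph-deploy directory.
# Usage: missing_keyrings [DIR]   (DIR defaults to the current directory)
missing_keyrings() {
  local dir="${1:-.}" f rc=0
  for f in ceph.client.admin.keyring ceph.mon.keyring \
           ceph.bootstrap-osd.keyring ceph.bootstrap-mds.keyring \
           ceph.bootstrap-rgw.keyring; do
    if [ ! -s "$dir/$f" ]; then   # -s: file exists and is non-empty
      echo "$f"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `missing_keyrings ~/my-cluster || echo "re-run ceph-deploy gatherkeys"`.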

5. Add OSDs

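First zap each data disk. Zapping deletes the disk's partition table and all data on it. The general form, followed by an example:

```
ceph-deploy disk zap {osd-server-name}:{disk-name}
ceph-deploy disk zap osdserver1:sdb
```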

List the disks on each node and check that `/dev/vdb` and `/dev/vdc` appear:

```
$ ceph-deploy disk list ceph1
$ ceph-deploy disk list ceph2
$ ceph-deploy disk list ceph3
```

Output for ceph1:

```
[ceph1][DEBUG] /dev/vdb other, unknown
[ceph1][DEBUG] /dev/vdc other, unknown
```

Create one OSD on each data disk of each node:

```
$ ceph-deploy osd create ceph1:/dev/vdb ceph2:/dev/vdb ceph3:/dev/vdb
$ ceph-deploy osd create ceph1:/dev/vdc ceph2:/dev/vdc ceph3:/dev/vdc
```
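With more nodes or disks, typing every `HOST:DISK` pair by hand gets error-prone. The sketch below builds the argument list in the same order as the two commands above: all hosts for the first disk, then all hosts for the second. `osd_targets` is a hypothetical helper. It produces the `HOST:DISK` form accepted by ceph-deploy 1.x; ceph-deploy 2.x instead takes `osd create --data DEVICE HOST`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print HOST:/dev/DISK pairs, disk-major order.
# Usage: osd_targets "DISK [DISK ...]" HOST [HOST ...]
osd_targets() {
  local disks="$1"; shift
  local disk host out=""
  for disk in $disks; do        # word-splitting of the disk list is intended
    for host in "$@"; do
      out="$out $host:/dev/$disk"
    done
  done
  echo "${out# }"               # drop the leading space
}
```

Usage, equivalent to the two commands above: `ceph-deploy osd create $(osd_targets "vdb vdc" ceph1 ceph2 ceph3)`.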

6. Copy the configuration file and the admin keyring to every node

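Push the configuration file and admin keyring to the cluster nodes:

```
$ ceph-deploy admin ceph{1,2,3}
```

On the admin node, copy both files into `/etc/ceph` and make the keyring readable:

```
# cp ceph.client.admin.keyring ceph.conf /etc/ceph
# chmod +r /etc/ceph/ceph.client.admin.keyring
```

On each Ceph node, make the admin keyring readable as well:

```
sudo chmod +r /etc/ceph/ceph.client.admin.keyring
```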

7. Monitoring the Ceph cluster

```
$ ceph
ceph> health
HEALTH_OK
ceph> status
    cluster ef472f1b-8967-4041-826c-18bd31118a9a
     health HEALTH_OK
     monmap e1: 3 mons at {ceph1=10.0.2.5:6789/0,ceph2=10.0.2.7:6789/0,ceph3=10.0.2.8:6789/0}
            election epoch 4, quorum 0,1,2 ceph1,ceph2,ceph3
     osdmap e34: 6 osds: 6 up, 6 in
            flags sortbitwise
      pgmap v82: 64 pgs, 1 pools, 0 bytes data, 0 objects
            203 MB used, 569 GB / 569 GB avail
                  64 active+clean
ceph> quorum_status
{"election_epoch":4,"quorum":[0,1,2],"quorum_names":["ceph1","ceph2","ceph3"],"quorum_leader_name":"ceph1","monmap":{"epoch":1,"fsid":"ef472f1b-8967-4041-826c-18bd31118a9a","modified":"0.000000","created":"0.000000","mons":[{"rank":0,"name":"ceph1","addr":"10.0.2.5:6789\/0"},{"rank":1,"name":"ceph2","addr":"10.0.2.7:6789\/0"},{"rank":2,"name":"ceph3","addr":"10.0.2.8:6789\/0"}]}}
ceph> mon_status
{"name":"ceph2","rank":1,"state":"peon","election_epoch":4,"quorum":[0,1,2],"outside_quorum":[],"extra_probe_peers":["10.0.2.5:6789\/0","10.0.2.8:6789\/0"],"sync_provider":[],"monmap":{"epoch":1,"fsid":"ef472f1b-8967-4041-826c-18bd31118a9a","modified":"0.000000","created":"0.000000","mons":[{"rank":0,"name":"ceph1","addr":"10.0.2.5:6789\/0"},{"rank":1,"name":"ceph2","addr":"10.0.2.7:6789\/0"},{"rank":2,"name":"ceph3","addr":"10.0.2.8:6789\/0"}]}}
ceph> osd tree
ID WEIGHT  TYPE NAME      UP/DOWN REWEIGHT PRIMARY-AFFINITY
-1 0.55618 root default
-2 0.18539     host ceph1
 0 0.09270         osd.0       up  1.00000          1.00000
 3 0.09270         osd.3       up  1.00000          1.00000
-3 0.18539     host ceph2
 1 0.09270         osd.1       up  1.00000          1.00000
 4 0.09270         osd.4       up  1.00000          1.00000
-4 0.18539     host ceph3
 2 0.09270         osd.2       up  1.00000          1.00000
 5 0.09270         osd.5       up  1.00000          1.00000
```
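The status above shows a single pool with 64 placement groups spread over 6 OSDs. To size new pools, the Ceph placement-group documentation gives a rule of thumb: total PGs ≈ (number of OSDs × 100) / replica count, rounded up to the next power of two. For this cluster that is (6 × 100) / 2 = 300, which rounds up to 512. `suggested_pg_num` below is a hypothetical helper that only evaluates this heuristic. It is not a substitute for Ceph's own PG guidance.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rule-of-thumb PG count.
# Usage: suggested_pg_num NUM_OSDS REPLICA_COUNT
suggested_pg_num() {
  local osds="$1" size="$2"
  local target=$(( (osds * 100 + size - 1) / size ))   # ceiling division
  local pg=1
  while [ "$pg" -lt "$target" ]; do                    # next power of two
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}
```

Usage: `suggested_pg_num 6 2` prints 512.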

From a normal shell, `ceph status` or its short form `ceph -s` prints the same summary:

```
$ ceph status
$ ceph -s
```
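After creating OSDs or daemons, the cluster may take a while to settle, and scripts often need to wait for it. The sketch below polls `ceph health` until the output starts with `HEALTH_OK`. `wait_for_health_ok` is a hypothetical helper. The `CEPH_CMD` variable is an assumption added so that a wrapper or a stub can replace the `ceph` binary.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until `ceph health` reports HEALTH_OK.
# Usage: wait_for_health_ok [TRIES] [INTERVAL_SECONDS]
wait_for_health_ok() {
  local tries="${1:-30}" interval="${2:-10}" i status=""
  for (( i = 1; i <= tries; i++ )); do
    status=$(${CEPH_CMD:-ceph} health 2>/dev/null) || status=""
    case "$status" in
      HEALTH_OK*) return 0 ;;
    esac
    if (( i < tries )); then sleep "$interval"; fi
  done
  echo "cluster not healthy after $tries checks: ${status:-no answer}" >&2
  return 1
}
```

Usage: `wait_for_health_ok 60 5 && echo "cluster is healthy"`.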

OSD status:

```
$ ceph osd stat
$ ceph osd dump
$ ceph osd tree
```

Monitor status:

```
$ ceph mon stat
$ ceph mon dump
$ ceph quorum_status
```
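To check from a script that all three monitors are in quorum, extract `quorum_names` from the compact JSON that `ceph quorum_status` prints, as in the interactive session above. `quorum_names` is a hypothetical helper that uses only grep, sed and tr. It relies on the single-line output format and is not a general JSON parser; jq is more robust if it is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names in "quorum_names", one per line,
# from quorum_status JSON read on stdin.
quorum_names() {
  grep -o '"quorum_names":\[[^]]*]' \
    | sed -e 's/^"quorum_names":\[//' -e 's/]$//' \
    | tr ',' '\n' \
    | tr -d '"'
}
```

Usage:

`[ "$(ceph quorum_status | quorum_names | wc -l)" -eq 3 ] || echo "a monitor is out of quorum"`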

MDS status:

```
$ ceph mds stat
$ ceph mds dump
```

8. Create the metadata servers (MDS)

```
$ ceph-deploy mds create ceph1 ceph2
```

9. Create an RGW instance

```
$ ceph-deploy rgw create ceph3
```
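Once the gateway is running, it should answer HTTP on port 7480 (the port opened earlier). An anonymous GET on its root normally returns an S3 `ListAllMyBucketsResult` XML document. `rgw_is_up` is a hypothetical helper built on that check. `CURL_CMD` is an assumption that lets you substitute a stub for `curl`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the RGW at HOST:PORT answers with an S3
# bucket listing. Usage: rgw_is_up HOST [PORT]   (PORT defaults to 7480)
rgw_is_up() {
  local host="$1" port="${2:-7480}" body
  body=$(${CURL_CMD:-curl} -s --max-time 5 "http://${host}:${port}/") || return 1
  case "$body" in
    *ListAllMyBucketsResult*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: `rgw_is_up ceph3 && echo "RGW on ceph3 is serving requests"`.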

III. Using Ceph RBD block storage on a client

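1. Prepare the test machine

2. Install the Ceph packages

3. Copy the configuration file to the test machine, then make the admin keyring readable:

```
sudo chmod +r /etc/ceph/ceph.client.admin.keyring
```

4. Create a block device image on the test machine

5. Map the image to a block device on the test machine

6. Create a file system

7. Mount and test the block device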

```
$ sudo mkdir /mnt/ceph-block-device
$ sudo mount /dev/rbd/rbd/foo /mnt/ceph-block-device
$ cd /mnt/ceph-block-device
```
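Steps 4 to 6 list no commands. The sketch below shows one possible client sequence, following the Ceph block device quick start. The image is assumed to be `foo` in the default `rbd` pool, which matches the `/dev/rbd/rbd/foo` path above. The 4096 MB size, the ext4 file system, the 100 MB `dd` write test and the helper names `run` and `rbd_client_setup` are all assumptions. `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper for steps 4-7.
# Usage: rbd_client_setup [IMAGE] [SIZE_MB] [MOUNT_POINT]
rbd_client_setup() {
  local image="${1:-foo}" size_mb="${2:-4096}" mnt="${3:-/mnt/ceph-block-device}"
  local dev="/dev/rbd/rbd/${image}"                  # udev path for pool "rbd"
  run rbd create "$image" --size "$size_mb"          # step 4: size in MB
  run sudo rbd map "$image" --name client.admin      # step 5: map to /dev/rbdN
  run sudo mkfs.ext4 -m0 "$dev"                      # step 6: no reserved blocks
  run sudo mkdir -p "$mnt"                           # step 7: mount ...
  run sudo mount "$dev" "$mnt"
  # ... and test: write 100 MB, flushed to the image before dd exits.
  run sudo dd if=/dev/zero of="$mnt/testfile" bs=1M count=100 conv=fdatasync
}
```

Preview first with `DRY_RUN=1 rbd_client_setup`, then run `rbd_client_setup` on the test machine.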


Permanent link to this article: http://www.linuxidc.com/Linux/2016-11/137094.htm