
HDFS Architecture

- HDFS Architecture(HDFS 架构)
  - Introduction(简介)
  - Assumptions and Goals(假设和目标)
    - Hardware Failure(硬件失效是常态)
    - Streaming Data Access(支持流式访问)
    - Large Data Sets(大数据集)
    - Simple Coherency Model(简单一致性模型)
    - “Moving Computation is Cheaper than Moving Data”(移动计算比移动数据更划算)
    - Portability Across Heterogeneous Hardware and Software Platforms(轻便的跨异构的软硬件平台)
  - NameNode and DataNodes
  - The File System Namespace(文件系统命名空间)
  - Data Replication(数据副本)
    - Replica Placement: The First Baby Steps(副本选址:第一次小尝试)
    - Replica Selection(副本选择)
    - Safemode(安全模式)
  - The Persistence of File System Metadata(文件系统元数据的持久化)
  - The Communication Protocols(通讯协议)
  - Robustness(鲁棒性)
    - Data Disk Failure, Heartbeats and Re-Replication(数据磁盘失效、心跳机制和重新复制)
    - Cluster Rebalancing(集群调整)
    - Data Integrity(数据完整性)
    - Metadata Disk Failure(元数据磁盘失效)
    - Snapshots(快照)
  - Data Organization(数据结构)
    - Data Blocks(数据块)
    - Staging(分段)
    - Replication Pipelining(复制管道)
  - Accessibility(可访问性)
    - FS Shell(FS命令行)
    - DFSAdmin(用户管理命令行)
    - Browser Interface(浏览器接口)
  - Space Reclamation(空间回收)
    - File Deletes and Undeletes(文件删除和恢复)
    - Decrease Replication Factor(减少副本因子)
  - References

Introduction

The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on commodity hardware. It has many similarities with existing distributed file systems. However, the differences from other distributed file systems are significant. HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware. HDFS provides high throughput access to application data and is suitable for applications that have large data sets. HDFS relaxes a few POSIX requirements to enable streaming access to file system data. HDFS was originally built as infrastructure for the Apache Nutch web search engine project. HDFS is part of the Apache Hadoop Core project. The project URL is http://hadoop.apache.org/.

Hadoop 分布式文件系统是一个设计可以运行在廉价硬件的分布式系统。它跟目前存在的分布式系统有很多相似之处。然而,不同之处才是重要的。HDFS 是一个高容错和可部署在廉价机器上的系统。HDFS 提供高吞吐数据能力适合处理大量数据。HDFS 松散了一些需求使得支持流式传输。HDFS 原本是为 Apache Nutch 的搜索引擎设计的,现在是 Apache Hadoop 项目的子项目。

Assumptions and Goals

Hardware Failure(硬件失效)

Hardware failure is the norm rather than the exception. An HDFS instance may consist of hundreds or thousands of server machines, each storing part of the file system’s data. The fact that there are a huge number of components and that each component has a non-trivial probability of failure means that some component of HDFS is always non-functional. Therefore, detection of faults and quick, automatic recovery from them is a core architectural goal of HDFS.

硬件失效是常态而不是意外。HDFS 实例可能包含上百成千个服务器,每个节点存储着文件系统的部分数据。事实是集群有大量的节点,而每个节点都存在一定的概率失效也就意味着 HDFS 的一些组成部分经常失效。因此,检测错误、快速和自动恢复是 HDFS 的核心架构。

Streaming Data Access(流式访问)

Applications that run on HDFS need streaming access to their data sets. They are not general purpose applications that typically run on general purpose file systems. HDFS is designed more for batch processing rather than interactive use by users. The emphasis is on high throughput of data access rather than low latency of data access. POSIX imposes many hard requirements that are not needed for applications that are targeted for HDFS. POSIX semantics in a few key areas has been traded to increase data throughput rates.

应用运行在 HDFS 需要允许流式访问它的数据集。这不是普通的应用程序运行在普通的文件系统上。HDFS 是被设计用于批量处理而非用户交互。设计的重点是高吞吐量访问而不是低延迟数据访问。POSIX 语义在一些关键领域是用来提高吞吐量。

Large Data Sets(大数据集)

Applications that run on HDFS have large data sets. A typical file in HDFS is gigabytes to terabytes in size. Thus, HDFS is tuned to support large files. It should provide high aggregate data bandwidth and scale to hundreds of nodes in a single cluster. It should support tens of millions of files in a single instance.

运行在 HDFS 的应用程序有大数据集。一个典型文档在 HDFS 是 GB 到 TB 级别的。因此,HDFS 是用来支持大文件。它应该提供高带宽和可扩展到上百节点在一个集群中。它应该支持在一个实例中有以千万计的文件数。

Simple Coherency Model(简单一致性模型)

HDFS applications need a write-once-read-many access model for files. A file once created, written, and closed need not be changed except for appends and truncates. Appending content to the end of a file is supported, but a file cannot be updated at an arbitrary point. This assumption simplifies data coherency issues and enables high throughput data access. A MapReduce application or a web crawler application fits perfectly with this model.

HDFS 应用需要一个一次写入多次读取的文件访问模型。一个文件一旦创建,写入和关系都不需要改变。支持在文件的末端进行追加数据而不支持在文件的任意位置进行修改。这个假设简化了数据一致性问题和支持高吞吐量的访问。支持在数据尾部增加内容而不支持在任意位置更新。一个 Map/Reduce 任务或者 web 爬虫完美匹配了这个模型。

“Moving Computation is Cheaper than Moving Data”(移动计算比移动数据更划算)

A computation requested by an application is much more efficient if it is executed near the data it operates on. This is especially true when the size of the data set is huge. This minimizes network congestion and increases the overall throughput of the system. The assumption is that it is often better to migrate the computation closer to where the data is located rather than moving the data to where the application is running. HDFS provides interfaces for applications to move themselves closer to where the data is located.

如果应用的计算在它要操作的数据附近执行那就会更高效。尤其是数据集非常大的时候。这将最大限度地减少网络拥堵和提高系统的吞吐量。这个假设是在应用运行中经常移动计算到要操作的数据附近比移动数据数据更好 HDFS 提供接口让应用去移动计算到数据所在的位置。

Portability Across Heterogeneous Hardware and Software Platforms(轻便的跨异构的软硬件平台)

HDFS has been designed to be easily portable from one platform to another. This facilitates widespread adoption of HDFS as a platform of choice for a large set of applications.

HDFS 被设计成可轻便从一个平台跨到另一个平台。这促使 HDFS 被广泛地采用作为应用的大数据集系统。

NameNode and DataNodes

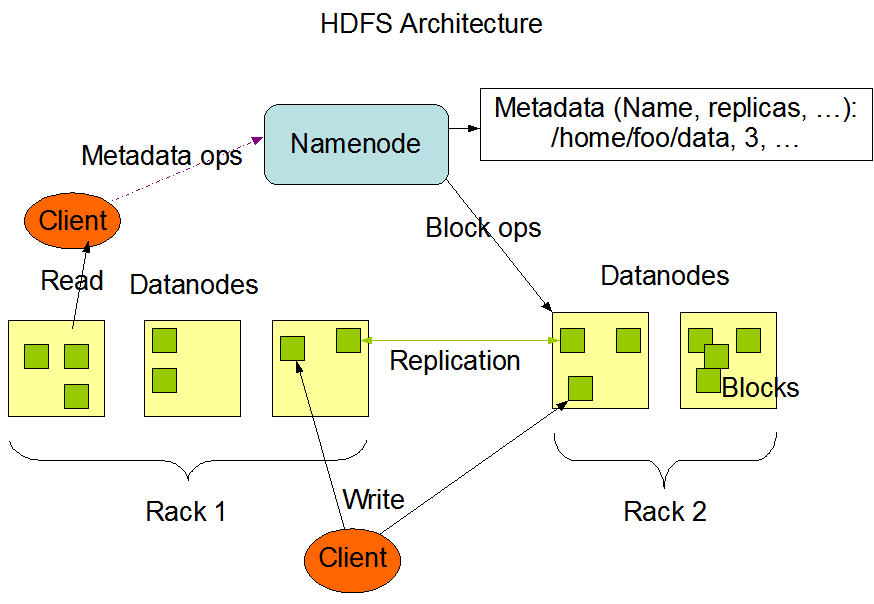

HDFS has a master/slave architecture. An HDFS cluster consists of a single NameNode, a master server that manages the file system namespace and regulates access to files by clients. In addition, there are a number of DataNodes, usually one per node in the cluster, which manage storage attached to the nodes that they run on. HDFS exposes a file system namespace and allows user data to be stored in files. Internally, a file is split into one or more blocks and these blocks are stored in a set of DataNodes. The NameNode executes file system namespace operations like opening, closing, and renaming files and directories. It also determines the mapping of blocks to DataNodes. The DataNodes are responsible for serving read and write requests from the file system’s clients. The DataNodes also perform block creation, deletion, and replication upon instruction from the NameNode.

The NameNode and DataNode are pieces of software designed to run on commodity machines. These machines typically run a GNU/Linux operating system (OS). HDFS is built using the Java language; any machine that supports Java can run the NameNode or the DataNode software. Usage of the highly portable Java language means that HDFS can be deployed on a wide range of machines. A typical deployment has a dedicated machine that runs only the NameNode software. Each of the other machines in the cluster runs one instance of the DataNode software. The architecture does not preclude running multiple DataNodes on the same machine but in a real deployment that is rarely the case.

The existence of a single NameNode in a cluster greatly simplifies the architecture of the system. The NameNode is the arbitrator and repository for all HDFS metadata. The system is designed in such a way that user data never flows through the NameNode.

HDFS 使用主 / 从架构。一个 HDFS 集群包含一个 NameNode,一个服务器管理系统的命名空间和并控制客户端对文件的访问。此外,有许多的 DataNode,通常是集群中的每个节点,用来管理它们所运行的节点的内存。HDFS 暴露文件系统的命名空间和允许用户数据存储在文件中。在系统内部,一个文件被切割成一个或者多个块而这些块将储存在一系列的 DataNode 中。NameNode 执行文件系统的命名空间操作例如打开、关闭和从命名文件和路径。它也指定数据块对应的 DataNode。DataNode 负责提供客户端对文件的读写服务。DataNode 也负责执行 NameNode 的创建、删除和复制指令。

NameNode 和 DatNode 是设计运行在商业电脑的软件框架。这些机器通常是运行着 GNU/Linux 操作系统。HDFS 是用 Java 语言构建的;任何机器只要支持 Java 就可以运行 NameNode 或者 DataNode。使用 Java 这种高可移植性的语言就意味着 HDFS 可以部署在大范围的机器上。部署通常是在专用的机器上只运行 NameNode 软件。集群中的其他每个机器运行着单个 DaaNode 实例。架构并不排除在同一台机器部署多个 DataNode,但是这种情况比较少见。

集群中只存在一个 NameNode 实例极大地简化系统的架构。NameNode 是 HDFS 元数据的仲裁者和储存库。系统的这种设计保证了用户数据永远不会流经 NameNode。

The File System Namespace (文件系统命名空间)

HDFS supports a traditional hierarchical file organization. A user or an application can create directories and store files inside these directories. The file system namespace hierarchy is similar to most other existing file systems; one can create and remove files, move a file from one directory to another, or rename a file. HDFS supports user quotas and access permissions. HDFS does not support hard links or soft links. However, the HDFS architecture does not preclude implementing these features.

The NameNode maintains the file system namespace. Any change to the file system namespace or its properties is recorded by the NameNode. An application can specify the number of replicas of a file that should be maintained by HDFS. The number of copies of a file is called the replication factor of that file. This information is stored by the NameNode.

HDFS 支持传统的层级文件结构。用户或应用可以创建文件目录和存储文件在这些目录下。文件系统的命名空间层级跟其他已经在存在的文件系统很相像;可以创建和删除文件,将文件从一个目录移动到另一个目录或者重命名。HDFS 支持用户限制和访问权限。HDFS 不支持硬关联或者软关联。然而,HDFS 架构不排除实现这些特性。

NameNode 维持文件系统的命名空间。文件系统的命名空间或者它的属性的任何改变都被 NameNode 记录着。应用可以指定 HDFS 维持多少个文件副本。文件的拷贝数目称为文件的复制因子。这个信息将会被 NameNode 记录。

Data Replication(数据副本)

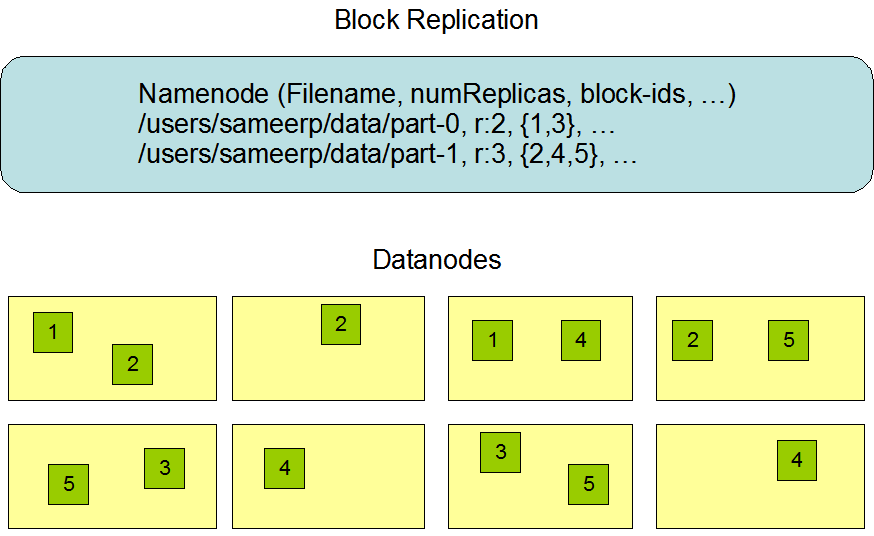

HDFS is designed to reliably store very large files across machines in a large cluster. It stores each file as a sequence of blocks. The blocks of a file are replicated for fault tolerance. The block size and replication factor are configurable per file.

All blocks in a file except the last block are the same size. Since support for variable-length blocks was added to append and hsync, users can also start a new block without filling the last block up to the configured block size.

An application can specify the number of replicas of a file. The replication factor can be specified at file creation time and can be changed later. Files in HDFS are write-once (except for appends and truncates) and have strictly one writer at any time.

The NameNode makes all decisions regarding replication of blocks. It periodically receives a Heartbeat and a Blockreport from each of the DataNodes in the cluster. Receipt of a Heartbeat implies that the DataNode is functioning properly. A Blockreport contains a list of all blocks on a DataNode.

HDFS 是被设计成在一个集群中跨机器可靠地存储大量文件。它将每个文件存储为一序列的块。文件的块被复制保证容错。每个文件块的大小和复制因子都是可配置的。

一个文件的所有的块除了最后一个都是同样大小的,同时用户在可以在一个支持可变长度的块被同步添加之后启动一个新的块而没有配置最后一个块的大小。

应用可以指定文件的副本数目。复制因子可以在文件创建时指定,在后面时间修改。HDFS 中的文件一旦写入(除了添加和截断)就 必须在任何时间严格遵守一个写入者。

NameNode 控制着关于 blocks 复制的所有决定。它周期性地接收集群中 DataNode 发送的心跳和块报告。收到心跳意味着 DataNode 在正常地运行着。一个块报告包含着 DataNode 上所有块信息的集合。

Replica Placement: The First Baby Steps(副本选址:第一次小尝试)

The placement of replicas is critical to HDFS reliability and performance. Optimizing replica placement distinguishes HDFS from most other distributed file systems. This is a feature that needs lots of tuning and experience. The purpose of a rack-aware replica placement policy is to improve data reliability, availability, and network bandwidth utilization. The current implementation for the replica placement policy is a first effort in this direction. The short-term goals of implementing this policy are to validate it on production systems, learn more about its behavior, and build a foundation to test and research more sophisticated policies.

Large HDFS instances run on a cluster of computers that commonly spread across many racks. Communication between two nodes in different racks has to go through switches. In most cases, network bandwidth between machines in the same rack is greater than network bandwidth between machines in different racks.

The NameNode determines the rack id each DataNode belongs to via the process outlined in Hadoop Rack Awareness. A simple but non-optimal policy is to place replicas on unique racks. This prevents losing data when an entire rack fails and allows use of bandwidth from multiple racks when reading data. This policy evenly distributes replicas in the cluster which makes it easy to balance load on component failure. However, this policy increases the cost of writes because a write needs to transfer blocks to multiple racks.

For the common case, when the replication factor is three, HDFS’s placement policy is to put one replica on one node in the local rack, another on a different node in the local rack, and the last on a different node in a different rack. This policy cuts the inter-rack write traffic which generally improves write performance. The chance of rack failure is far less than that of node failure; this policy does not impact data reliability and availability guarantees. However, it does reduce the aggregate network bandwidth used when reading data since a block is placed in only two unique racks rather than three. With this policy, the replicas of a file do not evenly distribute across the racks. One third of replicas are on one node, two thirds of replicas are on one rack, and the other third are evenly distributed across the remaining racks. This policy improves write performance without compromising data reliability or read performance.

The current, default replica placement policy described here is a work in progress.

副本的选址对 HDFS 的可靠性和性能是起到关键作用的。优化的副本选址使得 HDFS 有别于大多数分布式文件系统。这是一个需要大量调试和经验的特性。机架感知副本配置策略的目的是提高可靠性、可用性和网络带宽的利用率。目前的副本放置策略实现是第一次在这个方向上的努力。这个策略实现的短期目标是在生产环境上验证,更多地了解它的行为表现,建立一个基础用来测试和研究更好的策略。

运行在集群计算机的大型 HDFS 实例一般是分布在许多机架上。两个不同机架上的节点的通讯必须经过交换机。在大多数情况下,同一个机架上的不同机器之间的网络带宽要优于不同机架上的机器的。NameNode 通过在 Hadoop Rack Awarenes 概述过程来确定每个 DataNode 属于哪个机架 ID。一个简单但不是最佳的策略上是将副本部署在不同的机架上。这将避免一个机架失效时丢失数据和允许使用带宽来跨机架读取数据。这个策略平衡地将副本分布在集群中以平衡组件失效负载。然而,这个策略增加了写的负担因为一个块数据需要在多个机架之间传输。

通常情况下,当复制因子为 3 时,HDFS 的副本放置策略是将一个副本放在本机架的一个节点上,将另一个副本放在本机架的另一个节点,最后一个副本放在不同机架的不同节点上。该策略减少机架内部的传输以提高写的性能。机架失效的概率要远低于节点失效;这个策略不会影响数据可靠性和可用性的保证。然而,它确实会减少数据读取时网络带宽的使用因为数据块只放置在两个单独的机架而不是三个。在这个策略当中,副本的分布不是均匀的。三分一个的副本放置在一个节点上,三分之二的副本放置在一个机架上,而另外三分之一均匀分布在剩余的机架上。这个策略提高了写性能而不影响数据可靠性和读性能。

目前,默认的副本放置策略描述的是正在进行的工作。
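The three-replica placement described above can be sketched in a few lines. This is a minimal illustration that follows the wording of the text (local rack, a second node in the local rack, then a node in a different rack), not the NameNode's actual code; the function name `place_replicas`, the `topology` dictionary, and the choice of always taking the first eligible node are assumptions made for the example.

```python
def place_replicas(topology, writer_node):
    """Pick three DataNodes for a block, following the policy in the text.

    `topology` maps a rack id to the list of node names in that rack
    (a hypothetical structure used only for this sketch).
    """
    # Find the rack that hosts the writer.
    local_rack = next(rack for rack, nodes in topology.items()
                      if writer_node in nodes)
    # Replica 1: a node in the local rack (here, the writer's own node).
    first = writer_node
    # Replica 2: a different node in the same rack.
    second = next(node for node in topology[local_rack] if node != first)
    # Replica 3: a node in a different rack.
    remote_rack = next(rack for rack, nodes in topology.items()
                       if rack != local_rack and nodes)
    third = topology[remote_rack][0]
    return [first, second, third]


topology = {"rack1": ["dn1", "dn2"], "rack2": ["dn3", "dn4"]}
print(place_replicas(topology, "dn1"))
```

With this layout two of the three replicas share a rack, so only one copy crosses a rack boundary during the write, which is the write-traffic saving the text refers to.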

Replica Selection(副本选择)

To minimize global bandwidth consumption and read latency, HDFS tries to satisfy a read request from a replica that is closest to the reader. If there exists a replica on the same rack as the reader node, then that replica is preferred to satisfy the read request. If HDFS cluster spans multiple data centers, then a replica that is resident in the local data center is preferred over any remote replica.

为了最大限度地减少全局带宽消耗和读取延迟,HDFS 试图让读取者的读取需求离副本最近。如果存在一个副本在客户端节点所在的同个机架上,那么这个副本是满足读取需求的首选。如果 HDFS 集群横跨多个数据中心,那么在本地数据中心的副本将是优先于其他远程副本。
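The preference order described above (same rack first, then the local data center, then anything remote) amounts to picking the replica with the smallest network distance. The sketch below assumes each location is a `(data_center, rack, node)` tuple and uses made-up distance values; both are illustrative choices, not HDFS data structures.

```python
def distance(reader, replica):
    """Return a hypothetical distance score; smaller means closer."""
    if reader == replica:
        return 0  # same node
    if reader[:2] == replica[:2]:
        return 1  # same data center and same rack
    if reader[0] == replica[0]:
        return 2  # same data center, different rack
    return 3      # different data center


def choose_replica(reader, replicas):
    """Pick the replica closest to the reader."""
    return min(replicas, key=lambda replica: distance(reader, replica))


reader = ("dc1", "rack1", "node1")
replicas = [("dc2", "rack9", "node7"),
            ("dc1", "rack2", "node5"),
            ("dc1", "rack1", "node3")]
print(choose_replica(reader, replicas))
```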

Safemode(安全模式)

On startup, the NameNode enters a special state called Safemode. Replication of data blocks does not occur when the NameNode is in the Safemode state. The NameNode receives Heartbeat and Blockreport messages from the DataNodes. A Blockreport contains the list of data blocks that a DataNode is hosting. Each block has a specified minimum number of replicas. A block is considered safely replicated when the minimum number of replicas of that data block has checked in with the NameNode. After a configurable percentage of safely replicated data blocks checks in with the NameNode (plus an additional 30 seconds), the NameNode exits the Safemode state. It then determines the list of data blocks (if any) that still have fewer than the specified number of replicas. The NameNode then replicates these blocks to other DataNodes.

在启动时,NameNode 进入一个特殊的状态称之为安全模式。当 NameNode 进入安全模式之后数据块的复制将不会发生。NameNode 接收来自 DataNode 的心跳和数据块报告。数据块报告包含正在运行的 DataNode 上的数据块信息集合。每个快都指定了最小副本数。一个数据块如果被 NameNode 检查确保它满足最小副本数,那么它被认为是安全的。在 NameNode 检查配置的一定比例的数据块安全性检查(加上 30s),NameNode 将会退出安全模式。然后它将确认有一组(如果可能)还没有达到指定数目副本的数据块。NameNode 将这些数据块复制到其他 DataNode。
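The exit condition for Safemode can be expressed as a simple ratio check. The following sketch assumes the NameNode tracks how many replicas of each block have been reported so far; the function name, the dictionary layout, and the default values for `min_replicas` and `threshold` are illustrative assumptions, and the additional 30-second wait mentioned in the text is left out.

```python
def can_leave_safemode(reported_replicas, min_replicas=1, threshold=0.999):
    """Decide whether enough blocks are safely replicated.

    `reported_replicas` maps a block id to the number of replicas that
    DataNodes have reported for it so far (hypothetical structure).
    """
    if not reported_replicas:
        return True  # no blocks to wait for
    safe_blocks = sum(1 for count in reported_replicas.values()
                      if count >= min_replicas)
    return safe_blocks / len(reported_replicas) >= threshold


print(can_leave_safemode({"blk_1": 1, "blk_2": 0}))
print(can_leave_safemode({"blk_1": 3, "blk_2": 1}))
```

Blocks that are safe but still below their target replication factor do not block the exit; as the text explains, they are re-replicated after Safemode ends.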

The Persistence of File System Metadata(文件系统元数据的持久性)

The HDFS namespace is stored by the NameNode. The NameNode uses a transaction log called the EditLog to persistently record every change that occurs to file system metadata. For example, creating a new file in HDFS causes the NameNode to insert a record into the EditLog indicating this. Similarly, changing the replication factor of a file causes a new record to be inserted into the EditLog. The NameNode uses a file in its local host OS file system to store the EditLog. The entire file system namespace, including the mapping of blocks to files and file system properties, is stored in a file called the FsImage. The FsImage is stored as a file in the NameNode’s local file system too.

The NameNode keeps an image of the entire file system namespace and file Blockmap in memory. This key metadata item is designed to be compact, such that a NameNode with 4 GB of RAM is plenty to support a huge number of files and directories. When the NameNode starts up, it reads the FsImage and EditLog from disk, applies all the transactions from the EditLog to the in-memory representation of the FsImage, and flushes out this new version into a new FsImage on disk. It can then truncate the old EditLog because its transactions have been applied to the persistent FsImage. This process is called a checkpoint. In the current implementation, a checkpoint only occurs when the NameNode starts up. Work is in progress to support periodic checkpointing in the near future.

The DataNode stores HDFS data in files in its local file system. The DataNode has no knowledge about HDFS files. It stores each block of HDFS data in a separate file in its local file system. The DataNode does not create all files in the same directory. Instead, it uses a heuristic to determine the optimal number of files per directory and creates subdirectories appropriately. It is not optimal to create all local files in the same directory because the local file system might not be able to efficiently support a huge number of files in a single directory. When a DataNode starts up, it scans through its local file system, generates a list of all HDFS data blocks that correspond to each of these local files and sends this report to the NameNode: this is the Blockreport.

NameNode 存储着 HDFS 的命名空间。NmaeNode 使用一个称之为 EditLog 的事务日志持续地记录发生在文件系统元数据的每一个改变。例如,HDFS 中创建一个文件会导致 NameNode 在 EditLog 中插入一条记录来表示。相同的,改变一个文件的复制因子也会导致 EditLog 中添加一条新的记录。NameNode 在它本地的系统中用一个文件来存储 EditLog。整个文件系统命名空间,包括 blocks 的映射关系和文件系统属性,将储存在一个叫 FsImage 的文件。FsImage 也是储存在 NameNode 所在的本地文件系统中。

NameNode 在内存中保存着整个文件系统命名空间的图像和文件映射关系。这个关键元数据项设计紧凑,以致一个有着 4GB RAM 的 NameNode 足够支持大量的文件和目录。当 NameNode 启动时,它将从磁盘中读取 FsImage 和 EditLog,将 EditLog 中所有汇报更新到内存中的 FsImage 中,刷新输出一个新版本的 FsImage 到磁盘中。然后缩短 EditLog 因为它的事务汇报已经更新到持久化的 FsImage 中。这个过程称之为检查站。在目前这个版本中,只有当 NameNode 启动时会执行一次。在不久的将来会在任务执行过程也运行 CheckPoint。

DataNode 将 HDFS 数据储存在他本地的文件系统中。DataNode 对于 HDFS 文件一无所知。它将每一块 HDFS 数据存储为单独的文件在它的本地文件系统中。DataNode 不会再相同的目录之下创建所有文件。相反,使用一个启发式的方法来确定每个目录的最优文件数目和恰当地创建子目录。在同一个目录下创建所有本地文件并不是最优的因为本地文化系统或许不是高效地支持在一个目录下有大量文件。当一个 DataNode 启动时,它将扫描本地文件系统,生成一个对应本地文件的所有 HDFS 数据块列表和将它发送给 NameNode,这就是 Blockreport。
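The checkpoint process described above (load the FsImage, replay the EditLog, write a new FsImage, truncate the log) can be illustrated with an in-memory toy model. The operation names and the dictionary layout below are invented for the example and do not reflect the real on-disk formats.

```python
import copy


def checkpoint(fsimage, edit_log):
    """Replay every EditLog transaction onto a copy of the FsImage.

    Returns the new image and the (now empty) edit log. `fsimage` maps a
    path to its properties; each log entry is an (operation, path, value)
    tuple. Both layouts are hypothetical.
    """
    namespace = copy.deepcopy(fsimage)
    for operation, path, value in edit_log:
        if operation == "create":
            namespace[path] = {"replication": value}
        elif operation == "set_replication":
            namespace[path]["replication"] = value
        elif operation == "delete":
            namespace.pop(path, None)
        else:
            raise ValueError(f"unknown operation: {operation}")
    # Every transaction is now part of the image, so the log can be truncated.
    return namespace, []


old_image = {"/a": {"replication": 3}}
log = [("create", "/b", 3), ("set_replication", "/a", 2), ("delete", "/b", None)]
new_image, new_log = checkpoint(old_image, log)
print(new_image, new_log)
```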

The Communication Protocols (通讯协议)

All HDFS communication protocols are layered on top of the TCP/IP protocol. A client establishes a connection to a configurable TCP port on the NameNode machine. It speaks the ClientProtocol with the NameNode. The DataNodes talk to the NameNode using the DataNode Protocol. A Remote Procedure Call (RPC) abstraction wraps both the ClientProtocol and the DataNode Protocol. By design, the NameNode never initiates any RPCs. Instead, it only responds to RPC requests issued by DataNodes or clients.

所有的 HDFS 通讯协议的底层都是 TCP/IP 协议。客户端通过 NameNode 机器上 TCP 端口与之建立连接。它使用客户端协议与 NameNode 通讯。DataNode 使用 DataNode 协议与 NameNode 通讯。RPC 抽象封装了客户端协议和 DataNode 协议。有意地,NameNode 从不会主动发起任何 RPC,相反,它只回复 DatsNodes 和客户端发来的 RPC 请求。

Robustness(鲁棒性)

The primary objective of HDFS is to store data reliably even in the presence of failures. The three common types of failures are NameNode failures, DataNode failures and network partitions.

HDFS 的主要目标是在失效出现时保证储存的数据的可靠性。通常有这三种失效,分别为 NameNode 失效,DataNode 失效和网络分裂(一种在系统的任何两个组之间的所有网络连接同时发生故障后所出现的情况)

Data Disk Failure, Heartbeats and Re-Replication(数据磁盘失效,心跳机制和重新复制)

Each DataNode sends a Heartbeat message to the NameNode periodically. A network partition can cause a subset of DataNodes to lose connectivity with the NameNode. The NameNode detects this condition by the absence of a Heartbeat message. The NameNode marks DataNodes without recent Heartbeats as dead and does not forward any new IO requests to them. Any data that was registered to a dead DataNode is not available to HDFS any more. DataNode death may cause the replication factor of some blocks to fall below their specified value. The NameNode constantly tracks which blocks need to be replicated and initiates replication whenever necessary. The necessity for re-replication may arise due to many reasons: a DataNode may become unavailable, a replica may become corrupted, a hard disk on a DataNode may fail, or the replication factor of a file may be increased.

The time-out to mark DataNodes dead is conservatively long (over 10 minutes by default) in order to avoid a replication storm caused by state flapping of DataNodes. For performance-sensitive workloads, users can configure a shorter interval after which DataNodes are marked as stale, and avoid stale nodes when reading and/or writing.

每个节点周期性地发送心跳信息给 NameNode。网络分裂会导致一部分 DataNode 失去与 NameNode 的连接。NameNode 通过心跳信息的丢失发现这个情况。NameNode 将最近没有心跳信息的 DataNode 标记为死亡并且不再转发任何 IO 请求给他们。在已经死亡的 DataNode 注册的任何数据在 HDFS 将不能再使用。DataNode 死亡会导致部分数据块的复制因子小于指定的数目。NameNode 时常地跟踪数据块是否需要被复制和当必要的时候启动复制。重新复制的必要性会因为许多原因而提升:DataNode 不可用,一个副本被破坏,DataNode 的磁盘失效或者一个文件的复制因子增加了。

将 DatNode 标记为死亡的超时时间适当地加长(默认超过 10 分钟)是为了避免 DataNode 状态改变引起的复制风暴。用户可以设置更短的时间间隔来标记 DataNode 为失效和 避免失效的节点在读和 / 或写配置性能敏感的工作负载
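Heartbeat-based failure detection and the resulting re-replication work can be sketched as two small functions. The timeout constant, function names, and data layouts are assumptions for illustration; the text only states that the default time-out is "over 10 minutes".

```python
DEAD_TIMEOUT_SECONDS = 630  # illustrative value, a little over 10 minutes


def find_dead_datanodes(last_heartbeat, now, timeout=DEAD_TIMEOUT_SECONDS):
    """Return the DataNodes whose last Heartbeat is older than `timeout`."""
    return sorted(node for node, seen in last_heartbeat.items()
                  if now - seen > timeout)


def under_replicated_blocks(block_locations, dead_nodes, replication_factor):
    """Map each under-replicated block to the number of replicas it is missing."""
    dead = set(dead_nodes)
    missing = {}
    for block, nodes in block_locations.items():
        live = [node for node in nodes if node not in dead]
        if len(live) < replication_factor:
            missing[block] = replication_factor - len(live)
    return missing


heartbeats = {"dn1": 1000, "dn2": 100, "dn3": 990, "dn4": 995}
dead = find_dead_datanodes(heartbeats, now=1000)
blocks = {"blk_1": ["dn1", "dn2", "dn3"], "blk_2": ["dn1", "dn3", "dn4"]}
print(dead, under_replicated_blocks(blocks, dead, replication_factor=3))
```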

Cluster Rebalancing(集群调整)

The HDFS architecture is compatible with data rebalancing schemes. A scheme might automatically move data from one DataNode to another if the free space on a DataNode falls below a certain threshold. In the event of a sudden high demand for a particular file, a scheme might dynamically create additional replicas and rebalance other data in the cluster. These types of data rebalancing schemes are not yet implemented.

HDFS 架构兼容数据调整方案。一个方案可能自动地将数据从一个 DataNode 移动到另一个距离上限值还有多余空间的 DataNode。对一个特别的文件突然发生的需求,一个方案可以动态地创建额外的副本和重新调整集群中的数据。这些类型的调整方案目前还没有实现。

Data Integrity(数据完整性)

It is possible that a block of data fetched from a DataNode arrives corrupted. This corruption can occur because of faults in a storage device, network faults, or buggy software. The HDFS client software implements checksum checking on the contents of HDFS files. When a client creates an HDFS file, it computes a checksum of each block of the file and stores these checksums in a separate hidden file in the same HDFS namespace. When a client retrieves file contents it verifies that the data it received from each DataNode matches the checksum stored in the associated checksum file. If not, then the client can opt to retrieve that block from another DataNode that has a replica of that block.

从一个失效的 DataNode 取得数据块是可行的。这种失效会发生是因为存储设备故障、网络故障或者软件 bug。HDFS 客户端软件实现了校验和用来校验 HDFS 文件的内容。当客户端创建一个 HDFS 文件,他会计算文件的每一个数据块的校验和并且将这些校验和储存在 HDFS 命名空间中一个单独的隐藏的文件当中。当客户端从 DataNode 获得数据时会对对其进行校验和,并且将之与储存在相关校验和文件中的校验和进行匹配。如果没有,客户端会选择从另一个拥有该数据块副本的 DataNode 上恢复数据。
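The verify-then-fall-back behaviour described above can be sketched with a per-block checksum. The example uses CRC32 from Python's standard library as a stand-in checksum and models each replica as a byte string; the function names and the tiny block size are assumptions for illustration only.

```python
import zlib


def block_checksums(data, block_size):
    """Compute one CRC32 checksum per block when the file is created."""
    return [zlib.crc32(data[start:start + block_size])
            for start in range(0, len(data), block_size)]


def read_block(index, replicas, checksums, block_size):
    """Return block `index` from the first replica whose checksum matches."""
    start = index * block_size
    for replica in replicas:
        block = replica[start:start + block_size]
        if zlib.crc32(block) == checksums[index]:
            return block
        # Checksum mismatch: try the next DataNode holding a replica.
    raise IOError(f"all replicas of block {index} are corrupted")


original = b"hello hdfs blocks"
checksums = block_checksums(original, block_size=8)
corrupted = b"hellX hdfs blocks"
print(read_block(0, [corrupted, original], checksums, block_size=8))
```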

Metadata Disk Failure(元数据的磁盘故障)

The FsImage and the EditLog are central data structures of HDFS. A corruption of these files can cause the HDFS instance to be non-functional. For this reason, the NameNode can be configured to support maintaining multiple copies of the FsImage and EditLog. Any update to either the FsImage or EditLog causes each of the FsImages and EditLogs to get updated synchronously. This synchronous updating of multiple copies of the FsImage and EditLog may degrade the rate of namespace transactions per second that a NameNode can support. However, this degradation is acceptable because even though HDFS applications are very data intensive in nature, they are not metadata intensive. When a NameNode restarts, it selects the latest consistent FsImage and EditLog to use.

Another option to increase resilience against failures is to enable High Availability using multiple NameNodes either with a shared storage on NFS or using a distributed edit log (called Journal). The latter is the recommended approach.

FsImage 和 EditLog 是 HDFS 架构的中心数据。这些数据的失效会引起 HDFS 实例失效。因为这个原因,NameNode 可以配置用来维持 FsImage 和 EditLog 的多个副本。FsImage 或 EditLog 的任何改变会引起每一份 FsImage 和 EditLog 同步更新。同步更新多份 FsImge 和 EditLog 降低 NameNode 能支持的每秒更新命名空间事务的频率。然而,频率的降低是可以接受尽管 HDFS 应用本质上是对数据敏感,而不是对元数据敏感。当一个 NameNode 重新启动,他会选择最新的 FsImage 和 EditLog 来使用。

Snapshots(快照)

Snapshots support storing a copy of data at a particular instant of time. One usage of the snapshot feature may be to roll back a corrupted HDFS instance to a previously known good point in time.

快照支持存储一个特点时间的的数据副本。一个使用可能快照功能的情况是一个失效的 HDFS 实例想要回滚到之前一个已知是正确的时间。

Data Organization(数据组织)

Data Blocks(数据块)

HDFS is designed to support very large files. Applications that are compatible with HDFS are those that deal with large data sets. These applications write their data only once but they read it one or more times and require these reads to be satisfied at streaming speeds. HDFS supports write-once-read-many semantics on files. A typical block size used by HDFS is 128 MB. Thus, an HDFS file is chopped up into 128 MB chunks, and if possible, each chunk will reside on a different DataNode.

HDFS 是被设计来支持大量文件的。一个应用兼容 HDFS 是那些处理大数据集的应用。这些应用都是一次写入数据但可一次或多次读取和需要这些读取满足一定的流式速度。HDFS 支持一次写入多次读取文件语义。HDFS 中通常的数据块大小为 128M。因此,一个 HDFS 文件会被切割成 128M 大小的块,如果可以的话,每一个大块都会分属于一个不同的 DataNode。
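The way a file is chopped into blocks is plain integer arithmetic. The sketch below assumes the 128 MB block size quoted in the text; the function name `split_into_blocks` is hypothetical and not part of any HDFS API.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB, the typical block size quoted above


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes in bytes of the blocks a file would occupy.

    Every block is full except possibly the last one.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)
    return sizes


# A 300 MB file occupies two full blocks plus one 44 MB block.
print(split_into_blocks(300 * 1024 * 1024))
```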

Staging(分段)

A client request to create a file does not reach the NameNode immediately. In fact, initially the HDFS client caches the file data into a local buffer. Application writes are transparently redirected to this local buffer. When the local file accumulates data worth over one chunk size, the client contacts the NameNode. The NameNode inserts the file name into the file system hierarchy and allocates a data block for it. The NameNode responds to the client request with the identity of the DataNode and the destination data block. Then the client flushes the chunk of data from the local buffer to the specified DataNode. When a file is closed, the remaining un-flushed data in the local buffer is transferred to the DataNode. The client then tells the NameNode that the file is closed. At this point, the NameNode commits the file creation operation into a persistent store. If the NameNode dies before the file is closed, the file is lost.

The above approach has been adopted after careful consideration of target applications that run on HDFS. These applications need streaming writes to files. If a client writes to a remote file directly without any client side buffering, the network speed and the congestion in the network impacts throughput considerably. This approach is not without precedent. Earlier distributed file systems, e.g. AFS, have used client side caching to improve performance. A POSIX requirement has been relaxed to achieve higher performance of data uploads.

一个要求创建一个文件的客户端请求将不会立刻到达 NameNode。事实上,HDFS 首先将文件缓存在本地缓存中。应用的写入将透明地定位到这个本地缓存中。当本地缓存累积数据超过一个块(128M)的大小,客户端将会连接 NameNode。NameNode 将文件名插入到文件系统层级中并为它分配一个数据块。NameNode 将 DataNode 的 id 和数据块的地址回复给客户端。然后客户端建本地缓存的数据刷新到指定的 DataNode。当文件关闭后,本地剩余的未刷新的数据将会传输到 DataNode。然后客户端告诉 NameNode 文件已经关闭。在这个点,NameNode 提交创建文件操作在一个持久化仓库。如果在文件关闭之前 NameNode 死亡了,文件会丢失。

在仔细地研究运行在 HDFS 的目标程序之后上面的方法被采用了。应用需要流式写入文件。如果客户端在没有进行任何缓存的情况下直接写入远程文件,那么网络速度和网络拥堵会影响吞吐量。这个方法不是没有先例。早期的分布式文件系统,例如 AFS,已经使用客户端缓存来提高性能。POSIX 已经满足轻松实现高性能的文件上传。
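The client-side staging described in this section can be modelled as a buffer that is flushed one chunk at a time. The class below is a toy model: the name `StagingClient`, the `flushed` list standing in for data sent to a DataNode, and the tiny chunk size are all assumptions for illustration.

```python
class StagingClient:
    """Toy model of client-side buffering before data reaches a DataNode."""

    def __init__(self, chunk_size):
        self.chunk_size = chunk_size
        self.buffer = bytearray()
        self.flushed = []  # chunks that would have been sent to a DataNode

    def write(self, data):
        """Buffer application writes and flush every full chunk."""
        self.buffer.extend(data)
        while len(self.buffer) >= self.chunk_size:
            self.flushed.append(bytes(self.buffer[:self.chunk_size]))
            del self.buffer[:self.chunk_size]

    def close(self):
        """Send whatever is left in the buffer when the file is closed."""
        if self.buffer:
            self.flushed.append(bytes(self.buffer))
            self.buffer.clear()


client = StagingClient(chunk_size=4)
client.write(b"abc")      # still below one chunk, nothing is sent
client.write(b"defghi")   # two full chunks are flushed
client.close()            # the remainder is flushed on close
print(client.flushed)
```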

Replication Pipelining(复制管道 / 复制流水线)

When a client is writing data to an HDFS file, its data is first written to a local buffer as explained in the previous section. Suppose the HDFS file has a replication factor of three. When the local buffer accumulates a chunk of user data, the client retrieves a list of DataNodes from the NameNode. This list contains the DataNodes that will host a replica of that block. The client then flushes the data chunk to the first DataNode. The first DataNode starts receiving the data in small portions, writes each portion to its local repository and transfers that portion to the second DataNode in the list. The second DataNode, in turn starts receiving each portion of the data block, writes that portion to its repository and then flushes that portion to the third DataNode. Finally, the third DataNode writes the data to its local repository. Thus, a DataNode can be receiving data from the previous one in the pipeline and at the same time forwarding data to the next one in the pipeline. Thus, the data is pipelined from one DataNode to the next.

当一个客户端写入一个 HDFS 文件,它的数据首先是写到它的本地缓存当中(这在前面一节已经解释了)。假设 HDFS 文件的复制因子为 3。当客户端累积了大量的用户数据,客户端将会从 NameNode 取得 DataNode 列表。这个列表包含着该块的副本宿主 DataNode。然偶客户端会将数据刷新到第一个 DataNode。第一个 DataNode 接收一小部分数据,将每一小块数据写到本地仓库然后将这小块数据传到列表的第二个 DataNode。第二个 DataNode 开始接收每一小块数据并写到本地数据仓库然后将数据传输到第三个 DataNode,最后,第三个 DataNode 将数据写到本地仓库。因此,一个 Dataode 能够在管道中接收到从上一个节点接收到数据并且在同一时间将数据转发给下一个节点。因此,数据在管道中从一个 DataNode 传输到下一个。
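The pipeline described above can be simulated by forwarding a block in small portions through an ordered list of DataNodes. This sketch runs sequentially, whereas real DataNodes receive and forward concurrently; the function name, the in-memory stores, and the event log are assumptions made for illustration.

```python
def pipeline_write(block, datanodes, portion_size):
    """Simulate pipelined replication of one block.

    Returns the bytes stored per DataNode and the order in which each
    (portion index, DataNode) write happened.
    """
    stores = {node: bytearray() for node in datanodes}
    events = []
    offsets = range(0, len(block), portion_size)
    for index, offset in enumerate(offsets):
        portion = block[offset:offset + portion_size]
        # The client sends the portion to the first DataNode; each DataNode
        # writes it locally and then forwards it to the next one in the list.
        for node in datanodes:
            stores[node] += portion
            events.append((index, node))
    return {node: bytes(data) for node, data in stores.items()}, events


stores, events = pipeline_write(b"abcdefghij", ["dn1", "dn2", "dn3"], 4)
print(stores)
print(events[:4])
```

The event log shows that the first DataNode starts on the second portion as soon as the first portion has travelled down the list, rather than waiting for the whole block to be replicated.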

Accessibility(可访问性)

HDFS can be accessed from applications in many different ways. Natively, HDFS provides a FileSystem Java API for applications to use. A C language wrapper for this Java API and a REST API are also available. In addition, an HTTP browser can also be used to browse the files of an HDFS instance. By using the NFS gateway, HDFS can be mounted as part of the client’s local file system.

应用可以通过很多方式访问 HDFS。HDFS 本身提供 FileSystem Java API 给应用使用。C 语言封装 JAVA API 和 REST API 也是可以使用。此外,HTTP 浏览器也可以用来浏览 HDFS 实例的文件。通过使用 NFS 网关,HDFS 能被安装作为本地文件系统的一部分。

FS Shell

HDFS allows user data to be organized in the form of files and directories. It provides a commandline interface called FS shell that lets a user interact with the data in HDFS. The syntax of this command set is similar to other shells (e.g. bash, csh) that users are already familiar with. Here are some sample action/command pairs:

HDFS 允许用户数据已文件盒目录的形式来组织。他提供称为 FS shell 的命令行接口让用户与 HDFSJ 交互数据。这命令行的语法跟其他用户已经熟悉的 shell 相似。这里是一些命令操作的例子

| Action | Command |
| --- | --- |
| Create a directory named /foodir | bin/hadoop dfs -mkdir /foodir |
| Remove a directory named /foodir | bin/hadoop fs -rm -R /foodir |
| View the contents of a file named /foodir/myfile.txt | bin/hadoop dfs -cat /foodir/myfile.txt |

FS shell is targeted for applications that need a scripting language to interact with the stored data.

FS shell 的目标是让一些使用脚本的应用能与 HDFS 进行数据交互。

DFSAdmin

The DFSAdmin command set is used for administering an HDFS cluster. These are commands that are used only by an HDFS administrator. Here are some sample action/command pairs:

DFSAdmin 命令集是用来管理 HDFS 集群。这些命令只能被 HDFS 管理员使用,下面是一些例子:

| Action | Command |
| --- | --- |
| Put the cluster in Safemode | bin/hdfs dfsadmin -safemode enter |
| Generate a list of DataNodes | bin/hdfs dfsadmin -report |
| Recommission or decommission DataNode(s) | bin/hdfs dfsadmin -refreshNodes |

Browser Interface

A typical HDFS install configures a web server to expose the HDFS namespace through a configurable TCP port. This allows a user to navigate the HDFS namespace and view the contents of its files using a web browser.

一个通常的 HDFS 安装会配置一个 web 服务通过一个配置好的 TCP 端口来暴露 HDFS 命名空间。它允许用户看到 HDFS 命名空间的导航和使用 web 浏览器来查看他的文件内容。

Space Reclamation(空间回收)

File Deletes and Undeletes(文件的删除和恢复)

If trash configuration is enabled, files removed by the FS Shell are not immediately removed from HDFS. Instead, HDFS moves them to a trash directory (each user has their own trash directory under /user/<username>/.Trash). A file can be restored quickly as long as it remains in trash.

The most recently deleted files are moved to the current trash directory (/user/<username>/.Trash/Current). At a configurable interval, HDFS creates checkpoints (under /user/<username>/.Trash/<date>) for the files in the current trash directory and deletes old checkpoints once they have expired. See the expunge command of the FS shell for details on trash checkpointing.

After the expiry of its life in trash, the NameNode deletes the file from the HDFS namespace. The deletion of a file causes the blocks associated with the file to be freed. Note that there could be an appreciable time delay between the time a file is deleted by a user and the time of the corresponding increase in free space in HDFS.

如果垃圾相关配置是可用的,通过 FS shell 移除的文件将不会直接从 HDFS 移除。相反的,HDFS 将它移动到一个回收目录(每个用户在 /user/<username>/.Trash 下都拥有它自己的回收站目录)。一个文件只要还在回收站那么就能够快速恢复。

大部分最近删除的文件都将移动到当前的回收站目录(/user/<username>/.Trash/Current),并且在设置好的时间间隔内,HDFS 创建对 /user/<username>/.Trash/<date> 目录下的文件创建一个检查点并且当老的检查点过期的时候删除他们。查看 expunge command of FS shell 了解回收站的检查点。

当文件在回收站期满之后,NameNode 将会将文件从 HDFS 的命名空间中删除。文件的删除将导致与该文件关联的 block 被释放。需要说明的是文件被用户删除的时间和对应的释放空间的时间之间有一个明显的时间延迟。

The following example shows how files are deleted from HDFS by the FS Shell. We created two files (test1 and test2) under the directory delete.

接下来是我们展示如何通过 FS shel 删除文件的例子。我们在要删除的目录中创建 test1 和 test2 两个文件

    $ hadoop fs -mkdir -p delete/test1
    $ hadoop fs -mkdir -p delete/test2
    $ hadoop fs -ls delete/
    Found 2 items
    drwxr-xr-x   - hadoop hadoop          0 2015-05-08 12:39 delete/test1
    drwxr-xr-x   - hadoop hadoop          0 2015-05-08 12:40 delete/test2

We are going to remove the file test1. The message below shows that the file has been moved to the Trash directory.

我们来删除文件 test1. 下面的注释显示文档被移除到回收站目录。

    $ hadoop fs -rm -r delete/test1
    Moved: hdfs://localhost:8020/user/hadoop/delete/test1 to trash at: hdfs://localhost:8020/user/hadoop/.Trash/Current

Now we are going to remove the file with the skipTrash option, which will not send the file to Trash. It will be completely removed from HDFS.

现在我来执行将文件删除跳过回收站选项,文件则不会转移到回收站。文件将完全从 HDFS 中移除。

    $ hadoop fs -rm -r -skipTrash delete/test2
    Deleted delete/test2

We can see now that the Trash directory contains only file test1.

我们现在可以看到回收站目录下只有 test1

    $ hadoop fs -ls .Trash/Current/user/hadoop/delete/
    Found 1 items
    drwxr-xr-x   - hadoop hadoop          0 2015-05-08 12:39 .Trash/Current/user/hadoop/delete/test1

So file test1 goes to Trash and file test2 is deleted permanently.

所以 test1 去了回收站而 test2 被永久地删除了。

Decrease Replication Factor (减少副本因子)

When the replication factor of a file is reduced, the NameNode selects excess replicas that can be deleted. The next Heartbeat transfers this information to the DataNode. The DataNode then removes the corresponding blocks and the corresponding free space appears in the cluster. Once again, there might be a time delay between the completion of the setReplication API call and the appearance of free space in the cluster.

当文件的副本因子减小时,NameNode 将在可以删除的副本中选中多余的副本。在下一个心跳通讯中将该信息传输给 DataNode。然后 DataNode 移除对应的数据块并且释放对应的空间。再重申一遍,在设置副本因子完成和集群中出现新的空间之间有个时间延迟。

References

Hadoop JavaDoc API.

HDFS source code: http://hadoop.apache.org/version_control.html

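* The translator's ability is limited, so this translation will certainly contain inaccurate wording. Please bear with it, and please point out anything that is translated wrongly or imprecisely so that we can discuss and improve it together. Thank you.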



Permanent link to this article: http://www.linuxidc.com/Linux/2016-12/138027.htm