
1 Introduction

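A Ceph deployment mainly consists of the following types of nodes:

- Ceph OSDs: A Ceph OSD daemon stores data; handles data replication, recovery, backfilling, and rebalancing; and provides some monitoring information to Ceph Monitors by checking other OSD daemons for a heartbeat. When the Ceph Storage Cluster keeps 2 copies of the data, at least 2 Ceph OSD daemons must be in the active+clean state (recent Ceph releases, including the Jewel release installed here, keep 3 copies by default; section 5.2.1 lowers this to 2).

- Monitors: A Ceph Monitor maintains the maps of the cluster state, mainly the monitor map, the OSD map, the Placement Group (PG) map, and the CRUSH map. Ceph maintains a history (called an "epoch") of each state change in the Ceph Monitors, Ceph OSD Daemons, and PGs.

- MDSs: A Ceph Metadata Server (MDS) stores metadata on behalf of the Ceph Filesystem (i.e., Ceph Block Devices and Ceph Object Storage do not use MDS). Ceph Metadata Servers let users of the system run basic POSIX file system commands such as ls and find.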

2 Cluster Planning

Plan the cluster before creating it.

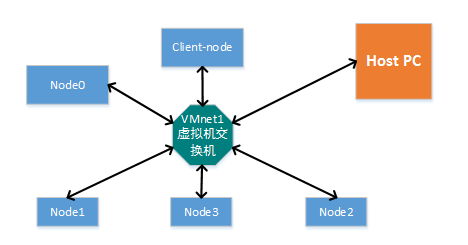

2.1 Network Topology

The Ceph cluster is deployed on VMware virtual machines:

2.2 Node Planning

| Hostname | VmNet | Node IP | Description |
| --- | --- | --- | --- |
| node0 | HostOnly | 192.168.92.100 | admin, osd (sdb) |
| node1 | HostOnly | 192.168.92.101 | osd (sdb), mon |
| node2 | HostOnly | 192.168.92.102 | osd (sdb), mon, mds |
| node3 | HostOnly | 192.168.92.103 | osd (sdb), mon, mds |
| client-node | HostOnly | 192.168.92.109 | Client node; mainly used to mount the storage provided by the Ceph cluster for testing |

3 Preparation

3.1 Preparing root privileges

Create a user named ceph on each of the nodes above (as root, or as a user with root privileges):

Create the user

[root@node0 ~]# adduser -d /home/ceph -m ceph

Set the password

[root@node0 ~]# passwd ceph

Set the account privileges

[root@node0 ~]# echo "ceph ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/ceph

[root@node0 ~]# chmod 0440 /etc/sudoers.d/ceph

3.2 Editing hosts on the admin node

Edit /etc/hosts:

[ceph@node0 cluster]$ sudo cat /etc/hosts

[sudo] password for ceph:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.92.100 node0

192.168.92.101 node1

192.168.92.102 node2

192.168.92.103 node3

[ceph@node0 cluster]$
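The host entries above follow directly from the node table in section 2.2, so they can be generated rather than typed. The following is only a minimal sketch: `hosts_entries` is a hypothetical helper name, and the `name:last-octet` argument format is an assumption made for illustration.

```shell
#!/bin/sh
# Hypothetical helper: print /etc/hosts lines for the planned nodes.
# $1 is the shared network prefix; the remaining arguments are
# "hostname:last-octet" pairs taken from the node table.
hosts_entries() {
    net_prefix="$1"; shift
    for pair in "$@"; do
        name="${pair%%:*}"    # text before the colon
        octet="${pair##*:}"   # text after the colon
        printf '%s.%s %s\n' "$net_prefix" "$octet" "$name"
    done
}

# Print the four cluster entries used in this article.
hosts_entries 192.168.92 node0:100 node1:101 node2:102 node3:103
```

After reviewing the output, it could be appended with something like `hosts_entries ... | sudo tee -a /etc/hosts`.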

3.3 Preparing requiretty

Run visudo to edit the sudoers file:

1. Comment out Defaults requiretty

Change Defaults requiretty to #Defaults requiretty, which means no controlling terminal is required.

Otherwise you will see: sudo: sorry, you must have a tty to run sudo

2. Add the line Defaults visiblepw

Otherwise you will see: sudo: no tty present and no askpass program specified
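When the same two changes have to be made on several nodes, they can be scripted against a copy of the sudoers file. This is a sketch under assumptions, not the author's procedure: `relax_tty_requirements` is a hypothetical helper name, and `sed -i` is assumed to be GNU sed as shipped with CentOS.

```shell
#!/bin/sh
# Apply the two sudoers changes to a COPY of the file, never to
# /etc/sudoers directly.
relax_tty_requirements() {
    file="$1"
    # 1. Comment out an active "Defaults requiretty" line.
    sed -i 's/^[[:space:]]*Defaults[[:space:]]\{1,\}requiretty/#Defaults requiretty/' "$file"
    # 2. Append "Defaults visiblepw" unless an active line already sets it.
    grep -Eq '^[[:space:]]*Defaults[[:space:]]+visiblepw' "$file" ||
        printf 'Defaults visiblepw\n' >> "$file"
}

# Demonstration on a throwaway file.
demo="$(mktemp)"
printf 'Defaults    requiretty\nroot ALL=(ALL) ALL\n' > "$demo"
relax_tty_requirements "$demo"
cat "$demo"
rm -f "$demo"
```

Before an edited copy replaces the real file, it is worth checking it with `visudo -cf <file>`, since a syntax error in sudoers can lock out sudo entirely.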

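If the ceph-deploy new <node-hostname> stage still fails with an error like the one below, check again that requiretty is disabled on the node named in the message:

[ceph@node0 cluster]$ ceph-deploy new node3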

[ceph_deploy.conf][DEBUG] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO] Invoked (1.5.28): /usr/bin/ceph-deploy new node3

[ceph_deploy.cli][INFO] ceph-deploy options:

[ceph_deploy.cli][INFO] username : None

[ceph_deploy.cli][INFO] func : <function new at 0xee0b18>

[ceph_deploy.cli][INFO] verbose : False

[ceph_deploy.cli][INFO] overwrite_conf : False

[ceph_deploy.cli][INFO] quiet : False

[ceph_deploy.cli][INFO] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0xef9a28>

[ceph_deploy.cli][INFO] cluster : ceph

[ceph_deploy.cli][INFO] ssh_copykey : True

[ceph_deploy.cli][INFO] mon : ['node3']

[ceph_deploy.cli][INFO] public_network : None

[ceph_deploy.cli][INFO] ceph_conf : None

[ceph_deploy.cli][INFO] cluster_network : None

[ceph_deploy.cli][INFO] default_release : False

[ceph_deploy.cli][INFO] fsid : None

[ceph_deploy.new][DEBUG] Creating new cluster named ceph

[ceph_deploy.new][INFO] making sure passwordless SSH succeeds

[node3][DEBUG] connected to host: node0

[node3][INFO] Running command: ssh -CT -o BatchMode=yes node3

[node3][DEBUG] connection detected need for sudo

[node3][DEBUG] connected to host: node3

[ceph_deploy][ERROR] RuntimeError: remote connection got closed, ensure "requiretty" is disabled for node3

[ceph@node0 cluster]$

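3.4 Passwordless remote access from the admin node

Configure the admin node so that it can reach the other nodes over SSH without a password and with root privileges (via sudo).

Step 1: On the admin node, run:

ssh-keygen

Note: for simplicity, just press Enter at every prompt.

Step 2: Copy the key from step 1 to the other nodes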

ssh-copy-id ceph@node0

ssh-copy-id ceph@node1

ssh-copy-id ceph@node2

ssh-copy-id ceph@node3

Also edit ~/.ssh/config and add the following:

[ceph@node0 cluster]$ cat ~/.ssh/config

Host node0

Hostname node0

User ceph

Host node1

Hostname node1

User ceph

Host node2

Hostname node2

User ceph

Host node3

Hostname node3

User ceph

[ceph@node0 cluster]$
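The stanzas above repeat one pattern per node, so they can be produced by a small loop. A minimal sketch, assuming the same user on every node; `ssh_config_for` is a hypothetical helper name.

```shell
#!/bin/sh
# Print one Host stanza per node, suitable for ~/.ssh/config.
# $1 is the login user; the remaining arguments are host names.
ssh_config_for() {
    user="$1"; shift
    for host in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
    done
}

# The four nodes and the ceph user from this article.
ssh_config_for ceph node0 node1 node2 node3
```

The output could be redirected into `~/.ssh/config`; that file should then be restricted with `chmod 600 ~/.ssh/config`, which avoids the "Bad owner or permissions" error covered in section 3.6.1.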

3.5 Disabling the firewall

[root@localhost ceph]# systemctl stop firewalld.service

[root@localhost ceph]# systemctl disable firewalld.service

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

Removed symlink /etc/systemd/system/basic.target.wants/firewalld.service.

[root@localhost ceph]#

3.6 Disabling SELinux

Disable for the current session

setenforce 0

Disable permanently

[root@localhost ceph]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted
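The permanent change amounts to rewriting a single line of the file shown above. The sketch below does that with sed on a file passed as an argument; `set_selinux_mode` is a hypothetical helper name and GNU `sed -i` is assumed.

```shell
#!/bin/sh
# Rewrite the SELINUX= line of an SELinux config file.
# The pattern requires "=" right after SELINUX, so the SELINUXTYPE=
# line is left untouched.
set_selinux_mode() {
    mode="$1"
    file="$2"
    sed -i "s/^SELINUX=.*/SELINUX=$mode/" "$file"
}

# Demonstration on a throwaway file rather than /etc/selinux/config.
demo="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
set_selinux_mode disabled "$demo"
cat "$demo"
rm -f "$demo"
```

On a real node the target would be `/etc/selinux/config`, run with root privileges; the new value only takes effect after a reboot, which is why `setenforce 0` is still needed for the running system.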

3.6.1 Fixing "Bad owner or permissions on .ssh/config"

Error message:

Bad owner or permissions on /home/ceph/.ssh/config fatal: The remote end hung up unexpectedly

Solution:

$ sudo chmod 600 ~/.ssh/config

4 Installing the ceph-deploy tool on the admin node

Step 1: Add the yum repository file

sudo vim /etc/yum.repos.d/ceph.repo

Add the following:

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://download.ceph.com/rpm-jewel/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

priority=1
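The repository stanza can also be written non-interactively instead of through an editor. The sketch below prints the same stanza from a function; `ceph_noarch_repo` is a hypothetical helper, and parameterising the release and distro names assumes that other releases use the same URL layout as the jewel/el7 path shown above.

```shell
#!/bin/sh
# Print the Ceph-noarch repository stanza.
# $1 = release name (jewel in this article), $2 = distro tag (el7).
ceph_noarch_repo() {
    release="$1"
    distro="$2"
    cat <<EOF
[Ceph-noarch]
name=Ceph noarch packages
baseurl=http://download.ceph.com/rpm-$release/$distro/noarch
enabled=1
gpgcheck=1
type=rpm-md
gpgkey=https://download.ceph.com/keys/release.asc
priority=1
EOF
}

# Reproduce the stanza used in this article.
ceph_noarch_repo jewel el7
```

To install it, the output could be piped through `sudo tee /etc/yum.repos.d/ceph.repo`.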

Step 2: Update the package sources and install ceph-deploy and the time synchronization software

[ceph@node0 cluster]$ sudo yum update && sudo yum install ceph-deploy

[ceph@node0 cluster]$ sudo yum install ntp ntpdate ntp-doc

Step 3: Disable the firewall and security options on all nodes (run on every node), plus a few other steps

[ceph@node0 cluster]$ sudo systemctl stop firewalld.service

[ceph@node0 cluster]$ sudo systemctl disable firewalld.service

[ceph@node0 cluster]$ sudo yum install yum-plugin-priorities

Summary: with the steps above all prerequisites are in place, and the actual Ceph deployment can begin.


5 Creating the Ceph Cluster

As the ceph user created earlier, create a working directory on the admin node:

[ceph@node0 ~]$ mkdir cluster

[ceph@node0 ~]$ cd cluster

5.1 How to purge Ceph data

First purge all previous Ceph data. Skip this step for a fresh install; when redeploying, run the following commands:

ceph-deploy purgedata {ceph-node} [{ceph-node}]

ceph-deploy forgetkeys

For example:

[ceph@node0 cluster]$ ceph-deploy purgedata node0 node1 node2 node3

[ceph_deploy.conf][DEBUG] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO] Invoked (1.5.28): /usr/bin/ceph-deploy purgedata node0 node1 node2 node3

…

[node3][INFO] Running command: sudo rm -rf --one-file-system -- /var/lib/ceph

[node3][INFO] Running command: sudo rm -rf --one-file-system -- /etc/ceph/

[ceph@node0 cluster]$ ceph-deploy forgetkeys

[ceph_deploy.conf][DEBUG] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO] Invoked (1.5.28): /usr/bin/ceph-deploy forgetkeys

…

[ceph_deploy.cli][INFO] default_release : False

[ceph@node0 cluster]$

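5.2 Creating the cluster and setting the Monitor nodes

On the admin node, use ceph-deploy to create the cluster. The new subcommand is followed by the hostnames of the monitor nodes; if there are several monitors, separate their hostnames with spaces. Multiple mon nodes back each other up.

[ceph@node0 cluster]$ ceph-deploy new node1 node2 node3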

[ceph_deploy.conf][DEBUG] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO] Invoked (1.5.34): /usr/bin/ceph-deploy new node1 node2 node3

[ceph_deploy.cli][INFO] ceph-deploy options:

[ceph_deploy.cli][INFO] username : None

[ceph_deploy.cli][INFO] func : <function new at 0x29f2b18>

[ceph_deploy.cli][INFO] verbose : False

[ceph_deploy.cli][INFO] overwrite_conf : False

[ceph_deploy.cli][INFO] quiet : False

[ceph_deploy.cli][INFO] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x2a15a70>

[ceph_deploy.cli][INFO] cluster : ceph

[ceph_deploy.cli][INFO] ssh_copykey : True

[ceph_deploy.cli][INFO] mon : ['node1', 'node2', 'node3']

[ceph_deploy.cli][INFO] public_network : None

[ceph_deploy.cli][INFO] ceph_conf : None

[ceph_deploy.cli][INFO] cluster_network : None

[ceph_deploy.cli][INFO] default_release : False

[ceph_deploy.cli][INFO] fsid : None

[ceph_deploy.new][DEBUG] Creating new cluster named ceph

[ceph_deploy.new][INFO] making sure passwordless SSH succeeds

[node1][DEBUG] connected to host: node0

[node1][INFO] Running command: ssh -CT -o BatchMode=yes node1

[node1][DEBUG] connection detected need for sudo

[node1][DEBUG] connected to host: node1

[node1][DEBUG] detect platform information from remote host

[node1][DEBUG] detect machine type

[node1][DEBUG] find the location of an executable

[node1][INFO] Running command: sudo /usr/sbin/ip link show

[node1][INFO] Running command: sudo /usr/sbin/ip addr show

[node1][DEBUG] IP addresses found: ['192.168.92.101', '192.168.1.102', '192.168.122.1']

[ceph_deploy.new][DEBUG] Resolving host node1

[ceph_deploy.new][DEBUG] Monitor node1 at 192.168.92.101

[ceph_deploy.new][INFO] making sure passwordless SSH succeeds

[node2][DEBUG] connected to host: node0

[node2][INFO] Running command: ssh -CT -o BatchMode=yes node2

[node2][DEBUG] connection detected need for sudo

[node2][DEBUG] connected to host: node2

[node2][DEBUG] detect platform information from remote host

[node2][DEBUG] detect machine type

[node2][DEBUG] find the location of an executable

[node2][INFO] Running command: sudo /usr/sbin/ip link show

[node2][INFO] Running command: sudo /usr/sbin/ip addr show

[node2][DEBUG] IP addresses found: ['192.168.1.103', '192.168.122.1', '192.168.92.102']

[ceph_deploy.new][DEBUG] Resolving host node2

[ceph_deploy.new][DEBUG] Monitor node2 at 192.168.92.102

[ceph_deploy.new][INFO] making sure passwordless SSH succeeds

[node3][DEBUG] connected to host: node0

[node3][INFO] Running command: ssh -CT -o BatchMode=yes node3

[node3][DEBUG] connection detected need for sudo

[node3][DEBUG] connected to host: node3

[node3][DEBUG] detect platform information from remote host

[node3][DEBUG] detect machine type

[node3][DEBUG] find the location of an executable

[node3][INFO] Running command: sudo /usr/sbin/ip link show

[node3][INFO] Running command: sudo /usr/sbin/ip addr show

[node3][DEBUG] IP addresses found: ['192.168.122.1', '192.168.1.104', '192.168.92.103']

[ceph_deploy.new][DEBUG] Resolving host node3

[ceph_deploy.new][DEBUG] Monitor node3 at 192.168.92.103

[ceph_deploy.new][DEBUG] Monitor initial members are ['node1', 'node2', 'node3']

[ceph_deploy.new][DEBUG] Monitor addrs are ['192.168.92.101', '192.168.92.102', '192.168.92.103']

[ceph_deploy.new][DEBUG] Creating a random mon key...

[ceph_deploy.new][DEBUG] Writing monitor keyring to ceph.mon.keyring...

[ceph_deploy.new][DEBUG] Writing initial config to ceph.conf...

[ceph@node0 cluster]$

List the generated files

[ceph@node0 cluster]$ ls

ceph.conf ceph.log ceph.mon.keyring

View the Ceph configuration file; node1, node2, and node3 are now all listed as monitor (control) nodes

[ceph@node0 cluster]$ cat ceph.conf

[global]

fsid = 3c9892d0-398b-4808-aa20-4dc622356bd0

mon_initial_members = node1, node2, node3

mon_host = 192.168.92.101,192.168.92.102,192.168.92.103

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

filestore_xattr_use_omap = true

[ceph@node0 cluster]$
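Before continuing, it can be useful to read individual values back out of the generated file, for example to confirm which monitors were recorded. The following is a minimal sketch: `conf_get` is a hypothetical helper, it ignores section headers, and it assumes the value itself contains no "=" character.

```shell
#!/bin/sh
# Print the value of the first "key = value" line whose key matches $1
# in the config file $2. Surrounding blanks are trimmed.
conf_get() {
    awk -F '=' -v key="$1" '
        { k = $1; gsub(/^[ \t]+|[ \t]+$/, "", k) }
        k == key { v = $2; gsub(/^[ \t]+|[ \t]+$/, "", v); print v; exit }
    ' "$2"
}

# Demonstration on a throwaway file with two lines from the output above.
demo="$(mktemp)"
printf '[global]\nmon_initial_members = node1, node2, node3\nauth_client_required = cephx\n' > "$demo"
conf_get mon_initial_members "$demo"
rm -f "$demo"
```

In the working directory of this article, the call would look like `conf_get mon_initial_members ./ceph.conf`.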

5.2.1 Changing the number of replicas

Change the default number of replicas to 2, i.e. edit ceph.conf so that osd_pool_default_size is 2. If the line does not exist, add it.

[ceph@node0 cluster]$ grep "osd_pool_default_size" ./ceph.conf

osd_pool_default_size = 2

[ceph@node0 cluster]$
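The "change it, or add it if missing" rule can be expressed as a small idempotent function. This is a sketch under assumptions: `set_conf_key` is a hypothetical helper, GNU `sed -i` is assumed, it matches only the underscore spelling of the key, and appending at the end of the file is only correct while [global] is the last (or only) section, as in the generated ceph.conf.

```shell
#!/bin/sh
# Set "key = value" in a config file: rewrite the line if the key is
# already present, otherwise append it at the end of the file.
set_conf_key() {
    key="$1"
    value="$2"
    file="$3"
    if grep -Eq "^[[:space:]]*$key[[:space:]]*=" "$file"; then
        sed -i "s/^[[:space:]]*$key[[:space:]]*=.*/$key = $value/" "$file"
    else
        printf '%s = %s\n' "$key" "$value" >> "$file"
    fi
}

# Demonstration on a throwaway file: running it twice leaves one line.
demo="$(mktemp)"
printf '[global]\nauth_client_required = cephx\n' > "$demo"
set_conf_key osd_pool_default_size 2 "$demo"
set_conf_key osd_pool_default_size 2 "$demo"
cat "$demo"
rm -f "$demo"
```

Against the real file the call would be `set_conf_key osd_pool_default_size 2 ./ceph.conf`, followed by the grep shown above to confirm the result.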

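5.2.2 Handling multiple networks

This applies when the IP is not unique, i.e. the node has IPs on networks other than the one the Ceph cluster uses.

For example: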

eno16777736: 192.168.175.100

eno50332184: 192.168.92.110

virbr0: 192.168.122.1

In that case, add the public network parameter to the [global] section of ceph.conf:

public_network = {ip-address}/{netmask}

For example:

public_network = 192.168.92.0/24
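The value after the slash is a prefix length (netmask), not a port number. As an illustration, the network address for a given node IP and prefix length can be computed with plain shell arithmetic. `cidr_network` is a hypothetical helper; the sketch assumes a shell with 64-bit arithmetic and octets written without leading zeros.

```shell
#!/bin/sh
# Print the network in CIDR form for an IPv4 address and prefix length.
cidr_network() {
    ip="$1"
    plen="$2"
    # Split the dotted quad into the positional parameters $1..$4.
    old_ifs="$IFS"
    IFS=.
    set -- $ip
    IFS="$old_ifs"
    # Pack the octets into one 32-bit number and mask off the host bits.
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (4294967295 << (32 - plen)) & 4294967295 ))
    net=$(( addr & mask ))
    printf '%d.%d.%d.%d/%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
        $(( (net >> 8) & 255 )) $(( net & 255 )) "$plen"
}

# node1's cluster address with a 24-bit prefix.
cidr_network 192.168.92.101 24
```

For the cluster addresses in this article and a 24-bit prefix, this prints 192.168.92.0/24, the form expected by public_network.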

5.3 Installing Ceph

From the admin node, use the ceph-deploy tool to install Ceph on each node:

ceph-deploy install {ceph-node} [{ceph-node} ...]

Or from a local mirror:

ceph-deploy install {ceph-node} [{ceph-node} ...] --local-mirror=/opt/ceph-repo --no-adjust-repos --release=jewel

For example:

[ceph@node0 cluster]$ ceph-deploy install node0 node1 node2 node3

[ceph_deploy.conf][DEBUG] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO] Invoked (1.5.34): /usr/bin/ceph-deploy install node0 node1 node2 node3

[ceph_deploy.cli][INFO] ceph-deploy options:

[ceph_deploy.cli][INFO] verbose : False

[ceph_deploy.cli][INFO] testing : None

[ceph_deploy.cli][INFO] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x2ae0560>

[ceph_deploy.cli][INFO] cluster : ceph

[ceph_deploy.cli][INFO] dev_commit : None

[ceph_deploy.cli][INFO] install_mds : False

[ceph_deploy.cli][INFO] stable : None

[ceph_deploy.cli][INFO] default_release : False

[ceph_deploy.cli][INFO] username : None

[ceph_deploy.cli][INFO] adjust_repos : True

[ceph_deploy.cli][INFO] func : <function install at 0x2a53668>

[ceph_deploy.cli][INFO] install_all : False

[ceph_deploy.cli][INFO] repo : False

[ceph_deploy.cli][INFO] host : ['node0', 'node1', 'node2', 'node3']

[ceph_deploy.cli][INFO] install_rgw : False

[ceph_deploy.cli][INFO] install_tests : False

[ceph_deploy.cli][INFO] repo_url : None

[ceph_deploy.cli][INFO] ceph_conf : None

[ceph_deploy.cli][INFO] install_osd : False

[ceph_deploy.cli][INFO] version_kind : stable

[ceph_deploy.cli][INFO] install_common : False

[ceph_deploy.cli][INFO] overwrite_conf : False

[ceph_deploy.cli][INFO] quiet : False

[ceph_deploy.cli][INFO] dev : master

[ceph_deploy.cli][INFO] local_mirror : None

[ceph_deploy.cli][INFO] release : None

[ceph_deploy.cli][INFO] install_mon : False

[ceph_deploy.cli][INFO] gpg_url : None

[ceph_deploy.install][DEBUG] Installing stable version jewel on cluster ceph hosts node0 node1 node2 node3

[ceph_deploy.install][DEBUG] Detecting platform for host node0 ...

[node0][DEBUG] connection detected need for sudo

[node0][DEBUG] connected to host: node0

[node0][DEBUG] detect platform information from remote host

[node0][DEBUG] detect machine type

[ceph_deploy.install][INFO] Distro info: CentOS Linux 7.2.1511 Core

[node0][INFO] installing Ceph on node0

[node0][INFO] Running command: sudo yum clean all

[node0][DEBUG] Loaded plugins: fastestmirror, langpacks, priorities

[node0][DEBUG] Cleaning repos: Ceph Ceph-noarch base ceph-source epel extras updates

[node0][DEBUG] Cleaning up everything

[node0][DEBUG] Cleaning up list of fastest mirrors

[node0][INFO] Running command: sudo yum -y install epel-release

[node0][DEBUG] Loaded plugins: fastestmirror, langpacks, priorities

[node0][DEBUG] Determining fastest mirrors

[node0][DEBUG] * epel: mirror01.idc.hinet.net

[node0][DEBUG] 25 packages excluded due to repository priority protections

[node0][DEBUG] Package epel-release-7-7.noarch already installed and latest version

[node0][DEBUG] Nothing to do

[node0][INFO] Running command: sudo yum -y install yum-plugin-priorities

[node0][DEBUG] Loaded plugins: fastestmirror, langpacks, priorities

[node0][DEBUG] Loading mirror speeds from cached hostfile

[node0][DEBUG] * epel: mirror01.idc.hinet.net

[node0][DEBUG] 25 packages excluded due to repository priority protections

[node0][DEBUG] Package yum-plugin-priorities-1.1.31-34.el7.noarch already installed and latest version

[node0][DEBUG] Nothing to do

[node0][DEBUG] Configure Yum priorities to include obsoletes

[node0][WARNIN] check_obsoletes has been enabled for Yum priorities plugin

[node0][INFO] Running command: sudo rpm --import https://download.ceph.com/keys/release.asc

[node0][INFO] Running command: sudo rpm -Uvh --replacepkgs https://download.ceph.com/rpm-jewel/el7/noarch/ceph-release-1-0.el7.noarch.rpm

[node0][DEBUG] Retrieving https://download.ceph.com/rpm-jewel/el7/noarch/ceph-release-1-0.el7.noarch.rpm

[node0][DEBUG] Preparing... ########################################

[node0][DEBUG] Updating / installing...

[node0][DEBUG] ceph-release-1-1.el7 ########################################

[node0][WARNIN] ensuring that /etc/yum.repos.d/ceph.repo contains a high priority

[node0][WARNIN] altered ceph.repo priorities to contain: priority=1

[node0][INFO] Running command: sudo yum -y install ceph ceph-radosgw

[node0][DEBUG] Loaded plugins: fastestmirror, langpacks, priorities

[node0][DEBUG] Loading mirror speeds from cached hostfile

[node0][DEBUG] * epel: mirror01.idc.hinet.net

[node0][DEBUG] 25 packages excluded due to repository priority protections

[node0][DEBUG] Package 1:ceph-10.2.2-0.el7.x86_64 already installed and latest version

[node0][DEBUG] Package 1:ceph-radosgw-10.2.2-0.el7.x86_64 already installed and latest version

[node0][DEBUG] Nothing to do

[node0][INFO] Running command: sudo ceph --version

[node0][DEBUG] ceph version 10.2.2 (45107e21c568dd033c2f0a3107dec8f0b0e58374)

….

5.4 Initializing the monitor nodes

Initialize the monitor nodes and gather the keyrings:

[ceph@node0 cluster]$ ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO] Invoked (1.5.34): /usr/bin/ceph-deploy mon create-initial

[ceph_deploy.cli][INFO] ceph-deploy options:

[ceph_deploy.cli][INFO] username : None

[ceph_deploy.cli][INFO] verbose : False

[ceph_deploy.cli][INFO] overwrite_conf : False

[ceph_deploy.cli][INFO] subcommand : create-initial

[ceph_deploy.cli][INFO] quiet : False

[ceph_deploy.cli][INFO] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fbe46804cb0>

[ceph_deploy.cli][INFO] cluster : ceph

[ceph_deploy.cli][INFO] func : <function mon at 0x7fbe467f6aa0>

[ceph_deploy.cli][INFO] ceph_conf : None

[ceph_deploy.cli][INFO] default_release : False

[ceph_deploy.cli][INFO] keyrings : None

[ceph_deploy.mon][DEBUG] Deploying mon, cluster ceph hosts node1 node2 node3

[ceph_deploy.mon][DEBUG] detecting platform for host node1 ...

[node1][DEBUG] connection detected need for sudo

[node1][DEBUG] connected to host: node1

[node1][DEBUG] detect platform information from remote host

[node1][DEBUG] detect machine type

[node1][DEBUG] find the location of an executable

[ceph_deploy.mon][INFO] distro info: CentOS Linux 7.2.1511 Core

[node1][DEBUG] determining if provided host has same hostname in remote

[node1][DEBUG] get remote short hostname

[node1][DEBUG] deploying mon to node1

[node1][DEBUG] get remote short hostname

[node1][DEBUG] remote hostname: node1

[node1][DEBUG] write cluster configuration to /etc/ceph/{cluster}.conf

[node1][DEBUG] create the mon path if it does not exist

[node1][DEBUG] checking for done path: /var/lib/ceph/mon/ceph-node1/done

[node1][DEBUG] done path does not exist: /var/lib/ceph/mon/ceph-node1/done

[node1][INFO] creating keyring file: /var/lib/ceph/tmp/ceph-node1.mon.keyring

[node1][DEBUG] create the monitor keyring file

[node1][INFO] Running command: sudo ceph-mon --cluster ceph --mkfs -i node1 --keyring /var/lib/ceph/tmp/ceph-node1.mon.keyring --setuser 1001 --setgroup 1001

[node1][DEBUG] ceph-mon: mon.noname-a 192.168.92.101:6789/0 is local, renaming to mon.node1

[node1][DEBUG] ceph-mon: set fsid to 4f8f6c46-9f67-4475-9cb5-52cafecb3e4c

[node1][DEBUG] ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node1 for mon.node1

[node1][INFO] unlinking keyring file /var/lib/ceph/tmp/ceph-node1.mon.keyring

[node1][DEBUG] create a done file to avoid re-doing the mon deployment

[node1][DEBUG] create the init path if it does not exist

[node1][INFO] Running command: sudo systemctl enable ceph.target

[node1][INFO] Running command: sudo systemctl enable ceph-mon@node1

[node1][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@node1.service to /usr/lib/systemd/system/ceph-mon@.service.

[node1][INFO] Running command: sudo systemctl start ceph-mon@node1

[node1][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node1.asok mon_status

[node1][DEBUG] ********************************************************************************

[node1][DEBUG] status for monitor: mon.node1

[node1][DEBUG] {

[node1][DEBUG] "election_epoch": 0,

[node1][DEBUG] "extra_probe_peers": [

[node1][DEBUG] "192.168.92.102:6789/0",

[node1][DEBUG] "192.168.92.103:6789/0"

[node1][DEBUG] ],

[node1][DEBUG] "monmap": {

[node1][DEBUG] "created": "2016-06-24 14:43:29.944474",

[node1][DEBUG] "epoch": 0,

[node1][DEBUG] "fsid": "4f8f6c46-9f67-4475-9cb5-52cafecb3e4c",

[node1][DEBUG] "modified": "2016-06-24 14:43:29.944474",

[node1][DEBUG] "mons": [

[node1][DEBUG] {

[node1][DEBUG] "addr": "192.168.92.101:6789/0",

[node1][DEBUG] "name": "node1",

[node1][DEBUG] "rank": 0

[node1][DEBUG] },

[node1][DEBUG] {

[node1][DEBUG] "addr": "0.0.0.0:0/1",

[node1][DEBUG] "name": "node2",

[node1][DEBUG] "rank": 1

[node1][DEBUG] },

[node1][DEBUG] {

[node1][DEBUG] "addr": "0.0.0.0:0/2",

[node1][DEBUG] "name": "node3",

[node1][DEBUG] "rank": 2

[node1][DEBUG] }

[node1][DEBUG] ]

[node1][DEBUG] },

[node1][DEBUG] "name": "node1",

[node1][DEBUG] "outside_quorum": [

[node1][DEBUG] "node1"

[node1][DEBUG] ],

[node1][DEBUG] "quorum": [],

[node1][DEBUG] "rank": 0,

[node1][DEBUG] "state": "probing",

[node1][DEBUG] "sync_provider": []

[node1][DEBUG] }

[node1][DEBUG] ********************************************************************************

[node1][INFO] monitor: mon.node1 is running

[node1][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node1.asok mon_status

[ceph_deploy.mon][DEBUG] detecting platform for host node2 ...

[node2][DEBUG] connection detected need for sudo

[node2][DEBUG] connected to host: node2

[node2][DEBUG] detect platform information from remote host

[node2][DEBUG] detect machine type

[node2][DEBUG] find the location of an executable

[ceph_deploy.mon][INFO] distro info: CentOS Linux 7.2.1511 Core

[node2][DEBUG] determining if provided host has same hostname in remote

[node2][DEBUG] get remote short hostname

[node2][DEBUG] deploying mon to node2

[node2][DEBUG] get remote short hostname

[node2][DEBUG] remote hostname: node2

[node2][DEBUG] write cluster configuration to /etc/ceph/{cluster}.conf

[node2][DEBUG] create the mon path if it does not exist

[node2][DEBUG] checking for done path: /var/lib/ceph/mon/ceph-node2/done

[node2][DEBUG] done path does not exist: /var/lib/ceph/mon/ceph-node2/done

[node2][INFO] creating keyring file: /var/lib/ceph/tmp/ceph-node2.mon.keyring

[node2][DEBUG] create the monitor keyring file

[node2][INFO] Running command: sudo ceph-mon --cluster ceph --mkfs -i node2 --keyring /var/lib/ceph/tmp/ceph-node2.mon.keyring --setuser 1001 --setgroup 1001

[node2][DEBUG] ceph-mon: mon.noname-b 192.168.92.102:6789/0 is local, renaming to mon.node2

[node2][DEBUG] ceph-mon: set fsid to 4f8f6c46-9f67-4475-9cb5-52cafecb3e4c

[node2][DEBUG] ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node2 for mon.node2

[node2][INFO] unlinking keyring file /var/lib/ceph/tmp/ceph-node2.mon.keyring

[node2][DEBUG] create a done file to avoid re-doing the mon deployment

[node2][DEBUG] create the init path if it does not exist

[node2][INFO] Running command: sudo systemctl enable ceph.target

[node2][INFO] Running command: sudo systemctl enable ceph-mon@node2

[node2][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@node2.service to /usr/lib/systemd/system/ceph-mon@.service.

[node2][INFO] Running command: sudo systemctl start ceph-mon@node2

[node2][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node2.asok mon_status

[node2][DEBUG] ********************************************************************************

[node2][DEBUG] status for monitor: mon.node2

[node2][DEBUG] {

[node2][DEBUG] "election_epoch": 1,

[node2][DEBUG] "extra_probe_peers": [

[node2][DEBUG] "192.168.92.101:6789/0",

[node2][DEBUG] "192.168.92.103:6789/0"

[node2][DEBUG] ],

[node2][DEBUG] "monmap": {

[node2][DEBUG] "created": "2016-06-24 14:43:34.865908",

[node2][DEBUG] "epoch": 0,

[node2][DEBUG] "fsid": "4f8f6c46-9f67-4475-9cb5-52cafecb3e4c",

[node2][DEBUG] "modified": "2016-06-24 14:43:34.865908",

[node2][DEBUG] "mons": [

[node2][DEBUG] {

[node2][DEBUG] "addr": "192.168.92.101:6789/0",

[node2][DEBUG] "name": "node1",

[node2][DEBUG] "rank": 0

[node2][DEBUG] },

[node2][DEBUG] {

[node2][DEBUG] "addr": "192.168.92.102:6789/0",

[node2][DEBUG] "name": "node2",

[node2][DEBUG] "rank": 1

[node2][DEBUG] },

[node2][DEBUG] {

[node2][DEBUG] "addr": "0.0.0.0:0/2",

[node2][DEBUG] "name": "node3",

[node2][DEBUG] "rank": 2

[node2][DEBUG] }

[node2][DEBUG] ]

[node2][DEBUG] },

[node2][DEBUG] "name": "node2",

[node2][DEBUG] "outside_quorum": [],

[node2][DEBUG] "quorum": [],

[node2][DEBUG] "rank": 1,

[node2][DEBUG] "state": "electing",

[node2][DEBUG] "sync_provider": []

[node2][DEBUG] }

[node2][DEBUG] ********************************************************************************

[node2][INFO] monitor: mon.node2 is running

[node2][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node2.asok mon_status

[ceph_deploy.mon][DEBUG] detecting platform for host node3 ...

[node3][DEBUG] connection detected need for sudo

[node3][DEBUG] connected to host: node3

[node3][DEBUG] detect platform information from remote host

[node3][DEBUG] detect machine type

[node3][DEBUG] find the location of an executable

[ceph_deploy.mon][INFO] distro info: CentOS Linux 7.2.1511 Core

[node3][DEBUG] determining if provided host has same hostname in remote

[node3][DEBUG] get remote short hostname

[node3][DEBUG] deploying mon to node3

[node3][DEBUG] get remote short hostname

[node3][DEBUG] remote hostname: node3

[node3][DEBUG] write cluster configuration to /etc/ceph/{cluster}.conf

[node3][DEBUG] create the mon path if it does not exist

[node3][DEBUG] checking for done path: /var/lib/ceph/mon/ceph-node3/done

[node3][DEBUG] done path does not exist: /var/lib/ceph/mon/ceph-node3/done

[node3][INFO] creating keyring file: /var/lib/ceph/tmp/ceph-node3.mon.keyring

[node3][DEBUG] create the monitor keyring file

[node3][INFO] Running command: sudo ceph-mon --cluster ceph --mkfs -i node3 --keyring /var/lib/ceph/tmp/ceph-node3.mon.keyring --setuser 1001 --setgroup 1001

[node3][DEBUG] ceph-mon: mon.noname-c 192.168.92.103:6789/0 is local, renaming to mon.node3

[node3][DEBUG] ceph-mon: set fsid to 4f8f6c46-9f67-4475-9cb5-52cafecb3e4c

[node3][DEBUG] ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node3 for mon.node3

[node3][INFO] unlinking keyring file /var/lib/ceph/tmp/ceph-node3.mon.keyring

[node3][DEBUG] create a done file to avoid re-doing the mon deployment

[node3][DEBUG] create the init path if it does not exist

[node3][INFO] Running command: sudo systemctl enable ceph.target

[node3][INFO] Running command: sudo systemctl enable ceph-mon@node3

[node3][WARNIN] Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@node3.service to /usr/lib/systemd/system/ceph-mon@.service.

[node3][INFO] Running command: sudo systemctl start ceph-mon@node3

[node3][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node3.asok mon_status

[node3][DEBUG] ********************************************************************************

[node3][DEBUG] status for monitor: mon.node3

[node3][DEBUG] {

[node3][DEBUG] "election_epoch": 1,

[node3][DEBUG] "extra_probe_peers": [

[node3][DEBUG] "192.168.92.101:6789/0",

[node3][DEBUG] "192.168.92.102:6789/0"

[node3][DEBUG] ],

[node3][DEBUG] "monmap": {

[node3][DEBUG] "created": "2016-06-24 14:43:39.800046",

[node3][DEBUG] "epoch": 0,

[node3][DEBUG] "fsid": "4f8f6c46-9f67-4475-9cb5-52cafecb3e4c",

[node3][DEBUG] "modified": "2016-06-24 14:43:39.800046",

[node3][DEBUG] "mons": [

[node3][DEBUG] {

[node3][DEBUG] "addr": "192.168.92.101:6789/0",

[node3][DEBUG] "name": "node1",

[node3][DEBUG] "rank": 0

[node3][DEBUG] },

[node3][DEBUG] {

[node3][DEBUG] "addr": "192.168.92.102:6789/0",

[node3][DEBUG] "name": "node2",

[node3][DEBUG] "rank": 1

[node3][DEBUG] },

[node3][DEBUG] {

[node3][DEBUG] "addr": "192.168.92.103:6789/0",

[node3][DEBUG] "name": "node3",

[node3][DEBUG] "rank": 2

[node3][DEBUG] }

[node3][DEBUG] ]

[node3][DEBUG] },

[node3][DEBUG] "name": "node3",

[node3][DEBUG] "outside_quorum": [],

[node3][DEBUG] "quorum": [],

[node3][DEBUG] "rank": 2,

[node3][DEBUG] "state": "electing",

[node3][DEBUG] "sync_provider": []

[node3][DEBUG] }

[node3][DEBUG] ********************************************************************************

[node3][INFO] monitor: mon.node3 is running

[node3][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node3.asok mon_status

[ceph_deploy.mon][INFO] processing monitor mon.node1

[node1][DEBUG] connection detected need for sudo

[node1][DEBUG] connected to host: node1

[node1][DEBUG] detect platform information from remote host

[node1][DEBUG] detect machine type

[node1][DEBUG] find the location of an executable

[node1][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node1.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node1 monitor is not yet in quorum, tries left: 5

[ceph_deploy.mon][WARNIN] waiting 5 seconds before retrying

[node1][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node1.asok mon_status

[ceph_deploy.mon][WARNIN] mon.node1 monitor is not yet in quorum, tries left: 4

[ceph_deploy.mon][WARNIN] waiting 10 seconds before retrying

[node1][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node1.asok mon_status

[ceph_deploy.mon][INFO] mon.node1 monitor has reached quorum!

[ceph_deploy.mon][INFO] processing monitor mon.node2

[node2][DEBUG] connection detected need for sudo

[node2][DEBUG] connected to host: node2

[node2][DEBUG] detect platform information from remote host

[node2][DEBUG] detect machine type

[node2][DEBUG] find the location of an executable

[node2][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node2.asok mon_status

[ceph_deploy.mon][INFO] mon.node2 monitor has reached quorum!

[ceph_deploy.mon][INFO] processing monitor mon.node3

[node3][DEBUG] connection detected need for sudo

[node3][DEBUG] connected to host: node3

[node3][DEBUG] detect platform information from remote host

[node3][DEBUG] detect machine type

[node3][DEBUG] find the location of an executable

[node3][INFO] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node3.asok mon_status

[ceph_deploy.mon][INFO] mon.node3 monitor has reached quorum!

[ceph_deploy.mon][INFO] all initial monitors are running and have formed quorum

[ceph_deploy.mon][INFO] Running gatherkeys...

[ceph_deploy.gatherkeys][INFO] Storing keys in temp directory /tmp/tmp5_jcSr

[node1][DEBUG] connection detected need for sudo

[node1][DEBUG] connected to host: node1

[node1][DEBUG] detect platform information from remote host

[node1][DEBUG] detect machine type

[node1][DEBUG] get remote short hostname

[node1][DEBUG] fetch remote file

[node1][INFO] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.node1.asok mon_status

[node1][INFO] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node1/keyring auth get-or-create client.admin osd allow * mds allow * mon allow *

[node1][INFO] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node1/keyring auth get-or-create client.bootstrap-mds mon allow profile bootstrap-mds

[node1][INFO] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node1/keyring auth get-or-create client.bootstrap-osd mon allow profile bootstrap-osd

[node1][INFO] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-node1/keyring auth get-or-create client.bootstrap-rgw mon allow profile bootstrap-rgw

[ceph_deploy.gatherkeys][INFO] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO] Destroy temp directory /tmp/tmp5_jcSr

[ceph@node0 cluster]$
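
The repeated mon_status calls in the log above appear to be how ceph-deploy waits for each monitor to join the quorum. The same check can be sketched by hand. This is only a minimal sketch, not ceph-deploy's own logic: the helper names mon_state and in_quorum are hypothetical, and it assumes that a monitor which has joined the quorum reports the state leader or peon, while probing and electing (as in the output above) mean it has not joined yet.

```shell
#!/bin/sh
# mon_state (hypothetical helper): read mon_status JSON on stdin
# and print the value of its "state" field.
mon_state() {
    sed -n 's/.*"state"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# in_quorum (hypothetical helper): succeed only when the reported state
# is one that, by assumption, a quorum member has (leader or peon).
in_quorum() {
    case "$(mon_state)" in
        leader|peon) return 0 ;;
        *) return 1 ;;
    esac
}
```

On a monitor node the input would come from the command shown in the log, for example `sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node1.asok mon_status | in_quorum && echo "node1 is in quorum"`.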

View the generated files

[ceph@node0 cluster]$ ls

ceph.bootstrap-mds.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring

ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log

[ceph@node0 cluster]$
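
The listing above can also be checked mechanically before moving on to the OSD steps. The following is a minimal sketch that takes the six file names from that listing as the expected set (ceph-deploy-ceph.log is left out because it is only a log file); the function name missing_keyrings is hypothetical.

```shell
#!/bin/sh
# missing_keyrings DIR (hypothetical helper): print every expected file
# that is absent from DIR; print nothing when all of them are present.
missing_keyrings() {
    dir=${1:-.}
    for f in ceph.conf ceph.mon.keyring ceph.client.admin.keyring \
             ceph.bootstrap-osd.keyring ceph.bootstrap-mds.keyring \
             ceph.bootstrap-rgw.keyring; do
        [ -f "$dir/$f" ] || echo "$f"
    done
}
```

Run from the cluster directory as `missing_keyrings .`; an empty result means every file from the listing is in place.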

6 OSD management

6.1 Initializing an OSD

Command

ceph-deploy osd prepare {ceph-node}:/path/to/directory
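
The template can be expanded for every OSD host in the node plan of section 2.2, which lists sdb as the OSD disk on node0 through node3. This is a minimal sketch under that assumption; the function name osd_prepare_cmds is hypothetical, and it only prints the commands so they can be reviewed before anything is run.

```shell
#!/bin/sh
# osd_prepare_cmds DISK HOST... (hypothetical helper): print one
# "ceph-deploy osd prepare HOST:DISK" command per host, without running it.
osd_prepare_cmds() {
    disk=$1
    shift
    for host in "$@"; do
        printf 'ceph-deploy osd prepare %s:%s\n' "$host" "$disk"
    done
}

# Hosts and disk taken from the node plan; adjust to the actual cluster.
osd_prepare_cmds /dev/sdb node0 node1 node2 node3
```

Once the printed commands look right, they can be run one by one from the cluster directory on the admin node.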

Example, as shown in 1.2.3 (the target here is the whole disk /dev/sdb)

[ceph@node0 cluster]$ ceph-deploy osd prepare node1:/dev/sdb

[ceph_deploy.conf][DEBUG] found configuration file at: /home/ceph/.cephdeploy.conf

[ceph_deploy.cli][INFO] Invoked (1.5.34): /usr/bin/ceph-deploy osd prepare node1:/dev/sdb

[ceph_deploy.cli][INFO] ceph-deploy options:

[ceph_deploy.cli][INFO] username : None

[ceph_deploy.cli][INFO] disk : [('node1', '/dev/sdb', None)]

[ceph_deploy.cli][INFO] dmcrypt : False

[ceph_deploy.cli][INFO] verbose : False

[ceph_deploy.cli][INFO] bluestore : None

[ceph_deploy.cli][INFO] overwrite_conf : False

[ceph_deploy.cli][INFO] subcommand : prepare

[ceph_deploy.cli][INFO] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO] quiet : False

[ceph_deploy.cli][INFO] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x10b4d88>

[ceph_deploy.cli][INFO] cluster : ceph

[ceph_deploy.cli][INFO] fs_type : xfs

[ceph_deploy.cli][INFO] func : <function osd at 0x10a9398>

[ceph_deploy.cli][INFO] ceph_conf : None

[ceph_deploy.cli][INFO] default_release : False

[ceph_deploy.cli][INFO] zap_disk : False

[ceph_deploy.osd][DEBUG] Preparing cluster ceph disks node1:/dev/sdb:

[node1][DEBUG] connection detected need for sudo

[node1][DEBUG] connected to host: node1

[node1][DEBUG] detect platform information from remote host

[node1][DEBUG] detect machine type

[node1][DEBUG] find the location of an executable

[ceph_deploy.osd][INFO] Distro info: CentOS Linux 7.2.1511 Core

[ceph_deploy.osd][DEBUG] Deploying osd to node1

[node1][DEBUG] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.osd][DEBUG] Preparing host node1 disk /dev/sdb journal None activate False

[node1][DEBUG] find the location of an executable

[node1][INFO] Running command: sudo /usr/sbin/ceph-disk -v prepare --cluster ceph --fs-type xfs -- /dev/sdb

[node1][WARNIN] command: Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[node1][WARNIN] command: Running command: /usr/bin/ceph-osd --check-allows-journal -i 0 --cluster ceph

[node1][WARNIN] command: Running command: /usr/bin/ceph-osd --check-wants-journal -i 0 --cluster ceph

[node1][WARNIN] command: Running command: /usr/bin/ceph-osd --check-needs-journal -i 0 --cluster ceph

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] set_type: Will colocate journal with data on /dev/sdb

[node1][WARNIN] command: Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=osd_journal_size

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] command: Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mkfs_options_xfs

[node1][WARNIN] command: Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mkfs_options_xfs

[node1][WARNIN] command: Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mount_options_xfs

[node1][WARNIN] command: Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mount_options_xfs

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] ptype_tobe_for_name: name = journal

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] create_partition: Creating journal partition num 2 size 5120 on /dev/sdb

[node1][WARNIN] command_check_call: Running command: /sbin/sgdisk --new=2:0:+5120M --change-name=2:ceph journal --partition-guid=2:75a991e0-d8e1-414f-825d-1635edc8fbe5 --typecode=2:45b0969e-9b03-4f30-b4c6-b4b80ceff106 --mbrtogpt -- /dev/sdb

[node1][DEBUG] The operation has completed successfully.

[node1][WARNIN] update_partition: Calling partprobe on created device /dev/sdb

[node1][WARNIN] command_check_call: Running command: /usr/bin/udevadm settle --timeout=600

[node1][WARNIN] command: Running command: /sbin/partprobe /dev/sdb

[node1][WARNIN] command_check_call: Running command: /usr/bin/udevadm settle --timeout=600

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb2 uuid path is /sys/dev/block/8:18/dm/uuid

[node1][WARNIN] prepare_device: Journal is GPT partition /dev/disk/by-partuuid/75a991e0-d8e1-414f-825d-1635edc8fbe5

[node1][WARNIN] prepare_device: Journal is GPT partition /dev/disk/by-partuuid/75a991e0-d8e1-414f-825d-1635edc8fbe5

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] set_data_partition: Creating osd partition on /dev/sdb

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] ptype_tobe_for_name: name = data

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] create_partition: Creating data partition num 1 size 0 on /dev/sdb

[node1][WARNIN] command_check_call: Running command: /sbin/sgdisk --largest-new=1 --change-name=1:ceph data --partition-guid=1:25ae7735-d1ca-45d5-9d98-63567b424248 --typecode=1:89c57f98-2fe5-4dc0-89c1-f3ad0ceff2be --mbrtogpt -- /dev/sdb

[node1][DEBUG] The operation has completed successfully.

[node1][WARNIN] update_partition: Calling partprobe on created device /dev/sdb

[node1][WARNIN] command_check_call: Running command: /usr/bin/udevadm settle --timeout=600

[node1][WARNIN] command: Running command: /sbin/partprobe /dev/sdb

[node1][WARNIN] command_check_call: Running command: /usr/bin/udevadm settle --timeout=600

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb1 uuid path is /sys/dev/block/8:17/dm/uuid

[node1][WARNIN] populate_data_path_device: Creating xfs fs on /dev/sdb1

[node1][WARNIN] command_check_call: Running command: /sbin/mkfs -t xfs -f -i size=2048 -- /dev/sdb1

[node1][DEBUG] meta-data=/dev/sdb1 isize=2048 agcount=4, agsize=982975 blks

[node1][DEBUG] = sectsz=512 attr=2, projid32bit=1

[node1][DEBUG] = crc=0 finobt=0

[node1][DEBUG] data = bsize=4096 blocks=3931899, imaxpct=25

[node1][DEBUG] = sunit=0 swidth=0 blks

[node1][DEBUG] naming =version 2 bsize=4096 ascii-ci=0 ftype=0

[node1][DEBUG] log =internal log bsize=4096 blocks=2560, version=2

[node1][DEBUG] = sectsz=512 sunit=0 blks, lazy-count=1

[node1][DEBUG] realtime =none extsz=4096 blocks=0, rtextents=0

[node1][WARNIN] mount: Mounting /dev/sdb1 on /var/lib/ceph/tmp/mnt.6_6ywP with options noatime,inode64

[node1][WARNIN] command_check_call: Running command: /usr/bin/mount -t xfs -o noatime,inode64 -- /dev/sdb1 /var/lib/ceph/tmp/mnt.6_6ywP

[node1][WARNIN] command: Running command: /sbin/restorecon /var/lib/ceph/tmp/mnt.6_6ywP

[node1][WARNIN] populate_data_path: Preparing osd data dir /var/lib/ceph/tmp/mnt.6_6ywP

[node1][WARNIN] command: Running command: /sbin/restorecon -R /var/lib/ceph/tmp/mnt.6_6ywP/ceph_fsid.14578.tmp

[node1][WARNIN] command: Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.6_6ywP/ceph_fsid.14578.tmp

[node1][WARNIN] command: Running command: /sbin/restorecon -R /var/lib/ceph/tmp/mnt.6_6ywP/fsid.14578.tmp

[node1][WARNIN] command: Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.6_6ywP/fsid.14578.tmp

[node1][WARNIN] command: Running command: /sbin/restorecon -R /var/lib/ceph/tmp/mnt.6_6ywP/magic.14578.tmp

[node1][WARNIN] command: Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.6_6ywP/magic.14578.tmp

[node1][WARNIN] command: Running command: /sbin/restorecon -R /var/lib/ceph/tmp/mnt.6_6ywP/journal_uuid.14578.tmp

[node1][WARNIN] command: Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.6_6ywP/journal_uuid.14578.tmp

[node1][WARNIN] adjust_symlink: Creating symlink /var/lib/ceph/tmp/mnt.6_6ywP/journal -> /dev/disk/by-partuuid/75a991e0-d8e1-414f-825d-1635edc8fbe5

[node1][WARNIN] command: Running command: /sbin/restorecon -R /var/lib/ceph/tmp/mnt.6_6ywP

[node1][WARNIN] command: Running command: /usr/bin/chown -R ceph:ceph /var/lib/ceph/tmp/mnt.6_6ywP

[node1][WARNIN] unmount: Unmounting /var/lib/ceph/tmp/mnt.6_6ywP

[node1][WARNIN] command_check_call: Running command: /bin/umount -- /var/lib/ceph/tmp/mnt.6_6ywP

[node1][WARNIN] get_dm_uuid: get_dm_uuid /dev/sdb uuid path is /sys/dev/block/8:16/dm/uuid

[node1][WARNIN] command_check_call: Running command: /sbin/sgdisk --typecode=1:4fbd7e29-9d25-41b8-afd0-062c0ceff05d -- /dev/sdb

[node1][DEBUG] The operation has completed successfully.

[node1][WARNIN] update_partition: Calling partprobe on prepared device /dev/sdb

[node1][WARNIN] command_check_call: Running command: /usr/bin/udevadm settle --timeout=600

[node1][WARNIN] command: Running command: /sbin/partprobe /dev/sdb

[node1][WARNIN] command_check_call: Running command: /usr/bin/udevadm settle --timeout=600

[node1][WARNIN] command_check_call: Running command: /usr/bin/udevadm trigger --action=add --sysname-match sdb1

[node1][INFO] checking OSD status...

[node1][DEBUG] find the location of an executable

[node1][INFO] Running command: sudo /bin/ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG] Host node1 is now ready for osd use.

[ceph@node0 cluster]$
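The prepare log above is long, and most of it is device-mapper lookups. As a reading aid, here is a minimal sketch that keeps only the commands that were actually run on the node. It assumes log lines in the "[host][LEVEL] ... Running command: ..." shape shown above; the function name extract_commands and the short sample text are illustrative and not part of ceph-deploy.

```python
import re

# Pattern for a ceph-deploy log line that reports an executed command.
# The "[host][LEVEL]" prefix and the optional "source:" tag before
# "Running command:" follow the format seen in the log above (assumption).
RUNNING = re.compile(
    r"^\[(?P<host>[^\]]+)\]\[(?P<level>[^\]]+)\]\s+"
    r"(?:\w+:\s+)?Running command:\s+(?P<cmd>.+)$"
)


def extract_commands(log_text):
    """Return (host, command) pairs for every 'Running command' line."""
    commands = []
    for line in log_text.splitlines():
        match = RUNNING.match(line.strip())
        if match:
            commands.append((match.group("host"), match.group("cmd")))
    return commands


# A few lines copied from the log above, used as sample input.
sample = """\
[node1][INFO] Running command: sudo /usr/sbin/ceph-disk -v prepare --cluster ceph --fs-type xfs -- /dev/sdb
[node1][WARNIN] create_partition: Creating journal partition num 2 size 5120 on /dev/sdb
[node1][WARNIN] command_check_call: Running command: /sbin/mkfs -t xfs -f -i size=2048 -- /dev/sdb1
[ceph_deploy.osd][DEBUG] Host node1 is now ready for osd use.
"""

for host, cmd in extract_commands(sample):
    print(host, cmd)
```

Run against the full log, this reduces the output to the sequence of steps: create the journal partition, create the data partition, make the xfs filesystem, mount it, write the OSD metadata files, unmount, and set the final partition type code.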