
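HDFS is the primary distributed storage used by Hadoop applications. An HDFS cluster consists mainly of a NameNode, which manages the file system metadata, and DataNodes, which store the actual data. The HDFS architecture diagram shows the basic interactions among the NameNode, the DataNodes, and clients. A client contacts the NameNode for file metadata or to modify files, and it performs the actual file I/O directly against the DataNodes.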
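The toy, in-memory model below makes this division of labor concrete. It is not Hadoop code. The class names `ToyHdfs`, `ToyNameNode` and `ToyDataNode`, the 8-byte block size, and the round-robin placement are all hypothetical simplifications. The NameNode keeps only metadata: which block IDs make up a file and which DataNode holds each block. The client moves the bytes to and from the DataNodes itself.

```java
import java.io.ByteArrayOutputStream;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of the HDFS read/write path; NOT the real Hadoop classes. */
final class ToyHdfs {
    /** Hypothetical tiny block size so that a short file spans several blocks. */
    static final int BLOCK_SIZE = 8;

    /** A DataNode stores raw block bytes keyed by block ID. */
    static final class ToyDataNode {
        final Map<Long, byte[]> blocks = new HashMap<>();
    }

    /** The NameNode stores metadata only: path -> block IDs, block ID -> DataNode. */
    static final class ToyNameNode {
        final Map<String, List<Long>> files = new HashMap<>();
        final Map<Long, ToyDataNode> locations = new HashMap<>();
        long nextBlockId = 0;
    }

    final ToyNameNode nameNode = new ToyNameNode();
    final List<ToyDataNode> dataNodes = new ArrayList<>();

    ToyHdfs(int dataNodeCount) {
        if (dataNodeCount <= 0) throw new IllegalArgumentException("need at least one DataNode");
        for (int i = 0; i < dataNodeCount; i++) dataNodes.add(new ToyDataNode());
    }

    /** Client write: the NameNode hands out block IDs, the client ships bytes to DataNodes. */
    void write(String path, byte[] data) {
        List<Long> ids = new ArrayList<>();
        for (int off = 0; off < data.length; off += BLOCK_SIZE) {
            long id = nameNode.nextBlockId++;
            // Round-robin placement, one copy per block. Real HDFS uses a
            // rack-aware placement policy and replicates every block.
            ToyDataNode dn = dataNodes.get((int) (id % dataNodes.size()));
            int len = Math.min(BLOCK_SIZE, data.length - off);
            byte[] chunk = new byte[len];
            System.arraycopy(data, off, chunk, 0, len);
            dn.blocks.put(id, chunk);         // the data goes to a DataNode...
            nameNode.locations.put(id, dn);   // ...only metadata goes to the NameNode
            ids.add(id);
        }
        nameNode.files.put(path, ids);
    }

    /** Client read: ask the NameNode where the blocks are, then fetch each from its DataNode. */
    byte[] read(String path) {
        List<Long> ids = nameNode.files.get(path);
        if (ids == null) throw new IllegalArgumentException("No such file: " + path);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (long id : ids) {
            byte[] chunk = nameNode.locations.get(id).blocks.get(id);
            out.write(chunk, 0, chunk.length);
        }
        return out.toByteArray();
    }
}
```

With three DataNodes, a 24-byte file is split into three 8-byte blocks, one per DataNode. A read reassembles the original bytes without any file data passing through the NameNode.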

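Hadoop provides shell commands for interacting with HDFS directly. It also provides a Java API for operating on HDFS, for example to create, delete, upload, download, and rename files.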

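File operations in HDFS mainly involve the following classes:

Configuration: provides access to configuration parameters

FileSystem: the file system object; FileSystem.get(conf) returns the implementation named by fs.defaultFS (HDFS in this article)

Path: names a file or directory in a FileSystem. Path strings use the slash as the directory separator, and a path string that starts with a slash is absolute

FSDataInputStream and FSDataOutputStream: the input and output streams for HDFS files, returned by FileSystem.open() and FileSystem.create() respectively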
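The slash-separator and absolute-path rule for `Path` can be illustrated with a small pure-Java sketch. This is not `org.apache.hadoop.fs.Path`, and the class `PathRules` and its methods are hypothetical. It assumes, as HDFS does by default, that a relative path is resolved against the user's working directory, `/user/<username>` (here `/user/hadoop`).

```java
import java.util.ArrayList;
import java.util.List;

/** Toy illustration of slash-separated path rules; NOT org.apache.hadoop.fs.Path. */
final class PathRules {
    private PathRules() {}

    /** A path string is absolute if it starts with a slash. */
    static boolean isAbsolute(String path) {
        return path.startsWith("/");
    }

    /** Resolves a path against a working directory; absolute paths are returned unchanged. */
    static String resolve(String workingDir, String path) {
        if (isAbsolute(path)) return path;
        return workingDir.endsWith("/") ? workingDir + path : workingDir + "/" + path;
    }

    /** Splits a path on "/" into its components, skipping empty segments. */
    static List<String> components(String path) {
        List<String> parts = new ArrayList<>();
        for (String p : path.split("/")) {
            if (!p.isEmpty()) parts.add(p);
        }
        return parts;
    }
}
```

For instance, `resolve("/user/hadoop", "put-wc.input")` gives `/user/hadoop/put-wc.input`. That is the same file the upload in step 5 writes with an absolute path.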

The steps below show how to operate on HDFS with the Java API:

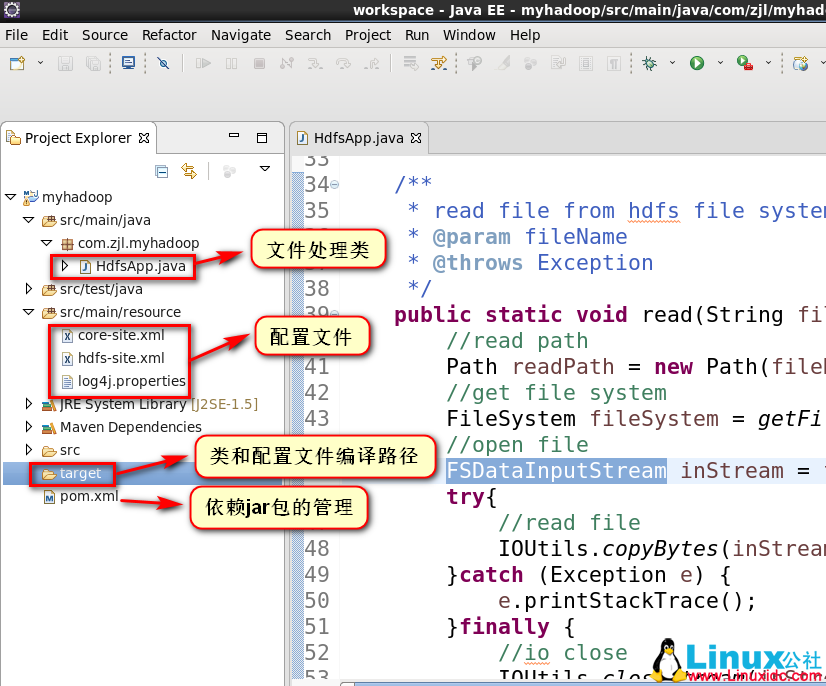

1. Project structure

2. pom.xml configuration

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.zjl</groupId>
  <artifactId>myhadoop</artifactId>
  <version>0.0.1-SNAPSHOT</version>
  <packaging>jar</packaging>

  <name>myhadoop</name>
  <url>http://maven.apache.org</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <hadoop.version>2.5.0</hadoop.version>
  </properties>

  <dependencies>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
    </dependency>
  </dependencies>
</project>
```

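3. Copy the HDFS-related configuration files (core-site.xml, hdfs-site.xml, log4j.properties) from the Hadoop installation directory into the project's resources directory (src/main/resources, which Maven puts on the classpath by default)

```shell
[hadoop@hadoop01 ~]$ cd /opt/modules/hadoop-2.6.5/etc/hadoop/
[hadoop@hadoop01 hadoop]$ cp core-site.xml hdfs-site.xml log4j.properties /opt/tools/workspace/myhadoop/src/main/resources/
[hadoop@hadoop01 hadoop]$
```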
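`new Configuration()` loads `core-site.xml` from the classpath, and the HDFS client classes add `hdfs-site.xml`. The copied files therefore only take effect if they actually end up on the classpath. The JDK-only sketch below can confirm this before you debug connection problems. The helper `ConfigOnClasspath` is hypothetical, and it looks resources up through the thread's context class loader, which may differ from the loader your Hadoop `Configuration` uses in unusual setups.

```java
import java.net.URL;

/** Checks whether Hadoop's site files are visible on the classpath (JDK only). */
final class ConfigOnClasspath {
    private ConfigOnClasspath() {}

    /** Returns the URL of a classpath resource, or null if it cannot be found. */
    static URL locate(String resourceName) {
        ClassLoader cl = Thread.currentThread().getContextClassLoader();
        if (cl == null) cl = ConfigOnClasspath.class.getClassLoader();
        return cl.getResource(resourceName);
    }

    /** Prints where each file was found (or that it is missing); true if all were found. */
    static boolean reportAll(String... names) {
        boolean allFound = true;
        for (String name : names) {
            URL url = locate(name);
            if (url == null) {
                System.out.println("MISSING from classpath: " + name);
                allFound = false;
            } else {
                System.out.println("found " + name + " at " + url);
            }
        }
        return allFound;
    }
}
```

Calling `ConfigOnClasspath.reportAll("core-site.xml", "hdfs-site.xml", "log4j.properties")` from inside the project should report all three files as found. If `core-site.xml` is missing, `fs.defaultFS` keeps its built-in default `file:///`, and paths such as `/user/hadoop/...` are looked up on the local disk instead of HDFS.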

(1) core-site.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <!-- If this is not set, data is read from the local file system by default -->
    <value>hdfs://hadoop01.zjl.com:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <!-- Base directory the Hadoop file system depends on; many other paths are derived from it.
         If hdfs-site.xml does not set the NameNode and DataNode storage locations,
         they are placed under this path by default -->
    <value>/opt/modules/hadoop-2.6.5/data/tmp</value>
  </property>
</configuration>
```
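`fs.defaultFS` is a URI. Its scheme selects the file system type (`hdfs` here, `file` for the local default), and its host and port give the NameNode's RPC address. The JDK's `java.net.URI` is enough to show how the value above breaks down. `DefaultFsParts` is a hypothetical helper for illustration only, not part of Hadoop.

```java
import java.net.URI;

/** Breaks an fs.defaultFS value into its parts (illustration only, JDK only). */
final class DefaultFsParts {
    private DefaultFsParts() {}

    static String describe(String defaultFs) {
        URI uri = URI.create(defaultFs);
        switch (String.valueOf(uri.getScheme())) {
            case "file":
                return "local file system";
            case "hdfs": {
                // getPort() returns -1 when the URI carries no explicit port.
                int port = uri.getPort();
                String where = port == -1 ? uri.getHost() + " (default port)" : uri.getHost() + ":" + port;
                return "HDFS NameNode at " + where;
            }
            default:
                return "other file system: " + uri.getScheme();
        }
    }
}
```

`describe("hdfs://hadoop01.zjl.com:9000")` returns `HDFS NameNode at hadoop01.zjl.com:9000`, and `describe("file:///")` returns `local file system`.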

(2) hdfs-site.xml

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
  Licensed under the Apache License, Version 2.0 (the "License");
  you may not use this file except in compliance with the License.
  You may obtain a copy of the License at

    http://www.apache.org/licenses/LICENSE-2.0

  Unless required by applicable law or agreed to in writing, software
  distributed under the License is distributed on an "AS IS" BASIS,
  WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
  See the License for the specific language governing permissions and
  limitations under the License. See accompanying LICENSE file.
-->

<!-- Put site-specific property overrides in this file. -->

<configuration>
  <property>
    <!-- default value 3 -->
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
```
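Values in the site files override the built-in defaults (`core-default.xml`, `hdfs-default.xml`) because resources loaded later take precedence. The sketch below models that layering with plain maps. It is not Hadoop's `Configuration`, which also handles `final` properties and `${...}` variable expansion. `LayeredConfig` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of layered configuration: later resources override earlier ones. */
final class LayeredConfig {
    private final List<Map<String, String>> layers = new ArrayList<>();

    /** Adds a resource that takes precedence over every resource added before it. */
    LayeredConfig addResource(Map<String, String> resource) {
        layers.add(new HashMap<>(resource));
        return this;
    }

    /** Looks a key up, searching from the most recently added resource backwards. */
    String get(String key) {
        for (int i = layers.size() - 1; i >= 0; i--) {
            String v = layers.get(i).get(key);
            if (v != null) return v;
        }
        return null;
    }
}
```

Suppose only this `hdfs-site.xml` is layered on top of the defaults. Then `dfs.replication` resolves to `1`, while `fs.defaultFS` stays at `file:///` until `core-site.xml` supplies the `hdfs://` URI.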

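(3) log4j.properties: the default file is fine. It configures how Hadoop's log messages are printed; without it, the Eclipse console shows a warning when the program runs.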

4. Start the HDFS daemons and create a file in HDFS (the Java program in step 5 reads this file)

```shell
[hadoop@hadoop01 hadoop]$ cd /opt/modules/hadoop-2.6.5/
[hadoop@hadoop01 hadoop-2.6.5]$ sbin/start-dfs.sh
17/06/21 22:59:32 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Starting namenodes on [hadoop01.zjl.com]
hadoop01.zjl.com: starting namenode, logging to /opt/modules/hadoop-2.6.5/logs/hadoop-hadoop-namenode-hadoop01.zjl.com.out
hadoop01.zjl.com: starting datanode, logging to /opt/modules/hadoop-2.6.5/logs/hadoop-hadoop-datanode-hadoop01.zjl.com.out
Starting secondary namenodes [0.0.0.0]
0.0.0.0: starting secondarynamenode, logging to /opt/modules/hadoop-2.6.5/logs/hadoop-hadoop-secondarynamenode-hadoop01.zjl.com.out
17/06/21 23:00:06 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@hadoop01 hadoop-2.6.5]$ jps
3987 NameNode
4377 Jps
4265 SecondaryNameNode
4076 DataNode
3135 org.eclipse.equinox.launcher_1.3.201.v20161025-1711.jar
[hadoop@hadoop01 hadoop-2.6.5]$ bin/hdfs dfs -mkdir -p /user/hadoop/mapreduce/wordcount/input
17/06/21 23:07:21 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@hadoop01 hadoop-2.6.5]$ cat wcinput/wc.input
hadoop yarn
hadoop mapreduce
hadoop hdfs
yarn nodemanager
hadoop resourcemanager
[hadoop@hadoop01 hadoop-2.6.5]$ bin/hdfs dfs -put wcinput/wc.input /user/hadoop/mapreduce/wordcount/input/
17/06/21 23:20:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[hadoop@hadoop01 hadoop-2.6.5]$
```
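HDFS stores each file as a sequence of fixed-size blocks, and each block is kept `dfs.replication` times. A small arithmetic sketch shows what the `-put` above produces. The helper `BlockMath` is hypothetical, and the sketch assumes the Hadoop 2.x default `dfs.blocksize` of 134217728 bytes (128 MB). A file as small as `wc.input` fits in one block, and with `dfs.replication` set to 1 only one copy is stored. The last block of a file occupies only as much disk space as the data it holds, not the full block size.

```java
/** Block and replica arithmetic for a single HDFS file (illustrative only). */
final class BlockMath {
    /** Assumed Hadoop 2.x default dfs.blocksize: 128 MB. */
    static final long DEFAULT_BLOCK_SIZE = 128L * 1024 * 1024;

    private BlockMath() {}

    /** Number of blocks a file of the given length occupies (ceiling division; 0 for an empty file). */
    static long blockCount(long fileLength, long blockSize) {
        return (fileLength + blockSize - 1) / blockSize;
    }

    /** Raw bytes stored across all DataNodes: every byte is kept `replication` times. */
    static long rawBytesStored(long fileLength, int replication) {
        return fileLength * replication;
    }
}
```

For comparison, a 300 MB file would need `blockCount(300L * 1024 * 1024, DEFAULT_BLOCK_SIZE)` = 3 blocks. With the default replication factor of 3, it would take three times its size in raw DataNode storage.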

5. Java code

```java
package com.zjl.myhadoop;

import java.io.File;
import java.io.FileInputStream;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;

/**
 * Reads a file from HDFS and uploads a local file to HDFS.
 *
 * @author hadoop
 */
public class HdfsApp {

    /**
     * Get the file system.
     *
     * @return the FileSystem named by fs.defaultFS
     * @throws Exception
     */
    public static FileSystem getFileSystem() throws Exception {
        // Read configuration from the classpath: core-default.xml and core-site.xml
        // (hdfs-default.xml and hdfs-site.xml are added by the HDFS client classes)
        Configuration conf = new Configuration();
        // Create (or reuse a cached) file system for fs.defaultFS
        FileSystem fileSystem = FileSystem.get(conf);
        return fileSystem;
    }

    /**
     * Read a file from the HDFS file system and print it to the console.
     *
     * @param fileName
     * @throws Exception
     */
    public static void read(String fileName) throws Exception {
        // read path
        Path readPath = new Path(fileName);
        // get file system
        FileSystem fileSystem = getFileSystem();
        // open file
        FSDataInputStream inStream = fileSystem.open(readPath);
        try {
            // read file: copy to stdout through a 4096-byte buffer;
            // false = do not close the streams here
            IOUtils.copyBytes(inStream, System.out, 4096, false);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            // io close
            IOUtils.closeStream(inStream);
        }
    }

    /**
     * Upload a file from the local file system to the HDFS file system.
     *
     * @param inFileName  local source file
     * @param outFileName HDFS target path
     * @throws Exception
     */
    public static void upload(String inFileName, String outFileName) throws Exception {
        // file input stream, local file
        FileInputStream inStream = new FileInputStream(new File(inFileName));
        // get file system
        FileSystem fileSystem = getFileSystem();
        // write path, hdfs file system
        Path writePath = new Path(outFileName);
        // output stream
        FSDataOutputStream outStream = fileSystem.create(writePath);
        try {
            // write file
            IOUtils.copyBytes(inStream, outStream, 4096, false);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            // io close
            IOUtils.closeStream(inStream);
            IOUtils.closeStream(outStream);
        }
    }

    public static void main(String[] args) throws Exception {
        // 1. read file from hdfs to console
        // String fileName = "/user/hadoop/mapreduce/wordcount/input/wc.input";
        // read(fileName);

        // 2. upload file from local file system to hdfs file system
        String inFileName = "/opt/modules/hadoop-2.6.5/wcinput/wc.input";
        String outFileName = "/user/hadoop/put-wc.input";
        upload(inFileName, outFileName);
    }
}
```
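`IOUtils.copyBytes(in, out, 4096, false)` copies through a 4096-byte buffer. Because the last argument is `false`, it leaves both streams open, which is why the code above closes them in `finally`. The JDK-only sketch below mirrors that loop and shows the try-with-resources alternative. The class `StreamCopy` is hypothetical, and its `Path` is `java.nio.file.Path`, not Hadoop's. Try-with-resources closes every stream that was successfully opened, even if a later step throws. The same shape should carry over to `fileSystem.open(...)` and `fileSystem.create(...)`, since `FSDataInputStream` and `FSDataOutputStream` are ordinary `java.io` streams.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path; // java.nio.file.Path, not org.apache.hadoop.fs.Path

final class StreamCopy {
    private StreamCopy() {}

    /** Copies all bytes through a fixed-size buffer and flushes out; closes neither stream. */
    static long copyBytes(InputStream in, OutputStream out, int bufferSize) throws IOException {
        byte[] buf = new byte[bufferSize];
        long total = 0;
        int n;
        while ((n = in.read(buf)) != -1) {
            out.write(buf, 0, n);
            total += n;
        }
        out.flush();
        return total;
    }

    /** Local-file analogue of upload(): both streams are closed automatically, even on error. */
    static long copyFile(Path source, Path target) throws IOException {
        try (InputStream in = Files.newInputStream(source);
             OutputStream out = Files.newOutputStream(target)) {
            return copyBytes(in, out, 4096);
        }
    }
}
```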

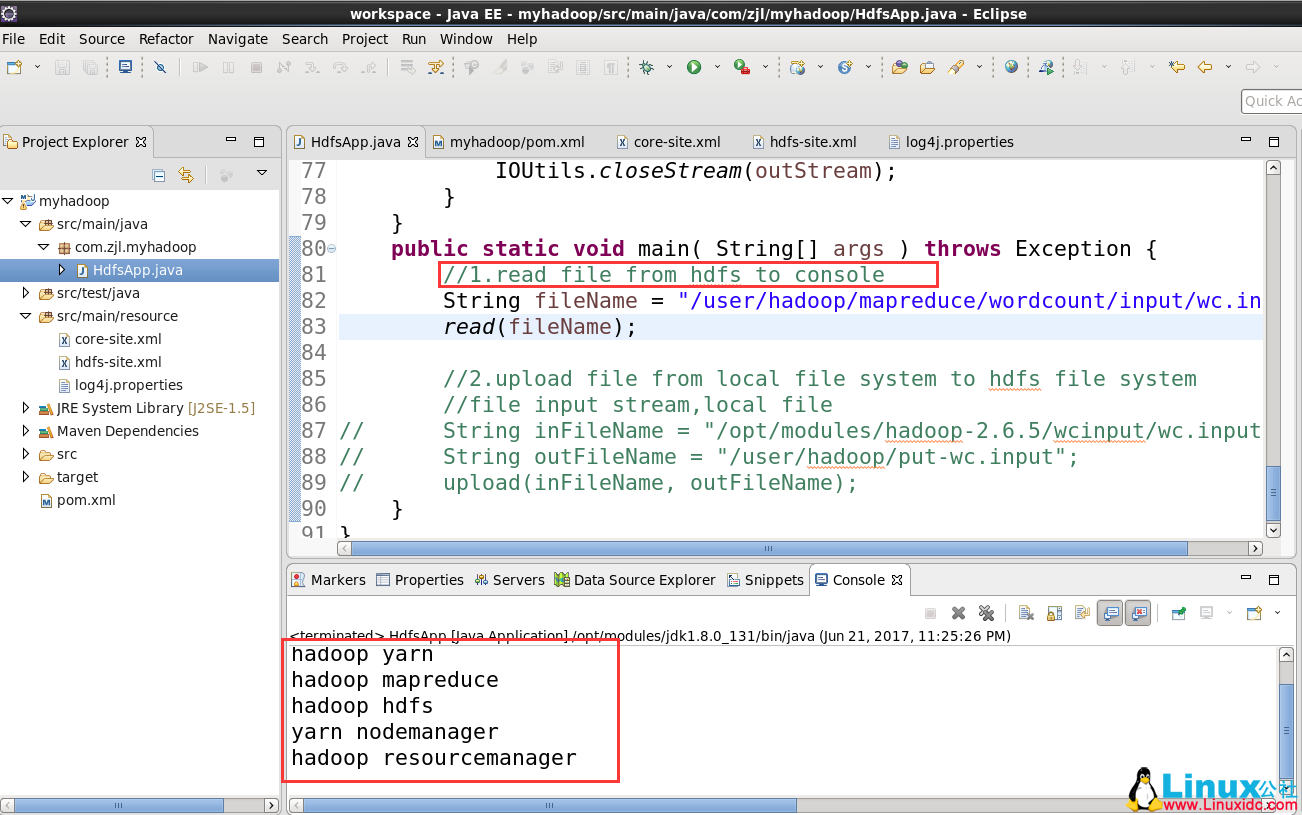

6. Call read(fileName) (the read call in main is commented out; enable it to run this step)

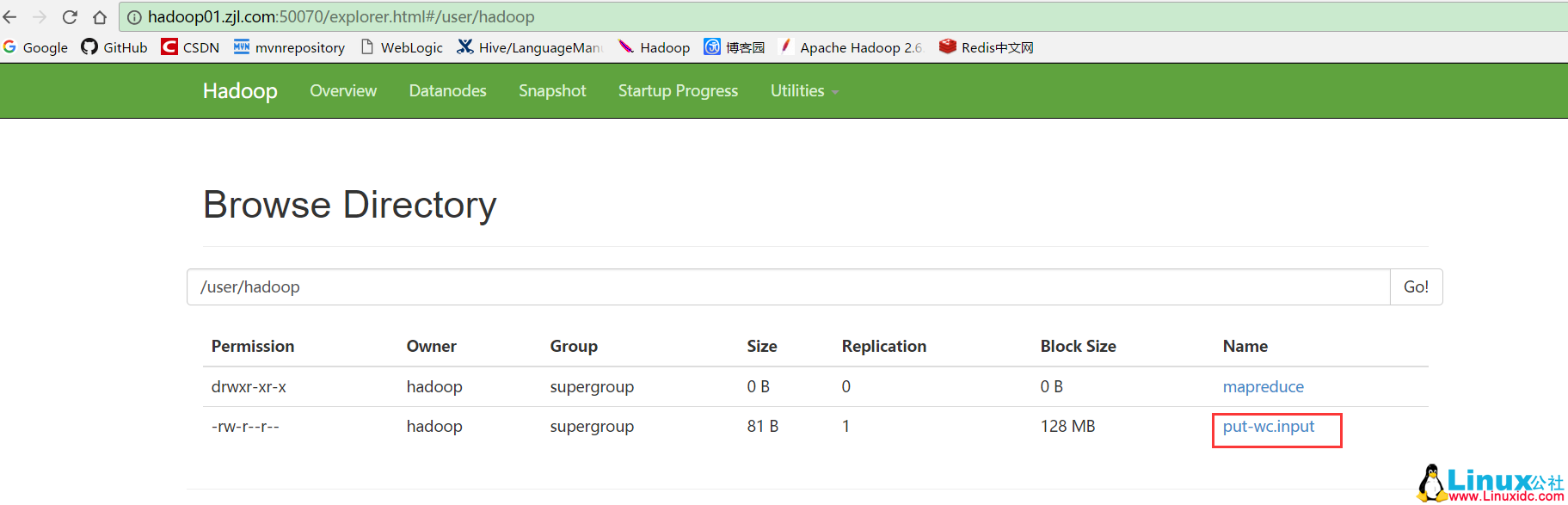

7. Browse the HDFS file system (for example, in the NameNode web UI) and view the /user/hadoop directory

8. Call upload(inFileName, outFileName), then refresh the page from step 7: the file has been uploaded successfully


Permanent link to this article: http://www.linuxidc.com/Linux/2017-07/145448.htm