
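Hadoop deployment modes

Standalone (local) mode: simple to install and needs almost no configuration, but is suitable only for debugging.

Pseudo-distributed mode: a single node runs all five daemons (namenode, datanode, jobtracker, tasktracker, and secondary namenode) to simulate the nodes of a distributed cluster.

Fully distributed mode: a regular Hadoop cluster made up of multiple nodes, each with its own role.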

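Installation environment

Virtualization platform: VMware 2

Operating system: Oracle Linux 5.6

Software versions: hadoop-0.20.2 (the version shown in the transcripts and logs below; the download example in step 1 names hadoop-0.22.0), jdk-6u18

Cluster layout: 3 nodes, one master (gc) and two slaves (rac1, rac2)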

Installation steps

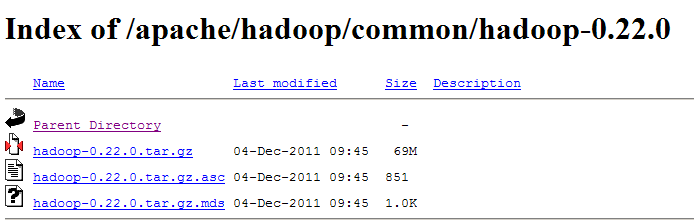

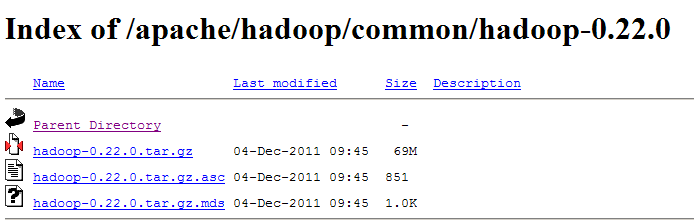

1. Download Hadoop and the JDK:

http://mirror.bit.edu.cn/apache/hadoop/common/

For example: hadoop-0.22.0

2. Configure the hosts file

On every node (gc, rac1, rac2), edit /etc/hosts so that each node can resolve the others' hostnames to IP addresses.

[root@gc ~]# cat /etc/hosts

# Do not remove the following line, or various programs

# that require network functionality will fail.

127.0.0.1 localhost.localdomain localhost

::1 localhost6.localdomain6 localhost6

192.168.2.101 rac1.localdomain rac1

192.168.2.102 rac2.localdomain rac2

192.168.2.100 gc.localdomain gc
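Before moving on, it can help to confirm that every cluster hostname really has an entry in the hosts file on each node. The following is a minimal sketch, not part of Hadoop: check_hosts is a hypothetical helper that scans a hosts file for the names you pass in and reports any that are missing.

```shell
#!/usr/bin/env bash
# Sketch: report cluster hostnames that have no entry in a hosts file.
# check_hosts is a hypothetical helper, not part of Hadoop.
check_hosts() {
    local hosts_file=$1; shift
    local host missing=0
    for host in "$@"; do
        # Skip comment lines, then look for the name in any column after the IP.
        if ! awk -v h="$host" '
                /^[[:space:]]*#/ { next }
                { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                END { exit !found }' "$hosts_file"; then
            echo "missing: $host"
            missing=1
        fi
    done
    return "$missing"
}
```

For the cluster in this article the call would be `check_hosts /etc/hosts gc rac1 rac2`; it prints nothing and returns 0 when all three names are present.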

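3. Create the Hadoop runtime account

Create the Hadoop runtime account on every node.

[root@gc ~]# groupadd hadoop

[root@gc ~]# useradd -g hadoop grid    # be sure to specify the group here; otherwise setting up SSH trust may fail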

[root@gc ~]# id grid

uid=501(grid) gid=54326(hadoop) groups=54326(hadoop)

[root@gc ~]# passwd grid

Changing password for user grid.

New UNIX password:

BAD PASSWORD: it is too short

Retype new UNIX password:

passwd: all authentication tokens updated successfully.

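4. Configure passwordless SSH login

Log in as the Hadoop runtime account and work in that account's home directory. (The prompts in this step show a user named hadoop, while the other steps use grid; use the same account throughout.)

Perform the following on every node.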

[hadoop@gc ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Created directory '/home/hadoop/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

54:80:fd:77:6b:87:97:ce:0f:32:34:43:d1:d2:c2:0d hadoop@gc.localdomain

[hadoop@gc ~]$ cd .ssh

[hadoop@gc .ssh]$ ls

id_rsa id_rsa.pub

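Append every node's public key (id_rsa.pub) to authorized_keys and copy the combined file to all nodes; after that, the nodes can SSH into one another without a password.

All of this can be done from a single node (gc here).

[hadoop@gc .ssh]$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[hadoop@gc .ssh]$ ssh rac1 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys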

The authenticity of host 'rac1 (192.168.2.101)' can't be established.

RSA key fingerprint is 19:48:e0:0a:37:e1:2a:d5:ba:c8:7e:1b:37:c6:2f:0e.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac1,192.168.2.101' (RSA) to the list of known hosts.

hadoop@rac1's password:

[hadoop@gc .ssh]$ ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'rac2 (192.168.2.102)' can't be established.

RSA key fingerprint is 19:48:e0:0a:37:e1:2a:d5:ba:c8:7e:1b:37:c6:2f:0e.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2,192.168.2.102' (RSA) to the list of known hosts.

hadoop@rac2's password:

[hadoop@gc .ssh]$ scp ~/.ssh/authorized_keys rac1:~/.ssh/authorized_keys

hadoop@rac1's password:

authorized_keys 100% 1213 1.2KB/s 00:00

[hadoop@gc .ssh]$ scp ~/.ssh/authorized_keys rac2:~/.ssh/authorized_keys

hadoop@rac2's password:

authorized_keys 100% 1213 1.2KB/s 00:00

[hadoop@gc .ssh]$ ll

total 16

-rw-rw-r-- 1 hadoop hadoop 1213 10-30 09:18 authorized_keys

-rw------- 1 hadoop hadoop 1675 10-30 09:05 id_rsa

-rw-r--r-- 1 hadoop hadoop 403 10-30 09:05 id_rsa.pub

-- Test the connection to each node

[grid@gc .ssh]$ ssh rac1 date

Sun Nov 18 01:35:39 CST 2012

[grid@gc .ssh]$ ssh rac2 date

Tue Oct 30 09:52:46 CST 2012

-- This step is the same as establishing SSH user equivalence when configuring Oracle RAC.
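The key exchange above appends keys by hand, which makes it easy to add the same key twice or to leave authorized_keys with loose permissions. As a minimal sketch (add_keys is a hypothetical helper, not an OpenSSH tool), the merge can be written so that it is safe to repeat. The chmod at the end rests on an assumption: sshd running with StrictModes, the OpenSSH default, may ignore a key file that is writable by group or others.

```shell
#!/usr/bin/env bash
# Sketch: append public keys to an authorized_keys file without duplicates.
# add_keys is a hypothetical helper; it only touches the files you pass in.
add_keys() {
    local auth_file=$1; shift
    local pub key
    mkdir -p "$(dirname "$auth_file")"
    touch "$auth_file"
    for pub in "$@"; do
        while IFS= read -r key || [ -n "$key" ]; do
            [ -n "$key" ] || continue
            # -x: whole-line match, -F: fixed string, so keys are compared literally.
            grep -qxF -- "$key" "$auth_file" || printf '%s\n' "$key" >> "$auth_file"
        done < "$pub"
    done
    # Assumption: sshd runs with StrictModes, which rejects key files
    # that are writable by group or others.
    chmod 600 "$auth_file"
}
```

A possible use is to collect each node's id_rsa.pub into a scratch directory and run `add_keys ~/.ssh/authorized_keys gc.pub rac1.pub rac2.pub` (the .pub file names here are illustrative), then copy the result to the other nodes with scp as shown above.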

5. Unpack the Hadoop package

-- Unpack and configure on one node first

[grid@gc ~]$ ll

total 43580

-rw-r--r-- 1 grid hadoop 44575568 2012-11-19 hadoop-0.20.2.tar.gz

[grid@gc ~]$ tar xzvf /home/grid/hadoop-0.20.2.tar.gz

[grid@gc ~]$ ll

total 43584

drwxr-xr-x 12 grid hadoop 4096 2010-02-19 hadoop-0.20.2

-rw-r--r-- 1 grid hadoop 44575568 2012-11-19 hadoop-0.20.2.tar.gz

-- Install the JDK on every node

[root@gc ~]# ./jdk-6u18-linux-x64-rpm.bin


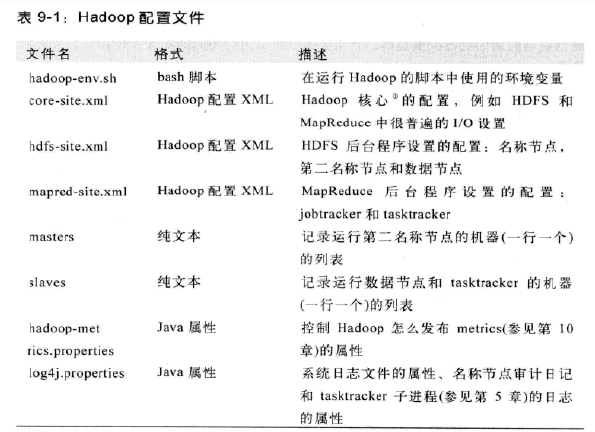

6. Edit the Hadoop configuration files

- Configure hadoop-env.sh

[root@gc conf]# pwd

/root/hadoop-0.20.2/conf

-- Set the JDK installation path

[root@gc conf]# vi hadoop-env.sh

export JAVA_HOME=/usr/java/jdk1.6.0_18
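The same edit can be scripted so that it is repeatable on every node. This is a sketch only: set_java_home is a hypothetical helper, and it assumes GNU sed (for the -i option), which is what Oracle Linux ships. It replaces an existing export of JAVA_HOME, commented out or not, and appends one if the file has none.

```shell
#!/usr/bin/env bash
# Sketch: set JAVA_HOME in a hadoop-env.sh file, replacing an existing
# (possibly commented-out) export or appending one if none exists.
# set_java_home is a hypothetical helper, not a Hadoop tool.
set_java_home() {
    local env_file=$1 java_home=$2
    if grep -qE '^[[:space:]]*#?[[:space:]]*export[[:space:]]+JAVA_HOME=' "$env_file"; then
        # "|" is the sed delimiter because the path contains "/".
        sed -i "s|^[[:space:]]*#\{0,1\}[[:space:]]*export[[:space:]]\{1,\}JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
    else
        printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
    fi
}
```

With the paths used in this article, the call would be `set_java_home /home/grid/hadoop-0.20.2/conf/hadoop-env.sh /usr/java/jdk1.6.0_18`.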

- Configure the NameNode by editing the site files

-- Edit core-site.xml

[grid@gc conf]$ vi core-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://gc:9000</value> <!-- in fully distributed mode, do not use localhost; use the master node's IP address or hostname -->

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/grid/hadoop/tmp</value>

</property>

</configuration>

Note: fs.default.name is the address of the NameNode (IP address or hostname, plus port).

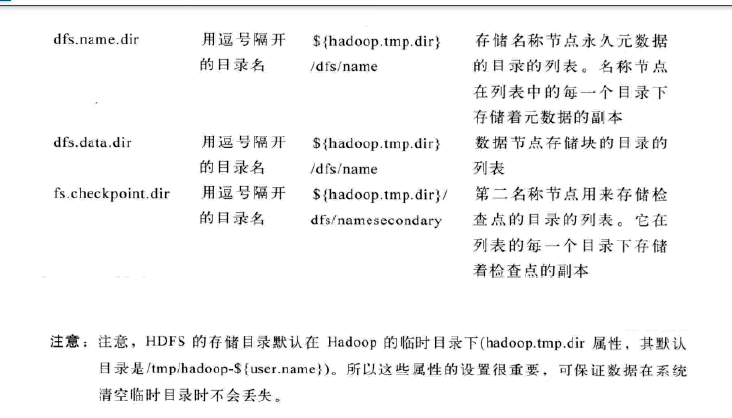

-- Edit hdfs-site.xml

[grid@gc conf]$ vi hdfs-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.data.dir</name>

<value>/home/grid/hadoop-0.20.2/data</value> <!-- this directory must exist (or be creatable by the Hadoop user) and be readable and writable -->

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

Common configuration parameters in hdfs-site.xml:

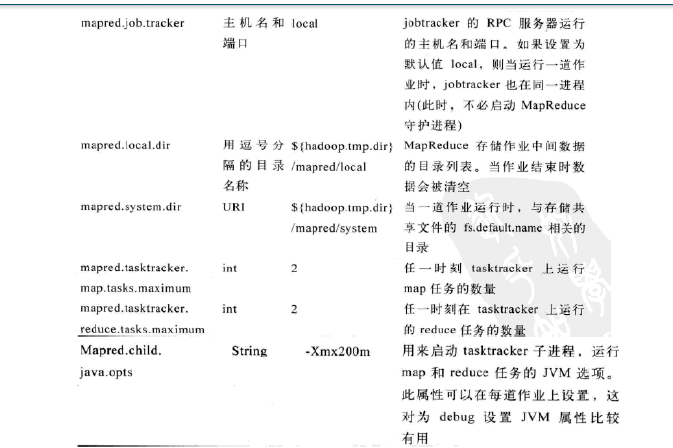

-- Edit mapred-site.xml

[grid@gc conf]$ vi mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>gc:9001</value>

</property>

</configuration>
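The three site files share one shape: a configuration element holding name/value properties. As a sketch of that structure, the hypothetical helper write_site_xml below generates such a file from name/value pairs. It is an illustration rather than a Hadoop tool, and it writes values unescaped, so they must not contain XML special characters.

```shell
#!/usr/bin/env bash
# Sketch: write a Hadoop *-site.xml file from name/value pairs.
# write_site_xml is a hypothetical helper; values are written as-is, so they
# must not contain XML special characters such as & or <.
write_site_xml() {
    local out=$1; shift
    {
        printf '<?xml version="1.0"?>\n'
        printf '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>\n'
        printf '<configuration>\n'
        # Consume the remaining arguments two at a time: name, then value.
        while [ "$#" -ge 2 ]; do
            printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
            shift 2
        done
        printf '</configuration>\n'
    } > "$out"
}
```

Using the values from the listings in this step, the files could be produced with `write_site_xml core-site.xml fs.default.name hdfs://gc:9000 hadoop.tmp.dir /home/grid/hadoop/tmp` and `write_site_xml mapred-site.xml mapred.job.tracker gc:9001`.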

Common configuration parameters in mapred-site.xml

- Configure the masters and slaves files

[grid@gc conf]$ vi masters

gc

[grid@gc conf]$ vi slaves

rac1

rac2

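- Copy Hadoop to the other nodes

-- Copy the configured Hadoop directory from gc to each of the other nodes

-- Note: if any configuration value is specific to a node, adjust it after copying; fs.default.name and mapred.job.tracker must keep pointing at the master (gc) on every node

[grid@gc ~]$ scp -r hadoop-0.20.2 rac1:/home/grid/

[grid@gc ~]$ scp -r hadoop-0.20.2 rac2:/home/grid/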
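With more than a couple of slaves, typing one scp per host gets tedious. Since conf/slaves already lists the target hosts, the copy can be driven from that file. The sketch below uses a hypothetical helper named distribute and a hypothetical DRY_RUN variable; by default it only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: print (or run) one scp command per host listed in conf/slaves.
# distribute and DRY_RUN are hypothetical names; DRY_RUN=1 (the default)
# only prints the commands.
distribute() {
    local src_dir=$1 slaves_file=$2 dest=$3
    local host
    while IFS= read -r host || [ -n "$host" ]; do
        # Skip blank lines and comments.
        case $host in ''|\#*) continue ;; esac
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "scp -r $src_dir $host:$dest"
        else
            # Redirect stdin so scp/ssh cannot swallow the rest of the host list.
            scp -r "$src_dir" "$host:$dest" < /dev/null
        fi
    done < "$slaves_file"
}
```

Run `distribute /home/grid/hadoop-0.20.2 /home/grid/hadoop-0.20.2/conf/slaves /home/grid/` to preview the commands, and prefix the call with `DRY_RUN=0` to perform the copy.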

7. Format the NameNode

-- Format HDFS on the master (NameNode) only; the slave nodes do not need to be formatted

[grid@gc bin]$ pwd

/home/grid/hadoop-0.20.2/bin

[grid@gc bin]$ ./hadoop namenode -format

12/10/31 08:03:31 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = gc.localdomain/192.168.2.100

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 0.20.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20 -r 911707; compiled by 'chrisdo' on Fri Feb 19 08:07:34 UTC 2010

************************************************************/

12/10/31 08:03:31 INFO namenode.FSNamesystem: fsOwner=grid,hadoop

12/10/31 08:03:31 INFO namenode.FSNamesystem: supergroup=supergroup

12/10/31 08:03:31 INFO namenode.FSNamesystem: isPermissionEnabled=true

12/10/31 08:03:32 INFO common.Storage: Image file of size 94 saved in 0 seconds.

12/10/31 08:03:32 INFO common.Storage: Storage directory /tmp/hadoop-grid/dfs/name has been successfully formatted.

12/10/31 08:03:32 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at gc.localdomain/192.168.2.100

************************************************************/

8. Start Hadoop

-- Start the Hadoop daemons from the master node

[grid@gc bin]$ pwd

/home/grid/hadoop-0.20.2/bin

[grid@gc bin]$ ./start-all.sh

starting namenode, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-namenode-gc.localdomain.out

rac2: starting datanode, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-datanode-rac2.localdomain.out

rac1: starting datanode, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-datanode-rac1.localdomain.out

The authenticity of host 'gc (192.168.2.100)' can't be established.

RSA key fingerprint is 8e:47:42:44:bd:e2:28:64:10:40:8e:b5:72:f9:6c:82.

Are you sure you want to continue connecting (yes/no)? yes

gc: Warning: Permanently added 'gc,192.168.2.100' (RSA) to the list of known hosts.

gc: starting secondarynamenode, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-secondarynamenode-gc.localdomain.out

starting jobtracker, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-jobtracker-gc.localdomain.out

rac2: starting tasktracker, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-tasktracker-rac2.localdomain.out

rac1: starting tasktracker, logging to /home/grid/hadoop-0.20.2/bin/../logs/hadoop-grid-tasktracker-rac1.localdomain.out

9. Use jps to verify that the daemons started

-- Check the daemons on the master node

[grid@gc bin]$ /usr/java/jdk1.6.0_18/bin/jps

27462 NameNode

29012 Jps

27672 JobTracker

27607 SecondaryNameNode

-- Check the daemons on the slave nodes

[grid@rac1 conf]$ /usr/java/jdk1.6.0_18/bin/jps

16722 Jps

16672 TaskTracker

16577 DataNode

[grid@rac2 conf]$ /usr/java/jdk1.6.0_18/bin/jps

31451 DataNode

31547 TaskTracker

31608 Jps
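Reading jps output by eye works for three nodes, but the comparison against the expected daemon list is easy to script. In the sketch below, missing_daemons is a hypothetical helper that reads jps output on standard input and prints each expected daemon that does not appear.

```shell
#!/usr/bin/env bash
# Sketch: read `jps` output on stdin and print every expected daemon that is
# not listed. missing_daemons is a hypothetical helper, not part of Hadoop.
missing_daemons() {
    local running name rc=0
    # jps prints "<pid> <name>"; keep only the name column.
    running=$(awk '{ print $2 }')
    for name in "$@"; do
        # -x matches whole lines, so NameNode does not match SecondaryNameNode.
        if ! printf '%s\n' "$running" | grep -qxF -- "$name"; then
            echo "not running: $name"
            rc=1
        fi
    done
    return "$rc"
}
```

On the master this could be used as `/usr/java/jdk1.6.0_18/bin/jps | missing_daemons NameNode SecondaryNameNode JobTracker`, and for a slave as `ssh rac1 /usr/java/jdk1.6.0_18/bin/jps | missing_daemons DataNode TaskTracker`.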

Check the web interfaces:

http://192.168.2.100:50070/dfshealth.jsp

http://192.168.2.100:50030/jobtracker.jsp

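10. Problems encountered during installation

1) SSH trust cannot be established

When the user was created without specifying a group, as in the steps below, SSH trust could not be established.

[root@gc ~]# useradd grid

[root@gc ~]# passwd grid

Solution:

Create a new group and specify it when creating the user.

[root@gc ~]# groupadd hadoop

[root@gc ~]# useradd -g hadoop grid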

[root@gc ~]# id grid

uid=501(grid) gid=54326(hadoop) groups=54326(hadoop)

[root@gc ~]# passwd grid

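2) After starting Hadoop, the slave nodes have no datanode process

Symptom:

After Hadoop is started from the master node, the master's processes are normal, but the slave nodes have no datanode process.

-- The master node is normal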

[grid@gc bin]$ /usr/java/jdk1.6.0_18/bin/jps

29843 Jps

29703 JobTracker

29634 SecondaryNameNode

29485 NameNode

-- On the two slave nodes, however, there is still no datanode process

[grid@rac1 bin]$ /usr/java/jdk1.6.0_18/bin/jps

5528 Jps

3213 TaskTracker

[grid@rac2 bin]$ /usr/java/jdk1.6.0_18/bin/jps

30518 TaskTracker

30623 Jps

Cause:

-- Go back to the output printed when Hadoop was started on the master node, and find the datanode startup log on the slave node

[grid@rac2 logs]$ pwd

/home/grid/hadoop-0.20.2/logs

[grid@rac1 logs]$ more hadoop-grid-datanode-rac1.localdomain.log

/************************************************************

STARTUP_MSG: Starting DataNode

STARTUP_MSG: host = rac1.localdomain/192.168.2.101

STARTUP_MSG: args = []

STARTUP_MSG: version = 0.20.2

STARTUP_MSG: build = https://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20 -r 911707; compiled by 'chrisdo' on Fri Feb 19 08:07:34 UTC 2010

************************************************************/

2012-11-18 07:43:33,513 WARN org.apache.hadoop.hdfs.server.datanode.DataNode: Invalid directory in dfs.data.dir: can not create directory: /usr/hadoop-0.20.2/data

2012-11-18 07:43:33,513 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: All directories in dfs.data.dir are invalid.

2012-11-18 07:43:33,571 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down DataNode at rac1.localdomain/192.168.2.101

************************************************************/

-- The data directory configured in hdfs-site.xml did not exist and, as the log shows, the DataNode could not create it

Solution:

Create the HDFS data directory on every node and update the dfs.data.dir parameter in hdfs-site.xml.

[grid@gc ~]$ mkdir -p /home/grid/hadoop-0.20.2/data

[grid@gc conf]$ vi hdfs-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.data.dir</name>

<value>/home/grid/hadoop-0.20.2/data</value> <!-- this directory must exist (or be creatable by the Hadoop user) and be readable and writable -->

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

</configuration>

-- Restart Hadoop; the slave processes are now normal

[grid@gc bin]$ ./stop-all.sh

[grid@gc bin]$ ./start-all.sh
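To catch this problem before the daemons start, the data directory can be checked up front on each node. The sketch below uses a hypothetical helper, ensure_data_dir, which tries to create the directory and then confirms the current user can write to it. It should be run as the same account that starts Hadoop (grid in this article).

```shell
#!/usr/bin/env bash
# Sketch: make sure a directory exists and is writable by the current user.
# ensure_data_dir is a hypothetical helper, not part of Hadoop.
ensure_data_dir() {
    local dir=$1
    # mkdir -p succeeds if the directory already exists.
    if ! mkdir -p "$dir" 2>/dev/null; then
        echo "cannot create: $dir"
        return 1
    fi
    if [ ! -w "$dir" ]; then
        echo "not writable: $dir"
        return 1
    fi
    echo "ok: $dir"
}
```

For example, `ensure_data_dir /home/grid/hadoop-0.20.2/data` on each slave would have reported the failure seen in the log above when pointed at /usr/hadoop-0.20.2/data as a non-root user.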

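3) "Warning: $HADOOP_HOME is deprecated." in hadoop 1.0.1

With Hadoop 1.0.1, running the hadoop command frequently prints:

Warning: $HADOOP_HOME is deprecated.

Looking at the hadoop and hadoop-config.sh scripts in hadoop-1.0.1 shows that they check HADOOP_HOME.

There are two ways to resolve it:

1. Find the if ... fi block in hadoop-config.sh and comment it out. (Not recommended)

2. Edit .bash_profile in the home directory and add an environment variable: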

export HADOOP_HOME_WARN_SUPPRESS=1
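If the profile is edited by a script rather than by hand, it is worth making the change repeatable so the line is not added twice. A minimal sketch, using a hypothetical helper named append_once:

```shell
#!/usr/bin/env bash
# Sketch: append a line to a file only if that exact line is not there yet.
# append_once is a hypothetical helper.
append_once() {
    local line=$1 file=$2
    touch "$file"
    # -x: whole-line match, -F: fixed string (no regex interpretation).
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For this fix the call would be `append_once 'export HADOOP_HOME_WARN_SUPPRESS=1' ~/.bash_profile`; the setting takes effect at the next login or after sourcing the file.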
