
Environment: Ubuntu 16.04

Required software: JDK and ssh

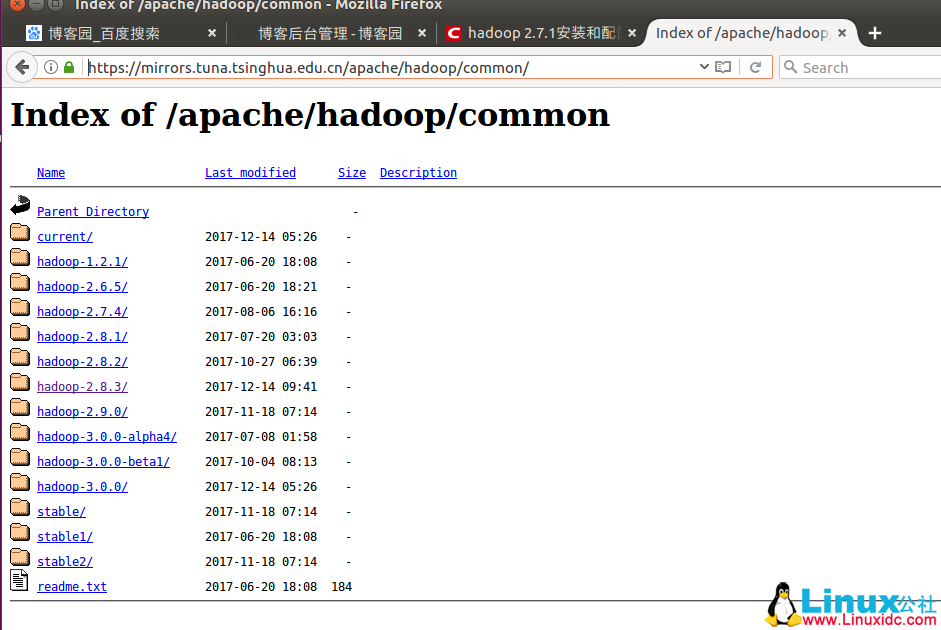

Download Hadoop from the Tsinghua University Apache mirror:

https://mirrors.tuna.tsinghua.edu.cn/apache/Hadoop/common/

Version used in this guide: Hadoop 2.8.3

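1. Install the JDK and configure its environment variables

2. Install ssh and rsync. The main goal is passwordless SSH login to localhost, which the Hadoop start scripts rely on.

Ubuntu 16.04 ships OpenSSH 7.2, which disables DSA keys by default, so generate an RSA key:

```shell
sudo apt-get install ssh
sudo apt-get install rsync
ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
chmod 0600 ~/.ssh/authorized_keys
ssh localhost    # should log in without asking for a password
```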
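Appending the key with `>>` duplicates it every time the step is rerun. The sketch below makes the authorization step idempotent; it assumes bash or another POSIX shell with GNU coreutils, and `authorize_key` is a hypothetical helper, not part of ssh or Hadoop.

```shell
# Hypothetical helper (not part of OpenSSH or Hadoop).
# authorize_key PUBKEY AUTH_FILE
# Appends the key in PUBKEY to AUTH_FILE only if that exact line is not
# already present, then restricts permissions as the Hadoop setup guide does.
authorize_key() {
  local pub="$1" auth="$2" dir
  dir="$(dirname "$auth")"
  mkdir -p "$dir"
  chmod 700 "$dir"
  touch "$auth"
  # -x: whole-line match, -F: treat the key as a fixed string, not a regex
  if ! grep -qxF -- "$(cat "$pub")" "$auth"; then
    cat "$pub" >> "$auth"
  fi
  chmod 600 "$auth"
}
```

Typical use: create the key only if it is missing (`[ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa`), run `authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`, then check that `ssh -o BatchMode=yes localhost true` succeeds; BatchMode makes ssh fail instead of prompting for a password.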

3. Install Hadoop

Extract the tarball into /usr/local, rename the directory, and create the directories for temporary files and HDFS data:

```shell
root@linuxidc.com:/usr/local# tar -xzvf /home/hett/Downloads/hadoop-2.8.3.tar.gz
root@linuxidc.com:/usr/local# mv hadoop-2.8.3 hadoop
root@linuxidc.com:/usr/local# cd hadoop/
root@linuxidc.com:/usr/local/hadoop# mkdir tmp
root@linuxidc.com:/usr/local/hadoop# mkdir hdfs
root@linuxidc.com:/usr/local/hadoop# mkdir hdfs/data
root@linuxidc.com:/usr/local/hadoop# mkdir hdfs/name
```
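The extract, rename, and mkdir steps can be collected into one repeatable function. This is a sketch under two assumptions: the archive has a single top-level directory such as `hadoop-2.8.3/` (as Apache release tarballs do), and `install_hadoop` is a hypothetical name chosen here.

```shell
# Hypothetical helper (not part of Hadoop).
# install_hadoop TARBALL PREFIX
# Unpacks TARBALL into PREFIX, renames its top-level directory to
# PREFIX/hadoop, and creates tmp, hdfs/name and hdfs/data inside it.
install_hadoop() {
  local tarball="$1" prefix="$2" top
  if [ -e "$prefix/hadoop" ]; then
    echo "$prefix/hadoop already exists; refusing to overwrite" >&2
    return 1
  fi
  # Top-level directory name inside the archive, e.g. hadoop-2.8.3
  top="$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)"
  [ -n "$top" ] || { echo "cannot read $tarball" >&2; return 1; }
  tar -xzf "$tarball" -C "$prefix" || return 1
  mv "$prefix/$top" "$prefix/hadoop"
  # -p creates parent directories and tolerates existing ones
  mkdir -p "$prefix/hadoop/tmp" "$prefix/hadoop/hdfs/name" "$prefix/hadoop/hdfs/data"
}
```

For this guide the call would be `install_hadoop /home/hett/Downloads/hadoop-2.8.3.tar.gz /usr/local`, run as a user allowed to write to /usr/local.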

Then add the environment variables to /etc/profile:

```shell
root@linuxidc.com:/usr/local/hadoop# nano /etc/profile
```

Add the following lines:

```shell
export HADOOP_HOME=/usr/local/hadoop
export JAVA_HOME=/usr/local/jdk1.8.0_151
export JRE_HOME=${JAVA_HOME}/jre
export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib
export PATH=${JAVA_HOME}/bin:$PATH:$HADOOP_HOME/bin
```

Reload the profile in the current shell:

```shell
root@linuxidc.com:/usr/local/hadoop# source /etc/profile
```
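After `source /etc/profile`, a quick check catches a mistyped JDK or Hadoop path before it surfaces as a daemon start failure. A minimal sketch; `check_env` is a hypothetical helper that only tests that `$JAVA_HOME/bin/java` and `$HADOOP_HOME/bin/hadoop` exist and are executable.

```shell
# Hypothetical helper (not part of Hadoop).
# check_env: verifies JAVA_HOME and HADOOP_HOME as exported in /etc/profile.
check_env() {
  if [ -z "${JAVA_HOME:-}" ] || [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "JAVA_HOME is unset or has no executable bin/java: '${JAVA_HOME:-}'" >&2
    return 1
  fi
  if [ -z "${HADOOP_HOME:-}" ] || [ ! -x "$HADOOP_HOME/bin/hadoop" ]; then
    echo "HADOOP_HOME is unset or has no executable bin/hadoop: '${HADOOP_HOME:-}'" >&2
    return 1
  fi
  echo "ok"
}
```

If it prints `ok`, `java -version` and `hadoop version` should also run from any directory.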

```shell
root@linuxidc.com:/usr/local/hadoop# cd etc/hadoop/
root@linuxidc.com:/usr/local/hadoop/etc/hadoop# ls
capacity-scheduler.xml      httpfs-env.sh            mapred-env.sh
configuration.xsl           httpfs-log4j.properties  mapred-queues.xml.template
container-executor.cfg      httpfs-signature.secret  mapred-site.xml.template
core-site.xml               httpfs-site.xml          slaves
hadoop-env.cmd              kms-acls.xml             ssl-client.xml.example
hadoop-env.sh               kms-env.sh               ssl-server.xml.example
hadoop-metrics2.properties  kms-log4j.properties     yarn-env.cmd
hadoop-metrics.properties   kms-site.xml             yarn-env.sh
hadoop-policy.xml           log4j.properties         yarn-site.xml
hdfs-site.xml               mapred-env.cmd
root@linuxidc.com:/usr/local/hadoop/etc/hadoop#
```

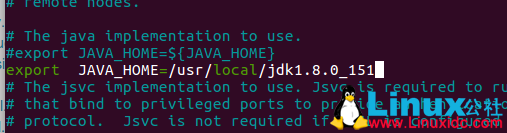

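1) Configure hadoop-env.sh

```shell
root@linuxidc.com:/usr/local/hadoop/etc/hadoop# nano hadoop-env.sh
```

Set JAVA_HOME explicitly:

```shell
export JAVA_HOME=/usr/local/jdk1.8.0_151
```

2) Configure yarn-env.sh the same way:

```shell
export JAVA_HOME=/usr/local/jdk1.8.0_151
```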
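The explicit setting matters because start-dfs.sh launches the daemons over ssh, and such non-interactive shells typically do not read /etc/profile. The two `nano` edits can also be scripted; this sketch assumes GNU sed (for `sed -i -E`) and that the files contain an `export JAVA_HOME=` line, possibly commented out with `#`. `set_java_home` is a hypothetical helper.

```shell
# Hypothetical helper (not part of Hadoop).
# set_java_home FILE JDK_PATH
# Rewrites the (possibly commented) "export JAVA_HOME=..." line in a Hadoop
# env script such as hadoop-env.sh or yarn-env.sh; appends one if absent.
set_java_home() {
  local file="$1" jdk="$2"
  if grep -qE '^[[:space:]]*#?[[:space:]]*export JAVA_HOME=' "$file"; then
    # Replace the whole matching line, removing a leading '#' if present
    sed -i -E "s|^[[:space:]]*#?[[:space:]]*export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$file"
  else
    echo "export JAVA_HOME=$jdk" >> "$file"
  fi
}
```

Usage from etc/hadoop: `set_java_home hadoop-env.sh /usr/local/jdk1.8.0_151` and `set_java_home yarn-env.sh /usr/local/jdk1.8.0_151`.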

3) Configure core-site.xml

Add the following configuration. hadoop.tmp.dir must point at the tmp directory created under /usr/local/hadoop; fs.default.name, used in older guides, is the deprecated name of fs.defaultFS.

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
    <description>HDFS URI: filesystem://NameNode host:port</description>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
    <description>Local Hadoop temporary directory on the NameNode</description>
  </property>
</configuration>
```
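Instead of editing the XML by hand, a small generator can write a `*-site.xml` from name=value pairs. This is a sketch, not a Hadoop tool: `write_site_xml` is hypothetical, it overwrites the target file, omits `<description>` elements, and writes values verbatim, so they must not contain XML special characters such as `<` or `&`.

```shell
# Hypothetical helper (not part of Hadoop).
# write_site_xml FILE NAME=VALUE...
# Writes a Hadoop configuration file with one <property> per argument.
write_site_xml() {
  local file="$1" pair
  shift
  {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    for pair in "$@"; do
      # Split on the first '=' only, so a value may itself contain '='
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    echo '</configuration>'
  } > "$file"
}
```

For this step: `write_site_xml core-site.xml fs.defaultFS=hdfs://localhost:9000 hadoop.tmp.dir=/usr/local/hadoop/tmp`.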

4) Configure hdfs-site.xml

Add the following configuration. The directories are the hdfs/name and hdfs/data directories created under /usr/local/hadoop; dfs.name.dir and dfs.data.dir are the deprecated names of the first two properties.

```xml
<configuration>
  <!-- hdfs-site.xml -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/usr/local/hadoop/hdfs/name</value>
    <description>Where the NameNode stores the HDFS namespace metadata</description>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/usr/local/hadoop/hdfs/data</value>
    <description>Physical storage location of data blocks on the DataNode</description>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
    <description>Number of replicas (default 3); must not exceed the number of DataNodes</description>
  </property>
</configuration>
```
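The replication rule in the description can be checked mechanically. In Hadoop 2.x the `etc/hadoop/slaves` file lists the DataNode hosts (it contains `localhost` on a single-node setup). The sketch below compares the two; `get_prop` and `check_replication` are hypothetical helpers, and the grep-based lookup assumes `<value>` directly follows `<name>`, as in the file above. It is not a general XML parser.

```shell
# Hypothetical helpers (not part of Hadoop).
# get_prop FILE NAME: prints the <value> of property NAME in a *-site.xml
get_prop() {
  tr -d '\n' < "$1" |
    grep -o "<name>$2</name>[[:space:]]*<value>[^<]*</value>" |
    sed 's|.*<value>\(.*\)</value>|\1|'
}

# check_replication HDFS_SITE SLAVES_FILE
check_replication() {
  local rep nodes
  rep="$(get_prop "$1" dfs.replication)"
  rep="${rep:-3}"   # Hadoop's default when the property is absent
  # Count lines that are neither blank nor comments
  nodes="$(grep -cvE '^[[:space:]]*(#|$)' "$2")"
  if [ "$rep" -gt "$nodes" ]; then
    echo "dfs.replication=$rep exceeds $nodes DataNode(s)" >&2
    return 1
  fi
  echo "dfs.replication=$rep, DataNodes=$nodes: ok"
}
```

Run it from /usr/local/hadoop as `check_replication etc/hadoop/hdfs-site.xml etc/hadoop/slaves`.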

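5) Configure mapred-site.xml

Hadoop 2.8.3 ships only mapred-site.xml.template (see the ls listing above), so create the file first with `cp mapred-site.xml.template mapred-site.xml`, then add the following configuration:

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```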
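Because only the template exists, the copy step is easy to forget. A minimal sketch that creates mapred-site.xml from the template without overwriting an existing file, then reports whether MapReduce is set to run on YARN; `ensure_mapred_site` is a hypothetical helper and its grep check assumes `<value>` directly follows `<name>`.

```shell
# Hypothetical helper (not part of Hadoop).
# ensure_mapred_site CONF_DIR
ensure_mapred_site() {
  local conf="$1"
  # Copy the template only when mapred-site.xml does not exist yet
  if [ ! -f "$conf/mapred-site.xml" ]; then
    cp "$conf/mapred-site.xml.template" "$conf/mapred-site.xml" || return 1
  fi
  if tr -d '\n' < "$conf/mapred-site.xml" |
       grep -q '<name>mapreduce.framework.name</name>[[:space:]]*<value>yarn</value>'; then
    echo "mapreduce.framework.name=yarn"
  else
    echo "mapreduce.framework.name is not set to yarn" >&2
    return 1
  fi
}
```

Running `ensure_mapred_site /usr/local/hadoop/etc/hadoop` before editing creates the file; running it again after the edit should print `mapreduce.framework.name=yarn`.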

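6) Configure yarn-site.xml

Add the following configuration:

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>192.168.241.128:8099</value>
  </property>
</configuration>
```

192.168.241.128 is the IP address of the author's machine; replace it with your own. Port 8099 is a custom choice; without this property the ResourceManager web UI listens on port 8088.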

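4. Starting Hadoop

Run the following commands from /usr/local/hadoop.

1) Format the NameNode (once, before the first start)

```shell
$ bin/hdfs namenode -format
```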
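Formatting again later gives HDFS a new clusterID, after which DataNodes holding data from the old format refuse to start ("Incompatible clusterIDs"). A formatted name directory contains `current/VERSION`, so a guard can skip the format when that file exists. A sketch; `format_once` is a hypothetical helper.

```shell
# Hypothetical helper (not part of Hadoop).
# format_once HADOOP_HOME NAME_DIR
# Runs "hdfs namenode -format" only if NAME_DIR has not been formatted yet.
format_once() {
  local home="$1" namedir="$2"
  if [ -f "$namedir/current/VERSION" ]; then
    echo "already formatted: $namedir"
    return 0
  fi
  "$home/bin/hdfs" namenode -format
}
```

For this guide: `format_once /usr/local/hadoop /usr/local/hadoop/hdfs/name`.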

2) Start the NameNode and DataNode daemons

```shell
$ sbin/start-dfs.sh
```

3) Start the ResourceManager and NodeManager daemons

```shell
$ sbin/start-yarn.sh
```

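If the start scripts still ask for a password, generate an RSA key and authorize it:

```shell
$ cd ~/.ssh/                          # if this directory does not exist, run ssh localhost once first
$ ssh-keygen -t rsa                   # press Enter at every prompt
$ cat id_rsa.pub >> authorized_keys   # authorize the key
```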

Starting everything at once with start-all.sh still works, but prints a deprecation notice:

```shell
root@linuxidc.com:~# cd /usr/local/hadoop/
root@linuxidc.com:/usr/local/hadoop# sbin/start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
Starting namenodes on [localhost]
localhost: starting namenode, logging to /usr/local/hadoop/logs/hadoop-root-namenode-linuxidc.com.out
localhost: starting datanode, logging to /usr/local/hadoop/logs/hadoop-root-datanode-linuxidc.com.out
……..
```

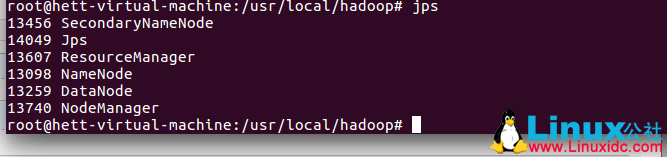
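Following the deprecation notice, start-all.sh can be replaced by the two recommended scripts in order. The sketch starts HDFS first and skips YARN if HDFS fails to start; `start_hadoop` is a hypothetical wrapper, and it relies only on the exit status of the two scripts.

```shell
# Hypothetical helper (not part of Hadoop).
# start_hadoop HADOOP_HOME
# Starts HDFS, then YARN; does not start YARN if start-dfs.sh fails.
start_hadoop() {
  local home="$1"
  "$home/sbin/start-dfs.sh" || { echo "start-dfs.sh failed" >&2; return 1; }
  "$home/sbin/start-yarn.sh"
}
```

Usage: `start_hadoop /usr/local/hadoop`. The matching shutdown order is `sbin/stop-yarn.sh` followed by `sbin/stop-dfs.sh`.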

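5. Verifying the startup

1) Run jps. If the following processes are listed, Hadoop has started correctly:

```
# jps
6097 NodeManager
11044 Jps
7497 -- process information unavailable
8256 Worker
5999 ResourceManager
5122 SecondaryNameNode
8106 Master
4836 NameNode
4957 DataNode
```

The Hadoop daemons here are NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager; Jps is the jps tool itself. Master and Worker are not Hadoop processes (most likely Spark standalone daemons on the author's machine) and need not appear on yours.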
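Checking the jps output by eye is error-prone because `NameNode` is also a substring of `SecondaryNameNode`. A sketch that reads jps output on stdin and compares the second column exactly against the five daemons listed above, ignoring any other JVMs; `check_daemons` is a hypothetical helper.

```shell
# Hypothetical helper (not part of Hadoop).
# check_daemons: reads `jps` output on stdin and reports missing Hadoop daemons.
check_daemons() {
  local out missing="" d
  out="$(cat)"
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Exact match on the class-name column, so NameNode != SecondaryNameNode
    if ! printf '%s\n' "$out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
      missing="$missing $d"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing" >&2
    return 1
  fi
  echo "all Hadoop daemons running"
}
```

Usage: `jps | check_daemons`. A non-zero exit status names the daemons that did not start; their logs are under /usr/local/hadoop/logs.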


Permanent link to this article: http://www.linuxidc.com/Linux/2017-12/149854.htm