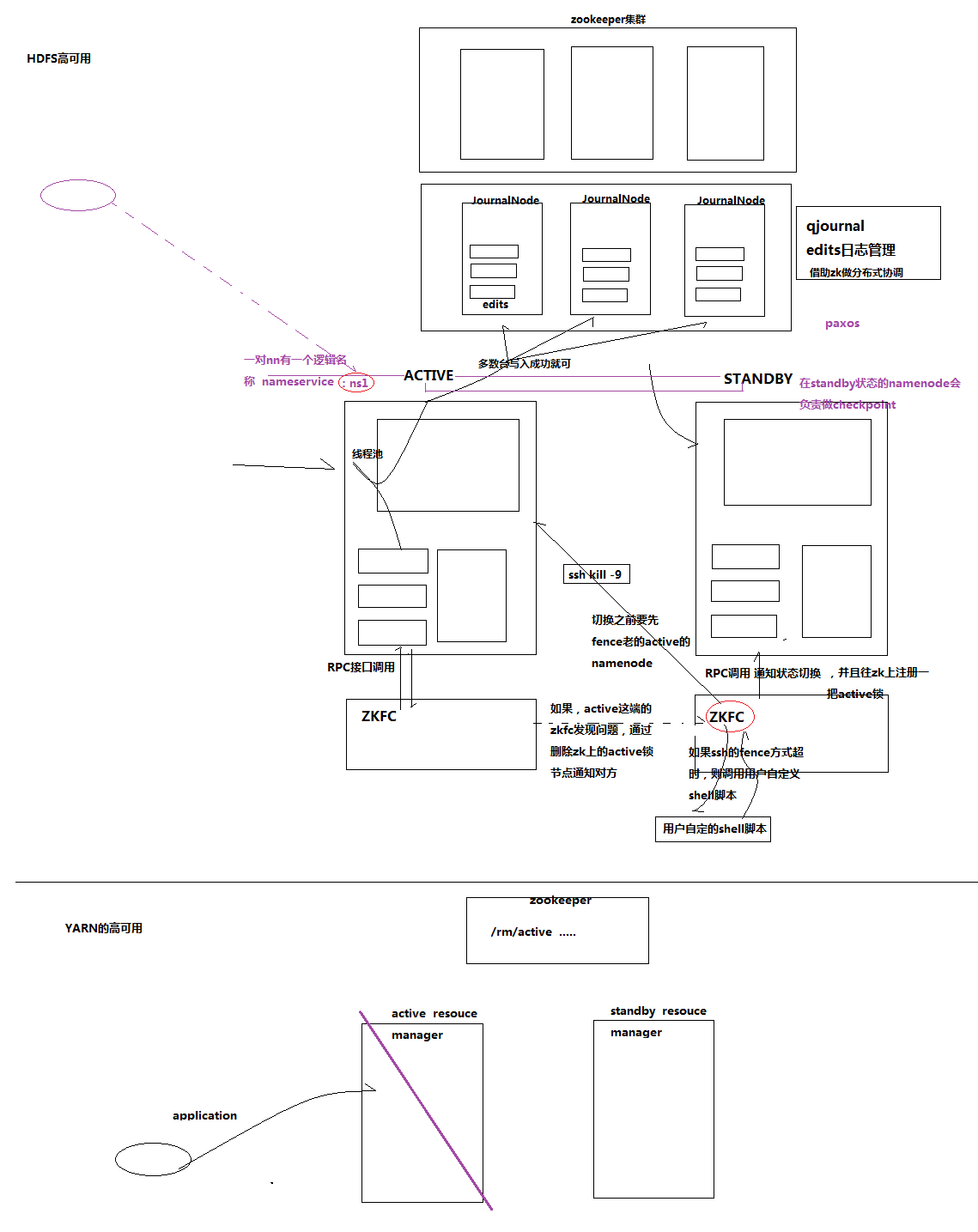

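1. Hadoop's HA mechanism

Preface: HA was formally introduced in Hadoop 2.0; earlier versions had no HA mechanism.

1.1. How HA works

(1) How a Hadoop HA cluster operates

HA stands for high availability (uninterrupted 7×24 service).

The key to high availability is eliminating single points of failure.

Strictly speaking, Hadoop HA is made up of per-component HA mechanisms: HDFS HA and YARN HA.

(2) HDFS HA in detail

The single point of failure is eliminated by running two NameNodes.

Key points for coordinating the two NameNodes:

A. Metadata management has to change:

Each NameNode keeps its own copy of the metadata in memory.

There is only one edits log, and only the NameNode in the Active state may write to it.

Both NameNodes can read the edits.

The shared edits are kept in shared storage (the two mainstream implementations are qjournal and NFS).

B. A state-management module is required:

This is implemented as zkfailover (the ZKFailoverController, zkfc), a daemon that runs on every NameNode host.

Each zkfailover monitors the NameNode on its own host and records its state in ZooKeeper.

When a state transition is needed, zkfailover performs it.

The transition must prevent split-brain.

1.2. HDFS HA diagram

2. Host planning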

| Hostname | Public IP | Private IP | OS | Notes | Installed software | Running processes |
| --- | --- | --- | --- | --- | --- | --- |
| mini01 | 10.0.0.111 | 172.16.1.111 | CentOS 7.4 | ssh port: 22 | jdk, hadoop | NameNode, DFSZKFailoverController (zkfc) |
| mini02 | 10.0.0.112 | 172.16.1.112 | CentOS 7.4 | ssh port: 22 | jdk, hadoop | NameNode, DFSZKFailoverController (zkfc) |
| mini03 | 10.0.0.113 | 172.16.1.113 | CentOS 7.4 | ssh port: 22 | jdk, hadoop, zookeeper | ResourceManager, QuorumPeerMain |
| mini04 | 10.0.0.114 | 172.16.1.114 | CentOS 7.4 | ssh port: 22 | jdk, hadoop, zookeeper | ResourceManager, QuorumPeerMain |
| mini05 | 10.0.0.115 | 172.16.1.115 | CentOS 7.4 | ssh port: 22 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| mini06 | 10.0.0.116 | 172.16.1.116 | CentOS 7.4 | ssh port: 22 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| mini07 | 10.0.0.117 | 172.16.1.117 | CentOS 7.4 | ssh port: 22 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |

Note: HA mode does not need a SecondaryNameNode, because the NameNode in the STANDBY state performs the checkpoints.

Add hosts entries on Linux so that every machine can ping every other machine

[root@mini01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.111 mini01

10.0.0.112 mini02

10.0.0.113 mini03

10.0.0.114 mini04

10.0.0.115 mini05

10.0.0.116 mini06

10.0.0.117 mini07
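A minimal sketch for generating and checking these entries, assuming the seven hosts and public IPs from the host plan. The function names `gen_hosts` and `check_hosts` are made up for illustration; `check_hosts` needs the `ping` utility and network access to the hosts.

```bash
#!/usr/bin/env bash
# Hypothetical helpers, not part of Hadoop or ZooKeeper.

# Print the /etc/hosts entries for mini01..mini07 (addresses from the host plan).
gen_hosts() {
  local i
  for i in 1 2 3 4 5 6 7; do
    printf '10.0.0.11%d mini0%d\n' "$i" "$i"
  done
}

# Ping every planned host once and report the ones that do not answer.
check_hosts() {
  local ip host
  gen_hosts | while read -r ip host; do
    ping -c 1 -W 1 "$host" >/dev/null 2>&1 || echo "unreachable: $host"
  done
}
```

Running `gen_hosts | sudo tee -a /etc/hosts` appends the entries, and `check_hosts` run afterwards on each machine prints nothing when all seven hosts respond.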

Edit the Windows hosts file

# File location: C:\Windows\System32\drivers\etc; append the following to hosts

…………………………………………

10.0.0.111 mini01

10.0.0.112 mini02

10.0.0.113 mini03

10.0.0.114 mini04

10.0.0.115 mini05

10.0.0.116 mini06

10.0.0.117 mini07

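3. Add a user account

# Use a dedicated user instead of working as root directly

# Add the user, set its home directory, and set its password

useradd -d /app yun && echo '123456' | /usr/bin/passwd --stdin yun

# Grant sudo privileges

echo "yun ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers

# Let other regular users enter the directory and view its contents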

chmod 755 /app/

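4. Passwordless SSH login for the yun user

Requirement: per the plan, set up passwordless (key-based) login from mini01 to mini01, mini02, mini03, mini04, mini05, mini06, and mini07

Set up passwordless login from mini02 to mini01, mini02, mini03, mini04, mini05, mini06, and mini07

Set up passwordless login from mini03 to mini01, mini02, mini03, mini04, mini05, mini06, and mini07

Set up passwordless login from mini04 to mini01, mini02, mini03, mini04, mini05, mini06, and mini07

Set up passwordless login from mini05 to mini01, mini02, mini03, mini04, mini05, mini06, and mini07

Set up passwordless login from mini06 to mini01, mini02, mini03, mini04, mini05, mini06, and mini07

Set up passwordless login from mini07 to mini01, mini02, mini03, mini04, mini05, mini06, and mini07

# Either IPs or hostnames work, but since the hosts are planned to communicate by hostname, use hostnames

# Keys distributed by hostname allow remote login by hostname as well as by IP

The details are omitted here; see https://www.linuxidc.com/Linux/2018-08/153353.htm

5. JDK (Java 8)

The details are omitted here; see https://www.linuxidc.com/Linux/2018-08/153353.htm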

6. ZooKeeper deployment

Per the plan, ZooKeeper is deployed on mini03, mini04, mini05, mini06, and mini07.

6.1. Configuration

[yun@mini03 conf]$ pwd

/app/zookeeper/conf

[yun@mini03 conf]$ vim zoo.cfg

# Limit on the number of connections between a single client and a single server, applied per IP address. The default is 60; 0 means no limit.

maxClientCnxns=1500

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

# dataDir=/tmp/zookeeper

dataDir=/app/bigdata/zookeeper/data

# the port at which the clients will connect

clientPort=2181

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

# Port for leader/follower communication and port for leader election

server.3=mini03:2888:3888

server.4=mini04:2888:3888

server.5=mini05:2888:3888

server.6=mini06:2888:3888

server.7=mini07:2888:3888

6.2. Add the myid file

[yun@mini03 data]$ pwd

/app/bigdata/zookeeper/data

[yun@mini03 data]$ vim myid # myid is 3 on mini03, 4 on mini04, 5 on mini05, 6 on mini06, and 7 on mini07

3
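Because each myid here equals the number at the end of the hostname, the value can be derived instead of typed by hand. The following is a sketch under that naming assumption; `myid_for_host` is a hypothetical helper, not a ZooKeeper command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: derive the ZooKeeper myid from a hostname such as mini03 -> 3.
# Assumes the hostname ends in the digits used as the server.N id in zoo.cfg.
myid_for_host() {
  local host=$1
  local digits=${host##*[!0-9]}   # keep only the trailing digits, e.g. "03"
  [ -n "$digits" ] || return 1    # fail if the hostname has no trailing number
  echo $((10#$digits))            # force base 10 so "08" is not parsed as octal
}
```

On each ZooKeeper host, `myid_for_host "$(hostname -s)" > /app/bigdata/zookeeper/data/myid` would then write the matching id.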

6.3. Start the ZooKeeper service

# Start the ZooKeeper service on mini03, mini04, mini05, mini06, and mini07 in turn

[yun@mini03 ~]$ cd zookeeper/bin/

[yun@mini03 bin]$ pwd

/app/zookeeper/bin

[yun@mini03 bin]$ ll

total 56

-rwxr-xr-x 1 yun yun 238 Oct 1 2012 README.txt

-rwxr-xr-x 1 yun yun 1909 Oct 1 2012 zkCleanup.sh

-rwxr-xr-x 1 yun yun 1049 Oct 1 2012 zkCli.cmd

-rwxr-xr-x 1 yun yun 1512 Oct 1 2012 zkCli.sh

-rwxr-xr-x 1 yun yun 1333 Oct 1 2012 zkEnv.cmd

-rwxr-xr-x 1 yun yun 2599 Oct 1 2012 zkEnv.sh

-rwxr-xr-x 1 yun yun 1084 Oct 1 2012 zkServer.cmd

-rwxr-xr-x 1 yun yun 5467 Oct 1 2012 zkServer.sh

-rw-rw-r-- 1 yun yun 17522 Jun 28 21:01 zookeeper.out

[yun@mini03 bin]$ ./zkServer.sh start

JMX enabled by default

Using config: /app/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

6.4. Check the running status

# Status on mini03, mini04, mini06, and mini07

[yun@mini03 bin]$ ./zkServer.sh status

JMX enabled by default

Using config: /app/zookeeper/bin/../conf/zoo.cfg

Mode: follower

# Status on mini05

[yun@mini05 bin]$ ./zkServer.sh status

JMX enabled by default

Using config: /app/zookeeper/bin/../conf/zoo.cfg

Mode: leader

PS: 4 followers and 1 leader
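ZooKeeper stays available as long as a majority of the ensemble is running, so the five servers above can lose two and keep working. The arithmetic can be sketched as follows; the function names are hypothetical helpers, not part of ZooKeeper.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for majority-quorum arithmetic.

# Smallest number of servers that forms a majority of an ensemble of size $1.
zk_quorum_size() {
  echo $(( $1 / 2 + 1 ))
}

# Number of servers that may fail while a majority still remains.
zk_tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}
```

For this cluster, `zk_quorum_size 5` gives 3 and `zk_tolerated_failures 5` gives 2. An even-sized ensemble tolerates no more failures than the next smaller odd size, which is why odd sizes are the usual choice.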

7. Hadoop deployment and configuration changes

Note: Hadoop and its configuration are identical on every machine

7.1. Deployment

[yun@mini01 software]$ pwd

/app/software

[yun@mini01 software]$ ll

total 194152

-rw-r--r-- 1 yun yun 198811365 Jun 8 16:36 CentOS-7.4_hadoop-2.7.6.tar.gz

[yun@mini01 software]$ tar xf CentOS-7.4_hadoop-2.7.6.tar.gz

[yun@mini01 software]$ mv hadoop-2.7.6/ /app/

[yun@mini01 software]$ cd

[yun@mini01 ~]$ ln -s hadoop-2.7.6/ hadoop

[yun@mini01 ~]$ ll

total 4

lrwxrwxrwx 1 yun yun 13 Jun 9 16:21 hadoop -> hadoop-2.7.6/

drwxr-xr-x 9 yun yun 149 Jun 8 16:36 hadoop-2.7.6

lrwxrwxrwx 1 yun yun 12 May 26 11:18 jdk -> jdk1.8.0_112

drwxr-xr-x 8 yun yun 255 Sep 23 2016 jdk1.8.0_112

7.2. Environment variables

[root@mini01 profile.d]# pwd

/etc/profile.d

[root@mini01 profile.d]# vim hadoop.sh

export HADOOP_HOME="/app/hadoop"

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

[root@mini01 profile.d]# source /etc/profile # apply the change

7.3. core-site.xml

[yun@mini01 hadoop]$ pwd

/app/hadoop/etc/hadoop

[yun@mini01 hadoop]$ vim core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

……………………

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Set the HDFS nameservice to bi -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://bi/</value>

</property>

<!-- Hadoop temporary directory -->

<property>

<name>hadoop.tmp.dir</name>

<value>/app/hadoop/tmp</value>

</property>

<!-- ZooKeeper addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>mini03:2181,mini04:2181,mini05:2181,mini06:2181,mini07:2181</value>

</property>

</configuration>

7.4. hdfs-site.xml

[yun@mini01 hadoop]$ pwd

/app/hadoop/etc/hadoop

[yun@mini01 hadoop]$ vim hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

……………………

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Set the HDFS nameservice to bi; this must match core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>bi</value>

</property>

<!-- bi has two NameNodes: nn1 and nn2 -->

<property>

<name>dfs.ha.namenodes.bi</name>

<value>nn1,nn2</value>

</property>

<!-- RPC address of nn1 -->

<property>

<name>dfs.namenode.rpc-address.bi.nn1</name>

<value>mini01:9000</value>

</property>

<!-- HTTP address of nn1 -->

<property>

<name>dfs.namenode.http-address.bi.nn1</name>

<value>mini01:50070</value>

</property>

<!-- RPC address of nn2 -->

<property>

<name>dfs.namenode.rpc-address.bi.nn2</name>

<value>mini02:9000</value>

</property>

<!-- HTTP address of nn2 -->

<property>

<name>dfs.namenode.http-address.bi.nn2</name>

<value>mini02:50070</value>

</property>

<!-- Where the NameNode edits metadata is stored on the JournalNodes -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://mini05:8485;mini06:8485;mini07:8485/bi</value>

</property>

<!-- Where each JournalNode stores its data on local disk -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/app/hadoop/journaldata</value>

</property>

<!-- Enable automatic NameNode failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Implementation class used for automatic failover -->

<property>

<name>dfs.client.failover.proxy.provider.bi</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Fencing methods; separate multiple methods with newlines, one method per line -->

<!-- shell(/bin/true) stands for running a script, for example shell(/app/yunwei/hadoop_fence.sh) -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<!-- The sshfence method requires passwordless SSH login -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/app/.ssh/id_rsa</value>

</property>

<!-- Timeout for the sshfence method, in milliseconds -->

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

</configuration>

7.5. mapred-site.xml

[yun@mini01 hadoop]$ pwd

/app/hadoop/etc/hadoop

[yun@mini01 hadoop]$ cp -a mapred-site.xml.template mapred-site.xml

[yun@mini01 hadoop]$ vim mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

……………………

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<!-- Run the MapReduce framework on YARN -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

7.6. yarn-site.xml

[yun@mini01 hadoop]$ pwd

/app/hadoop/etc/hadoop

[yun@mini01 hadoop]$ vim yarn-site.xml

<?xml version="1.0"?>

<!--

……………………

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<!-- Enable ResourceManager HA -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Cluster ID of the RM -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yrc</value>

</property>

<!-- Names (IDs) of the RMs -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- Hostname of each RM -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>mini03</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>mini04</value>

</property>

<!-- ZooKeeper cluster addresses -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>mini03:2181,mini04:2181,mini05:2181,mini06:2181,mini07:2181</value>

</property>

<!-- How reducers fetch data -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

7.7. Edit slaves

The slaves file lists the worker nodes. Because HDFS is started from mini01 and YARN from mini03, the slaves file on mini01 determines where the DataNodes run, and the slaves file on mini03 determines where the NodeManagers run.

[yun@mini01 hadoop]$ pwd

/app/hadoop/etc/hadoop

[yun@mini01 hadoop]$ vim slaves

mini05

mini06

mini07

PS: After changing the configuration, copy these files to the other Hadoop machines.
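One way to do the copy is a loop over the planned hosts. The sketch below only prints the `scp` commands so they can be reviewed first; it assumes the configuration lives in /app/hadoop/etc/hadoop on every machine and that passwordless login for `yun` is already in place. The name `sync_conf_cmds` is a made-up helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the scp commands that copy the Hadoop
# config directory from the current host to every other planned host.
CONF_DIR=/app/hadoop/etc/hadoop

sync_conf_cmds() {
  local self=$1 host
  for host in mini01 mini02 mini03 mini04 mini05 mini06 mini07; do
    [ "$host" = "$self" ] && continue   # skip the machine we are copying from
    # Copies the "hadoop" directory into /app/hadoop/etc/ on the target host.
    echo "scp -r $CONF_DIR yun@$host:/app/hadoop/etc/"
  done
}
```

Running `sync_conf_cmds mini01` on mini01 lists six commands; piping that output to `sh` executes them.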

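8. Start the services

Note: on the first startup, follow the steps below strictly!

8.1. Start the ZooKeeper cluster

It was already started above, so nothing more is needed here.

8.2. Start the JournalNodes

# Per the plan, start them on mini05, mini06, and mini07

# The JournalNodes must be started before the first format; this is not needed on later startups

[yun@mini05 ~]$ hadoop-daemon.sh start journalnode # the environment variables are already set, so there is no need to change into the script's directory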

starting journalnode, logging to /app/hadoop-2.7.6/logs/hadoop-yun-journalnode-mini05.out

[yun@mini05 ~]$ jps

1281 QuorumPeerMain

1817 Jps

1759 JournalNode

8.3. Format HDFS

# Run the command on mini01

[yun@mini01 ~]$ hdfs namenode -format

18/06/30 18:29:12 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = mini01/10.0.0.111

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.6

STARTUP_MSG: classpath = ………………

STARTUP_MSG: build = Unknown -r Unknown; compiled by 'root' on 2018-06-08T08:30Z

STARTUP_MSG: java = 1.8.0_112

************************************************************/

18/06/30 18:29:12 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

18/06/30 18:29:12 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-2385f26e-72e6-4935-aa09-47848b5ba4be

18/06/30 18:29:13 INFO namenode.FSNamesystem: No KeyProvider found.

18/06/30 18:29:13 INFO namenode.FSNamesystem: fsLock is fair: true

18/06/30 18:29:13 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

18/06/30 18:29:13 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

18/06/30 18:29:13 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

18/06/30 18:29:13 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

18/06/30 18:29:13 INFO blockmanagement.BlockManager: The block deletion will start around 2018 Jun 30 18:29:13

18/06/30 18:29:13 INFO util.GSet: Computing capacity for map BlocksMap

18/06/30 18:29:13 INFO util.GSet: VM type = 64-bit

18/06/30 18:29:13 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB

18/06/30 18:29:13 INFO util.GSet: capacity = 2^21 = 2097152 entries

18/06/30 18:29:13 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

18/06/30 18:29:13 INFO blockmanagement.BlockManager: defaultReplication = 3

18/06/30 18:29:13 INFO blockmanagement.BlockManager: maxReplication = 512

18/06/30 18:29:13 INFO blockmanagement.BlockManager: minReplication = 1

18/06/30 18:29:13 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

18/06/30 18:29:13 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

18/06/30 18:29:13 INFO blockmanagement.BlockManager: encryptDataTransfer = false

18/06/30 18:29:13 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

18/06/30 18:29:13 INFO namenode.FSNamesystem: fsOwner = yun (auth:SIMPLE)

18/06/30 18:29:13 INFO namenode.FSNamesystem: supergroup = supergroup

18/06/30 18:29:13 INFO namenode.FSNamesystem: isPermissionEnabled = true

18/06/30 18:29:13 INFO namenode.FSNamesystem: Determined nameservice ID: bi

18/06/30 18:29:13 INFO namenode.FSNamesystem: HA Enabled: true

18/06/30 18:29:13 INFO namenode.FSNamesystem: Append Enabled: true

18/06/30 18:29:13 INFO util.GSet: Computing capacity for map INodeMap

18/06/30 18:29:13 INFO util.GSet: VM type = 64-bit

18/06/30 18:29:13 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB

18/06/30 18:29:13 INFO util.GSet: capacity = 2^20 = 1048576 entries

18/06/30 18:29:13 INFO namenode.FSDirectory: ACLs enabled? false

18/06/30 18:29:13 INFO namenode.FSDirectory: XAttrs enabled? true

18/06/30 18:29:13 INFO namenode.FSDirectory: Maximum size of an xattr: 16384

18/06/30 18:29:13 INFO namenode.NameNode: Caching file names occuring more than 10 times

18/06/30 18:29:13 INFO util.GSet: Computing capacity for map cachedBlocks

18/06/30 18:29:13 INFO util.GSet: VM type = 64-bit

18/06/30 18:29:13 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB

18/06/30 18:29:13 INFO util.GSet: capacity = 2^18 = 262144 entries

18/06/30 18:29:13 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

18/06/30 18:29:13 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

18/06/30 18:29:13 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

18/06/30 18:29:13 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

18/06/30 18:29:13 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

18/06/30 18:29:13 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

18/06/30 18:29:13 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

18/06/30 18:29:13 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

18/06/30 18:29:13 INFO util.GSet: Computing capacity for map NameNodeRetryCache

18/06/30 18:29:13 INFO util.GSet: VM type = 64-bit

18/06/30 18:29:13 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB

18/06/30 18:29:13 INFO util.GSet: capacity = 2^15 = 32768 entries

18/06/30 18:29:14 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1178102935-10.0.0.111-1530354554626

18/06/30 18:29:14 INFO common.Storage: Storage directory /app/hadoop/tmp/dfs/name has been successfully formatted.

18/06/30 18:29:14 INFO namenode.FSImageFormatProtobuf: Saving image file /app/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

18/06/30 18:29:14 INFO namenode.FSImageFormatProtobuf: Image file /app/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 320 bytes saved in 0 seconds.

18/06/30 18:29:15 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/06/30 18:29:15 INFO util.ExitUtil: Exiting with status 0

18/06/30 18:29:15 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at mini01/10.0.0.111

************************************************************/

Copy to mini02

# Formatting creates files under the directory set by hadoop.tmp.dir in core-site.xml, which here is /app/hadoop/tmp. Copy /app/hadoop/tmp into /app/hadoop/ on mini02.

# Method 1:

[yun@mini01 hadoop]$ pwd

/app/hadoop

[yun@mini01 hadoop]$ scp -r tmp/ yun@mini02:/app/hadoop

VERSION 100% 202 189.4KB/s 00:00

seen_txid 100% 2 1.0KB/s 00:00

fsimage_0000000000000000000.md5 100% 62 39.7KB/s 00:00

fsimage_0000000000000000000 100% 320 156.1KB/s 00:00

##########################

# Method 2 (recommended): run hdfs namenode -bootstrapStandby on mini02 # this requires the NameNode on mini01 to be running

8.4. Format ZKFC

# Running it once on mini01 is enough

[yun@mini01 ~]$ hdfs zkfc -formatZK

18/06/30 18:54:30 INFO tools.DFSZKFailoverController: Failover controller configured for NameNode NameNode at mini01/10.0.0.111:9000

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:host.name=mini01

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:java.version=1.8.0_112

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:java.home=/app/jdk1.8.0_112/jre

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:java.class.path=……………………

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/app/hadoop-2.7.6/lib/native

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:os.version=3.10.0-693.el7.x86_64

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:user.name=yun

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:user.home=/app

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Client environment:user.dir=/app/hadoop-2.7.6

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=mini03:2181,mini04:2181,mini05:2181,mini06:2181,mini07:2181 sessionTimeout=5000 watcher=org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef@7f3b84b8

18/06/30 18:54:30 INFO zookeeper.ClientCnxn: Opening socket connection to server mini04/10.0.0.114:2181. Will not attempt to authenticate using SASL (unknown error)

18/06/30 18:54:30 INFO zookeeper.ClientCnxn: Socket connection established to mini04/10.0.0.114:2181, initiating session

18/06/30 18:54:30 INFO zookeeper.ClientCnxn: Session establishment complete on server mini04/10.0.0.114:2181, sessionid = 0x4644fff9cb80000, negotiated timeout = 5000

18/06/30 18:54:30 INFO ha.ActiveStandbyElector: Session connected.

18/06/30 18:54:30 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/bi in ZK.

18/06/30 18:54:30 INFO zookeeper.ZooKeeper: Session: 0x4644fff9cb80000 closed

18/06/30 18:54:30 INFO zookeeper.ClientCnxn: EventThread shut down

8.5. Start HDFS

# Run on mini01

[yun@mini01 ~]$ start-dfs.sh

Starting namenodes on [mini01 mini02]

mini01: starting namenode, logging to /app/hadoop-2.7.6/logs/hadoop-yun-namenode-mini01.out

mini02: starting namenode, logging to /app/hadoop-2.7.6/logs/hadoop-yun-namenode-mini02.out

mini07: starting datanode, logging to /app/hadoop-2.7.6/logs/hadoop-yun-datanode-mini07.out

mini06: starting datanode, logging to /app/hadoop-2.7.6/logs/hadoop-yun-datanode-mini06.out

mini05: starting datanode, logging to /app/hadoop-2.7.6/logs/hadoop-yun-datanode-mini05.out

Starting journal nodes [mini05 mini06 mini07]

mini07: journalnode running as process 1691. Stop it first.

mini06: journalnode running as process 1665. Stop it first.

mini05: journalnode running as process 1759. Stop it first.

Starting ZK Failover Controllers on NN hosts [mini01 mini02]

mini01: starting zkfc, logging to /app/hadoop-2.7.6/logs/hadoop-yun-zkfc-mini01.out

mini02: starting zkfc, logging to /app/hadoop-2.7.6/logs/hadoop-yun-zkfc-mini02.out

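8.6. Start YARN

##### Note #####: run start-yarn.sh on mini03. The NameNode and ResourceManager are placed on separate machines for performance reasons,

# because both consume a lot of resources; since they are separated, each must be started on its own machine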

[yun@mini03 ~]$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /app/hadoop-2.7.6/logs/yarn-yun-resourcemanager-mini03.out

mini06: starting nodemanager, logging to /app/hadoop-2.7.6/logs/yarn-yun-nodemanager-mini06.out

mini07: starting nodemanager, logging to /app/hadoop-2.7.6/logs/yarn-yun-nodemanager-mini07.out

mini05: starting nodemanager, logging to /app/hadoop-2.7.6/logs/yarn-yun-nodemanager-mini05.out

################################

# Start the resourcemanager on mini04

[yun@mini04 ~]$ yarn-daemon.sh start resourcemanager # start-yarn.sh also works

starting resourcemanager, logging to /app/hadoop-2.7.6/logs/yarn-yun-resourcemanager-mini04.out

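8.7. Startup notes

# On the first startup, follow the steps above strictly (the first startup involves formatting)

# On the second and later startups, the order is: start ZooKeeper, then HDFS, then YARN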
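After startup, `jps` on each host should list the daemons that the host plan assigns to it. The following sketch automates that comparison. The function names are hypothetical, and QuorumPeerMain is included for mini03 and mini04 on the assumption that ZooKeeper runs there, as section 6 sets up.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking daemons against the host plan.

# Expected daemons per host (assumption: taken from the host plan and section 6).
expected_procs() {
  case $1 in
    mini01|mini02)        echo "NameNode DFSZKFailoverController" ;;
    mini03|mini04)        echo "ResourceManager QuorumPeerMain" ;;
    mini05|mini06|mini07) echo "DataNode NodeManager JournalNode QuorumPeerMain" ;;
    *) return 1 ;;
  esac
}

# Read `jps` output on stdin and print each expected daemon that is absent.
missing_procs() {
  local running proc
  running=$(awk '{print $2}')           # second column of jps is the class name
  for proc in $(expected_procs "$1"); do
    echo "$running" | grep -qx -- "$proc" || echo "$proc"
  done
}
```

On a cluster host this would be used as `jps | missing_procs "$(hostname -s)"`; empty output means every planned daemon is running.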

9. Browser access

9.1. HDFS access

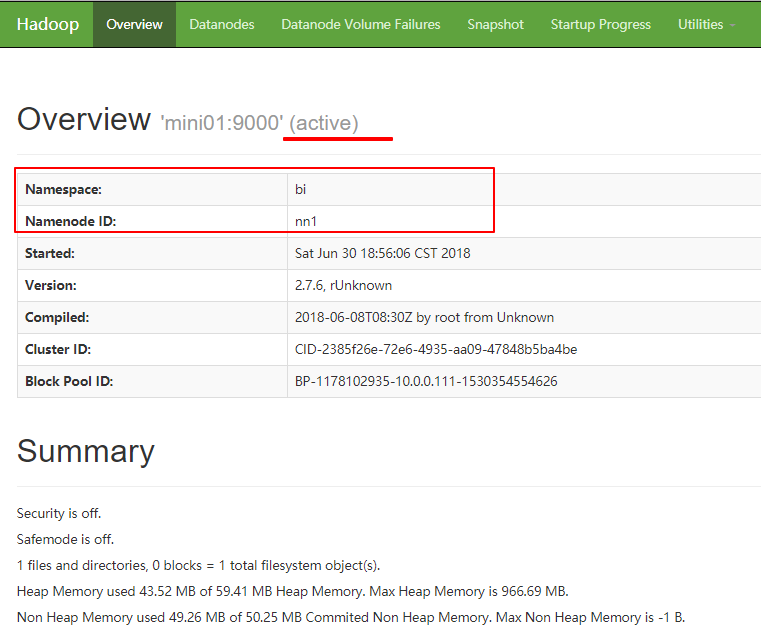

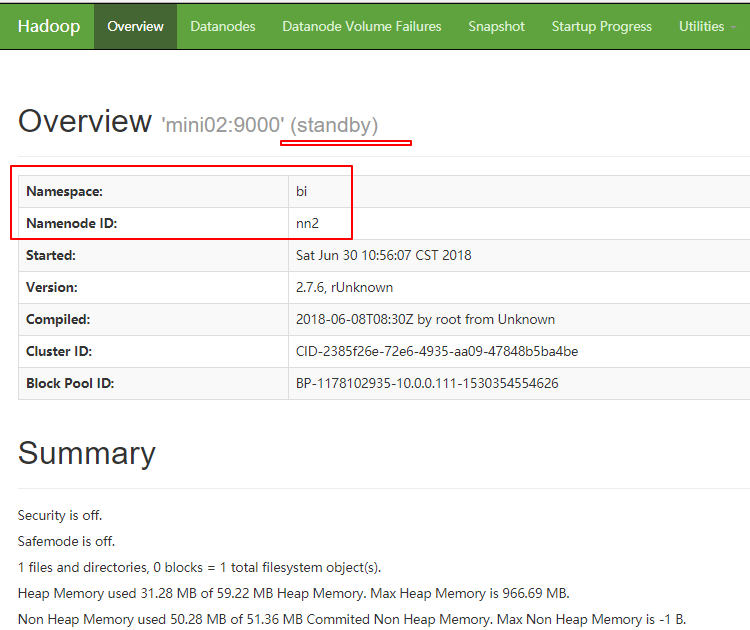

9.1.1. Access under normal conditions

http://mini01:50070

http://mini02:50070

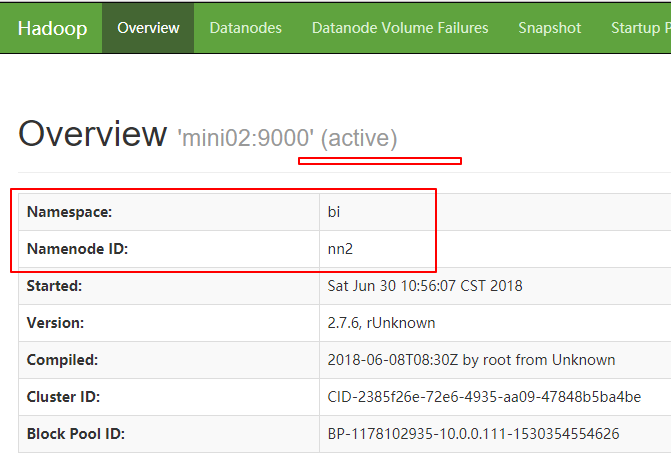

9.1.2. mini01 goes down and Active switches automatically

# On mini01

[yun@mini01 ~]$ jps

3584 DFSZKFailoverController

3283 NameNode

5831 Jps

[yun@mini01 ~]$ kill 3283

[yun@mini01 ~]$ jps

3584 DFSZKFailoverController

5893 Jps

The NameNode is down, so mini01 can no longer be accessed.

http://mini02:50070

As shown, Hadoop has failed over. Even if the NameNode on mini01 comes back up afterwards, it rejoins as standby while mini02 remains active.

9.2. YARN access

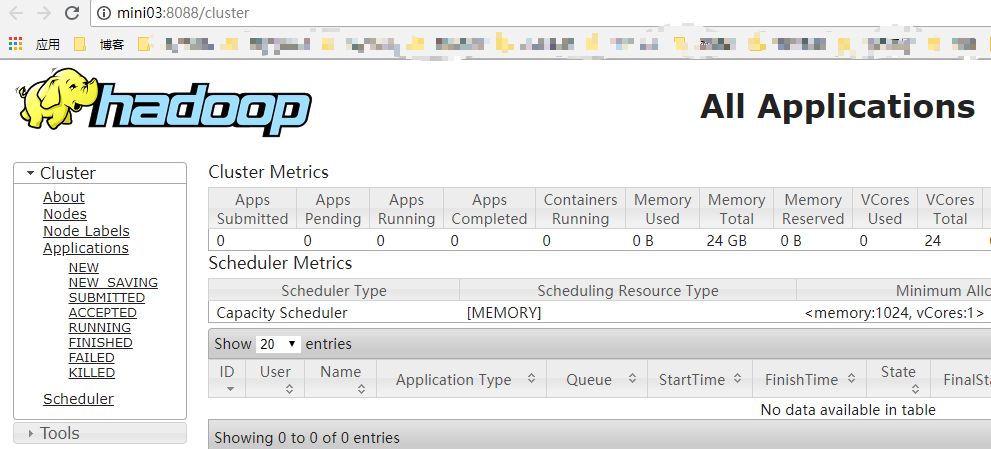

http://mini03:8088

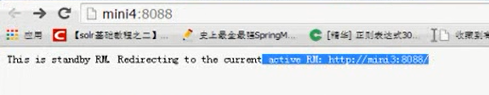

http://mini04:8088

This redirects straight to http://mini03:8088/

# This screenshot was taken from elsewhere, so it does not match exactly

# Access from Linux

[yun@mini01 ~]$ curl mini04:8088

This is standby RM. The redirect url is: http://mini03:8088/

HA setup complete

10. Cluster operations and testing

10.1. haadmin and state-transition management

[yun@mini01 ~]$ hdfs haadmin

Usage: haadmin

[-transitionToActive [--forceactive] <serviceId>]

[-transitionToStandby <serviceId>]

[-failover [--forcefence] [--forceactive] <serviceId> <serviceId>]

[-getServiceState <serviceId>]

[-checkHealth <serviceId>]

[-help <command>]

Generic options supported are

-conf <configuration file> specify an application configuration file

-D <property=value> use value for given property

-fs <local|namenode:port> specify a namenode

-jt <local|resourcemanager:port> specify a ResourceManager

-files <comma separated list of files> specify comma separated files to be copied to the map reduce cluster

-libjars <comma separated list of jars> specify comma separated jar files to include in the classpath.

-archives <comma separated list of archives> specify comma separated archives to be unarchived on the compute machines.

The general command line syntax is

bin/hadoop command [genericOptions] [commandOptions]

As shown, example commands for managing state:

# Check a NameNode's working state

hdfs haadmin -getServiceState nn1

# Switch a standby NameNode to active

hdfs haadmin -transitionToActive nn1

# Switch an active NameNode to standby

hdfs haadmin -transitionToStandby nn2
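A healthy HA pair has exactly one active NameNode. A small check built on the `-getServiceState` output can be sketched like this; `exactly_one_active` is a hypothetical helper that takes the reported states as arguments, so the logic can be tried without a cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if exactly one of the given HA states is "active".
exactly_one_active() {
  local state count=0
  for state in "$@"; do
    [ "$state" = "active" ] && count=$((count + 1))
  done
  [ "$count" -eq 1 ]
}

# Usage on a live cluster (requires the hdfs CLI and the nn1/nn2 IDs configured above):
#   exactly_one_active "$(hdfs haadmin -getServiceState nn1)" \
#                      "$(hdfs haadmin -getServiceState nn2)" || echo "HA state problem"
```

Two active states would point to split-brain, and two standby states mean that no NameNode is serving clients.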

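10.2. Commands for checking cluster status

Some commands for checking cluster status:

hdfs dfsadmin -report # show status information for each HDFS node

hdfs haadmin -getServiceState nn1 # or hdfs haadmin -getServiceState nn2; get the HA state of a NameNode

hadoop-daemon.sh start namenode # start a single NameNode process

hadoop-daemon.sh start zkfc # start a single zkfc process

10.3. Adding and removing DataNodes dynamically

Adding and removing DataNodes dynamically is simple. The steps for adding one are as follows:

a) Prepare a server and set up its environment

b) Deploy the Hadoop package and sync the cluster configuration

c) Connect it to the network and bring it online; the new DataNode joins the cluster automatically

d) If a large batch of DataNodes is added at once, the cluster load should also be rebalanced

10.4. Balancing data blocks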

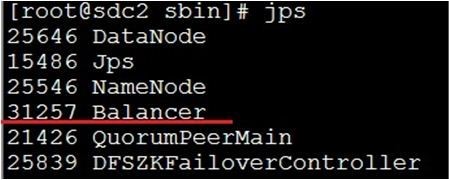

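Command to start the balancer:

start-balancer.sh -threshold 8

After it runs, a Balancer process appears.

The command above sets the threshold to 8%. When the balancer runs, it first computes the mean disk utilization across all DataNodes, then checks whether any DataNode's utilization exceeds that mean by more than the threshold. If so, blocks are moved from that DataNode to DataNodes with lower utilization, which is very useful when new nodes join. The threshold ranges from 1 to 100; if it is not set explicitly, the default is 10.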
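The threshold test described above can be illustrated with a few lines of awk. This is a simplified sketch of the comparison only, not the Balancer's actual implementation. `classify_nodes` is a hypothetical helper, and the utilization figures in the usage note are invented for the example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "host utilization%" pairs on stdin and classify each
# node against the mean utilization plus or minus the threshold given as $1.
classify_nodes() {
  awk -v t="$1" '
    { host[NR] = $1; util[NR] = $2 + 0; sum += $2 }
    END {
      if (NR == 0) exit
      mean = sum / NR
      for (i = 1; i <= NR; i++) {
        if (util[i] > mean + t) state = "over"
        else if (util[i] < mean - t) state = "under"
        else state = "balanced"
        print host[i], state
      }
    }'
}
```

For instance, `printf 'mini05 80\nmini06 75\nmini07 10\n' | classify_nodes 8` has a mean of 55%, so mini05 and mini06 are reported as over and the nearly empty mini07 as under. That is the situation right after a new node joins.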
