
Node plan

| Hostname | IP | Role |
|---|---|---|
| k8s-master01 | 10.3.1.20 | etcd, Master, Node, keepalived |
| k8s-master02 | 10.3.1.21 | etcd, Master, Node, keepalived |
| k8s-master03 | 10.3.1.25 | etcd, Master, Node, keepalived |
| VIP | 10.3.1.29 | None |

Versions:

- OS: Ubuntu 16.04
- Docker: 17.03.2-ce
- k8s: v1.12

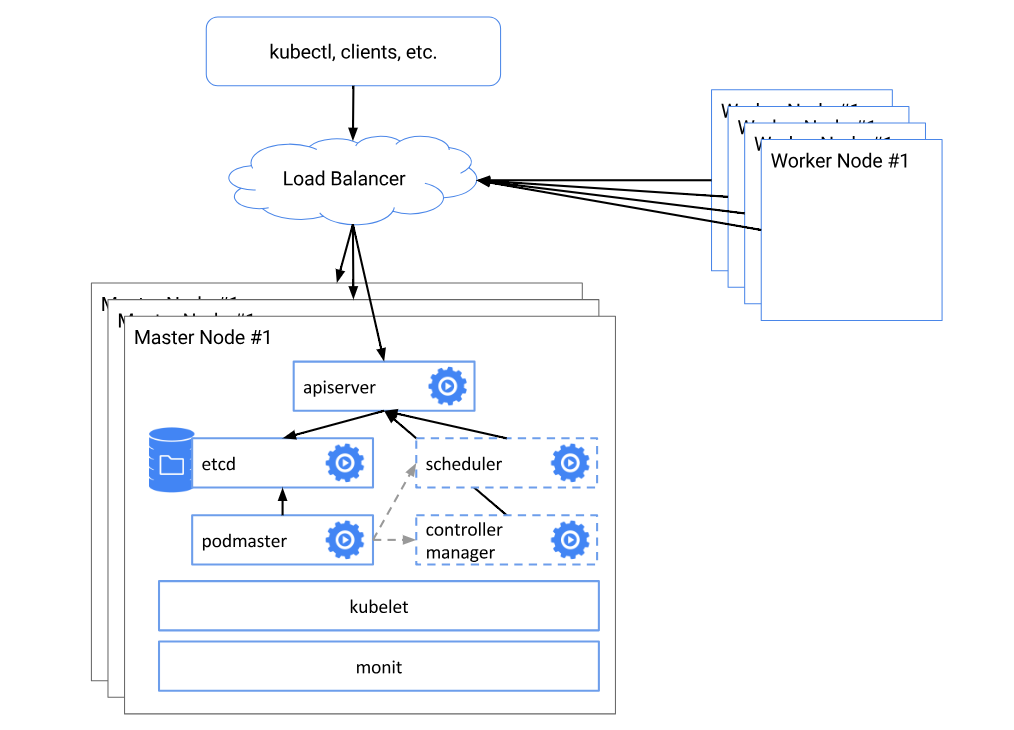

High-availability architecture diagram from the official documentation

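The two most important components for high availability:

- etcd: a distributed key-value store that holds all of the k8s cluster's data.
- kube-apiserver: the single entry point to the cluster and the hub through which all components communicate. The apiserver itself is stateless, so running several instances is easy.

Other core components:

- controller-manager and scheduler can also run as multiple instances, but only one instance of each is active at a time (chosen through leader election). This is because they change cluster state, and concurrent writers would break consistency.

The cluster components are loosely coupled, so there are many ways to make them highly available. With several kube-apiservers, the question is which one a client should connect to. The answer used here is a traditional haproxy+keepalived style setup in front of the apiservers that floats a VIP. Apiserver clients such as kubelet and kube-proxy connect to that VIP.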

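Preparation before installation

1. Set up password-less SSH login between all k8s nodes.
2. Synchronize time on all nodes.
3. Disable swap on every node with swapoff -a, otherwise kubelet fails to start. To keep swap off after a reboot, also comment out the swap entry in /etc/fstab.
4. Add every node's hostname and IP to /etc/hosts.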
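Items 3 and 4 can be scripted. The following is a minimal POSIX sh sketch, not part of the original guide. swap_is_off and ensure_hosts_entry are hypothetical helper names. The file paths are parameters (defaulting to /proc/swaps and /etc/hosts), so the functions can be tried against scratch files first.

```sh
#!/bin/sh
# Hypothetical pre-install helpers (a sketch, not from the original guide).

# Succeeds when no swap device is active. /proc/swaps always starts with a
# header line, so any additional line means swap is still enabled.
swap_is_off() (
  swaps_file=${1:-/proc/swaps}
  [ "$(awk 'END { print NR }' "$swaps_file")" -le 1 ]
)

# Appends "IP hostname" to the hosts file unless that pair is already there,
# so running it more than once does not create duplicate lines.
ensure_hosts_entry() (
  ip=$1
  name=$2
  hosts_file=${3:-/etc/hosts}
  # Escape the dots so they match literally in the regular expression.
  ip_re=$(printf '%s' "$ip" | sed 's/\./\\./g')
  if ! grep -Eq "^${ip_re}[[:space:]]+${name}([[:space:]]|\$)" "$hosts_file"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
  fi
)
```

For example, run the following as root on each node: swap_is_off || swapoff -a. Then run ensure_hosts_entry 10.3.1.20 k8s-master01, and do the same for k8s-master02 and k8s-master03.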

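kubeadm can create an HA cluster in two ways:

- kubeadm configures the etcd cluster and runs it in Pods on the master nodes.
- The etcd cluster is deployed separately.

Deploying etcd separately seems simpler, so that is the approach used here.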

Deploy the etcd cluster

A working etcd cluster is a prerequisite for the k8s cluster, so etcd is deployed first.

Create the CA certificate

Install the CFSSL certificate toolkit

Download the binaries directly:

```
mkdir -p /opt/bin
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
chmod +x cfssl_linux-amd64
mv cfssl_linux-amd64 /opt/bin/cfssl
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
chmod +x cfssljson_linux-amd64
mv cfssljson_linux-amd64 /opt/bin/cfssljson
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl-certinfo_linux-amd64
mv cfssl-certinfo_linux-amd64 /opt/bin/cfssl-certinfo
# Single quotes keep $PATH unexpanded until the profile is sourced at login
echo 'export PATH=/opt/bin:$PATH' > /etc/profile.d/k8s.sh
# Apply it to the current shell as well
. /etc/profile.d/k8s.sh
```

All k8s executables are placed in /opt/bin/.

Create the CA configuration file

```
root@k8s-master01:~# mkdir ssl
root@k8s-master01:~# cd ssl/
root@k8s-master01:~/ssl# cfssl print-defaults config > config.json
root@k8s-master01:~/ssl# cfssl print-defaults csr > csr.json
# Create the following ca-config.json, based on the format of config.json
# The expiry is set to 87600h (10 years)
root@k8s-master01:~/ssl# cat ca-config.json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ],
        "expiry": "87600h"
      }
    }
  }
}
```

Create the CA certificate signing request

```
root@k8s-master01:~/ssl# cat ca-csr.json
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GD",
      "L": "SZ",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```

Generate the CA certificate and private key

```
root@k8s-master01:~/ssl# cfssl gencert -initca ca-csr.json | cfssljson -bare ca
root@k8s-master01:~/ssl# ls ca*
ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem
```

Copy the CA certificate to the same directory on every node:

```
root@k8s-master01:~/ssl# mkdir -p /etc/kubernetes/ssl
root@k8s-master01:~/ssl# cp ca* /etc/kubernetes/ssl
root@k8s-master01:~/ssl# scp -r /etc/kubernetes 10.3.1.21:/etc/
root@k8s-master01:~/ssl# scp -r /etc/kubernetes 10.3.1.25:/etc/
```

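Download etcd:

With the CA certificate in place, etcd can now be configured.

```
root@k8s-master01:~# wget https://github.com/coreos/etcd/releases/download/v3.2.22/etcd-v3.2.22-linux-amd64.tar.gz
root@k8s-master01:~# tar -xzf etcd-v3.2.22-linux-amd64.tar.gz
root@k8s-master01:~# cd etcd-v3.2.22-linux-amd64
root@k8s-master01:~/etcd-v3.2.22-linux-amd64# cp etcd etcdctl /opt/bin/
```

For k8s v1.12, etcd must be version 3.2.18 or later. The etcd and etcdctl binaries are needed on all three etcd nodes.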

Create the etcd certificate

Create the etcd certificate signing request file:

```
root@k8s-master01:~/ssl# cat etcd-csr.json
{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "10.3.1.20",
    "10.3.1.21",
    "10.3.1.25"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GD",
      "L": "SZ",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
```

Important: the hosts field above must list the IP of every etcd node, otherwise etcd will fail to start.
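A missing IP only shows up later, as an etcd startup failure. It can therefore help to generate etcd-csr.json from the member list rather than editing it by hand. Below is a minimal POSIX sh sketch. gen_etcd_csr is a hypothetical helper, and its names block simply repeats the values used above.

```sh
#!/bin/sh
# Hypothetical helper: prints an etcd CSR whose "hosts" list is 127.0.0.1
# followed by every etcd member IP given as an argument.
gen_etcd_csr() (
  hosts='    "127.0.0.1"'
  for ip in "$@"; do
    # Each new entry closes the previous line with a comma.
    hosts="${hosts},
    \"${ip}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [
${hosts}
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "GD",
      "L": "SZ",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
)
```

Usage: gen_etcd_csr 10.3.1.20 10.3.1.21 10.3.1.25 > etcd-csr.json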

Generate the etcd certificate and private key:

```
root@k8s-master01:~/ssl# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
  -ca-key=/etc/kubernetes/ssl/ca-key.pem \
  -config=/etc/kubernetes/ssl/ca-config.json \
  -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
2018/10/01 10:01:14 [INFO] generate received request
2018/10/01 10:01:14 [INFO] received CSR
2018/10/01 10:01:14 [INFO] generating key: rsa-2048
2018/10/01 10:01:15 [INFO] encoded CSR
2018/10/01 10:01:15 [INFO] signed certificate with serial number 379903753757286569276081473959703411651822370300
2018/02/06 10:01:15 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").
root@k8s-master01:~/ssl# ls etcd*
etcd.csr etcd-csr.json etcd-key.pem etcd.pem
```

The value of -profile=kubernetes comes from the profiles field in the file passed with -config=/etc/kubernetes/ssl/ca-config.json.

Copy the certificates to the corresponding directory on all nodes:

```
root@k8s-master01:~/ssl# mkdir -p /etc/etcd/ssl
root@k8s-master01:~/ssl# cp etcd*.pem /etc/etcd/ssl
root@k8s-master01:~/ssl# scp -r /etc/etcd 10.3.1.21:/etc/
etcd-key.pem 100% 1675 1.5KB/s 00:00
etcd.pem 100% 1407 1.4KB/s 00:00
root@k8s-master01:~/ssl# scp -r /etc/etcd 10.3.1.25:/etc/
etcd-key.pem 100% 1675 1.6KB/s 00:00
etcd.pem 100% 1407 1.4KB/s 00:00
```

Create the etcd systemd unit file

Once the certificates are ready, the unit file can be configured:

```
root@k8s-master01:~# mkdir -p /var/lib/etcd   # the etcd working directory must exist first, on every etcd node
root@k8s-master01:~# cat /etc/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/etcd/etcd.conf
ExecStart=/opt/bin/etcd \
  --name=etcd-host0 \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \
  --initial-advertise-peer-urls=https://10.3.1.20:2380 \
  --listen-peer-urls=https://10.3.1.20:2380 \
  --listen-client-urls=https://10.3.1.20:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://10.3.1.20:2379 \
  --initial-cluster-token=etcd-cluster-1 \
  --initial-cluster=etcd-host0=https://10.3.1.20:2380,etcd-host1=https://10.3.1.21:2380,etcd-host2=https://10.3.1.25:2380 \
  --initial-cluster-state=new \
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

Start etcd:

```
root@k8s-master01:~/ssl# systemctl daemon-reload
root@k8s-master01:~/ssl# systemctl enable etcd
root@k8s-master01:~/ssl# systemctl start etcd
```

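Copy the unit file to the other two nodes. On each, change --name and replace the node's own IP in the four URL flags (--initial-advertise-peer-urls, --listen-peer-urls, --listen-client-urls, --advertise-client-urls). Then start etcd there the same way. Because the unit uses Type=notify, systemctl start on the first node may block until enough members are up to form a quorum.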
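Only --name and the node's own IP change between members. --initial-cluster and the certificate paths must be identical everywhere. Below is a minimal POSIX sh sketch that prints the ExecStart section for one member, ready to paste into that node's etcd.service. etcd_exec_start and INITIAL_CLUSTER are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: prints the etcd ExecStart lines for one member.
# Shared by all members; must match on every node.
INITIAL_CLUSTER="etcd-host0=https://10.3.1.20:2380,etcd-host1=https://10.3.1.21:2380,etcd-host2=https://10.3.1.25:2380"

# Usage: etcd_exec_start NAME IP
etcd_exec_start() (
  name=$1
  ip=$2
  # "\\" in an unquoted here-document yields a single backslash.
  cat <<EOF
ExecStart=/opt/bin/etcd \\
  --name=${name} \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/kubernetes/ssl/ca.pem \\
  --initial-advertise-peer-urls=https://${ip}:2380 \\
  --listen-peer-urls=https://${ip}:2380 \\
  --listen-client-urls=https://${ip}:2379,http://127.0.0.1:2379 \\
  --advertise-client-urls=https://${ip}:2379 \\
  --initial-cluster-token=etcd-cluster-1 \\
  --initial-cluster=${INITIAL_CLUSTER} \\
  --initial-cluster-state=new \\
  --data-dir=/var/lib/etcd
EOF
)
```

Usage: run etcd_exec_start etcd-host1 10.3.1.21 for k8s-master02, and etcd_exec_start etcd-host2 10.3.1.25 for k8s-master03.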

Check the cluster status:

Because etcd uses TLS certificates, etcdctl must be given the certificates as well:

```
# List the etcd members
root@k8s-master01:~# etcdctl --key-file /etc/etcd/ssl/etcd-key.pem --cert-file /etc/etcd/ssl/etcd.pem --ca-file /etc/kubernetes/ssl/ca.pem member list
702819a30dfa37b8: name=etcd-host2 peerURLs=https://10.3.1.20:2380 clientURLs=https://10.3.1.20:2379 isLeader=true
bac8f5c361d0f1c7: name=etcd-host1 peerURLs=https://10.3.1.21:2380 clientURLs=https://10.3.1.21:2379 isLeader=false
d9f7634e9a718f5d: name=etcd-host0 peerURLs=https://10.3.1.25:2380 clientURLs=https://10.3.1.25:2379 isLeader=false
# Or check whether the cluster is healthy
root@k8s-master01:~/ssl# etcdctl --key-file /etc/etcd/ssl/etcd-key.pem --cert-file /etc/etcd/ssl/etcd.pem --ca-file /etc/kubernetes/ssl/ca.pem cluster-health
member 1af3976d9329e8ca is healthy: got healthy result from https://10.3.1.20:2379
member 34b6c7df0ad76116 is healthy: got healthy result from https://10.3.1.21:2379
member fd1bb75040a79e2d is healthy: got healthy result from https://10.3.1.25:2379
cluster is healthy
```
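Typing the three TLS flags on every call gets tedious, so a small wrapper helps. The sketch below assumes etcdctl 3.2 is running in its default v2 API mode, as the member list and cluster-health commands above are. etcdctl_tls and ETCD_ENDPOINTS are hypothetical names. Passing all three endpoints means the command still works when the local member is down.

```sh
#!/bin/sh
# Hypothetical wrapper around etcdctl using the same v2 API TLS flags as above.
ETCD_ENDPOINTS=${ETCD_ENDPOINTS:-https://10.3.1.20:2379,https://10.3.1.21:2379,https://10.3.1.25:2379}

etcdctl_tls() {
  # Global flags must come before the etcdctl subcommand.
  etcdctl --endpoints "$ETCD_ENDPOINTS" \
    --ca-file /etc/kubernetes/ssl/ca.pem \
    --cert-file /etc/etcd/ssl/etcd.pem \
    --key-file /etc/etcd/ssl/etcd-key.pem \
    "$@"
}
```

Usage: etcdctl_tls cluster-health or etcdctl_tls member list.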

Install Docker

```
apt-get update
apt-get install -y \
    apt-transport-https \
    ca-certificates \
    curl \
    software-properties-common
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
apt-key fingerprint 0EBFCD88
add-apt-repository \
   "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
   $(lsb_release -cs) \
   stable"
apt-get update
apt-get install -y docker-ce=17.03.2~ce-0~ubuntu-xenial
```

After installing Docker, set the iptables FORWARD policy to ACCEPT:

```
# Docker sets it to DROP by default
iptables -P FORWARD ACCEPT
```

Install kubeadm

- kubeadm must be installed on every node

```
apt-get update && apt-get install -y apt-transport-https curl
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
echo 'deb http://apt.kubernetes.io/ kubernetes-xenial main' > /etc/apt/sources.list.d/kubernetes.list
apt-get update
apt-get install -y kubeadm
# This also installs kubectl, kubelet, kubernetes-cni and socat as dependencies
```

After installation, enable the kubelet service at boot:

```
systemctl enable kubelet
```

kubelet must be enabled at boot so that the k8s cluster components start again automatically after a system restart.

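Cluster initialization

Next, initialize the cluster on the three masters.

The difference from a single-master kubeadm setup is that for an HA cluster, kubeadm is given a configuration file. kubeadm then runs init on each of the masters from that same file.

Write the kubeadm configuration file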

```
root@k8s-master01:~/kubeadm-config# cat kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1alpha3
kind: ClusterConfiguration
kubernetesVersion: stable
networking:
  podSubnet: 192.168.0.0/16
apiServerCertSANs:
- k8s-master01
- k8s-master02
- k8s-master03
- 10.3.1.20
- 10.3.1.21
- 10.3.1.25
- 10.3.1.29
- 127.0.0.1
etcd:
  external:
    endpoints:
    - https://10.3.1.20:2379
    - https://10.3.1.21:2379
    - https://10.3.1.25:2379
    caFile: /etc/kubernetes/ssl/ca.pem
    certFile: /etc/etcd/ssl/etcd.pem
    keyFile: /etc/etcd/ssl/etcd-key.pem
    dataDir: /var/lib/etcd
token: 547df0.182e9215291ff27f
tokenTTL: "0"
```

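Notes on the configuration:

- In v1.12 the kubeadm API version has moved to kubeadm.k8s.io/v1alpha3, and the kind is now ClusterConfiguration.
- podSubnet: the custom Pod network CIDR.
- apiServerCertSANs: the hostnames and IPs of all kube-apiserver nodes, plus the VIP.
- etcd: external means an external etcd cluster is used. List the etcd endpoints and certificate paths under it. If kubeadm manages etcd instead, use local plus any custom startup arguments.
- token: optional; a token can be generated with kubeadm token generate. In v1alpha3 the bootstrap token settings belong to InitConfiguration (bootstrapTokens) rather than ClusterConfiguration. That is likely why the init output below reports a different token from the one configured here.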
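kubeadm only accepts bootstrap tokens of the form [a-z0-9]{6}.[a-z0-9]{16}. When a token is written into the config by hand, a quick format check avoids a failed init. This is a minimal sketch. is_valid_kubeadm_token is a hypothetical helper; in practice the token would come from kubeadm token generate.

```sh
#!/bin/sh
# Hypothetical helper: succeeds only for a token in kubeadm's bootstrap
# token format, i.e. 6 and 16 lowercase alphanumerics joined by a dot.
is_valid_kubeadm_token() {
  # LC_ALL=C keeps [a-z] from matching uppercase letters in some locales.
  printf '%s\n' "$1" | LC_ALL=C grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}
```

For example, is_valid_kubeadm_token 547df0.182e9215291ff27f succeeds, while the same token with uppercase letters or without the dot is rejected.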

Run init on the first master:

```
# Make sure swap is off first
root@k8s-master01:~/kubeadm-config# kubeadm init --config kubeadm-config.yaml
```

The output is as follows:

```
# Kubernetes v1.12.0 starts initializing
[init] using Kubernetes version: v1.12.0
# Pre-flight checks before initialization
[preflight] running pre-flight checks
[preflight/images] Pulling images required for setting up a Kubernetes cluster
[preflight/images] This might take a minute or two, depending on the speed of your internet connection
# The images can also be pulled before init with 'kubeadm config images pull'
[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'
# Generate the kubelet configuration
[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[preflight] Activating the kubelet service
# Generate certificates
[certificates] Generated ca certificate and key.
[certificates] Generated apiserver certificate and key.
[certificates] apiserver serving cert is signed for DNS names [k8s-master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local k8s-master01 k8s-master02 k8s-master03] and IPs [10.96.0.1 10.3.1.20 10.3.1.20 10.3.1.21 10.3.1.25 10.3.1.29 127.0.0.1]
[certificates] Generated apiserver-kubelet-client certificate and key.
[certificates] Generated front-proxy-ca certificate and key.
[certificates] Generated front-proxy-client certificate and key.
[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"
[certificates] Generated sa key and public key.
# Generate kubeconfig files
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"
[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"
# Generate the static Pod manifests to be started
[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"
[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"
[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"
# kubelet reads the Pod manifests in /etc/kubernetes/manifests and starts them
[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"
# Images are pulled according to the manifests
[init] this might take a minute or longer if the control plane images have to be pulled
# All components have started
[apiclient] All control plane components are healthy after 27.014452 seconds
# Upload the configuration to the "kubeadm-config" ConfigMap in "kube-system"
[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.12" in namespace kube-system with the configuration for the kubelets in the cluster
# Label the master and add a taint to it
[markmaster] Marking the node k8s-master01 as master by adding the label "node-role.kubernetes.io/master=''"
[markmaster] Marking the node k8s-master01 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-master01" as an annotation
# The bootstrap token in use
[bootstraptoken] using token: w79yp6.erls1tlc4olfikli
[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace
# Finally, install the essential add-ons: CoreDNS and the kube-proxy DaemonSet
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node
as root:

# Save the command below; it is needed when the other nodes join.
kubeadm join 10.3.1.20:6443 --token w79yp6.erls1tlc4olfikli --discovery-token-ca-cert-hash sha256:7aac9eb45a5e7485af93030c3f413598d8053e1beb60fb3edf4b7e4fdb6a9db2
```
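The join command embeds a bootstrap token (valid for 24 hours by default) and a hash of the cluster CA's public key. If the command is lost or the token expires, run kubeadm token create --print-join-command on a master to print a fresh one. The hash alone can be recomputed from /etc/kubernetes/pki/ca.crt with openssl. The pipeline below follows the one given in the kubeadm documentation, wrapped in a hypothetical helper named ca_cert_hash.

```sh
#!/bin/sh
# Hypothetical helper: prints the value for --discovery-token-ca-cert-hash,
# i.e. "sha256:" plus the SHA-256 of the CA's DER-encoded public key.
ca_cert_hash() (
  ca=${1:-/etc/kubernetes/pki/ca.crt}
  hash=$(openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  printf 'sha256:%s\n' "$hash"
)
```

Run ca_cert_hash on k8s-master01; it should print the same sha256:... value as in the join command above.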

- Run the commands suggested in the output:

```
root@k8s-master01:~# mkdir -p $HOME/.kube
root@k8s-master01:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
root@k8s-master01:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

There is now one node, and its status is "NotReady":

```
root@k8s-master01:~# kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master01 NotReady master 3m50s v1.12.0
```

The first master's core components are running as Pods:

```
root@k8s-master01:~# kubectl get pod -n kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE
coredns-576cbf47c7-2dqsj 0/1 Pending 0 4m29s <none> <none> <none>
coredns-576cbf47c7-7sqqz 0/1 Pending 0 4m29s <none> <none> <none>
kube-apiserver-k8s-master01 1/1 Running 0 3m46s 10.3.1.20 k8s-master01 <none>
kube-controller-manager-k8s-master01 1/1 Running 0 3m40s 10.3.1.20 k8s-master01 <none>
kube-proxy-dpvkk 1/1 Running 0 4m30s 10.3.1.20 k8s-master01 <none>
kube-scheduler-k8s-master01 1/1 Running 0 3m37s 10.3.1.20 k8s-master01 <none>
```

CoreDNS stays Pending because no Pod network (CNI) add-on has been installed yet, which is also why the node is NotReady.

Copy the generated pki directory to the other master nodes:

```
root@k8s-master01:~# scp -r /etc/kubernetes/pki root@10.3.1.21:/etc/kubernetes/
root@k8s-master01:~# scp -r /etc/kubernetes/pki root@10.3.1.25:/etc/kubernetes/
```

Copy the kubeadm configuration file over as well:

```
root@k8s-master01:~/kubeadm-config# scp kubeadm-config.yaml root@10.3.1.21:~/
root@k8s-master01:~/kubeadm-config# scp kubeadm-config.yaml root@10.3.1.25:~/
```

The first master is now deployed. The second and third masters, and any further masters, are initialized with the same kubeadm-config.yaml.
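With more masters, the copy steps above become a loop. This is a minimal sketch. OTHER_MASTERS, run and push_master_files are hypothetical names. Setting DRY_RUN=1 prints the scp commands instead of executing them, which is a cheap way to review what will be copied where. The sketch assumes it is run from the directory that holds kubeadm-config.yaml.

```sh
#!/bin/sh
# Hypothetical helpers: copy the shared certificates and kubeadm config
# from k8s-master01 to every other master.
OTHER_MASTERS=${OTHER_MASTERS:-"10.3.1.21 10.3.1.25"}

# Executes its arguments, or only prints them when DRY_RUN is non-empty.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

push_master_files() {
  # Unquoted on purpose: split the space-separated host list.
  for host in $OTHER_MASTERS; do
    run scp -r /etc/kubernetes/pki "root@${host}:/etc/kubernetes/"
    run scp kubeadm-config.yaml "root@${host}:~/"
  done
}
```

Usage: DRY_RUN=1 push_master_files to preview, then push_master_files to copy.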

Run kubeadm init on the second master:

```
root@k8s-master02:~# kubeadm init --config kubeadm-config.yaml
[init] using Kubernetes version: v1.12.0
[preflight] running pre-flight checks
[preflight/images] Pulling images required for setting up a Kubernetes cluster
[preflight/images] This might take a minute or two, depending on the speed of your internet connection
```

Run kubeadm init on the third master:

```
root@k8s-master03:~# kubeadm init --config kubeadm-config.yaml
[init] using Kubernetes version: v1.12.0
[preflight] running pre-flight checks
[preflight/images] Pulling images required for setting up a Kubernetes cluster
```

Finally, check the nodes:

```
root@k8s-master01:~# kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master01 NotReady master 31m v1.12.0
k8s-master02 NotReady master 15m v1.12.0
k8s-master03 NotReady master 6m52s v1.12.0
```

Check the status of each component:

```
# The core components are now Running
root@k8s-master01:~# kubectl get pod -n kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE
coredns-576cbf47c7-2dqsj 0/1 ContainerCreating 0 31m <none> k8s-master02 <none>
coredns-576cbf47c7-7sqqz 0/1 ContainerCreating 0 31m <none> k8s-master02 <none>
kube-apiserver-k8s-master01 1/1 Running 0 30m 10.3.1.20 k8s-master01 <none>
kube-apiserver-k8s-master02 1/1 Running 0 15m 10.3.1.21 k8s-master02 <none>
kube-apiserver-k8s-master03 1/1 Running 0 6m24s 10.3.1.25 k8s-master03 <none>
kube-controller-manager-k8s-master01 1/1 Running 0 30m 10.3.1.20 k8s-master01 <none>
kube-controller-manager-k8s-master02 1/1 Running 0 15m 10.3.1.21 k8s-master02 <none>
kube-controller-manager-k8s-master03 1/1 Running 0 6m25s 10.3.1.25 k8s-master03 <none>
kube-proxy-6tfdg 1/1 Running 0 16m 10.3.1.21 k8s-master02 <none>
kube-proxy-dpvkk 1/1 Running 0 31m 10.3.1.20 k8s-master01 <none>
kube-proxy-msqgn 1/1 Running 0 7m44s 10.3.1.25 k8s-master03 <none>
kube-scheduler-k8s-master01 1/1 Running 0 30m 10.3.1.20 k8s-master01 <none>
kube-scheduler-k8s-master02 1/1 Running 0 15m 10.3.1.21 k8s-master02 <none>
kube-scheduler-k8s-master03 1/1 Running 0 6m26s 10.3.1.25 k8s-master03 <none>
```

Remove the taint from all masters so that Pods can be scheduled on them as well:

```
root@k8s-master01:~# kubectl taint nodes --all node-role.kubernetes.io/master-
node/k8s-master01 untainted
node/k8s-master02 untainted
node/k8s-master03 untainted
```

All nodes are "NotReady" because no CNI plugin has been installed yet.

Install the Calico network plugin:

```
root@k8s-master01:~# kubectl apply -f https://docs.projectcalico.org/v3.1/getting-started/kubernetes/installation/hosted/kubeadm/1.7/calico.yaml
configmap/calico-config created
daemonset.extensions/calico-etcd created
service/calico-etcd created
daemonset.extensions/calico-node created
deployment.extensions/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin created
clusterrole.rbac.authorization.k8s.io/calico-cni-plugin created
serviceaccount/calico-cni-plugin created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
```
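The podSubnet 192.168.0.0/16 in kubeadm-config.yaml matches the default IPv4 pool (CALICO_IPV4POOL_CIDR) in this Calico manifest. If either value is changed, the other must be changed to match. Below is a minimal sketch for comparing the two before running kubectl apply. calico_pool_cidr and kubeadm_pod_subnet are hypothetical helpers that do plain text matching, not YAML parsing. They assume the manifest puts the value: line directly after the name: CALICO_IPV4POOL_CIDR line.

```sh
#!/bin/sh
# Hypothetical helpers (plain text matching, not a YAML parser).

# Prints the value on the line after "name: CALICO_IPV4POOL_CIDR".
calico_pool_cidr() {
  awk '/name: CALICO_IPV4POOL_CIDR/ {
         getline
         sub(/.*value: */, "")
         gsub(/"/, "")
         print
         exit
       }' "$1"
}

# Prints the podSubnet value from a kubeadm config file.
kubeadm_pod_subnet() {
  awk '$1 == "podSubnet:" { print $2; exit }' "$1"
}
```

After downloading the manifest (for example with curl -O), compare the output of calico_pool_cidr calico.yaml with kubeadm_pod_subnet kubeadm-config.yaml.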

Check the node status again:

```
root@k8s-master01:~# kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master01 Ready master 39m v1.12.0
k8s-master02 Ready master 24m v1.12.0
k8s-master03 Ready master 15m v1.12.0
```

All components on every master are now running normally:

```
root@k8s-master01:~# kubectl get pod -n kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE
calico-etcd-dcbtp 1/1 Running 0 102s 10.3.1.25 k8s-master03 <none>
calico-etcd-hmd2h 1/1 Running 0 101s 10.3.1.20 k8s-master01 <none>
calico-etcd-pnksz 1/1 Running 0 99s 10.3.1.21 k8s-master02 <none>
calico-kube-controllers-75fb4f8996-dxvml 1/1 Running 0 117s 10.3.1.25 k8s-master03 <none>
calico-node-6kvg5 2/2 Running 1 117s 10.3.1.21 k8s-master02 <none>
calico-node-82wjt 2/2 Running 1 117s 10.3.1.25 k8s-master03 <none>
calico-node-zrtj4 2/2 Running 1 117s 10.3.1.20 k8s-master01 <none>
coredns-576cbf47c7-2dqsj 1/1 Running 0 38m 192.168.85.194 k8s-master02 <none>
coredns-576cbf47c7-7sqqz 1/1 Running 0 38m 192.168.85.193 k8s-master02 <none>
kube-apiserver-k8s-master01 1/1 Running 0 37m 10.3.1.20 k8s-master01 <none>
kube-apiserver-k8s-master02 1/1 Running 0 22m 10.3.1.21 k8s-master02 <none>
kube-apiserver-k8s-master03 1/1 Running 0 12m 10.3.1.25 k8s-master03 <none>
kube-controller-manager-k8s-master01 1/1 Running 0 37m 10.3.1.20 k8s-master01 <none>
kube-controller-manager-k8s-master02 1/1 Running 0 21m 10.3.1.21 k8s-master02 <none>
kube-controller-manager-k8s-master03 1/1 Running 0 12m 10.3.1.25 k8s-master03 <none>
kube-proxy-6tfdg 1/1 Running 0 23m 10.3.1.21 k8s-master02 <none>
kube-proxy-dpvkk 1/1 Running 0 38m 10.3.1.20 k8s-master01 <none>
kube-proxy-msqgn 1/1 Running 0 14m 10.3.1.25 k8s-master03 <none>
kube-scheduler-k8s-master01 1/1 Running 0 37m 10.3.1.20 k8s-master01 <none>
kube-scheduler-k8s-master02 1/1 Running 0 22m 10.3.1.21 k8s-master02 <none>
kube-scheduler-k8s-master03 1/1 Running 0 12m 10.3.1.25 k8s-master03 <none>
```

Deploy worker nodes

On every worker node, run kubeadm join to add it to the Kubernetes cluster. Here all workers join through k8s-master01's apiserver address.

Join k8s-node01 to the cluster:

```
root@k8s-node01:~# kubeadm join 10.3.1.20:6443 --token w79yp6.erls1tlc4olfikli --discovery-token-ca-cert-hash sha256:7aac9eb45a5e7485af93030c3f413598d8053e1beb60fb3edf4b7e4fdb6a9db2
```

The output is as follows:

```
[preflight] running pre-flight checks
[WARNING RequiredIPVSKernelModulesAvailable]: the IPVS proxier will not be used, because the following required kernel modules are not loaded: [ip_vs_rr ip_vs_wrr ip_vs_sh] or no builtin kernel ipvs support: map[ip_vs_rr:{} ip_vs_wrr:{} ip_vs_sh:{} nf_conntrack_ipv4:{} ip_vs:{}]
you can solve this problem with following methods:
1. Run 'modprobe -- ' to load missing kernel modules;
2. Provide the missing builtin kernel ipvs support
[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'
[discovery] Trying to connect to API Server "10.3.1.20:6443"
[discovery] Created cluster-info discovery client, requesting info from "https://10.3.1.20:6443"
[discovery] Requesting info from "https://10.3.1.20:6443" again to validate TLS against the pinned public key
[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "10.3.1.20:6443"
[discovery] Successfully established connection with API Server "10.3.1.20:6443"
[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.12" ConfigMap in the kube-system namespace
[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[preflight] Activating the kubelet service
[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...
[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "k8s-node01" as an annotation

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.
```

Check the components running on the node:

```
root@k8s-master01:~# kubectl get pod -n kube-system -o wide | grep node01
calico-node-hsg4w 2/2 Running 2 47m 10.3.1.63 k8s-node01 <none>
kube-proxy-xn795 1/1 Running 0 47m 10.3.1.63 k8s-node01 <none>
```

Check the current node status:

```
# There are now four nodes, all Ready
root@k8s-master01:~# kubectl get node
NAME STATUS ROLES AGE VERSION
k8s-master01 Ready master 132m v1.12.0
k8s-master02 Ready master 117m v1.12.0
k8s-master03 Ready master 108m v1.12.0
k8s-node01 Ready <none> 52m v1.12.0
```

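Deploy keepalived

Deploy keepalived on the three master nodes, i.e. apiserver + keepalived floating a VIP. Other clients, such as kubectl, kubelet and kube-proxy, use the VIP when connecting to the apiserver. A load balancer is not used for now.

- Install keepalived:

```
apt-get install -y keepalived
```

- Write the keepalived configuration file: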

```
# On the MASTER node
cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from Alexandre.Cassen@firewall.loc
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id KEP
}

vrrp_script chk_k8s {
    script "killall -0 kube-apiserver"
    interval 1
    weight -5
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.3.1.29
    }
    track_script {
        chk_k8s
    }
    notify_master "/data/service/keepalived/notify.sh master"
    notify_backup "/data/service/keepalived/notify.sh backup"
    notify_fault "/data/service/keepalived/notify.sh fault"
}
```

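Copy this configuration file to the other masters. On each, change state MASTER to state BACKUP (keepalived's name for a standby node) and lower the priority. The result is a floating VIP, 10.3.1.29, which was already included in apiServerCertSANs when the certificates were created.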
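The per-node differences are only state and priority, and the priorities need some care. chk_k8s lowers a node's priority by 5 when kube-apiserver is not running, so a BACKUP only takes over if its priority is higher than the failed MASTER's reduced priority. With the MASTER at 100, the backups therefore need priorities between 96 and 99 (for example 98 and 97). With a gap of 5 or more, the VIP would stay on a node whose apiserver is down. Below is a minimal sketch that prints the vrrp_instance block for one node. keepalived_instance is a hypothetical helper, and eth0 is assumed to be the NIC name, as in the file above.

```sh
#!/bin/sh
# Hypothetical helper: prints the vrrp_instance block for one master.
# Usage: keepalived_instance STATE PRIORITY [INTERFACE]
keepalived_instance() (
  state=$1
  priority=$2
  iface=${3:-eth0}
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.3.1.29
    }
    track_script {
        chk_k8s
    }
    notify_master "/data/service/keepalived/notify.sh master"
    notify_backup "/data/service/keepalived/notify.sh backup"
    notify_fault "/data/service/keepalived/notify.sh fault"
}
EOF
)
```

Usage: keepalived_instance BACKUP 98 for k8s-master02 and keepalived_instance BACKUP 97 for k8s-master03. The output replaces the vrrp_instance section of each copy.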

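Update the client configuration

After kubeadm init, kubelet and kube-proxy are configured to reach one specific kube-apiserver address rather than the VIP. This step therefore changes the apiserver address in the configuration of both components to the VIP.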
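For kubelet, the address is on the server: line of /etc/kubernetes/kubelet.conf on each node. kube-proxy reads its kubeconfig from the kube-proxy ConfigMap in kube-system. That ConfigMap can be changed with kubectl -n kube-system edit configmap kube-proxy, after which the kube-proxy Pods need to be recreated to pick up the change. Below is a minimal sketch of the file edit. point_kubeconfig_at_vip is a hypothetical helper that keeps a .bak copy. The default https://10.3.1.29:6443 assumes the apiservers listen on 6443, as in the join command above.

```sh
#!/bin/sh
# Hypothetical helper: rewrites every "server:" line of a kubeconfig file
# to the given URL (default: the VIP), keeping the original as FILE.bak.
point_kubeconfig_at_vip() (
  file=$1
  new_server=${2:-https://10.3.1.29:6443}
  sed -i.bak "s#^\([[:space:]]*server:\).*#\1 ${new_server}#" "$file"
)
```

Usage: point_kubeconfig_at_vip /etc/kubernetes/kubelet.conf && systemctl restart kubelet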

Verify the cluster

Create an nginx deployment:

```
root@k8s-master01:~# kubectl run nginx --image=nginx:1.10 --port=80 --replicas=1
deployment.apps/nginx created
```

Check that the nginx pod has been created:

```
root@k8s-master01:~# kubectl get pod -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE
nginx-787b58fd95-p9jwl 1/1 Running 0 70s 192.168.45.23 k8s-node02 <none>
```

Create a NodePort service for nginx:

```
$ kubectl expose deployment nginx --type=NodePort --port=80
service "nginx" exposed
```

Check the nginx service:

```
$ kubectl get svc -l=run=nginx -o wide
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR
nginx NodePort 10.101.144.192 <none> 80:30847/TCP 10m run=nginx
```

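Verify that the NodePort service is serving requests:

```
$ curl 10.3.1.21:30847
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
body {
width: 35em;
...
```

This shows that the HA cluster is working. At the time of writing, kubeadm's HA support is still at the v1alpha stage, so use it in production with caution. The official documentation has more detailed deployment instructions.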
