
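kubeadm is the official Kubernetes tool for quickly installing a Kubernetes cluster. It is updated with every Kubernetes release, and each release adjusts some cluster configuration practices, so experimenting with kubeadm is a good way to learn the latest official best practices for cluster configuration.

In the recently released Kubernetes 1.15, kubeadm's support for configuring HA clusters has reached beta, which means kubeadm is getting closer to being usable in production.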

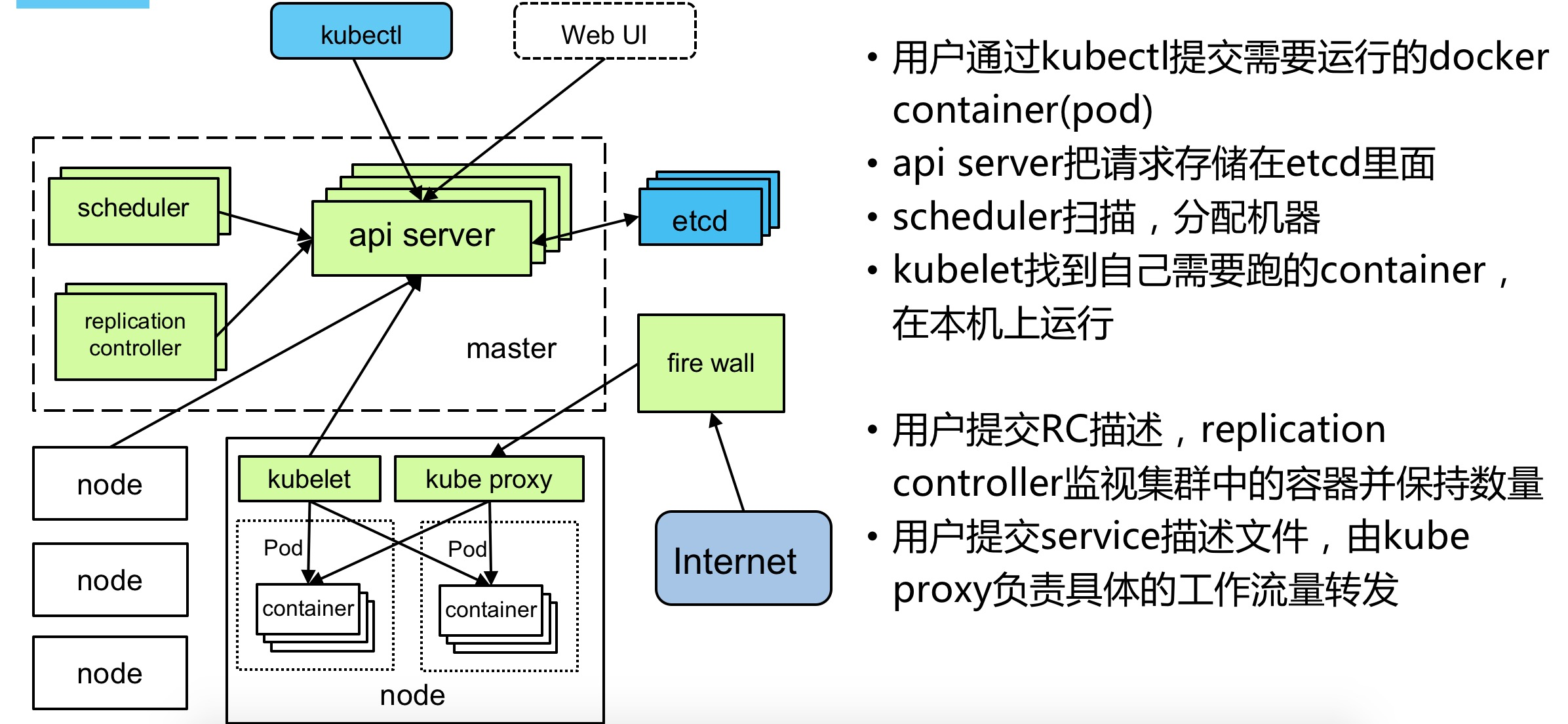

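Kubernetes cluster components:

- etcd: a highly available key/value store and service discovery system

- flannel: provides cross-host container networking

- kube-apiserver: serves the Kubernetes cluster API

- kube-controller-manager: runs the controllers that keep cluster services in their desired state

- kube-scheduler: schedules containers onto Nodes

- kubelet: starts containers on a Node according to the container specs defined in its configuration

- kube-proxy: provides network proxying for Services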

I. Environment

| Hostname | IP address |
| --- | --- |
| k8s-master | 192.168.169.21 |
| k8s-node1 | 192.168.169.24 |
| k8s-node2 | 192.168.169.25 |
| k8s-node3 | 192.168.169.26 |

1. Operating system: CentOS 7.6

[root@k8s-master ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

2. Kubernetes version: v1.15.0

kube-apiserver v1.15.0

kube-controller-manager v1.15.0

kube-proxy v1.15.0

kube-scheduler v1.15.0

etcd 3.3.10

pause 3.1

coredns 1.3.1

II. Preparation

2.1 System configuration

Complete the following preparation on all four CentOS 7.6 hosts before installing.

Upgrade the system:

# yum -y update

Configure /etc/hosts:

# cat /etc/hosts

127.0.0.1 localhost

192.168.1.21 k8s-master

192.168.1.24 k8s-node1

192.168.1.25 k8s-node2

192.168.1.26 k8s-node3

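If the firewall is enabled on the hosts, you need to open the ports required by the Kubernetes components; see the "Check required ports" section of Installing kubeadm. For simplicity, the firewall is disabled on every node here: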

# systemctl stop firewalld

# systemctl disable firewalld

Disable SELinux:

# setenforce 0

# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

#

SELINUX=disabled

Create the file /etc/sysctl.d/k8s.conf with the following content:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

Run the following commands to apply the changes:

# modprobe br_netfilter

# sysctl -p /etc/sysctl.d/k8s.conf
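The sysctl step above can also be scripted so that it is repeatable across nodes. The following is only a minimal sketch: the function name write_k8s_sysctl is made up for illustration, and the target path defaults to the file used in this article.

```shell
# Write the three kernel settings from this article to a sysctl drop-in file.
# The function name is hypothetical; the path defaults to /etc/sysctl.d/k8s.conf.
write_k8s_sysctl() {
    target="${1:-/etc/sysctl.d/k8s.conf}"
    cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

Run as root, it would be used as write_k8s_sysctl && modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf, which mirrors the manual commands above.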

2.2 Prerequisites for enabling ipvs in kube-proxy

ipvs is already part of the mainline kernel, so enabling ipvs for kube-proxy only requires loading the following kernel modules:

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

Run the following script on all Kubernetes nodes:

# cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

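The script above creates /etc/sysconfig/modules/ipvs.modules so that the required modules are loaded automatically after a node reboots. Use lsmod | grep -e ip_vs -e nf_conntrack_ipv4 to check that the required kernel modules have been loaded.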

Next, make sure the ipset package is installed on every node:

# yum -y install ipset

To make it easier to inspect the ipvs proxy rules, it is also worth installing the management tool ipvsadm:

# yum -y install ipvsadm

If these prerequisites are not met, kube-proxy falls back to iptables mode even when its configuration enables ipvs mode.
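Because a missing module silently causes the fallback to iptables mode, it can help to check the module list explicitly rather than reading the grep output by eye. The sketch below is an illustration only; the function name missing_ipvs_modules is hypothetical, and it expects lsmod-style text on standard input.

```shell
# Print each required ipvs-related module that is absent from lsmod-style
# input on stdin. The module list is the one given in this article.
missing_ipvs_modules() {
    required="ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4"
    # The first column of lsmod output is the module name.
    loaded=$(awk '{print $1}')
    for mod in $required; do
        if ! printf '%s\n' "$loaded" | grep -qx "$mod"; then
            echo "$mod"
        fi
    done
}
```

On a node it would be run as lsmod | missing_ipvs_modules; empty output means all five modules are loaded.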

2.3 Installing Docker

Kubernetes has used the CRI (Container Runtime Interface) since 1.6. The default container runtime is still Docker, through the dockershim CRI implementation built into the kubelet.

Add the Docker yum repository:

# yum install -y yum-utils device-mapper-persistent-data lvm2

# yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

List the available Docker versions:

# yum list docker-ce.x86_64 --showduplicates | sort -r

docker-ce.x86_64 3:18.09.7-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.6-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.5-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.4-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.3-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.0-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.3.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.2.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.1.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.0.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable

The Docker versions currently supported by Kubernetes 1.15 are 1.13.1, 17.03, 17.06, 17.09, 18.06, and 18.09. Docker 18.09.7 is installed on every node here.

# yum makecache fast

# yum install -y --setopt=obsoletes=0 docker-ce

# systemctl start docker

# systemctl enable docker

To install a specific Docker version:

# yum install -y --setopt=obsoletes=0 docker-ce-18.09.7-3.el7

Confirm that the default policy of the FORWARD chain in the iptables filter table is ACCEPT:

# iptables -nvL

Chain INPUT (policy ACCEPT 263 packets, 19209 bytes)

pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER-USER all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 DOCKER-ISOLATION-STAGE-1 all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

2.4 Changing the Docker cgroup driver to systemd

According to the CRI installation documentation, on Linux distributions that use systemd as the init system, using systemd as Docker's cgroup driver keeps nodes more stable under resource pressure, so the cgroup driver is changed to systemd on every node here.

Create or edit /etc/docker/daemon.json:

{

  "exec-opts": ["native.cgroupdriver=systemd"]

}
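If the file is created from a script rather than an editor, care is needed not to clobber settings that are already there. The sketch below assumes that refusing to overwrite is the desired behaviour; the function name write_docker_daemon_json is made up for illustration.

```shell
# Write the systemd cgroup-driver setting to a Docker daemon.json file.
# Refuses to overwrite an existing file so that other settings are not lost.
write_docker_daemon_json() {
    target="${1:-/etc/docker/daemon.json}"
    if [ -e "$target" ]; then
        echo "refusing to overwrite existing $target; merge the setting by hand" >&2
        return 1
    fi
    mkdir -p "$(dirname "$target")"
    cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

Docker still has to be restarted afterwards for the new cgroup driver to take effect.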

* If the machine reaches the internet through a proxy, configure an HTTP proxy for Docker:

# mkdir /etc/systemd/system/docker.service.d

# vim /etc/systemd/system/docker.service.d/http-proxy.conf

[Service]

Environment="HTTP_PROXY=http://192.168.1.1:3128"

Restart Docker:

# systemctl daemon-reload

# systemctl restart docker

# docker info | grep Cgroup

Cgroup Driver: systemd

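# systemctl show docker --property Environment

III. Deploying Kubernetes with kubeadm

3.1 Installing kubeadm and kubelet

Master configuration

Install kubeadm and kubelet:

3.1.1 Configure the kubernetes.repo repository. The official repository is not reachable from mainland China, so the Aliyun yum mirror is used here: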

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Check whether https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64 is reachable; if it is not, you need ×××. The command below checks the Aliyun mirror configured above:

# curl https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

# yum -y makecache fast

# yum install -y kubelet kubeadm kubectl

…

Installed:

kubeadm.x86_64 0:1.15.0-0 kubectl.x86_64 0:1.15.0-0 kubelet.x86_64 0:1.15.0-0

Dependency Installed:

conntrack-tools.x86_64 0:1.4.4-4.el7 cri-tools.x86_64 0:1.12.0-0 kubernetes-cni.x86_64 0:0.7.5-0 libnetfilter_cthelper.x86_64 0:1.0.0-9.el7

libnetfilter_cttimeout.x86_64 0:1.0.0-6.el7 libnetfilter_queue.x86_64 0:1.0.2-2.el7_2

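The install output shows that three dependencies were installed as well: cri-tools, kubernetes-cni, and socat:

• As of Kubernetes 1.14, the official packages depend on cni 0.7.5

• socat is a dependency of kubelet

• cri-tools is the command-line tool for the CRI (Container Runtime Interface)

Running kubelet --help shows that most of kubelet's former command-line flags are now DEPRECATED, for example: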

……

--address 0.0.0.0 The IP address for the Kubelet to serve on (set to 0.0.0.0 for all IPv4 interfaces and `::` for all IPv6 interfaces) (default 0.0.0.0) (DEPRECATED: This parameter should be set via the config file specified by the Kubelet's --config flag. See https://kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file/ for more information.)

……

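The official recommendation is to pass a configuration file with --config and to set what these flags used to configure in that file; see Set Kubelet parameters via a config file for details. Kubernetes made this change to support Dynamic Kubelet Configuration; see Reconfigure a Node's Kubelet in a Live Cluster.

The kubelet configuration file must be in JSON or YAML format; see the documentation for details.

Since Kubernetes 1.8, swap must be turned off on the system; otherwise, with the default configuration, kubelet will not start. Turn off swap as follows: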

# swapoff -a

Edit /etc/fstab and comment out the swap entry so that swap is not mounted automatically:

# UUID=2d1e946c-f45d-4516-86cf-946bde9bdcd8 swap swap defaults 0 0
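Commenting out the swap entry can also be done non-interactively with sed. This is a rough sketch rather than a hardened tool: the function name comment_out_swap is hypothetical, and it assumes the swap entry is an uncommented line with swap as a whitespace-delimited field, as in the example above.

```shell
# Comment out every active swap entry in an fstab-style file.
# A backup of the original file is kept next to it with a .bak suffix.
comment_out_swap() {
    fstab="${1:-/etc/fstab}"
    # Match lines that do not start with '#' and contain 'swap' as a field.
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/# /' "$fstab"
}
```

Running it a second time leaves the file unchanged, because lines that already start with # are skipped.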

Use free -m to confirm that swap is off. To adjust the swappiness parameter, add the following line to /etc/sysctl.d/k8s.conf:

vm.swappiness=0

Apply the change:

# sysctl -p /etc/sysctl.d/k8s.conf

3.2 Initializing the cluster with kubeadm init

Enable the kubelet service at boot:

systemctl enable kubelet.service

Configure the master node:

# mkdir working && cd working

Generate the configuration file:

# kubeadm config print init-defaults ClusterConfiguration > kubeadm.yaml

Edit the configuration file:

# vim kubeadm.yaml

# Change imageRepository (default: k8s.gcr.io)

imageRepository: registry.aliyuncs.com/google_containers

# Set kubernetesVersion to v1.15.0

kubernetesVersion: v1.15.0

# Set the master IP

advertiseAddress: 192.168.1.21

# Configure the pod and service subnets

networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

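A cluster initialized with kubeadm's default configuration puts the taint node-role.kubernetes.io/master:NoSchedule on the master node, which keeps workloads from being scheduled there. This test environment has only two nodes, so the taint is changed to node-role.kubernetes.io/master:PreferNoSchedule.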
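The excerpt above shows only the changed fields and not where the taint is set. The sketch below writes one possible complete kubeadm.yaml, assuming the kubeadm.k8s.io/v1beta2 config API that ships with kubeadm 1.15, where the taint lives under nodeRegistration.taints of the InitConfiguration. The function name write_kubeadm_config is made up; the values are the ones used in this article.

```shell
# Write a minimal kubeadm config (assumed API version: kubeadm.k8s.io/v1beta2).
# InitConfiguration carries the advertise address and the master taint;
# ClusterConfiguration carries the version, image repository and subnets.
write_kubeadm_config() {
    target="${1:-kubeadm.yaml}"
    cat > "$target" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.21
  bindPort: 6443
nodeRegistration:
  taints:
  - effect: PreferNoSchedule
    key: node-role.kubernetes.io/master
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
EOF
}
```

The podSubnet value 10.244.0.0/16 matters here because it is the network that the default kube-flannel.yml manifest expects.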

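Before initializing the cluster, you can run kubeadm config images pull on each node to pull the Docker images that Kubernetes needs in advance.

Next, initialize the cluster with kubeadm. node1 is chosen as the Master Node; run the following command on node1:

# kubeadm init --config kubeadm.yaml --ignore-preflight-errors=Swap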

……….

Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster. Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.21:6443 --token 4qcl2f.gtl3h8e5kjltuo0r --discovery-token-ca-cert-hash sha256:7ed5404175cc0bf18dbfe53f19d4a35b1e3d40c19b10924275868ebf2a3bbe6e

Save this command; it is needed later to add nodes to the cluster:

kubeadm join 192.168.169.21:6443 --token 4qcl2f.gtl3h8e5kjltuo0r --discovery-token-ca-cert-hash sha256:7ed5404175cc0bf18dbfe53f19d4a35b1e3d40c19b10924275868ebf2a3bbe6e

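The full initialization output is recorded above. From it you can see the key steps that would be needed to install a Kubernetes cluster by hand. The key items are:

[kubelet-start] generates the kubelet configuration file "/var/lib/kubelet/config.yaml"

[certs] generates the various certificates

[kubeconfig] generates the kubeconfig files

[control-plane] creates the static pods for apiserver, controller-manager, and scheduler from the yaml files in /etc/kubernetes/manifests

[bootstraptoken] generates the token; write it down, because it is needed later when adding nodes with kubeadm join

The following commands configure kubectl access to the cluster for a regular user: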

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Finally, the output gives the command for joining nodes to the cluster: kubeadm join 192.168.169.21:6443 --token 4qcl2f.gtl3h8e5kjltuo0r --discovery-token-ca-cert-hash sha256:7ed5404175cc0bf18dbfe53f19d4a35b1e3d40c19b10924275868ebf2a3bbe6e

Check the cluster status and confirm that every component is Healthy:

# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

If cluster initialization runs into problems, clean up with the following commands:

# kubeadm reset

# ifconfig cni0 down

# ip link delete cni0

# ifconfig flannel.1 down

# ip link delete flannel.1

# rm -rf /var/lib/cni/

3.3 Installing the Pod Network

Next, install the flannel network add-on:

# mkdir -p ~/k8s/

# cd ~/k8s

# curl -O https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

Note that the flannel image in this kube-flannel.yml is version 0.11.0: quay.io/coreos/flannel:v0.11.0-amd64

If a Node has more than one network interface, see flannel issues 39701: for now you need to use the --iface argument in kube-flannel.yml to name the interface on the cluster's internal network, otherwise DNS resolution may fail. Download kube-flannel.yml locally and add --iface=<iface-name> to the flanneld startup arguments:

containers:
- name: kube-flannel
  image: quay.io/coreos/flannel:v0.11.0-amd64
  command:
  - /opt/bin/flanneld
  args:
  - --ip-masq
  - --kube-subnet-mgr
  - --iface=eth1

……

Use kubectl get pod --all-namespaces -o wide to make sure all Pods are in the Running state.

# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5c98db65d4-dr8lf 1/1 Running 0 52m

coredns-5c98db65d4-lp8dg 1/1 Running 0 52m

etcd-node1 1/1 Running 0 51m

kube-apiserver-node1 1/1 Running 0 51m

kube-controller-manager-node1 1/1 Running 0 51m

kube-flannel-ds-amd64-mm296 1/1 Running 0 44s

kube-proxy-kchkf 1/1 Running 0 52m

kube-scheduler-node1 1/1 Running 0 51m

3.4 Testing cluster DNS

# kubectl run curl --image=radial/busyboxplus:curl -it

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

If you don't see a command prompt, try pressing enter.

[root@curl-5cc7b478b6-r997p:/]$

Note: the curl container may stay in the Pending state during this step, with the following error:

0/1 nodes are available: 1 node(s) had taints that the pod didn't tolerate.

Fix:

# kubectl taint nodes --all node-role.kubernetes.io/master-

Once inside the container, run nslookup kubernetes.default to confirm that resolution works:

$ nslookup kubernetes.default

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

Node configuration

Add the Docker yum repository:

# yum install -y yum-utils device-mapper-persistent-data lvm2

# yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

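# yum install -y --setopt=obsoletes=0 docker-ce

Install kubeadm and kubelet:

Configure the kubernetes.repo repository. The official repository is not reachable from mainland China, so the Aliyun yum mirror is used here: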

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Check whether https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64 is reachable; if it is not, you need ×××. The command below checks the Aliyun mirror configured above:

# curl https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

# yum -y makecache fast

# yum install -y kubelet kubeadm kubectl

…

Installed:

kubeadm.x86_64 0:1.15.0-0 kubectl.x86_64 0:1.15.0-0 kubelet.x86_64 0:1.15.0-0

Dependency Installed:

conntrack-tools.x86_64 0:1.4.4-4.el7 cri-tools.x86_64 0:1.12.0-0 kubernetes-cni.x86_64 0:0.7.5-0 libnetfilter_cthelper.x86_64 0:1.0.0-9.el7

libnetfilter_cttimeout.x86_64 0:1.0.0-6.el7 libnetfilter_queue.x86_64 0:1.0.2-2.el7_2

# swapoff -a

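Edit /etc/fstab and comment out the swap entry so that swap is not mounted automatically:

# UUID=2d1e946c-f45d-4516-86cf-946bde9bdcd8 swap swap defaults 0 0

Use free -m to confirm that swap is off. To adjust the swappiness parameter, edit /etc/sysctl.d/k8s.conf so that it contains the following lines: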

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

Apply the changes:

# sysctl -p /etc/sysctl.d/k8s.conf

Now add the host node1 to the Kubernetes cluster. Run on node1:

# kubeadm join 192.168.1.21:6443 --token 4qcl2f.gtl3h8e5kjltuo0r \

--discovery-token-ca-cert-hash sha256:7ed5404175cc0bf18dbfe53f19d4a35b1e3d40c19b10924275868ebf2a3bbe6e \

--ignore-preflight-errors=Swap

[preflight] Running pre-flight checks

[WARNING Swap]: running with swap on is not supported. Please disable swap

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

node1 joined the cluster without problems. Now run the following command on the master node to list the nodes in the cluster:

# kubectl get node

NAME STATUS ROLES AGE VERSION

node1 Ready master 57m v1.15.0

node2 Ready <none> 11s v1.15.0

Removing a Node from the cluster

To remove node2 from the cluster, run the following commands.

On the master node:

# kubectl drain node2 --delete-local-data --force --ignore-daemonsets

# kubectl delete node node2

On node2:

# kubeadm reset

# ifconfig cni0 down

# ip link delete cni0

# ifconfig flannel.1 down

# ip link delete flannel.1

# rm -rf /var/lib/cni/

Error when joining:

# kubeadm join ……

error execution phase preflight: couldn't validate the identity of the API Server: abort connecting to API servers after timeout of 5m0s

Cause: the token on the master node has expired.

Fix: generate a new token.

Generate a new token on the master:

# kubeadm token create

424mp7.nkxx07p940mkl2nd

# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

d88fb55cb1bd659023b11e61052b39bbfe99842b0636574a16c76df186fd5e0d

Then the Node can join again:

kubeadm join 192.168.169.21:6443 --token 424mp7.nkxx07p940mkl2nd \

--discovery-token-ca-cert-hash sha256:d88fb55cb1bd659023b11e61052b39bbfe99842b0636574a16c76df186fd5e0d
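Pasting the new token and hash into the join command by hand is easy to get wrong. As a small illustration, the helper below assembles the command from its three parts; the function name join_command is hypothetical and the helper only prints the command, it does not run it. kubeadm can also print a ready-made command itself with kubeadm token create --print-join-command.

```shell
# Print a kubeadm join command from an API endpoint, a bootstrap token,
# and the hex SHA-256 hash of the cluster CA public key.
join_command() {
    endpoint="$1"
    token="$2"
    ca_hash="$3"
    printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' \
        "$endpoint" "$token" "$ca_hash"
}
```

For example, join_command 192.168.169.21:6443 424mp7.nkxx07p940mkl2nd followed by the hash computed with openssl above reproduces the join command shown in this section.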

IV. Enabling ipvs in kube-proxy

Edit config.conf in the kube-system/kube-proxy ConfigMap and set mode: "ipvs":

# kubectl edit cm kube-proxy -n kube-system

minSyncPeriod: 0s

scheduler: ""

syncPeriod: 30s

kind: KubeProxyConfiguration

metricsBindAddress: 127.0.0.1:10249

mode: "ipvs" # add this

nodePortAddresses: null

mode was originally empty, which defaults to iptables mode; change it to ipvs.

scheduler is empty by default, which selects round robin as the load-balancing algorithm.

Save and exit the editor.

Delete all kube-proxy pods:

# kubectl delete pod xxx -n kube-system

Then restart the kube-proxy pods on every node:

# kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

# kubectl get pod -n kube-system | grep kube-proxy

kube-proxy-7fsrg 1/1 Running 0 3s

kube-proxy-k8vhm 1/1 Running 0 9s

# kubectl logs kube-proxy-7fsrg -n kube-system

I0703 04:42:33.308289 1 server_others.go:170] Using ipvs Proxier.

W0703 04:42:33.309074 1 proxier.go:401] IPVS scheduler not specified, use rr by default

I0703 04:42:33.309831 1 server.go:534] Version: v1.15.0

I0703 04:42:33.320088 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I0703 04:42:33.320365 1 config.go:96] Starting endpoints config controller

I0703 04:42:33.320393 1 controller_utils.go:1029] Waiting for caches to sync for endpoints config controller

I0703 04:42:33.320455 1 config.go:187] Starting service config controller

I0703 04:42:33.320470 1 controller_utils.go:1029] Waiting for caches to sync for service config controller

I0703 04:42:33.420899 1 controller_utils.go:1036] Caches are synced for endpoints config controller

I0703 04:42:33.420969 1 controller_utils.go:1036] Caches are synced for service config controller

The log prints Using ipvs Proxier, which shows that ipvs mode is enabled.
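With several nodes, checking each kube-proxy log by eye gets tedious. The sketch below classifies one log stream by looking for the message quoted above; the function name proxy_mode_from_log is made up, and it only distinguishes "ipvs" from everything else.

```shell
# Read a kube-proxy log on stdin and report whether the ipvs proxier was
# selected, based on the "Using ipvs Proxier" message shown in this article.
proxy_mode_from_log() {
    if grep -q 'Using ipvs Proxier'; then
        echo ipvs
    else
        echo not-ipvs
    fi
}
```

It would be used as kubectl logs kube-proxy-7fsrg -n kube-system | proxy_mode_from_log, once per kube-proxy pod.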

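V. Deploying common Kubernetes components

More and more companies and teams are adopting Helm, the package manager for Kubernetes, so Helm is used here to install the common Kubernetes components.

5.1 Installing Helm

Helm consists of the helm command-line client and the server-side component tiller, and it is simple to install. Download the helm command-line tool to /usr/local/bin on the master node node1; version 2.14.1 is used here: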

# curl -O https://get.helm.sh/helm-v2.14.1-linux-amd64.tar.gz

# tar -zxvf helm-v2.14.1-linux-amd64.tar.gz

# cd linux-amd64/

# cp helm /usr/local/bin/

To install the server-side tiller, kubectl and a kubeconfig file must also be set up on this machine so that kubectl can reach the apiserver and work normally. kubectl is already configured on node1.

Because the Kubernetes API server has RBAC enabled, tiller needs its own service account, named tiller, with a suitable role bound to it. See Role-based Access Control in the Helm documentation for details. For simplicity, the built-in ClusterRole cluster-admin is bound to it here. Create the file helm-rbac.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

# kubectl create -f helm-rbac.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller created

Next, deploy tiller with helm:

# helm init --service-account tiller --skip-refresh

Creating /root/.helm

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

By default, tiller is deployed in the kube-system namespace of the cluster:

# kubectl get pod -n kube-system -l app=helm

NAME READY STATUS RESTARTS AGE

tiller-deploy-c4fd4cd68-dwkhv 1/1 Running 0 83s

Note: if the tiller pod stays in ErrImagePull or ImagePullBackOff, switch to a Helm source that is reachable from mainland China.

NAME READY STATUS RESTARTS AGE

tiller-deploy-7bf78cdbf7-fkx2z 0/1 ImagePullBackOff 0 79s

Fix 1:

1. Remove the default repository

# helm repo remove stable

2. Add a new mirror repository hosted in China

# helm repo add stable https://burdenbear.github.io/kube-charts-mirror/

or

# helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

3. List the Helm repositories

# helm repo list

4. Run a test search

# helm search mysql

Fix 2:

1. Pull the image manually

# docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.14.1

2. Find the pod whose image tiller needs

# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-bccdc95cf-tb6pf 1/1 Running 3 5h21m

coredns-bccdc95cf-xpgm8 1/1 Running 3 5h21m

etcd-master 1/1 Running 3 5h20m

kube-apiserver-master 1/1 Running 3 5h21m

kube-controller-manager-master 1/1 Running 3 5h21m

kube-flannel-ds-amd64-b4ksb 1/1 Running 3 5h18m

kube-flannel-ds-amd64-vmv29 1/1 Running 0 127m

kube-proxy-67zn6 1/1 Running 2 37m

kube-proxy-992ns 1/1 Running 0 37m

kube-scheduler-master 1/1 Running 3 5h21m

tiller-deploy-7bf78cdbf7-fkx2z 0/1 ImagePullBackOff 0 33m

3. Use describe to see the image name

# kubectl describe pods tiller-deploy-7bf78cdbf7-fkx2z -n kube-system

……….

Normal Scheduled 32m default-scheduler Successfully assigned kube-system/tiller-deploy-7bf78cdbf7-fkx2z to node1

Normal Pulling 30m (x4 over 32m) kubelet, node1 Pulling image "gcr.io/kubernetes-helm/tiller:v2.14.1"

Warning Failed 30m (x4 over 31m) kubelet, node1 Failed to pull image "gcr.io/kubernetes-helm/tiller:v2.14.1": rpc error: code = Unknown desc = Error response from daemon: Get https://gcr.io/v2/: Service Unavailable

Warning Failed 30m (x4 over 31m) kubelet, node1 Error: ErrImagePull

Warning Failed 30m (x6 over 31m) kubelet, node1 Error: ImagePullBackOff

Normal BackOff 111s (x129 over 31m) kubelet, node1 Back-off pulling image "gcr.io/kubernetes-helm/tiller:v2.14.1"

4. Retag the image with docker tag

# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.14.1 gcr.io/kubernetes-helm/tiller:v2.14.1

5. Remove the redundant image tag

# docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.14.1

6. Delete the failed pod so that the deployment recreates it

# kubectl delete pod tiller-deploy-7bf78cdbf7-fkx2z -n kube-system

Shortly afterwards, kubectl get pods -n kube-system shows that the status is back to normal.

# helm version

Client: &version.Version{SemVer:"v2.14.1", GitCommit:"5270352a09c7e8b6e8c9593002a73535276507c0", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.14.1", GitCommit:"5270352a09c7e8b6e8c9593002a73535276507c0", GitTreeState:"clean"}

Note that, for certain reasons, the network must be able to reach gcr.io and kubernetes-charts.storage.googleapis.com. If it cannot, run helm init --service-account tiller --tiller-image <your-docker-registry>/tiller:v2.14.1 --skip-refresh to use a tiller image from a private registry.

Finally, on node1, change the Helm chart repository to the mirror provided by Azure:

# helm repo add stable http://mirror.azure.cn/kubernetes/charts

"stable" has been added to your repositories

# helm repo list

NAME URL

stable http://mirror.azure.cn/kubernetes/charts

local http://127.0.0.1:8879/charts

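5.2 Deploying Nginx Ingress with Helm

To expose services in the cluster to the outside, an Ingress is needed. Next, Helm is used to deploy Nginx Ingress onto Kubernetes. The Nginx Ingress Controller is deployed on the Kubernetes edge node; for high availability of edge nodes, see the earlier write-up on high availability of Kubernetes Ingress edge nodes in a bare-metal environment. The Ingress Controller uses hostNetwork.

The master (192.168.1.21) serves as the edge node; label it: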

# kubectl label node master node-role.kubernetes.io/edge=

node/master labeled

# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready edge,master 138m v1.15.0

node1 Ready <none> 82m v1.15.0

To remove a label from a node, use the following command:

# kubectl label node node1 node-role.kubernetes.io/edge-

Create ingress-nginx.yaml

The values file ingress-nginx.yaml for the stable/nginx-ingress chart is as follows:

controller:
  replicaCount: 1
  hostNetwork: true
  nodeSelector:
    node-role.kubernetes.io/edge: ''
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - nginx-ingress
          - key: component
            operator: In
            values:
            - controller
        topologyKey: kubernetes.io/hostname
  tolerations:
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: NoSchedule
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: PreferNoSchedule
defaultBackend:
  nodeSelector:
    node-role.kubernetes.io/edge: ''
  tolerations:
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: NoSchedule
    - key: node-role.kubernetes.io/master
      operator: Exists
      effect: PreferNoSchedule

The nginx ingress controller runs with replicaCount set to 1 and is scheduled onto the master, which is the edge node. No externalIPs are set on the nginx ingress controller service; instead, hostNetwork: true makes the nginx ingress controller use the host's network.

# helm repo update

# helm install stable/nginx-ingress \

-n nginx-ingress \

--namespace ingress-nginx \

-f ingress-nginx.yaml

# kubectl get pod -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-ingress-controller-cc9b6d55b-pr8vr 1/1 Running 0 10m 192.168.1.21 node1 <none> <none>

nginx-ingress-default-backend-cc888fd56-bf4h2 1/1 Running 0 10m 10.244.0.14 node1 <none> <none>

If the nginx-ingress containers are stuck in ContainerCreating/ImagePullBackOff, pull the images manually:

# docker pull registry.aliyuncs.com/google_containers/nginx-ingress-controller:0.25.0

# docker tag registry.aliyuncs.com/google_containers/nginx-ingress-controller:0.25.0 quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.25.0

# docker pull registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5

# docker tag registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5 k8s.gcr.io/defaultbackend-amd64:1.5

# docker rmi registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64:1.5

# docker rmi registry.aliyuncs.com/google_containers/nginx-ingress-controller:0.25.0

If visiting http://192.168.1.21 returns default backend, the deployment is complete.

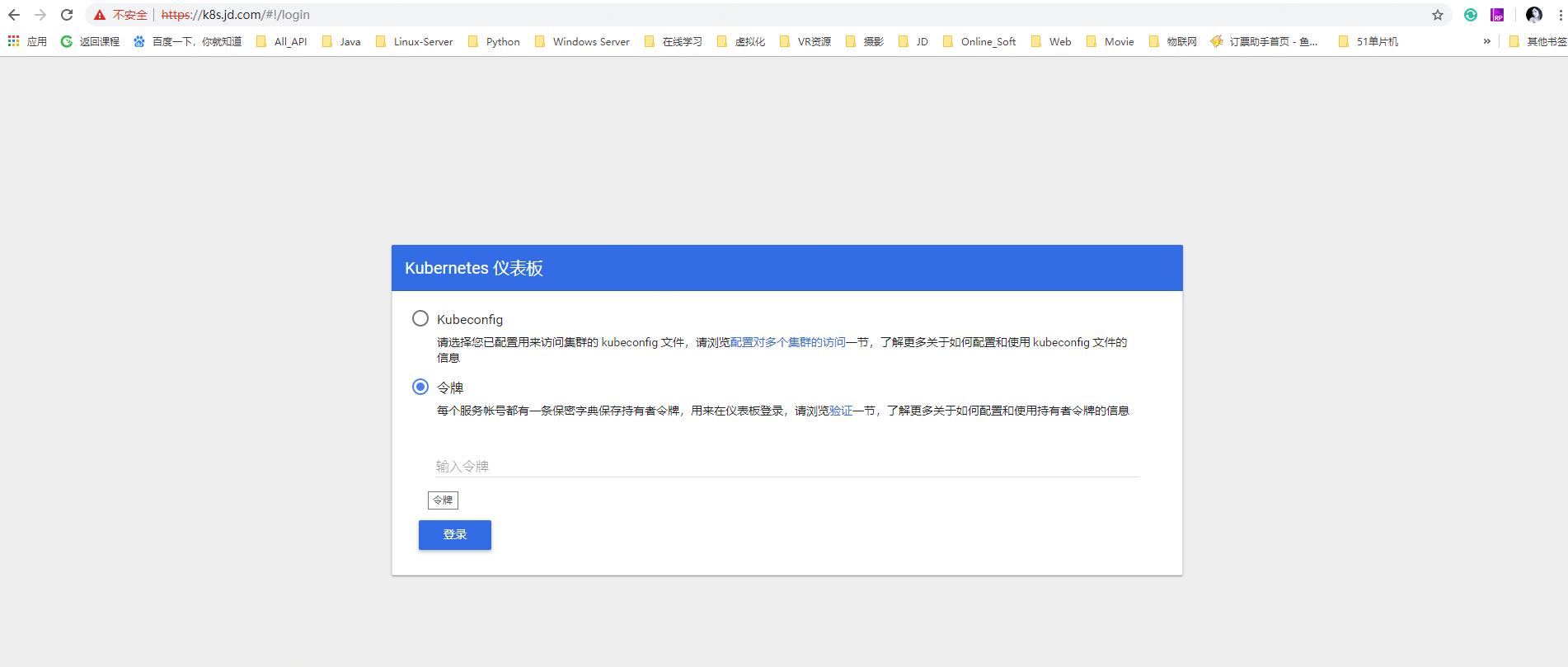

5.3 Deploying the dashboard with Helm

Create kubernetes-dashboard.yaml:

image:
  repository: registry.aliyuncs.com/google_containers/kubernetes-dashboard-amd64
  tag: v1.10.1
ingress:
  enabled: true
  hosts:
    - k8s.frognew.com # the domain you will use to reach the dashboard
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
  tls:
    - secretName: frognew-com-tls-secret
      hosts:
      - k8s.frognew.com
nodeSelector:
  node-role.kubernetes.io/edge: ''
tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: PreferNoSchedule
rbac:
  clusterAdminRole: true

Run the install:

helm install stable/kubernetes-dashboard \

-n kubernetes-dashboard \

--namespace kube-system \

-f kubernetes-dashboard.yaml

5.4 Generating a user token

a. Create admin-sa.yaml

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

b. Create the admin service account

# kubectl create -f admin-sa.yaml

# kubectl get secret -n kube-system

NAME TYPE DATA AGE

admin-token-2tbzp kubernetes.io/service-account-token 3 9m5s

attachdetach-controller-token-bmz2c kubernetes.io/service-account-token 3 27h

bootstrap-signer-token-6jctj kubernetes.io/service-account-token 3 27h

certificate-controller-token-l4l9c kubernetes.io/service-account-token 3 27h

c. Get the admin token

# kubectl get secret admin-token-2tbzp -o jsonpath={.data.token} -n kube-system|base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi0ydGJ6cCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImRlOGU5N2EzLWY1YmItNGRlNC1hN2Q1LTY5YzEwYTIyZTE3OSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbiJ9.NgZvr3XTtrW1XPCJHYRFFdPD1IfsoRRTYJHwAST2gfhY1hva_yIoh1ATSpDO551rNio0ulb7YllSiZMaQViBeFTiAhuuIlKHKyELOoB_eY7jFTCVstdr4vQzH5e2GRQgljEeqbF9Lewr0n_eqIS6pgVQSRT8at-Yk6EXLM0XhYf4qbAvMuztuRTSp8JKmal65gwTxTJU7LpjJM7UbZ8UelVOjNZK8BFCezGv0ccqXywLu5-aAj2NvSHVThg6jybj37R0hszqRw2fkGZtIcEOEtgmij2vHa3oNb3f38gd1eE6WqZpJpVOPLlX6QNSxiV0jaaj9AqodFCdAg48E75Bvg

Note the name of the admin-token secret:

Here admin-token-2tbzp is the admin-token name shown by kubectl get secret -n kube-system.
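Because the secret name ends in a random suffix, it differs on every cluster. The sketch below picks the name out of the kubectl get secret listing instead of copying it by hand; the function name admin_token_secret is hypothetical, and it assumes the service account is called admin as in admin-sa.yaml.

```shell
# Read the output of "kubectl get secret -n kube-system" on stdin and print
# the name of the first secret that belongs to the admin service account.
admin_token_secret() {
    awk '$1 ~ /^admin-token-/ { print $1; exit }'
}
```

The result can be stored with secret=$(kubectl get secret -n kube-system | admin_token_secret) and then passed to the kubectl get secret ... | base64 -d command from step c in place of the literal name.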

d. Log in from the browser with the generated token