
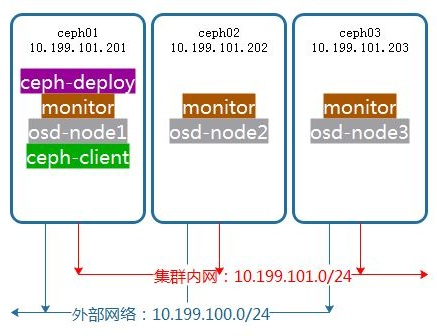

Cluster environment

Configure the base environment

Add ceph.repo

    wget -O /etc/yum.repos.d/ceph.repo https://raw.githubusercontent.com/aishangwei/ceph-demo/master/ceph-deploy/ceph.repo
    yum makecache

Configure NTP

    yum -y install ntpdate ntp
    ntpdate cn.ntp.org.cn
    systemctl restart ntpd ntpdate; systemctl enable ntpd ntpdate

Create the deployment user and grant it passwordless sudo

    useradd ceph-admin
    echo "ceph-admin" | passwd --stdin ceph-admin
    echo "ceph-admin ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/ceph-admin
    sudo chmod 0440 /etc/sudoers.d/ceph-admin

Configure host name resolution

    cat >>/etc/hosts<<EOF
    10.1.10.201 ceph01
    10.1.10.202 ceph02
    10.1.10.203 ceph03
    EOF
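Running the heredoc above twice adds duplicate entries. The sketch below appends each entry only if the host name is not already listed. The function name add_host_entry and the HOSTS_FILE override are hypothetical, added here so the behavior can be tried on a scratch file first.

```bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not already present.
# HOSTS_FILE defaults to /etc/hosts; point it at a scratch file to try it safely.
add_host_entry() {
  local ip="$1" name="$2" file="${HOSTS_FILE:-/etc/hosts}"
  # Succeed if NAME appears as a host-name field (field 2 onward) on any line.
  if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file" 2>/dev/null; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (writes to /etc/hosts, so run as root):
# add_host_entry 10.1.10.201 ceph01
# add_host_entry 10.1.10.202 ceph02
# add_host_entry 10.1.10.203 ceph03
```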

Configure sudo so that it does not require a TTY

    sed -i 's/^Defaults[[:space:]]\{1,\}requiretty/#&/' /etc/sudoers
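The directive in /etc/sudoers is spelled "Defaults" (with an "s") and is usually followed by several spaces or a tab, so a pattern that expects "Default requiretty" with a single space will not match it. The sketch below, with the hypothetical name comment_requiretty, applies a whitespace-tolerant pattern to standard input so the result can be previewed before editing the real file.

```bash
# Hypothetical helper: comment out an active "Defaults requiretty" line read from stdin.
# [[:space:]]\{1,\} accepts any run of spaces or tabs between the two words;
# "&" re-inserts the matched text after the leading "#".
comment_requiretty() {
  sed 's/^Defaults[[:space:]]\{1,\}requiretty/#&/'
}

# Preview on the live file before using sed -i:
# comment_requiretty < /etc/sudoers | grep requiretty
```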

Deploy the cluster with ceph-deploy

Configure passwordless SSH login

    su - ceph-admin
    ssh-keygen
    ssh-copy-id ceph-admin@ceph01
    ssh-copy-id ceph-admin@ceph02
    ssh-copy-id ceph-admin@ceph03
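With more nodes, the repeated ssh-copy-id lines can be driven by a loop. This is a minimal sketch. The function copy_keys and the SSH_COPY_ID override are hypothetical, and the override exists only so the loop can be dry-run with echo.

```bash
# Hypothetical helper: push the current user's public key to each host as USER.
# Set SSH_COPY_ID=echo to print the targets instead of contacting the hosts.
copy_keys() {
  local user="$1" host
  shift
  for host in "$@"; do
    ${SSH_COPY_ID:-ssh-copy-id} "$user@$host"
  done
}

# Example: copy_keys ceph-admin ceph01 ceph02 ceph03
```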

Install ceph-deploy

    sudo yum install -y ceph-deploy python-pip

Create the cluster definition for the nodes

    mkdir my-cluster; cd my-cluster
    ceph-deploy new ceph01 ceph02 ceph03

Edit the ceph.conf configuration file (echo ignores standard input, so the heredoc must be fed to cat)

    cat >>/home/ceph-admin/my-cluster/ceph.conf<<EOF
    public network = 10.1.0.0/16
    cluster network = 10.1.0.0/16
    EOF
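The public network and cluster network values are CIDR ranges, so the address part should be the network address for the given prefix length. To double-check the value before writing it, the hypothetical helper network_address below masks an IPv4 address with a prefix length. For the node 10.1.10.201, a /16 prefix gives 10.1.0.0/16 and a /24 prefix gives 10.1.10.0/24. It relies on bash here-strings and 64-bit shell arithmetic.

```bash
# Hypothetical helper: print the CIDR network address for an IPv4 address and prefix length.
network_address() {
  local ip="$1" prefix="$2" a b c d mask n
  IFS=. read -r a b c d <<< "$ip"                  # split the dotted quad
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))    # pack into one 32-bit integer
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( n & mask ))                                # clear the host bits
  printf '%d.%d.%d.%d/%d\n' \
    $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) $(( (n >> 8) & 255 )) $(( n & 255 )) "$prefix"
}

# Example: network_address 10.1.10.201 16
```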

Install the Ceph packages (this replaces ceph-deploy install node1 node2; run the following commands on every node)

    sudo wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo
    sudo rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7
    sudo yum install -y ceph ceph-radosgw

Deploy the initial monitor(s) and gather all keys

    ceph-deploy mon create-initial
    ls -l *.keyring
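Instead of reading the ls output by eye, the check can be scripted. In this sketch the function name missing_keyrings is hypothetical, and the default file list is an assumption about what mon create-initial gathers on this release. Pass an explicit list if your version differs.

```bash
# Hypothetical helper: print each expected keyring file that is missing from DIR.
# The default list below is an assumption; pass file names explicitly to override it.
missing_keyrings() {
  local dir="$1"
  shift
  local k
  [ "$#" -gt 0 ] || set -- ceph.client.admin.keyring ceph.mon.keyring \
    ceph.bootstrap-osd.keyring ceph.bootstrap-mgr.keyring \
    ceph.bootstrap-mds.keyring ceph.bootstrap-rgw.keyring
  for k in "$@"; do
    [ -f "$dir/$k" ] || echo "$k"
  done
}

# Example: missing_keyrings ~/my-cluster   # empty output means every file is present
```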

Copy the configuration file and the admin keyring to every node

ceph-deploy admin ceph01 ceph02 ceph03

Configure the OSDs

    su - ceph-admin
    cd /home/ceph-admin/my-cluster
    for dev in /dev/sdb /dev/sdc /dev/sdd
    do
      ceph-deploy disk zap ceph01 $dev
      ceph-deploy osd create ceph01 --data $dev
      ceph-deploy disk zap ceph02 $dev
      ceph-deploy osd create ceph02 --data $dev
      ceph-deploy disk zap ceph03 $dev
      ceph-deploy osd create ceph03 --data $dev
    done
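Because disk zap wipes the target devices, it can help to print the full command list and review it before running anything. The sketch below, with the hypothetical name plan_osds, generates the same zap/create pairs as the loop above for any node and device list. It iterates node-first rather than device-first, which does not change the set of commands.

```bash
# Hypothetical helper: print (not run) the zap/create commands for every node/device pair.
# NODES and DEVS are space-separated lists and are word-split on purpose.
plan_osds() {
  local nodes="$1" devs="$2" node dev
  for node in $nodes; do
    for dev in $devs; do
      echo "ceph-deploy disk zap $node $dev"
      echo "ceph-deploy osd create $node --data $dev"
    done
  done
}

# Review, then execute:
# plan_osds "ceph01 ceph02 ceph03" "/dev/sdb /dev/sdc /dev/sdd" | bash
```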

Deploy mgr (only required for Luminous and later)

ceph-deploy mgr create ceph01 ceph02 ceph03

Enable the dashboard module

    sudo chown -R ceph-admin /etc/ceph/
    ceph mgr module enable dashboard
    netstat -lntup | grep 7000
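Grepping for 7000 also matches ports such as 17000 or PIDs that contain those digits. The sketch below, with the hypothetical name port_listening, only succeeds when an address field ends in ":PORT". It reads the netstat output on standard input, so it can be tested against saved text.

```bash
# Hypothetical helper: succeed if any field in netstat-style input ends with ":PORT".
port_listening() {
  local port="$1"
  awk -v p=":$port" '
    { for (i = 1; i <= NF; i++)
        if (substr($i, length($i) - length(p) + 1) == p) found = 1 }
    END { exit !found }'
}

# Example: netstat -lntup | port_listening 7000 && echo "dashboard port is listening"
```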

http://10.1.10.201:7000

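Configure Ceph block storage

Check whether the client meets the block device requirements (modprobe rbd must succeed, so echo $? should print 0)

    uname -r
    modprobe rbd
    echo $?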
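If the kernel check is scripted, comparing uname -r against a minimum version is easier with version-aware sorting than with plain string comparison. This sketch assumes GNU sort with -V. The function name version_ge is hypothetical, and the 3.10 in the example is only a placeholder, not a documented requirement.

```bash
# Hypothetical helper: succeed if version $1 >= version $2 (uses GNU sort -V).
version_ge() {
  # The smaller version sorts first; $1 >= $2 exactly when $2 comes out on top.
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Example (3.10 is a placeholder minimum):
# version_ge "$(uname -r)" 3.10 && modprobe rbd && echo "rbd module loaded"
```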

Create a pool and a block device

    ceph osd lspools
    ceph osd pool create rbd 128

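Specifying pg_num is mandatory because it cannot be calculated automatically. Commonly used values:

Fewer than 5 OSDs: set pg_num to 128

5 to 10 OSDs: set pg_num to 512

10 to 50 OSDs: set pg_num to 4096

More than 50 OSDs: you need to understand the trade-offs and calculate pg_num yourself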
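A widely cited rule of thumb for calculating pg_num yourself is roughly 100 placement groups per OSD, divided by the pool's replica count, then rounded up to the next power of two. The sketch below implements that heuristic. The function name pg_num_for is hypothetical, and the replica count defaults to 3 as an assumption. For 9 OSDs (3 nodes with 3 data disks each) and size 3, it gives 512, which agrees with the 5 to 10 OSD row above.

```bash
# Rule-of-thumb sketch: ceil(OSDs * 100 / replica size), rounded up to a power of two.
pg_num_for() {
  local osds="$1" size="${2:-3}" target pg=1
  target=$(( (osds * 100 + size - 1) / size ))   # integer ceiling division
  while [ "$pg" -lt "$target" ]; do
    pg=$(( pg * 2 ))                             # next power of two
  done
  echo "$pg"
}

# Example: ceph osd pool create rbd "$(pg_num_for 9 3)"
```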

Create a block device from the client

rbd create rbd1 --size 1G --image-feature layering --name client.admin

Map the block device

rbd map --image rbd1 --name client.admin

Create a filesystem and mount it

    fdisk -l /dev/rbd0
    mkfs.xfs /dev/rbd0
    mkdir /mnt/ceph-disk1
    mount /dev/rbd0 /mnt/ceph-disk1
    df -h /mnt/ceph-disk1

Test by writing data

dd if=/dev/zero of=/mnt/ceph-disk1/file1 count=100 bs=1M
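Without a flush, dd can return before the data has left the page cache, so the write may not have reached the RBD image yet. This sketch assumes GNU coreutils (dd conv=fdatasync and stat -c). The function name write_and_check is hypothetical. It writes COUNT MiB, flushes, and prints the file size, which should be 104857600 bytes for the 100 x 1M write above.

```bash
# Hypothetical helper: write COUNT MiB of zeros to FILE, flush to storage, print size in bytes.
write_and_check() {
  local file="$1" count="$2"
  dd if=/dev/zero of="$file" bs=1M count="$count" conv=fdatasync 2>/dev/null
  stat -c %s "$file"
}

# Example: write_and_check /mnt/ceph-disk1/file1 100
```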

Stress testing with fio

Install the fio benchmarking tool

    yum install zlib-devel -y
    yum install ceph-devel -y
    git clone git://git.kernel.dk/fio.git
    cd fio/
    ./configure
    make; make install

Test disk performance. The write and randwrite jobs write directly to /dev/rbd0 and will overwrite the XFS filesystem created above, so unmount it first (umount /mnt/ceph-disk1) or run them against a separate test image.

    fio -direct=1 -iodepth=1 -rw=read -ioengine=libaio -bs=2k -size=100G -numjobs=128 -runtime=30 -group_reporting -filename=/dev/rbd0 -name=readiops
    fio -direct=1 -iodepth=1 -rw=write -ioengine=libaio -bs=2k -size=100G -numjobs=128 -runtime=30 -group_reporting -filename=/dev/rbd0 -name=writeiops
    fio -direct=1 -iodepth=1 -rw=randread -ioengine=libaio -bs=2k -size=100G -numjobs=128 -runtime=30 -group_reporting -filename=/dev/rbd0 -name=randreadiops
    fio -direct=1 -iodepth=1 -rw=randwrite -ioengine=libaio -bs=2k -size=100G -numjobs=128 -runtime=30 -group_reporting -filename=/dev/rbd0 -name=randwriteiops
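The four fio runs differ only in the -rw pattern and the job name. The sketch below generates them from one template so that block size, job count, or device can be changed in one place. The function name fio_commands is hypothetical, and the options are copied unchanged from the commands above.

```bash
# Hypothetical helper: print the four fio runs, varying only -rw and -name.
fio_commands() {
  local dev="$1" rw
  for rw in read write randread randwrite; do
    echo "fio -direct=1 -iodepth=1 -rw=$rw -ioengine=libaio -bs=2k -size=100G -numjobs=128 -runtime=30 -group_reporting -filename=$dev -name=${rw}iops"
  done
}

# Review, then execute: fio_commands /dev/rbd0 | bash
```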


星哥玩云 (Xingge Wanyun) WeChat Official Account