
I. Installing an HBase pseudo-distributed cluster

1. Extract the installation package

$ cd app/

$ tar -xvf hbase-1.2.0-cdh5.7.1.tar.gz

$ rm hbase-1.2.0-cdh5.7.1.tar.gz

2. Add environment variables

$ cd ~

$ vim .bashrc

export HBASE_HOME=/home/developer/app/hbase-1.2.0-cdh5.7.1

export PATH=$PATH:$HBASE_HOME/bin

$ source .bashrc
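After sourcing .bashrc, it can help to confirm that HBASE_HOME is set and that its bin directory is on PATH. The following is a minimal sketch of that check using only the JDK; the class `HBaseEnvCheck` and its method are hypothetical helpers written for this article, not part of HBase.

```java
import java.io.File;
import java.util.Map;

// Hypothetical helper (not part of HBase): verifies the two variables added to .bashrc.
class HBaseEnvCheck {

    // Returns true when HBASE_HOME is set and $HBASE_HOME/bin is one of the PATH entries.
    static boolean isConfigured(Map<String, String> env) {
        String home = env.get("HBASE_HOME");
        String path = env.get("PATH");
        if (home == null || home.isEmpty() || path == null) {
            return false;
        }
        String expected = stripTrailingSlash(home) + "/bin";
        for (String entry : path.split(File.pathSeparator)) {
            if (expected.equals(stripTrailingSlash(entry))) {
                return true;
            }
        }
        return false;
    }

    // Treats "/a/b/" and "/a/b" as the same directory.
    private static String stripTrailingSlash(String s) {
        return (s.length() > 1 && s.endsWith("/")) ? s.substring(0, s.length() - 1) : s;
    }

    public static void main(String[] args) {
        System.out.println("HBase environment configured: " + isConfigured(System.getenv()));
    }
}
```

Run it from a new shell so that it sees the variables exported by .bashrc.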

3. Edit the hbase-env.sh file

$ cd app/hbase-1.2.0-cdh5.7.1/conf/

$ vim hbase-env.sh

export JAVA_HOME=/home/developer/app/jdk1.7.0_79

export HBASE_CLASSPATH=/home/developer/app/hadoop-2.6.0-cdh5.7.1/etc/hadoop

4. Edit the hbase-site.xml file

$ vim hbase-site.xml

<configuration>
    <property>
        <name>hbase.rootdir</name>
        <value>hdfs://localhost:9000/hbase</value>
    </property>
    <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
    </property>
    <property>
        <name>hbase.tmp.dir</name>
        <value>/home/developer/app/hbase-1.2.0-cdh5.7.1/tmp</value>
    </property>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>hbase.zookeeper.quorum</name>
        <value>localhost</value>
    </property>
    <property>
        <name>hbase.zookeeper.property.clientPort</name>
        <value>2222</value>
    </property>
</configuration>
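The settings in hbase-site.xml can also be read programmatically, for example to confirm the ZooKeeper quorum and client port before writing client code. The following is a minimal sketch that uses only the JDK's XML parser; `HBaseSiteReader` is a hypothetical helper, not an HBase API, and the XML string in `main` is a shortened copy of the file above.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: reads Hadoop-style <property><name/><value/></property> entries.
class HBaseSiteReader {

    // Returns the properties in file order; throws IllegalArgumentException on malformed input.
    static Map<String, String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> props = new LinkedHashMap<String, String>();
            NodeList nodes = doc.getElementsByTagName("property");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element property = (Element) nodes.item(i);
                String name = property.getElementsByTagName("name").item(0).getTextContent().trim();
                String value = property.getElementsByTagName("value").item(0).getTextContent().trim();
                props.put(name, value);
            }
            return props;
        } catch (Exception e) {
            throw new IllegalArgumentException("not a valid hbase-site.xml document", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<configuration>"
                + "<property><name>hbase.zookeeper.quorum</name><value>localhost</value></property>"
                + "<property><name>hbase.zookeeper.property.clientPort</name><value>2222</value></property>"
                + "</configuration>";
        Map<String, String> props = parse(xml);
        System.out.println("quorum = " + props.get("hbase.zookeeper.quorum"));
        System.out.println("clientPort = " + props.get("hbase.zookeeper.property.clientPort"));
    }
}
```

The two values printed here are the same ones the test code later passes to `conf.set(...)`, so a mismatch between the file and the client shows up early.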

5. Start HBase

$ start-hbase.sh

6. Stop HBase

$ stop-hbase.sh

7. Web UI

http://localhost:60010
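After start-hbase.sh has run, one quick way to see whether the services are listening is to try a TCP connection to the ports used in this article: 2222 for ZooKeeper (from hbase-site.xml) and 60010 for the web UI. The following is a minimal sketch using only the JDK; `PortCheck` is a hypothetical helper, and an open port only shows that something is listening, not that HBase is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: reports whether a TCP port accepts connections.
class PortCheck {

    // Returns true if a connection to host:port succeeds within timeoutMillis.
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host unreachable.
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("ZooKeeper port 2222 open: " + isOpen("localhost", 2222, 1000));
        System.out.println("Web UI port 60010 open: " + isOpen("localhost", 60010, 1000));
    }
}
```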

8. Resolving the SLF4J jar conflict between HBase and Hadoop

Problem description:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/developer/app/hbase-1.2.0-cdh5.7.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/developer/app/hadoop-2.6.0-cdh5.7.1/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Solution:

$ cd app/hbase-1.2.0-cdh5.7.1/lib/

$ rm slf4j-log4j12-1.7.5.jar
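The warning appears because the same binding jar sits in both the HBase and the Hadoop lib directories. To see which copies are present before or after deleting one, the directories can be scanned for `slf4j-log4j12-*.jar`. The following is a minimal sketch using only the JDK; `Slf4jBindingFinder` is a hypothetical helper, and the two directories in `main` are the ones named in the warning above.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: lists SLF4J log4j binding jars found in the given lib directories.
class Slf4jBindingFinder {

    // Returns every slf4j-log4j12-*.jar located directly inside one of libDirs.
    static List<File> findBindings(List<File> libDirs) {
        List<File> found = new ArrayList<File>();
        for (File dir : libDirs) {
            File[] files = dir.listFiles();
            if (files == null) {
                continue; // not a directory, or not readable
            }
            for (File file : files) {
                String name = file.getName();
                if (name.startsWith("slf4j-log4j12-") && name.endsWith(".jar")) {
                    found.add(file);
                }
            }
        }
        return found;
    }

    public static void main(String[] args) {
        List<File> dirs = Arrays.asList(
                new File("/home/developer/app/hbase-1.2.0-cdh5.7.1/lib"),
                new File("/home/developer/app/hadoop-2.6.0-cdh5.7.1/share/hadoop/common/lib"));
        for (File jar : findBindings(dirs)) {
            System.out.println(jar.getAbsolutePath());
        }
    }
}
```

If more than one path is printed, the class path still carries multiple bindings; after the `rm` step only the Hadoop copy should remain.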

II. Testing with code

1. Maven dependencies

<repositories>
    <repository>
        <id>cloudera</id>
        <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
    </repository>
</repositories>

<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>3.8.1</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.6.0-cdh5.7.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.6.0-cdh5.7.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-client</artifactId>
        <version>1.2.0-cdh5.7.1</version>
    </dependency>
</dependencies>

2. Test code

package com.hbase.demo;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HBaseAdmin;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

public class HBaseTest {

    private static final String TABLE_NAME = "students";
    private static final String FAMILY_COL_SCORE = "score";
    private static final String FAMILY_COL_INFO = "info";

    private Configuration conf;

    public static void main(String[] args) {
        HBaseTest test = new HBaseTest();
        test.init();
        test.createTable();
        test.insertData();
        test.scanTable();
        test.queryByRowKey();
        // test.deleteRow();
        // test.deleteFamily();
        // test.deleteTable();
    }

    /**
     * Initialize the HBase configuration. Alternatively, add the hbase-site.xml
     * configuration file to the project; the settings then do not need to be
     * written in code.
     */
    public void init() {
        conf = HBaseConfiguration.create();
        conf.set("hbase.zookeeper.quorum", "localhost");
        conf.set("hbase.zookeeper.property.clientPort", "2222");
    }

    /**
     * Create the table.
     */
    public void createTable() {
        Connection conn = null;
        try {
            conn = ConnectionFactory.createConnection(conf);
            HBaseAdmin admin = (HBaseAdmin) conn.getAdmin();
            HTableDescriptor desc = new HTableDescriptor(TableName.valueOf(TABLE_NAME));
            desc.addFamily(new HColumnDescriptor(FAMILY_COL_SCORE));
            desc.addFamily(new HColumnDescriptor(FAMILY_COL_INFO));
            if (admin.tableExists(TABLE_NAME)) {
                System.out.println("table " + TABLE_NAME + " already exists!");
                // Stop here so the remaining steps do not run against an existing table.
                System.exit(0);
            } else {
                admin.createTable(desc);
                System.out.println("table " + TABLE_NAME + " created successfully.");
            }
        } catch (IOException e) {
            e.printStackTrace();
        } finally {
            if (conn != null) {
                try {
                    conn.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }

    /**
     * Insert data into the table.
     */
    public void insertData() {
        Connection conn = null;
        HTable table = null;
        try {
            conn = ConnectionFactory.createConnection(conf);
            table = (HTable) conn.getTable(TableName.valueOf(TABLE_NAME));
            List<Put> puts = new ArrayList<Put>();
            // Add the data. One Put represents one row; the constructor takes the RowKey.
            Put put1 = new Put(Bytes.toBytes("001:carl"));
            put1.addColumn(Bytes.toBytes(FAMILY_COL_SCORE), Bytes.toBytes("english"), Bytes.toBytes("97"));
            put1.addColumn(Bytes.toBytes(FAMILY_COL_SCORE), Bytes.toBytes("math"), Bytes.toBytes("99"));
            put1.addColumn(Bytes.toBytes(FAMILY_COL_INFO), Bytes.toBytes("weight"), Bytes.toBytes("130"));
            puts.add(put1);
            Put put2 = new Put(Bytes.toBytes("002:sophie"));
            put2.addColumn(Bytes.toBytes(FAMILY_COL_SCORE), Bytes.toBytes("english"), Bytes.toBytes("100"));
            put2.addColumn(Bytes.toBytes(FAMILY_COL_SCORE), Bytes.toBytes("math"), Bytes.toBytes("92"));
            put2.addColumn(Bytes.toBytes(FAMILY_COL_INFO), Bytes.toBytes("weight"), Bytes.toBytes("102"));
            puts.add(put2);
            // Write the rows to the table.
            table.put(puts);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (table != null) {
                try {
                    table.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            if (conn != null) {
                try {
                    conn.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }

    /**
     * Query all data in the table (full table scan).
     */
    public void scanTable() {
        Connection conn = null;
        HTable table = null;
        ResultScanner scann = null;
        try {
            conn = ConnectionFactory.createConnection(conf);
            table = (HTable) conn.getTable(TableName.valueOf(TABLE_NAME));
            scann = table.getScanner(new Scan());
            for (Result rs : scann) {
                System.out.println("RowKey of this row: " + Bytes.toString(rs.getRow()));
                for (Cell cell : rs.rawCells()) {
                    System.out.println("Column family: " + Bytes.toString(CellUtil.cloneFamily(cell)) + "\t" +
                            "Qualifier: " + Bytes.toString(CellUtil.cloneQualifier(cell)) + "\t" +
                            "Value: " + Bytes.toString(CellUtil.cloneValue(cell)) + "\t" +
                            "Timestamp: " + cell.getTimestamp());
                }
                System.out.println("-----------------------------------------------");
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (scann != null) {
                scann.close();
            }
            if (table != null) {
                try {
                    table.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            if (conn != null) {
                try {
                    conn.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }

    /**
     * Query a single row of the table by RowKey.
     */
    public void queryByRowKey() {
        Connection conn = null;
        HTable table = null;
        try {
            conn = ConnectionFactory.createConnection(conf);
            table = (HTable) conn.getTable(TableName.valueOf(TABLE_NAME));
            Get get = new Get(Bytes.toBytes("001:carl"));
            // get.setMaxVersions(2);
            // get.addColumn(Bytes.toBytes(FAMILY_COL_SCORE), Bytes.toBytes("english"));
            Result rs = table.get(get);
            System.out.println("Row with RowKey 001:carl in table " + TABLE_NAME + ":");
            for (Cell cell : rs.rawCells()) {
                System.out.println("Column family: " + Bytes.toString(CellUtil.cloneFamily(cell)) + "\t" +
                        "Qualifier: " + Bytes.toString(CellUtil.cloneQualifier(cell)) + "\t" +
                        "Value: " + Bytes.toString(CellUtil.cloneValue(cell)) + "\t" +
                        "Timestamp: " + cell.getTimestamp());
            }
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (table != null) {
                try {
                    table.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            if (conn != null) {
                try {
                    conn.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }

    /**
     * Delete the row with the given RowKey from the table.
     */
    public void deleteRow() {
        Connection conn = null;
        HTable table = null;
        try {
            conn = ConnectionFactory.createConnection(conf);
            table = (HTable) conn.getTable(TableName.valueOf(TABLE_NAME));
            table.delete(new Delete(Bytes.toBytes("001:carl")));
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            try {
                if (table != null) {
                    table.close();
                }
                if (conn != null) {
                    conn.close();
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

    /**
     * Delete the column family with the given name.
     */
    public void deleteFamily() {
        Connection conn = null;
        HBaseAdmin admin = null;
        try {
            conn = ConnectionFactory.createConnection(conf);
            admin = (HBaseAdmin) conn.getAdmin();
            admin.deleteColumn(Bytes.toBytes(TABLE_NAME), FAMILY_COL_INFO);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            try {
                if (null != conn) {
                    conn.close();
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

    /**
     * Delete the table.
     */
    public void deleteTable() {
        Connection conn = null;
        HBaseAdmin admin = null;
        try {
            conn = ConnectionFactory.createConnection(conf);
            admin = (HBaseAdmin) conn.getAdmin();
            // A table must be disabled before it can be deleted.
            admin.disableTable(TABLE_NAME);
            admin.deleteTable(TABLE_NAME);
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            try {
                if (conn != null) {
                    conn.close();
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
}
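To make the shape of the test data easier to picture, the rows written by insertData() can be modelled as a sorted map from RowKey to "family:qualifier" to value. The following is a minimal in-memory sketch using only the JDK; `StudentsTableModel` is a hypothetical class for illustration, it does not use the HBase client API, and it ignores timestamps and versions. It relies on the fact that HBase stores rows sorted by RowKey bytes, which for the ASCII keys used here matches Java's String ordering.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model for illustration only; not the HBase client API.
class StudentsTableModel {

    // RowKey -> ("family:qualifier" -> value), both levels kept sorted.
    private final TreeMap<String, TreeMap<String, String>> rows =
            new TreeMap<String, TreeMap<String, String>>();

    // Plays the role of Put.addColumn(family, qualifier, value) for one RowKey.
    void put(String rowKey, String family, String qualifier, String value) {
        TreeMap<String, String> row = rows.get(rowKey);
        if (row == null) {
            row = new TreeMap<String, String>();
            rows.put(rowKey, row);
        }
        row.put(family + ":" + qualifier, value);
    }

    // Plays the role of a Get by RowKey; returns an empty map for an unknown row.
    Map<String, String> get(String rowKey) {
        TreeMap<String, String> row = rows.get(rowKey);
        if (row == null) {
            return Collections.<String, String>emptyMap();
        }
        return Collections.unmodifiableMap(row);
    }

    // Plays the role of a full-table Scan: RowKeys come back in sorted order.
    List<String> scanRowKeys() {
        return new ArrayList<String>(rows.keySet());
    }

    public static void main(String[] args) {
        StudentsTableModel table = new StudentsTableModel();
        // Inserted out of order on purpose; the scan still returns 001:carl first.
        table.put("002:sophie", "score", "english", "100");
        table.put("002:sophie", "score", "math", "92");
        table.put("002:sophie", "info", "weight", "102");
        table.put("001:carl", "score", "english", "97");
        table.put("001:carl", "score", "math", "99");
        table.put("001:carl", "info", "weight", "130");
        for (String rowKey : table.scanRowKeys()) {
            System.out.println(rowKey + " -> " + table.get(rowKey));
        }
    }
}
```

The prefix in keys such as `001:carl` therefore decides the order in which scanTable() prints the rows.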

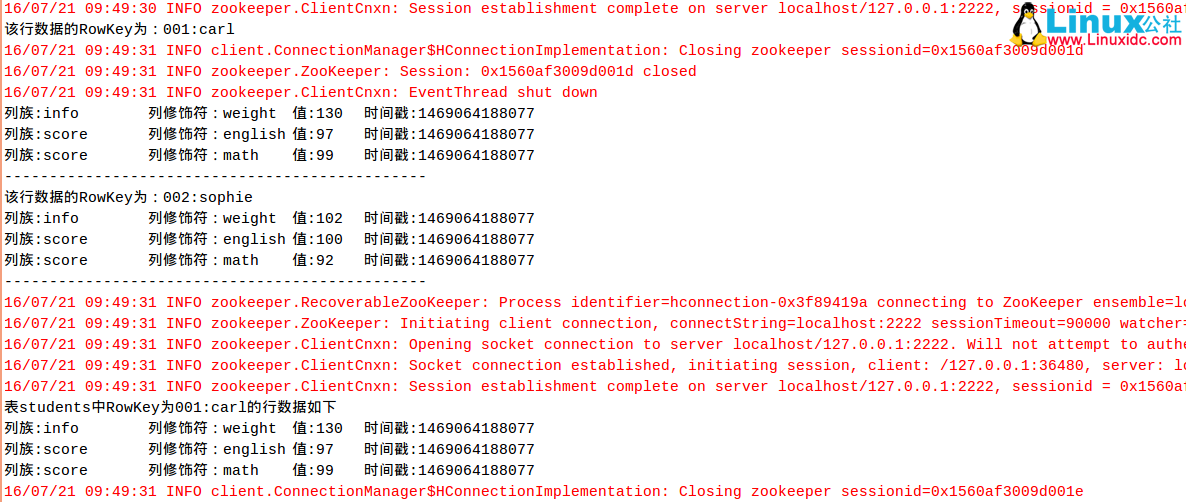

3. Results of running in Eclipse

4. Viewing the results in the HBase shell


Permanent link to this article: http://www.linuxidc.com/Linux/2016-08/134182.htm